Posts posted by LogMAN

-

Here is a KB article with information about the design decision of password protection: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z0000019LvFSAU

Quote:

In order for LabVIEW to be able to recompile a VI, it must be able to read the VI’s block diagram. Because LabVIEW must be able to execute this without prompting the user for a password, LabVIEW cannot use any strong encryption to protect the VI’s block diagram.

Not sure why strong encryption would be impossible. One way to do that is to store a private key on the developers' machines to encrypt and decrypt files. Of course, losing that key would be a disaster.

-

1 hour ago, X___ said:

The strange behavior I am observing is that:

(i) if the user accepts the proposed path, it is passed along right away (that is not the strange part), but

(ii) if she/he/they move up one folder and press OK, the dialog will pop-up again and essentially refuse the proposed path (this is the strange part). If the user presses OK, the path is of course accepted as we are back in case (i).

(iii) The only way to achieve what step (ii) is supposed to do is to move up TWO folders, then press OK once (which brings the dialog back up) and then once again.

Any idea why this would make any sense or whether this qualifies as a bug?

Perhaps I'm missing something, but the way this works is that when you move up one folder, that folder is "selected", so pressing OK will open the folder again. What makes it confusing is that the filename (not the folder) is highlighted as active (blue rather than gray), so a user might think that pressing OK will confirm the filename, which is not the case. To my knowledge there is no way to change this behavior in Windows (the dialog is not actually part of LV).

By the way, even if you deselect the folder, it will still enter the previous folder, which is certainly not intuitive to most users (including myself). The only way to make it work is to "change" the filename (even just replacing one character with the same letter will do the job).

Here are the different scenarios I tested:

-

The file still exists, only the link above is dead.

-


On 5/21/2021 at 7:24 PM, Neil Pate said:

Being able to drop pictures on a block diagram is so amazing.

Why stop there?

-

I'm no expert with CLFNs but I know this much: LabVIEW does not have a concept for unions but you can always pass an array of bytes or a numeric of appropriate size and convert later. Just make sure that the container is large enough to contain the largest member of the union (should be 64 bit from what I can tell).

Conversion should be simple enough for numeric types but the pointer requires additional work. Here is some information for dereferencing pointers in LabVIEW (there is a section about dereferencing strings). https://forums.ni.com/t5/Developer-Center-Resources/Dereferencing-Pointers-from-C-C-DLLs-in-LabVIEW/ta-p/3522795
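To illustrate the "pass a byte container and convert later" idea, here is a minimal sketch in Python rather than LabVIEW. The union layout in the comment is hypothetical, and the code assumes a little-endian, 64-bit process; neither detail comes from the original question.

```python
import struct

# Hypothetical C union, assumed for illustration only:
#   union value { int32_t i; double d; char *s; };
# Its largest member is 8 bytes, so an 8-byte container can hold any member.
UNION_SIZE = 8


def as_int32(raw: bytes) -> int:
    """Interpret the first 4 bytes as a little-endian signed 32-bit integer."""
    return struct.unpack_from("<i", raw, 0)[0]


def as_double(raw: bytes) -> float:
    """Interpret all 8 bytes as a little-endian IEEE 754 double."""
    return struct.unpack_from("<d", raw, 0)[0]


def as_pointer(raw: bytes) -> int:
    """Interpret all 8 bytes as a 64-bit address (dereferencing is a separate step)."""
    return struct.unpack_from("<Q", raw, 0)[0]


# Simulated buffers, as they might come back from the DLL call.
raw_int = struct.pack("<i", -42).ljust(UNION_SIZE, b"\x00")
raw_double = struct.pack("<d", 1.5)
raw_pointer = struct.pack("<Q", 0x1000)
```

The same 8 bytes are read three different ways; which reading is valid depends on information the caller has to track separately, just as with a C union.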

-

1 hour ago, ThomasGutzler said:

Looking at the options we have, is it fair to assume that I can ALSO set unattended mode or might that already be included?

It creates a headless instance, so no dialogs. I haven't checked the unattended mode flag, but I'd assume it is set by default 🤷♂️

-

4 hours ago, ThomasGutzler said:

So I need to save everything to avoid the "Don't you want to save" dialog blocking everything

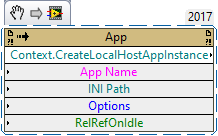

You could run the build in its own context, which (to my knowledge) does not save any changes unless option 0x2 is active (see Wiki for more details).

Application class/Context.Create Local Host App Instance method - LabVIEW Wiki

-

Thanks, this is very helpful. One more reason to replace the built-in functions with your library.

-

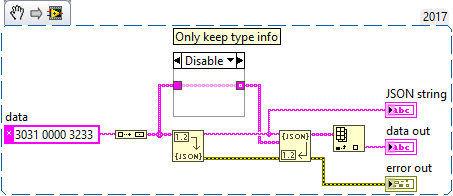

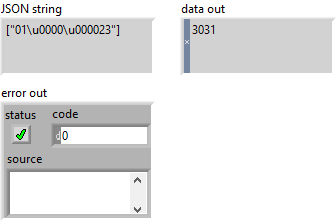

I recently stumbled upon this issue while debugging an application that didn't handle JSON payload as expected.

As it turns out, Unflatten From JSON breaks on NUL characters, even though Flatten To JSON properly encodes them as ("\u0000").

I have confirmed the behavior in LabVIEW 2017, 2019, and 2020 (all with latest service packs and patches), currently waiting for confirmation by NI.

Workaround: Use JSONtext
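For comparison, this is the round trip one would expect from a JSON implementation, sketched with Python's standard `json` module (not LabVIEW): the NUL character is escaped on the way out and restored on the way in.

```python
import json

original = "abc\x00def"         # string with an embedded NUL character
encoded = json.dumps(original)  # the NUL is escaped as \u0000
decoded = json.loads(encoded)   # the escape is turned back into a NUL

print(encoded)
print(decoded == original)
```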

-

1 hour ago, thols said:

Is it only me?

Got the same issue in LV2020 SP1

The page exists, it is just incorrectly linked

-

12 hours ago, bjustice said:

Does anyone know if it's possible to compose/decompose map/sets in LabVIEW?

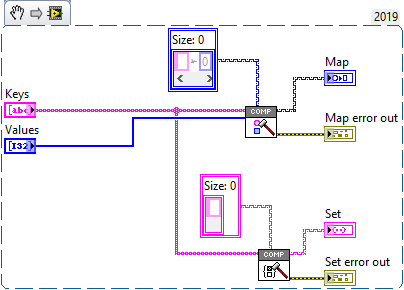

Yes it is, both ways.

12 hours ago, bjustice said:

@LogMAN wrote a fantastic "LabVIEW Composition" library which is able to:

- compose/decompose LabVIEW classes

- decompose maps

- decompose sets

However, there is no support for composition of maps/sets.

(Link: https://github.com/LogMANOriginal/LabVIEW-Composition)

I'm glad you like it.

There is a new release which adds support for composition of maps and sets. Here is an example of what you can do with it:

12 hours ago, bjustice said:

@jdpowell and @Antoine Chalons had an interesting discussion on a JSONtext issue ticket about this subject:

(link: https://bitbucket.org/drjdpowell/jsontext/issues/74/add-support-for-maps-set)

However, it doesn't look like there has been resolution yet?

Thanks for any input/insight

This is actually a very smart way of doing it. My library takes the keys and values apart into individual arrays. A key-value-pair is certainly easier to work with...
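The two ways of taking a map apart can be sketched with a Python dict standing in for a LabVIEW map. This is only an analogy and not the API of either library; the sample data is made up.

```python
# A dict standing in for a LabVIEW map (sample data is made up).
inventory = {"bolts": 40, "nuts": 25, "washers": 10}

# Style 1: separate key and value arrays. The caller has to keep
# both arrays aligned by index.
keys = list(inventory.keys())
values = list(inventory.values())
rebuilt_from_arrays = dict(zip(keys, values))

# Style 2: one array of key-value pairs. Each element is self-contained.
pairs = list(inventory.items())
rebuilt_from_pairs = dict(pairs)
```

With pairs, reordering or filtering elements cannot separate a key from its value, which is one reason that form tends to be easier to work with.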

-


It's probably not a good idea sharing DBC files publicly. That said, your database loads perfectly fine for me. Perhaps your XNET is outdated?

I have no problems with XNET 19.6

-

Welcome to LAVA 🎉

2 hours ago, maristu said:

I’ve read that the performance of labview is better with the compiled code in the VI.

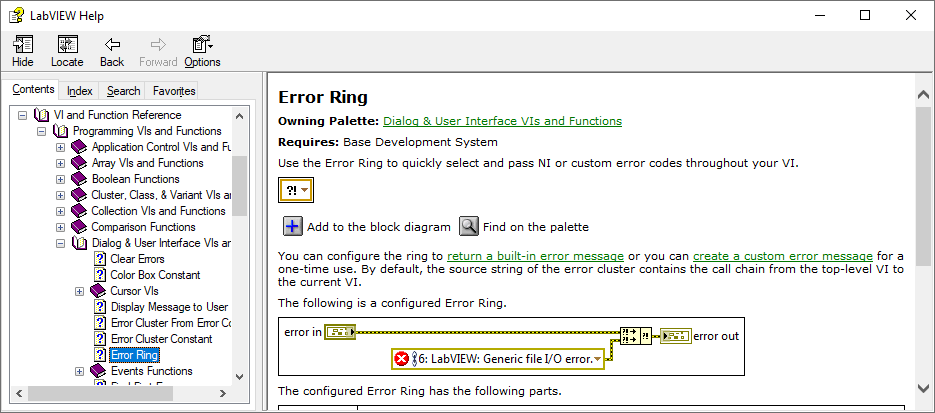

Not sure where you read that, here is what the LabVIEW help says:

Quote:

LabVIEW can load source-only VIs more quickly than regular VIs. To maximize this benefit, separate compiled code from all the files in a VI hierarchy or project.

2 hours ago, maristu said:

Could it be a good idea to unmark the separate compiled code programmatically on each installed file (vi, ctl, class, lvlib… )?

I don't see the benefit. Your projects will take longer to load and if the compiled code breaks you can't even delete the cache, which means you have to forcibly recompile your VIs, which is the same as what you have right now.

-

2 hours ago, rharmon@sandia.gov said:

I was thinking I would just branch off the second project and make the necessary changes to make the second project work. It would not be my intension to ever re-unite the branches.

What you describe is called a fork.

Forks are created by copying the main branch of development ("trunk") and all its history to a new repository. That way forks don't interfere with each other and your repositories don't get messy.

-

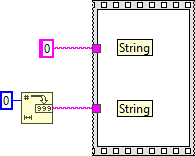

In my opinion NI should finally make up their mind as to whether objects are inherently serializable or not. The current situation is unsatisfying.

There are native functions that clearly break encapsulation:

Then there is one function that doesn't, although many users expect it to (not to mention all the other types it doesn't support):

Of course users will eventually utilize one to "fix" the other. Whether or not it is a good design choice is a different question.

-

11 minutes ago, rharmon@sandia.gov said:

I think reading through these posts I'm leaning toward Subversion or Mercurial... Probably Mercurial because from my conversation leading me toward source control touched on the need to branch my software to another project.

If you are leaning towards Mercurial you should visit the Mercurial User Group: https://forums.ni.com/t5/Mercurial-User-Group/gh-p/5107

-

- Git

- I don't hate it - the centralized nature of SVN simply didn't cut it for us.

- No. It was my decision.

- Pro - everything is available at all times, even without access to a central server. Con - It has too many features, which makes it hard to learn for novice users.

- Only when I merge the unmergeable, so anything related to LabVIEW 😞

-

9 hours ago, LogMAN said:

The user interface does not follow the dataflow model.

9 hours ago, G-CODE said:

I think it's really helpful to point that out.

7 hours ago, Mads said:

I think this is about as wrong as it can get.

Please keep in mind that it is only my mental image and not based on any facts from NI.

9 hours ago, G-CODE said:

Thinking about this.... I can't figure out if now we are trying to explain why it's expected behavior or if we are trying to justify unexpected behavior (or something in between). 🙂

Perhaps both. If we can understand the current behavior it is easier to explain to NI how to change it in a way that works better for us.

7 hours ago, Mads said:

If an indicator is wired only (no local variables or property nodes breaking the data flow) it shall abide the rules of data flow. The fact that the UI is not synchronously updated (it can be set to be, but it is not here) can explain that what you see in an indicator is not necessarily its true value (the execution, if running fast, will be ahead of the UI update)- but it will never be a *future* value(!).

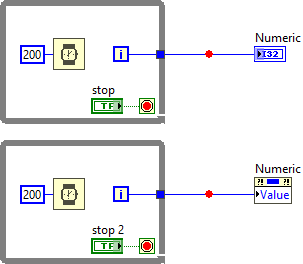

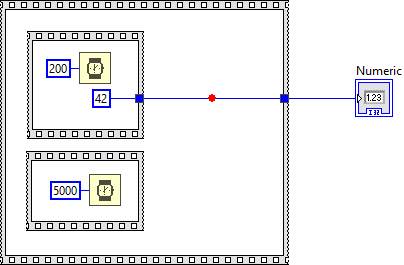

Here is an example that illustrates the different behavior when using indicators vs. property nodes. The lower breakpoint gets triggered as soon as the loop exits, as one would expect. I have tried synchronous display for the indicator and it doesn't affect the outcome. Not sure what to make of it, other than what I have explained above 🤷♂️

7 hours ago, Mads said:

A breakpoint should really (we expect it to) as soon as it has its input value cause a diagram-wide break, but it does not. Instead it waits for the parallell code to finish, then breaks.

I agree, this is what most users expect from it anyway. It would be interesting to hear the reasoning from NI, maybe there is a technical reason it was done this way.

-

Disclaimer: The following is based on my own observations and experience, so take it with a grain of salt!

15 hours ago, G-CODE said:

How is it possible to update an indicator if the upstream wire has a breakpoint that hasn't paused execution?

The user interface does not follow the dataflow model. It runs in its own thread and grabs new data as it becomes available. In fact, the UI update rate is much slower than the actual execution speed of the VI (see VI Execution Speed - LabVIEW 2018 Help - National Instruments (ni.com)). The location of the indicator on the block diagram simply defines which data is used, not necessarily when the data is being displayed. In your example, the numeric indicator uses the data from the output terminal of the upper while loop, but it does not have to wait for the wire to pass the data. Instead it grabs the data when it is available. Because of that, you can't rely on the front panel to infer dataflow.
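As a rough analogy for this mental model (not a description of how LabVIEW is actually implemented), the following Python sketch separates "writing a value" from "displaying a value". The class, its methods, and the refresh ratio are all hypothetical.

```python
class Indicator:
    """Toy model: the 'diagram' writes values, the 'UI' samples them later."""

    def __init__(self):
        self.latest = None   # most recent value written by the running code
        self.displayed = []  # values the UI actually got around to showing

    def write(self, value):
        # Called at execution speed; does not wait for the UI.
        self.latest = value

    def refresh(self):
        # Called at the (slower) UI rate; shows whatever is current.
        self.displayed.append(self.latest)


indicator = Indicator()
REFRESH_EVERY = 100  # hypothetical ratio of execution speed to UI rate

for i in range(1, 1001):
    indicator.write(i)
    if i % REFRESH_EVERY == 0:
        indicator.refresh()

print(len(indicator.displayed), indicator.displayed[:3])
```

Only a small subset of the written values ever reaches the display, so what appears on the "front panel" says little about when each value was produced.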

Execution Highlighting is also misleading because it isn't based on VI execution, but rather on a simulation of the VI executing (it's an approximation at best). LabVIEW simply displays the dot and postpones UI updates until the dot reaches the next node. Not to forget that it also forces sequential execution. It probably isn't even aware of the execution system, which is why it will display the dot on wires that (during normal execution) wouldn't have passed any data yet.

Breakpoints, however, are connected to the execution system, which is why they behave "strangely". In dataflow, data only gets passed to the next node when the current node has finished. The same is true for diagrams! The other thing to keep in mind about breakpoints is that "execution pauses after data passes through the wire" (see Managing Breakpoints - LabVIEW 2018 Help - National Instruments (ni.com)).

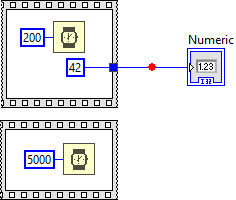

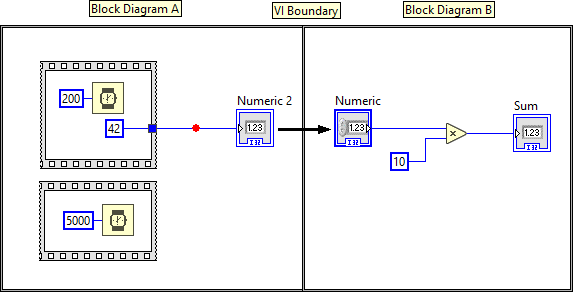

In your example, data passes on the wire after the block diagram is finished. Here is another example that illustrates the behavior (breakpoint is hit when the block diagram and all its subdiagrams are finished):

Now think about indicators and controls as terminals from one block diagram (node) to another.

According to the dataflow model, the left diagram (Block Diagram A) only passes data to the right diagram (Block Diagram B) after it is complete. And since the breakpoint only triggers after data has passed, it needs to wait for the entire block diagram to finish. Whether or not the indicator is connected to any terminal makes no difference.

This is also not limited to indicators, but any data that is passed from one diagram to another:

Hope that makes sense 😅

-

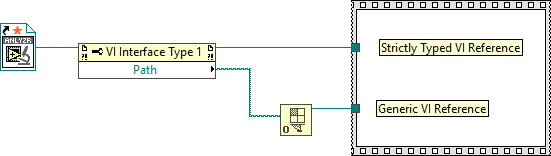

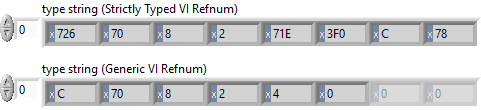

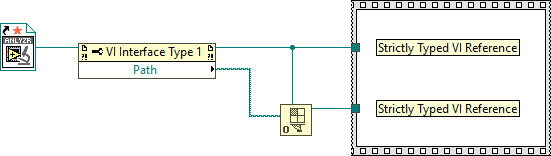

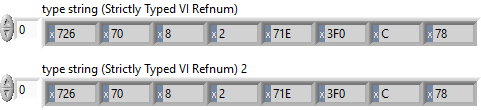

A Static VI Reference is simply a constant Generic VI Reference. There is no way to distinguish one from another.

It's like asking for the difference between a string constant and a string returned by a function.

The Strictly Typed VI Reference @Darren mentioned is easily distinguishable from a Generic VI Reference (notice the orange ⭐ on the Static VI Reference).

However, if you wire the type specifier to the Open VI Refnum function, the types are - again - indistinguishable.

Perhaps you can use VI Scripting to locate Static VI References on the block diagram?

-

Sure thing, it's also good to know there is a thread like that - first time I've heard of it 😮

-

6 hours ago, infinitenothing said:

It's more the output of number to string conversion that's not fixed that's my issue at the moment.

The number to string functions all have a width parameter: Number To Decimal String Function - LabVIEW 2018 Help - National Instruments (ni.com)

As long as you can guarantee that the number of digits does not exceed the specified width, it will always produce a string with fixed length (padded with spaces).
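The same idea, sketched in Python as an analogy for the width parameter (the helper name is made up): a minimum field width pads short numbers with spaces, but it does not truncate numbers that need more digits.

```python
def to_fixed_width(number: int, width: int) -> str:
    """Format an integer right-aligned in a field of at least `width` characters."""
    return f"{number:{width}d}"


short = to_fixed_width(42, 6)          # fits: padded with leading spaces
negative = to_fixed_width(-42, 6)      # the sign counts towards the width
too_long = to_fixed_width(1234567, 6)  # does not fit: the string grows

print(repr(short), repr(negative), repr(too_long))
```

The last case shows why the guarantee only holds while the number of digits stays within the specified width.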

-

Thanks, I'll report this to NI

-

I discovered a potential memory corruption when using Variant To Flattened String and Flattened String To Variant functions on Sets. Here is the test code:

[Image: Potential Memory Corruption when (de-)serializing Sets.png]

In this example, the set is serialized and de-serialized without changing any data. The code runs in a loop to increase the chance of crashing LabVIEW.

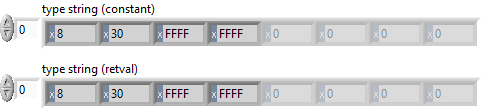

Here is the type descriptor. If you are familiar with type descriptors, you'll notice that something is off:

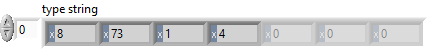

Here is the translation:

- 0x0008 - Length of the type descriptor in bytes, including the length word (8 bytes) => OK

- 0x0073 - Data type (Set) => OK

- 0x0001 - Number of dimensions (a set is essentially an array with dimension size 1) => OK

- 0x0004 - Length of the type descriptor for the internal type in bytes, including the length word (4 bytes) => OK

- ???? - Type descriptor for the internal data type (should be 0x0008 for U64) => What is going on?
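The layout listed above can be checked mechanically. Here is a minimal Python sketch that reads the four 16-bit words and tests whether the inner descriptor fits into the available bytes. It assumes big-endian words and the field order given in the list; the function name and the returned keys are made up for illustration.

```python
import struct


def parse_set_descriptor(data: bytes) -> dict:
    """Read the four leading 16-bit words and check the inner descriptor's size."""
    # Assumed layout (from the list above): total length, type code,
    # number of dimensions, length of the inner type descriptor.
    total_len, type_code, dims, inner_len = struct.unpack_from(">4H", data, 0)

    inner_start = 6  # byte offset of the inner descriptor's length word
    inner_end = inner_start + inner_len

    return {
        "total_len": total_len,
        "type_code": type_code,
        "dims": dims,
        "inner_len": inner_len,
        "inner_bytes_present": max(0, len(data) - inner_start),
        "truncated": inner_end > len(data),
    }


# The eight bytes from the translation above.
observed = bytes.fromhex("0008007300010004")
result = parse_set_descriptor(observed)
print(result)
```

For the observed bytes, the inner descriptor claims 4 bytes but only 2 are present, so the check flags the descriptor as truncated.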

It turns out that the last two bytes are truncated. The Flattened String To Variant function actually reports error 116, which makes sense because the type descriptor is incomplete, BUT it does not always return an error! In fact, half of the time, no error is reported and LabVIEW eventually crashes (most often after adding a label to the numeric type in the set constant). I believe that this corrupts memory, which eventually crashes LabVIEW. Here is a video that illustrates the behavior:

Can somebody please confirm this issue?

LV2019SP1f3 (32-bit) Potential Memory Corruption when (de-)serializing Sets.vi

does labview have a future?

in LabVIEW General

Posted

You are right, I haven't thought it through. Source access is required to recompile, which makes any encryption/obfuscation/protection annoying to reverse engineer at best.