Posts posted by drjdpowell

-

And I don't do OOP.

Are you sure? Have you ever used the "design pattern" of having a type-def cluster and a set of subVIs that act on that cluster, with the cluster going in the top-left terminal and out the top-right? Because that is the basic design pattern that is LVOOP. Looking at a long list of impenetrable "design patterns" makes OOP look impossibly hard to start with, but it's actually very easy to start using it as a cleaner, better way to do this important pattern you already use in LabVIEW. I recall someone saying, in a conversation somewhere, that a big advantage of LVOOP is the ability to customize the appearance of the wires. Some people thought they must be joking, but I understood and agreed completely. LVOOP formalizes and supports the "type-def cluster" design pattern.
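LabVIEW diagrams don't paste as text, so here is a rough Python analogy of that point (all names, such as `CounterCluster` and `Counter`, are hypothetical). The first half is the "type-def cluster plus subVIs" pattern; the second half is the same thing as a class, which is roughly what LVOOP formalizes.

```python
from dataclasses import dataclass, replace


# "Type-def cluster" style: a plain data bundle plus free functions that act on it.
@dataclass(frozen=True)
class CounterCluster:
    count: int = 0
    step: int = 1


def cluster_increment(c: CounterCluster) -> CounterCluster:
    # Like a subVI: cluster in at the top left, updated cluster out at the top right.
    return replace(c, count=c.count + c.step)


# Class style: the same data, but only the class's own methods touch it.
class Counter:
    __slots__ = ("_count", "_step")

    def __init__(self, count: int = 0, step: int = 1) -> None:
        self._count = count  # "private data" (private only by convention in Python)
        self._step = step

    def increment(self) -> "Counter":
        # Return a new object, mirroring LabVIEW's by-value wires.
        return Counter(self._count + self._step, self._step)

    @property
    def count(self) -> int:
        return self._count
```

Both `cluster_increment(cluster_increment(CounterCluster(step=2))).count` and `Counter(step=2).increment().increment().count` give 4; the difference is that nothing outside `Counter` can reach into its data.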

A less common LabVIEW pattern is to allow the same set of subVIs to act on related, but different, "things". If I recall correctly, ShaunR uses this pattern in his Dispatcher/Transport code to allow the use of TCP, UDP or Bluetooth as the communication method in a common set of VIs. I don't remember the method he uses (there is more than one way), but this is another design pattern that LVOOP makes cleaner and also more powerful (through "inheritance"). Inheritance is admittedly harder to get comfortable with, but that's because this design pattern is harder to grasp regardless of how it's done. LVOOP makes it easier to do and more widely applicable (and thus you can make use of it more often).
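A minimal Python sketch of the "same subVIs, different things" idea via inheritance. This is not ShaunR's actual design; the `Transport` classes are hypothetical, and an in-memory loopback stands in for a real TCP/UDP child so the sketch runs anywhere.

```python
from abc import ABC, abstractmethod


class Transport(ABC):
    """Parent class: the common code only ever sees this interface."""

    @abstractmethod
    def write(self, payload: bytes) -> None: ...

    @abstractmethod
    def read(self) -> bytes: ...


class LoopbackTransport(Transport):
    """Stand-in for a TCP/UDP child class; keeps bytes in memory."""

    def __init__(self) -> None:
        self._buf: list[bytes] = []

    def write(self, payload: bytes) -> None:
        self._buf.append(payload)

    def read(self) -> bytes:
        return self._buf.pop(0)


class CountingTransport(LoopbackTransport):
    """Child of a child: inherits read/write and adds a byte counter."""

    def __init__(self) -> None:
        super().__init__()
        self.bytes_sent = 0

    def write(self, payload: bytes) -> None:
        self.bytes_sent += len(payload)
        super().write(payload)


def echo(t: Transport, text: str) -> str:
    # Written once against the parent; works with any child transport.
    t.write(text.encode())
    return t.read().decode()
```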

Starting with those two patterns (or even just the top one) which you are likely already using is an easy way to get started on LVOOP.

-- James

-

Functional globals, global variables, local variables, DVRs, etc. are all (imo) different ways to pass messages to a running loop. Is "no shared memory" a requirement for something to be a "message?"

I believe an "Actor" can't have any direct sharing of its internal state information. Using a global to pass a message for the Actor to act on (in a well-defined, controlled, atomic way) is different from being able to directly fiddle with state parameters (as I did with my PID controller). It's a kind of encapsulation, in the same way that an OOP object controls access to its private data.

-

It's not at all clear to me how the implementations for an Actor, Active Object, and Agent (to throw another construct into the mix) differ.

I think an "Active Object" is anything that is an "object" and has an active internal process. The first active object I ever created was a PID temperature controller. Methods such as "Change Setpoint" or "Set PID parameters" could be called on the PID controller "object" (this was pre-LVOOP), while the PID control cycle continued automatically in the background.
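A rough Python sketch of such an active object (class and method names are hypothetical, and a proportional-only update stands in for the real PID math). Callers invoke `set_setpoint()` and `set_gain()`, which only post requests; the background thread applies them between control cycles. Note that `value` is read directly from outside, so, like my old PID controller, this is an active object but not strictly an Actor.

```python
import queue
import threading
import time


class PIDController:
    """Active object: a background thread runs the control cycle while
    other threads call methods on the object."""

    def __init__(self, kp: float = 0.5, period: float = 0.01) -> None:
        self._commands: queue.Queue = queue.Queue()
        self._setpoint = 0.0
        self._kp = kp
        self._period = period
        self.value = 0.0  # simulated process variable (hypothetical)
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    # Public methods: post a request; the internal loop applies it.
    def set_setpoint(self, sp: float) -> None:
        self._commands.put(("setpoint", sp))

    def set_gain(self, kp: float) -> None:
        self._commands.put(("kp", kp))

    def stop(self) -> None:
        self._stop.set()
        self._thread.join()

    # Internal process: apply pending commands, then run one control step.
    def _run(self) -> None:
        while not self._stop.is_set():
            while True:
                try:
                    name, arg = self._commands.get_nowait()
                except queue.Empty:
                    break
                if name == "setpoint":
                    self._setpoint = arg
                elif name == "kp":
                    self._kp = arg
            # Proportional-only step standing in for the full PID calculation.
            self.value += self._kp * (self._setpoint - self.value)
            time.sleep(self._period)
```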

An "Actor" is a process that interacts with other processes solely by passing messages (it has no shared memory with any other process). My PID controller was not really an Actor.

-

Changing from a parallel loop to an Actor object feels like a big jump to me. There are probably logical intermediate steps between them, but without any experience using it I don't know what they are. Maybe Stephen will weigh in.

I would imagine that, if one were strictly using command-pattern messages with dynamic dispatch (instead of case structures), it would be relatively easy to convert from an on-diagram loop to an Actor (since the messages are decoupled from the structure handling them). Of course, if one doesn't strictly use the command pattern from the start, converting to an Actor would be much harder.
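A small Python sketch of what "command-pattern messages with dynamic dispatch" could look like (all names hypothetical). Each message class carries its own `execute()`, so the loop that receives messages has no case structure and could be moved into an Actor without touching the messages.

```python
import queue
from abc import ABC, abstractmethod


class Processor:
    """The state the loop operates on (hypothetical)."""

    def __init__(self) -> None:
        self.gain = 1.0
        self.running = True


class Message(ABC):
    """Command-pattern message: the message itself knows what to do."""

    @abstractmethod
    def execute(self, target: Processor) -> None: ...


class SetGain(Message):
    def __init__(self, gain: float) -> None:
        self.gain = gain

    def execute(self, target: Processor) -> None:
        target.gain = self.gain


class Stop(Message):
    def execute(self, target: Processor) -> None:
        target.running = False


def message_loop(inbox: queue.Queue, target: Processor) -> None:
    # Dynamic dispatch on execute() replaces the case structure, so this
    # loop body is the same whether it lives on a diagram or in an actor.
    while target.running:
        inbox.get().execute(target)
```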

(After I release Collections, which is only... oh... 13 months late.)

I could really use such a thing and am looking forward to it.

I don't think so. A Slave object is an intermediate step between a parallel loop on a block diagram and an Active Object, Observable, or other higher-level functionality. I'm not sure what Stephen's intent is, but the literature I've been able to find on Actors seems to indicate different architectural considerations. That said, I haven't heard what *he* thinks the differences between an Actor/ActiveObject/Slave are. I could be completely off base.

I think it's all a loosely defined continuum. Your Slaves certainly satisfy key aspects, such as only being coupled by message passing.

Wikipedia says: "In computer science, the Actor model is a mathematical model of concurrent computation that treats "actors" as the universal primitives of concurrent digital computation: in response to a message that it receives, an actor can make local decisions, create more actors, send more messages, and determine how to respond to the next message received."

Your Slave loop can do all that except create more actors in response to a message (but adding dynamic launching would allow this too).
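For comparison, a minimal Python actor that does all four things in that definition, including creating more actors in response to a message (the "dynamic launching" part). The string message format (`"spawn:..."`, `"ask:..."`) is a hypothetical choice for brevity; each message is a `(text, reply_queue)` pair and nothing is shared except the inboxes.

```python
import queue
import threading


class Actor:
    """Minimal actor: private state, one inbox, one thread handling
    messages one at a time."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.inbox: queue.Queue = queue.Queue()
        self._children: dict = {}  # private state, never shared
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self) -> None:
        while True:
            msg, reply_to = self.inbox.get()
            if msg == "stop":
                for child in self._children.values():
                    child.inbox.put(("stop", None))
                return
            if msg.startswith("spawn:"):
                # Create more actors in response to a message.
                child_name = msg.split(":", 1)[1]
                self._children[child_name] = Actor(child_name)
                reply_to.put(f"{self.name} spawned {child_name}")
            elif msg.startswith("ask:"):
                # Send more messages: forward the text to a child.
                _, child_name, text = msg.split(":", 2)
                self._children[child_name].inbox.put((text, reply_to))
            else:
                reply_to.put(f"{self.name} got {msg}")
```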

-- James

-

I haven't actually used either and don't even have a working computer with LabVIEW at the moment, but from memory of looking at them:

I would say the "Slave Loop" design is a type of actor framework.

The biggest thing about the "Actor Framework" is that one can create child actors that inherit from parent actors and add functionality. This is because it uses an "Actor" object to contain the internal state data and dynamically dispatches important methods like "Actor Core" off of it.

-- James

PS> you could also have a look at mje's "Message Pump" framework for a different design that does similar things.

-

Some of the details still escape me though... For example, a trait provides a set of methods that implement a behavior and requires a set of methods the class (or another trait, or perhaps a child class?) needs to implement. (page 7) Can these methods be overridden in child classes? My guess is provided methods cannot be overridden but required methods can.

From reading the paper, I believe provided methods can be overridden; in fact, they sometimes have to be, when there is a "conflict" between identically named methods provided by multiple traits (section 3.5, page 11).
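Python has no traits, but mixins give a rough approximation (class names here are hypothetical). One real difference: Python silently resolves a name clash by method resolution order, whereas the paper's traits report a conflict. The sketch resolves it the trait way, by overriding the provided method in the class and combining both explicitly.

```python
class TDrawable:
    """'Trait' approximated as a mixin: provides describe(), requires name()."""

    def describe(self) -> str:
        return f"drawable {self.name()}"


class TSerializable:
    """A second 'trait' that provides a method with the same name."""

    def describe(self) -> str:
        return f"serializable {self.name()}"


class Shape(TDrawable, TSerializable):
    def name(self) -> str:
        # The required method, supplied by the class.
        return "circle"

    def describe(self) -> str:
        # Without this override, the MRO would quietly pick TDrawable.describe.
        # Overriding the provided method resolves the conflict explicitly.
        return TDrawable.describe(self) + " / " + TSerializable.describe(self)
```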

-- James

-

Just skimmed the paper, but it looks quite promising. The .lvtrait library would presumably be able to be owned simultaneously by multiple .lvclass libraries; I wonder if NI might have some difficulties with that.

-- James

-

If you find yourself getting tired of writing proxies, perhaps a better solution would be to create a reusable Proxy class encapsulating the common requirements. Then you can create a ComponentProxy class (possibly with Component or Proxy as the parent, depending on the situation) and extend/customize it for the specific need.

Can I encapsulate the local queues as well and call my Proxy class "Postman"?

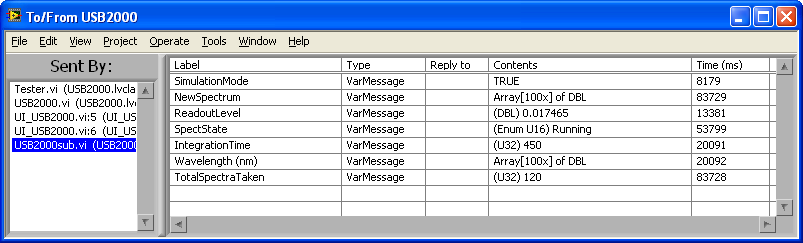

I actually use my version of Postman ("Send") as the parent of classes that serve as Proxies for my Active Objects ("Parallel Process"). So I can extend/customize these proxies if needed. For example, my "MessageLogger" class (really a proxy of communication with a parallel-running VI that records messages) overrides "SendMessage" to collect additional information from the sending process:

The sending VI name and the message time are extra functionality added by the override.
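A Python sketch of the same idea: a generic `Send` parent, a queue-like child, and a `MessageLogger` proxy that overrides `send_message` to stamp extra information before calling the parent. These names and the dict payload are hypothetical; also, in LabVIEW the override can look up the calling VI's name itself, while here the sender is passed in explicitly.

```python
import time


class Send:
    """Parent 'Postman': anything that can accept a message."""

    def send_message(self, label, data=None, sender=None):
        raise NotImplementedError


class QueueSend(Send):
    def __init__(self):
        self.items = []  # stands in for the queue feeding the parallel process

    def send_message(self, label, data=None, sender=None):
        self.items.append((label, data))


class MessageLogger(QueueSend):
    """Proxy for a logging process; the override adds sender and timestamp."""

    def send_message(self, label, data=None, sender=None):
        stamped = {"data": data, "sender": sender or "unknown", "time": time.time()}
        super().send_message(label, stamped, sender)
```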

So, I think I can add desired custom functionality in a non-clumsy way for that "other 10%" through inheriting off of Postman. And I like the Proxies being "up front"; my preferred abstraction is:

CompA --> CompB(proxy)

Which may explain why I'm uninterested in queue operations like "Flush" or "EnqueueInFront"; they don't mean anything in this abstraction.

-- James

-

It appears this is one of our core differences. I'm not looking for plug-and-play interchangeability between message transport mechanisms. It's an additional abstraction layer I haven't needed. I decide on the best transport mechanism at edit time based on the design and purpose of the receiving loop. Most of the time a queue is the best/most flexible solution. Occasionally a notifier or user event is better for a specific loop.

Well, it just seems to me that this route leads to less reusability/flexibility, or alternatively, a lot more work. A component written to communicate in one way can't be reused in an application where it must communicate another way without either modifying it or writing an entire Proxy for it. A custom Proxy may be more flexible than a generic solution, but 90% of the time the generic solution is fine.

For example, suppose you're sending sensor data from one loop to another loop so it can be stored to disk. What happens when the link between the two loops breaks? Generally this isn't a concern using queues (you can verify it via inspection), so there's no point in writing a bunch of code to protect against it. But it is a big concern with network communication. How should the sender respond in that situation? In the sending loop, SendMsg is inline with the rest of the processing code, so it will (I think) block until the connection is reestablished or times out. Do you really want your data acquisition loop blocked because the network failed?

No. But not blocking and throwing an error is fine. I must admit that my network experience is only one project using Shared Variables, but the likely use I would have for networking is between Real-Time controllers and one or more PCs in the same room on a dedicated subnet. Network failure is rare, and in any case, there is little value in half my distributed application running if the network connection has failed.

Using the network example from above, *something* on ActorB/CompA's side of the TCP connection needs to store the data locally if the network fails. What's going to do that? TcpPostman?

They call that "message persistence" in the material on message queues like RabbitMQ. The reason I would like to wrap one of the open-source messaging designs is to get advanced functionality like this for free. I'm not sure I would have much use for it in my typical applications, though, so I would be happy with a TcpPostman with buffers at each end that errors out in the unlikely event that the buffers fill up.
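A minimal Python sketch of the sending side of such a TcpPostman, assuming a bounded local buffer that a separate network task (not shown, hypothetical) drains to the socket. The point is the non-blocking send: when the buffer is full, the caller gets an error instead of a stalled acquisition loop.

```python
import queue


class BufferFullError(RuntimeError):
    """Raised instead of blocking when the outgoing buffer is full."""


class BufferedSender:
    """Hypothetical sending side of a 'TcpPostman'."""

    def __init__(self, capacity: int) -> None:
        self._buffer: queue.Queue = queue.Queue(maxsize=capacity)

    def send(self, msg) -> None:
        try:
            self._buffer.put_nowait(msg)  # never blocks the caller's loop
        except queue.Full:
            raise BufferFullError(
                f"outgoing buffer full ({self._buffer.maxsize} messages)"
            ) from None

    def drain(self) -> list:
        """What the network task would call once the connection is up."""
        out = []
        while True:
            try:
                out.append(self._buffer.get_nowait())
            except queue.Empty:
                return out
```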

Absolutely you can, and I encourage you to do so... but it doesn't mean *I* should.

If only my computer hadn't up and died on me last week!

Gotta wait a couple of weeks for a new one. In the meantime I can keep bugging you.

-- James

-

I don't see much point in creating a common parent unless I'm going to be using that parent in the code somewhere. A common parent (I'll call it "Postman" for lack of a better term) that abstracts the underlying message passing technology (queue, notifier, user event, etc.) would be very small. The Postman class can only expose those methods that are common to all child classes--essentially, "SendMsg" and "Destroy." But, if we look a little closer at SendMsg we see that the queue's SendMsg implementation has multiple optional arguments whereas the others have none. That means Postman.SendMsg won't have any optional arguments either and I've removed useful functionality from the queue class.

Two classes inheriting from a common parent should share a lot of common behavior. Events and queues share very little common behavior. True, they both can be used to send messages in an abstract sense, but that's about it. They offer very different levels of control (i.e. have different behavior.)

Ah, but isn't "Send Message" the central functionality of a message sending system? Aren't these "extra levels of control", just like the "priority queue", not central to the business of messaging? "The ideal queueing system... spits things out in the order it received them..."; User Events do that.

Having a common parent class means one can write code with "plugin" communication methods. "Actor A" can register with "Actor B" for message updates without Actor B needing to depend on the exact communication method that A provided. Why does Actor B need to do anything other than "Send Message"?

Note that this does not mean throwing out the option to use the Queue advanced features; you can write Actor A to deal with a Queue explicitly (and use Queue methods like Queue.Preview or Queue.Flush) while Actor B just uses Postman.SendMsg. Or you could write both Actors to use Queues specifically. Having a parent class doesn't eliminate the ability to use the child classes fully, it just adds the ability to use them generically, using the common subset of functionality.
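A Python sketch of that arrangement (class names hypothetical, not LapDog's API). `Postman` exposes only `send_msg`; the queue child keeps its extras such as `preview` and `flush` for code that knows it holds a queue, and a callback child stands in for a User Event. Actor B's publishing code depends only on the parent.

```python
import collections
import threading
from abc import ABC, abstractmethod


class Postman(ABC):
    """Common parent: exposes only what every transport can do."""

    @abstractmethod
    def send_msg(self, msg) -> None: ...


class QueuePostman(Postman):
    def __init__(self) -> None:
        self._items: collections.deque = collections.deque()
        self._lock = threading.Lock()

    def send_msg(self, msg) -> None:
        with self._lock:
            self._items.append(msg)

    # Queue-only extras: available to code that knows it has a QueuePostman.
    def preview(self) -> list:
        with self._lock:
            return list(self._items)

    def flush(self) -> list:
        with self._lock:
            items = list(self._items)
            self._items.clear()
            return items


class CallbackPostman(Postman):
    """Stand-in for a User Event: hands each message to a registered handler."""

    def __init__(self, handler) -> None:
        self._handler = handler

    def send_msg(self, msg) -> None:
        self._handler(msg)


def actor_b_publish(subscribers, reading) -> None:
    # Actor B only needs Postman.send_msg, whatever each subscriber uses.
    for p in subscribers:
        p.send_msg(("Reading", reading))
```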

And to repeat the point, the one common function, "Send Message", is the only crucial one for a messaging system. In my own design of a messaging system, my parent class is actually called "Send", and it is used all over the place, particularly in implementing "Observer Pattern" designs.

All in all, the "convenience" of having a single SendMsg method that works for both user events and queues simply isn't worth it.

If not user events, what about TCP? How would you extend LapDog to a network communication method, and wouldn't it be good to be able to take a previously written design and just substitute a TCP_Postman for the Queue? Do you really need methods like TCP_Postman.Flush or TCP_Postman.Preview?

I view notifiers and user events sort of like specialized queues to be used in specific situations. I find notifiers most useful when a producer generates data faster than a consumer can consume it and you don't want to throttle the producer. (i.e. You want the consumer to run as fast as possible or you have multiple consumers that consume data at different rates.) Unless those situations are present, queues are more flexible, more robust, and imo (though many disagree with me) preferable to either of those for general-purpose messaging.

Well, I'm interested not in queues but in message-passing methods, which is not exactly the same thing. For example, queues can have a blocking enqueue (if a limited-size queue is full), but a blocking "SendMsg" is NOT acceptable in asynchronous messaging (and you can't have an effective "Observer Pattern" with a potentially blocking Observer.Notify). So I don't want full Queue flexibility. I want a selection of message-passing methods with some degree of plug-and-play interchangeability (on the sending side, at least).

One thing that is important (imo) for robustness is that each loop in your application has only a single mechanism for receiving messages. ... If you have a loop that sometimes needs queue-style messages and sometimes needs notifier-style messages, that's a good indication your loop is trying to do too much. Break it out into one loop with a queue and another loop with a notifier.

I'm not sure this is true for a notifier, which is less a method of receiving messages that must be acted on than a bulletin board of posted information that I can choose to look at if I need to (or ignore if I don't). A queue-based message handler could consult a notifier to get information needed to handle a message arriving on the queue. I envision querying my MessageNotifier for the last message of a specific name/label/type, but only when I need the information.

Regardless of the implementation, there are design decisions I'd have to make that end up reducing the overall usefulness for a subset of the potential users....

There are no absolute answers to these questions. It's up to the developer to weigh the tradeoffs and make the correct decision based on the specific project requirements, not me.

Can I not provide another tool in the toolkit because it might not be perfect for every job? Can't provide a hammer 'cause someone might prefer a slightly larger hammer? I certainly think I can come up with a reasonably good set of design choices in making a MessageNotifier that would be reasonably useful (assuming one really wants a notifier).

[BTW, I'm thinking "sorted array", "binary search", "in the enqueue method", and "exact order not important" (a notifier is a bulletin board, not an inbox).]
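Those four phrases translate fairly directly into a Python sketch (the `MessageNotifier` name and methods are hypothetical). The send ("enqueue") method uses a binary search over a sorted list of labels to replace the stale message for that label or insert a new one; readers fetch the latest message for a label only when they need it. Arrival order is deliberately not kept.

```python
import bisect
import threading


class MessageNotifier:
    """Bulletin board: keeps only the latest message per label."""

    def __init__(self) -> None:
        self._labels: list = []    # kept sorted for binary search
        self._messages: list = []  # parallel to _labels
        self._lock = threading.Lock()

    def _find(self, label):
        # Binary search; returns (index, found).
        i = bisect.bisect_left(self._labels, label)
        return i, i < len(self._labels) and self._labels[i] == label

    def send(self, label, data) -> None:
        with self._lock:
            i, found = self._find(label)
            if found:
                self._messages[i] = data  # replace the stale message
            else:
                self._labels.insert(i, label)
                self._messages.insert(i, data)

    def latest(self, label, default=None):
        with self._lock:
            i, found = self._find(label)
            return self._messages[i] if found else default
```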

BTW, even though I've rejected nearly all your suggestions, I do appreciate them. It challenges my thinking and forces me to justify the decisions I've made. I hope my responses are useful to others, but the act of composing responses has led to several "a ha" moments for me.

And I've gone back to using QSMs, but with a clearer view of their pitfalls thanks to your reasoned arguments against them.

-- James

-

You mentioned a state message is rendered obsolete in a few seconds and you would like the old message replaced in the queue by the new message. If I may ask a pointed question, why do you allow messages, which should be propagated immediately through the application, to sit around in the queue for several seconds unprocessed? What can you do to improve your application's response time to messages? When I asked myself those (and other) questions, I ended up with the hierarchical messaging architecture.

I've been thinking about your pointed question, and, though I agree with you about the importance of servicing a message in a queue promptly, I wonder if an alternate valid answer is "sometimes one doesn't need a queue, one needs a notifier." A notifier is like a queue where only the last element matters; perhaps the message-object extension of a notifier is something that returns the last message of each type/label/name. Like the notifier primitive, this might have use cases where it is preferable to a queue. I'll have to think some more about it, and perhaps experiment with adding such a message notifier to my messaging library.

-- James

-

Actually I was thinking about debugging and maintenance more than writing the code to launch the vi. Personally I find it a pain to debug code when dynamically launched vis are used.

I haven't found them to be a pain, as long as my method of using them has a way of opening their block diagrams and shuts them down cleanly. I've found "Shared Clones" VIs harder to debug than dynamically launched ones.

That's exactly what I do when using LapDog.Messaging. (At least the technique is the same. Updating front panel controls may or may not occur in the user input loop.)

Have you considered wrapping the User Event primitives in a LapDog class, and adding a parent class of both it and the Queue class? If you put the "send message" methods in the parent, it would allow sending processes to treat the two types generically. This would probably not be that useful to you, if you only use User Events between loops on the same block diagram, but it might be an advantage to other LapDog users who code differently.

-- James

-

You wish the Dow Jones dropped to 212 points?

-

Also, rather than the fill-in-the-blank approach, I would have the VI accept a VI reference and use a blocking Run call. It would force you to make all of the potentially slow code a subVI, but that may not really be a bad thing. On second thought, though, if the potentially slow code needed any local data (or returned anything), it would take a bit more work to pull that off.

One could make two subVI's, "Open FP after delay" that outputs the notifier, and "Close FP" that inputs the notifier (as well as the error out of the rest of the code). Then it would be easy to add to any VI and be quite clear. Internals of the two VIs would need to be more complex, with an async call of a third subVI with the actual wait on notification, and some method of making sure "Open..." is finished before "Close..." actually closes the front panel.
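To make the two-subVI idea a bit more concrete, here is a Python sketch under loose assumptions: `DelayedPanel(...)` plays "Open FP after delay", `close()` plays "Close FP", a `threading.Event` stands in for the notifier, and `open_panel`/`close_panel` are hypothetical callbacks. The lock is one way of making sure "Open..." has finished (or been cancelled) before "Close..." acts.

```python
import threading


class DelayedPanel:
    def __init__(self, delay, open_panel, close_panel):
        self._done = threading.Event()  # plays the role of the notifier
        self._lock = threading.Lock()   # lets close() and the waiter agree on who wins
        self._opened = False
        self._open_panel = open_panel
        self._close_panel = close_panel
        self._waiter = threading.Thread(target=self._wait, args=(delay,), daemon=True)
        self._waiter.start()

    def _wait(self, delay):
        # The "third subVI": wait on the notification, with a timeout.
        if self._done.wait(timeout=delay):
            return  # the slow code finished quickly: never show the panel
        with self._lock:
            if not self._done.is_set():
                self._open_panel()
                self._opened = True

    def close(self):
        # "Close FP": signal completion, wait for the waiter to finish,
        # and close the panel only if it was actually opened.
        with self._lock:
            self._done.set()
            opened = self._opened
        self._waiter.join()
        if opened:
            self._close_panel()
```

Usage would be `panel = DelayedPanel(2.0, show, hide)`, then the potentially slow code, then `panel.close()`.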

-- James

[I'd try to write it if the graphics card on my work laptop hadn't up and died two days ago! "Seven working days to fix".]

-

Wow, now you're getting into the realm of large messaging middleware systems that companies charge through the nose for. It would be interesting to work on something like that, but it's way outside anything I've ever been asked to do in LabVIEW.

I don't need it that complex, but I was looking into using one of the open-source (i.e. free) middleware messaging systems such as RabbitMQ or ZeroMQ. Why reinvent the wheel? Unfortunately, there are no LabVIEW wrappers and I don't have the skills to create them (does anyone have experience using these from LabVIEW?). Instead, I'm thinking of just doing a simple Messenger using the TCP primitives.
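Whatever the language, a simple TCP Messenger needs some message framing, because TCP is a byte stream with no message boundaries. A common approach is a length prefix; the Python sketch below assumes a 4-byte big-endian length followed by a UTF-8 payload (a hypothetical wire format, not a standard).

```python
import socket
import struct

# Hypothetical wire format: 4-byte big-endian length, then the UTF-8 payload.
_HEADER = struct.Struct(">I")


def send_msg(sock: socket.socket, text: str) -> None:
    payload = text.encode("utf-8")
    sock.sendall(_HEADER.pack(len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    # recv() may return fewer bytes than asked for, so loop until n arrive.
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> str:
    (length,) = _HEADER.unpack(_recv_exact(sock, _HEADER.size))
    return _recv_exact(sock, length).decode("utf-8")
```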

-- James

-

I respectfully disagree...

I agree! In fact, I started to immediately think of "Priority Queues" as an example of a queue extension that didn't seem that useful to me. The design should be such that messages are handled promptly. In fact, I've never seen much advantage in "Preview" or "Flushing" of the queue. That's one of the reasons that my own messaging system abstracts Queues, User Events and the like as "Messengers"; I'm happy to ignore the extra features of Queues over User Events.

I was thinking about an eventual design of a "Network Messenger" when I wrote my previous post, and about distributed computing where the network may have temporary disruptions, or a computer may have to reboot or something. It was in that context that I was interested in what happens to queued messages building up. I certainly don't want to add extra bells and whistles to the raw queue primitives.

Been down that road. It certainly works, but unless you want to create a unique loop for every dialog box (not very scalable at all) you're pushed into calling the VI dynamically and dealing with the associated headaches. I find it easier to save dynamic calls for those situations when they're really necessary.

I've been trying a different tactic of standardizing the dynamic call: the launching of all VIs is identical and accomplished with the same "Launch" subVI. Every dynamic VI sits behind a "Messenger" and receives "Messages". Thus I only have to deal with the complexity once. I think I can easily add async dialog boxes to this design without ever having to revisit the details of dynamic launching.

(Why do they want a new dialog box for validating their inputs? Seems like it would be easier to color controls red if the input is invalid, refuse to let them progress until valid inputs are entered, or something like that.)

The inputs are "valid"; they just forget to change them from the default, or change them and forget to change them back.

-- James

-

I have finally uploaded the code (still very unfinished) in my other thread. See the example "Test Active Object.vi".

-- James

-

I've always been meaning to post this code library, once it is meaningfully "finished", but that may be a LONG time. So here is a very "beta", "lightly documented", and "less than entirely untested" version as of today.

NOTE: newer version (2011) in a later post.

Contains a "Messenging" library and a set of examples that are up-to-date versions of the ones I posted here and in my Parallel Process thread. Dependencies are the OpenG toolkit (mostly Data Tools) and the JKI State Machine, both available through VI Package Manager. LabVIEW 8.6.1.

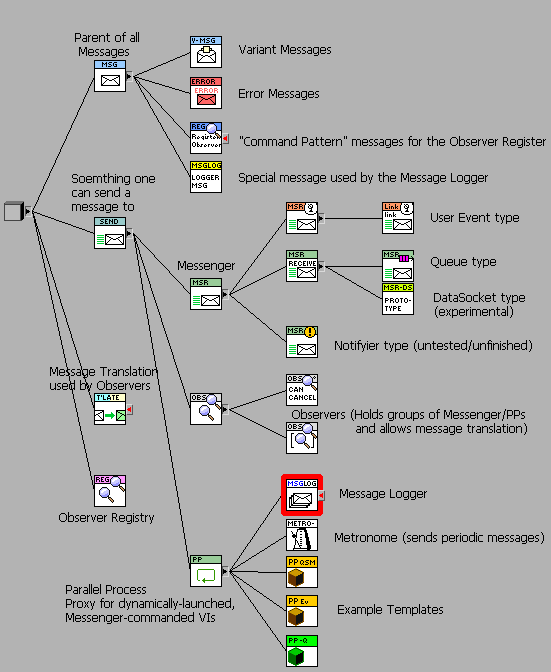

Here is a Class Diagram with labels:

There is a Palette Menu for the library, but if that doesn't work there is also a "Tree.vi" of the most useful VIs.

Any comments appreciated,

-- James

-


What I don't understand is why the Event Structure locks the FP after 'Done Calibrating' has stopped the bottom loop.

'Cause you hit the button again. Stopping the loop doesn't stop the event queue inherent to the event structure. It locks the front panel, as instructed, until the event is handled. That it will never be handled because the loop is stopped is immaterial.

-


First thing to do is put a Wait in the upper loop, which is currently running at max CPU and starving other processes. That might have strange effects.

-

The only thing I'm left wondering is when I would use the default implementation of an override method (i.e. call parent method); it doesn't seem to be of any use apart from as a placeholder.

It serves as a starting point for adding functionality. For example, if your "Car" needs to always have its headlights on when the engine is on, you would override "Start Engine" and add turning on the lights to it, while calling the parent method to actually start the engine.
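In Python terms (a loose analogy, with hypothetical names), the default override body is the `super()` call, and you add your own behaviour around it:

```python
class Vehicle:
    def __init__(self) -> None:
        self.engine_running = False

    def start_engine(self) -> None:
        self.engine_running = True


class Car(Vehicle):
    def __init__(self) -> None:
        super().__init__()
        self.headlights_on = False

    def start_engine(self) -> None:
        # Override: keep the parent's behaviour ("call parent method")...
        super().start_engine()
        # ...then add the Car-specific part.
        self.headlights_on = True
```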

-

To make sure I understand I've made another screenshot...I've created a class called Vehicle and a child class called car.

To extend the analogy let's replace your numerics with something vehicles have. A vehicle has an engine, and a method to "Start Engine". You've created a "car", which being a vehicle has an engine that can be started, and you've given it a second engine (!) and were surprised when the "Start Engine" method started the first engine.

OK, so, why did you add a second engine?

-- James

BTW. The "parent/child" terminology of OO is actually confusing; "Car" is not a child of "Vehicle", it is a type of vehicle.
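A Python illustration of the "second engine" situation, using double-underscore name mangling as a rough stand-in for LVOOP's per-class private data (the analogy is mine, and the names are hypothetical). The child's `__engine` is a separate field from the parent's, and the parent's `start_engine` only ever touches its own.

```python
class Vehicle:
    def __init__(self) -> None:
        self.__engine = "stopped"  # stored as _Vehicle__engine

    def start_engine(self) -> None:
        self.__engine = "running"  # always the Vehicle's engine

    def vehicle_engine(self) -> str:
        return self.__engine


class Car(Vehicle):
    def __init__(self) -> None:
        super().__init__()
        self.__engine = "stopped"  # a second, separate field: _Car__engine

    def car_engine(self) -> str:
        return self.__engine
```

After `Car().start_engine()`, the Vehicle's engine reads "running" while the Car's own engine is still "stopped", which is the surprise in your screenshot.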

-


A quick question though: when the VI that creates a queue is shut down, it obviously destroys that queue reference, as all other VIs subsequently return an error. Is this the same as calling the "Release reference" queue command in terms of memory behaviour? In other words, if the queue reference is lost this way, is the memory still freed up?

I believe so. It's "garbage collection"; where LabVIEW frees up the resources of the VI.

-

I can't figure out how to create a scenario where a dynamically launched sub-vi creates a data pipeline queue (for offloading processing of high-speed data to another asynchronous parallel process), then registers the queue to the mediator which passes it to a sub-vi that calls for it.

Alex, you can guess my advice: do away with the over-complexity of dynamic launching and the like. But ignoring that...

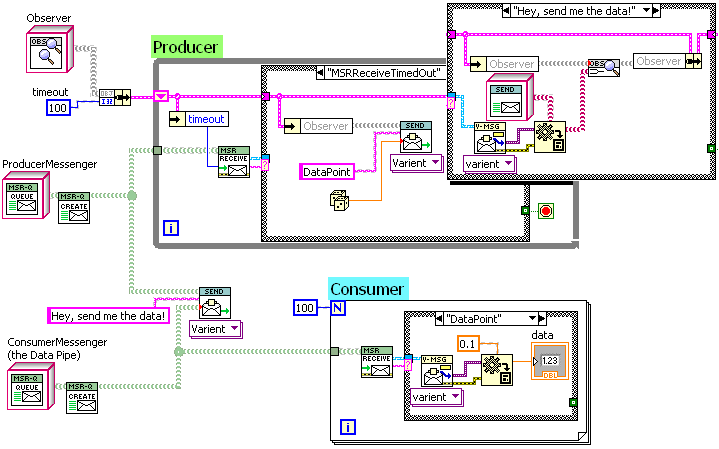

Whatever way you do this, it is better to think of the consumer making the data pipeline and getting that reference to the producer, rather than the other way round. The consumer is dependent on the queue; the producer is not. If I were designing something like this with my messaging design shown above, the producer would send its data to an "ObserverSet", which by default is empty (data sent nowhere). The consumer would create a "messenger" (queue) and send it to the producer in a "Hey, send me the data!" message (alternatively, the higher-level part of the program that creates both consumer and producer would create the queue and the message). The producer would add the provided messenger to the ObserverSet, allowing data piping to commence.

In the example pictured, the consumer code registers its own queue with the producer and is piped 100 data points.
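A rough Python rendering of that flow, with `queue.Queue` standing in for a messenger. `ObserverSet`, the `("Register", messenger)` message, and `run_producer` are hypothetical names. The producer starts with an empty set (data goes nowhere), services its inbox each iteration, and pipes data to whatever messengers have registered.

```python
import queue


class ObserverSet:
    """Messengers the producer sends to; empty by default."""

    def __init__(self) -> None:
        self._observers: list = []

    def add(self, messenger: queue.Queue) -> None:
        self._observers.append(messenger)

    def notify(self, msg) -> None:
        for m in self._observers:
            m.put(msg)  # unbounded queue, so this never blocks the producer


def run_producer(inbox: queue.Queue, n_points: int) -> None:
    observers = ObserverSet()
    for i in range(n_points):
        # Service pending messages, e.g. ("Register", messenger).
        while True:
            try:
                label, payload = inbox.get_nowait()
            except queue.Empty:
                break
            if label == "Register":
                observers.add(payload)
        observers.notify(("Data", i))
```

Note that the producer never creates the queue; the consumer (or the code that launches both) does, which keeps the dependency pointing the right way.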

I had a look over AF, but I just don't think I'm ready (no free time atm) to battle with the OOP concepts.

The Actor Framework is rather advanced. You might want to look at "LapDog", though, as it is a much simpler application of LVOOP that one can "get" more easily (I am surprised AQ thinks the Actor Framework is simpler).

-- James

-


Non-OOP Design Patterns (in Application Design & Architecture)

Only, presumably, if the team agree that OOP is a reasonably good set of rules. I doubt ShaunR will be much impressed with this argument.