Posts posted by drjdpowell

-

Hi Norm, I'm not sure I follow. Can you (or AQ) explain more about possible issues with putting an event registration in a subVI?

-

That was my reaction a month or two ago.

-

so you have to provide the event reference (or create a member VI for every single type of event structure which will ever be used by someone who uses the event, something which is obviously impossible).

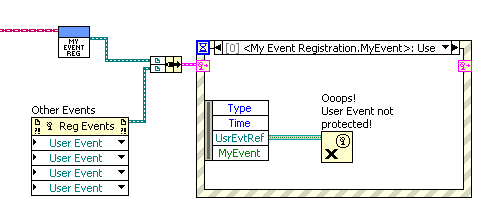

I think GregR means that you provide a function that produces an event registration for just the encapsulated User Event, and that registration is clustered with another registration for any other events before being passed to the event structure. Thus you only need one member VI. However, this doesn't keep the User Event encapsulated, because it can be accessed in the event structure frame itself. See below:

-- James

BTW, I learned only recently that one can use a cluster of multiple event registrations for handling by the same event structure; does anyone know if there are any performance differences with using multiple registrations instead of a single one?

-


I'm talking Modbus TCP to the PLC using the library on NI's website.

Is it this library you are using? If so, did you correct the bug pointed out in the comments:

a flaw in MB CRC-16.vi in NI Modbus.llb

A failure mode turned up in testing at 1200 baud. It turned out to be a flaw in MB CRC-16.vi in NI Modbus.llb. The failure mode is that MB Serial Recieve.vi, which waits for a Modbus reply to a command, may falsely determine that the message is complete before the last byte is received. This is because MB CRC-16.vi coerces the U16 CRC to a U8, and the U8 becomes zero for a message with all but the last byte transmitted. The correction is to change the data type of the Exception Code indicator from U8 to U16.

- Jim Figucia, Code G Automation. labview_work@msn.com - Sep 15, 2009

I haven't used it but when I downloaded the library a few months ago the bug was still there. Don't know if it could cause your problem.
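To make the quoted bug report concrete, here is a rough Python stand-in (LabVIEW diagrams can't be pasted as text). It uses the standard Modbus RTU CRC-16 and an example request frame chosen purely for illustration. It only shows that one byte of the 16-bit CRC register is already zero when the last byte is still missing; which byte the U8 coercion in MB CRC-16.vi actually keeps depends on how that VI orders the CRC bytes, which the comment doesn't say, so treat that part as an assumption.

```python
def modbus_crc16(data: bytes) -> int:
    """Standard Modbus RTU CRC-16 (initial value 0xFFFF, reflected polynomial 0xA001)."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            crc = (crc >> 1) ^ 0xA001 if crc & 1 else crc >> 1
    return crc


# Example request (read holding registers); the values are illustrative only.
payload = bytes([0x01, 0x03, 0x00, 0x00, 0x00, 0x0A])
crc = modbus_crc16(payload)
frame = payload + bytes([crc & 0xFF, crc >> 8])  # Modbus sends the CRC low byte first

full = modbus_crc16(frame)          # CRC over the complete frame, including its CRC bytes
partial = modbus_crc16(frame[:-1])  # same, but with the last byte not yet received

print(f"complete frame : 0x{full:04X}")
print(f"missing 1 byte : 0x{partial:04X} (high byte 0x{partial >> 8:02X})")
```

A "CRC equals zero means complete" test on the full 16 bits tells the two cases apart; a test that only sees the zeroed byte does not, which is consistent with the suggested U8 to U16 fix.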

-

I am Officially a CLA

I've just become an official CLA..........

......D

I wasn't that worried about failing.

-

If all you're doing is showing the User information, you have a third option to Daklu's two. Just have the subVI open its front panel and display the information, then immediately finish (but leaving the front panel open). To modify the example from the conversation you linked to, just delete the loop and the "Stop" button. I think you need to select "Show front panel when called" and NOT select "Close afterwards if originally closed". And make sure it isn't "Modal", as ShaunR suggests. The User can close the window when they don't need the info anymore.

-- James

-

I think you badly need the opportunity to use LVOOP for a couple of small projects, in a simple way. First just try using an object or two in place of type-def-clusters. Then try a parent and two children (your USB or TCP/IP communication might be a good choice). With experience in the basics, you'll be able to get more out of all that reading. There are quite a few examples of people on LAVA trying to develop tools to handle the complex tasks with multiple processes running in parallel, but it would be quite hard to just jump right in without some experience.

-

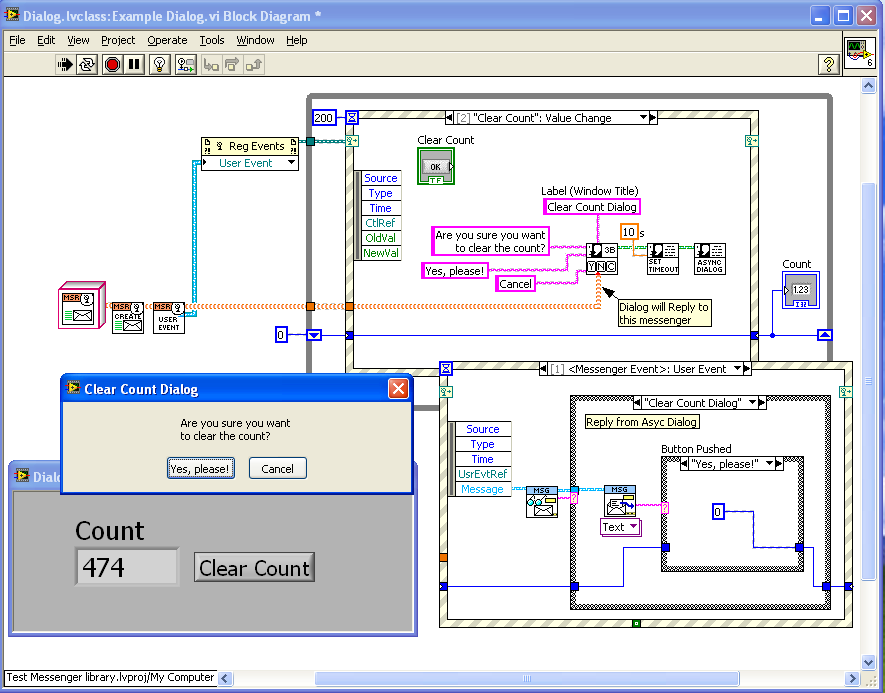

BTW, here's an extension I am working on, that uses the "Self-Addressed Envelope" aspect of the framework: a class of Asynchronous Dialog boxes. This allows a process loop to display a dialog box for User input, without waiting. It can then continue to perform other actions. When the User dismisses the dialog, his/her input is sent back to the loop's Messenger via a "reply". So far, I have only created a Dialog that is a modification of the standard LabVIEW "Three Button Dialog"; an example is shown below:

Here, the example process increments a count every 200 ms (in the Timeout case). If the User presses the "Clear Count" button, a dialog is configured and displayed. Had the standard LabVIEW dialog been used, this would block the incrementing of Count until the dialog completes. But here the dialog is dynamically launched and asynchronous, and thus the loop keeps counting. Part of the dialog configuration is to give it a message Label (also used as the dialog title) and attach the loop's own Messenger (Event-type, in this case) as a "return address". When the User pushes a button and dismisses the dialog, the name of that button is sent back to the return address with the supplied label. Thus, the loop can perform the required action, such as resetting the Count to zero if the User responded "Yes, please!". The asynchronous dialog box has an optional timeout function (it replies "window closed") and will automatically close if its calling VI goes idle (it uses a Notifier internally to achieve these functions).
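For readers who think better in text code, the "return address" idea can be roughed out in Python. This is only an analogy, not the LabVIEW implementation: `launch_async_dialog`, `run_demo`, and the `dismissed` event (standing in for the User clicking a button) are hypothetical names, and the timeout is shortened from 200 ms so the demo finishes quickly.

```python
import queue
import threading


def launch_async_dialog(label, return_address, dismissed, answer):
    """Start a stand-in 'dialog' in its own thread; it replies when dismissed."""
    def run():
        dismissed.wait()                     # stands in for the User pressing a button
        return_address.put((label, answer))  # reply goes to the attached return address
    threading.Thread(target=run, daemon=True).start()


def run_demo():
    inbox = queue.Queue()          # the loop's own "Messenger"
    dismissed = threading.Event()
    count = 0
    counts_while_open = 0
    launch_async_dialog("Clear Count?", inbox, dismissed, "Yes, please!")
    while True:
        try:
            label, button = inbox.get(timeout=0.01)
        except queue.Empty:
            # Timeout case: the loop keeps counting while the dialog is open.
            count += 1
            counts_while_open += 1
            if counts_while_open == 3:
                dismissed.set()    # simulate the User dismissing the dialog
            continue
        if label == "Clear Count?" and button == "Yes, please!":
            count = 0
        return counts_while_open, count


counts_while_open, final_count = run_demo()
print(counts_while_open, final_count)
```

The loop never blocks on the dialog; it only ever waits on its own inbox, and the dialog's answer arrives there like any other message.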

-- James

-

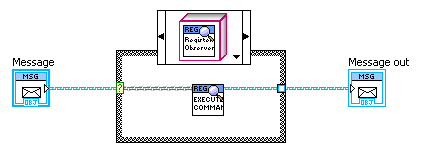

The only comment I have is somewhat superficial and simplistic (meaning not entirely thought through): since the logger display is called by reference based on a relative path, couldn't it somehow be included in the Create.vi of CommandMessenger[A,B]? Then, instead of the observer being CC'd in the Controller, the Messenger's "Receive" could send the copy. I'm thinking only of keeping the top level VI's diagram simple.

Thanks for replying,

If I understand your question correctly, you're asking if a message logger could automatically be attached inside the "Create" methods, thus making logging of all messages automatic while hiding this fact in the top-level diagram. Correct?

One could easily create a "Create and auto-log" VI that does exactly this, encapsulating the currently existing functions, and setting up a logger that records all messages to the created Messengers. Personally, I wouldn't use this, as I don't actually want all messages logged except when I'm debugging, and when I am logging I want this fact to be very clear from the top-level VI. What is logged and where isn't something that should be hidden, IMO.

-

Yes, it would. Insert standard arguments here regarding priorities, resources...

Ah, but at least the resources would be spent on "interesting new functionality", rather than boring old "rehashing existing functionality".

-

Hello,

Any comments on the code library I uploaded a month ago? If nothing else, did the thing download without any broken arrows (i.e. did I miss any dependencies)? Did the examples run?

-- James

-

Regarding this code fragment, wouldn't a high-performance, flexible, and clearly readable solution be to add the functionality to the case structure itself, by implementing these Ideas: Wire Class To Case Selector and Allow vi server reference type as case selector?

It would look something like this (the "default" case would return the parent "Message" wire type):

-- James

-


24.5, then.

Actually, looking at the "Like"s from those who didn't post strongly suggests 4 additional votes for small terminals.

So that makes it 28.5 to 4.5.

-- James

-

Final score:

Small terminals: 23.5 votes (that includes the "mostly-no"s, the "no"s and the "HELL, NO"s).

Icons: 4.5 votes.

-- James

-

Everything's by-value, not by-reference, so the variant attributes disappear with the variant and containing object.

-

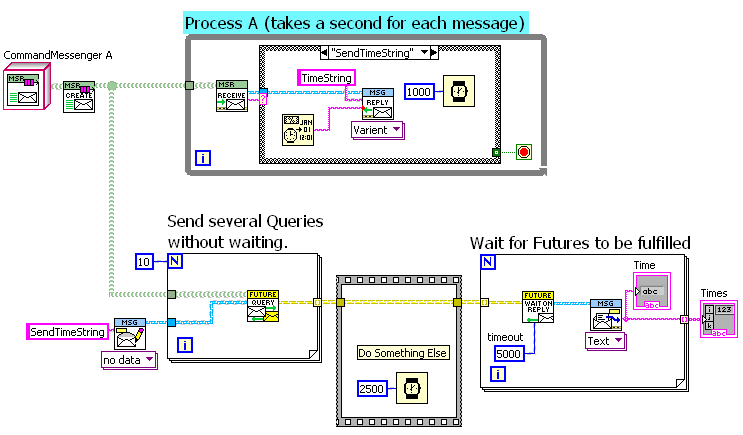

For fun, I whipped up a version of a "Future" in my Messaging Library. It takes my existing "Query" function and splits it among two VIs, connected by a "Future Reply" object. In the below example I have a process that takes a second per received message to reply. I start 10 queries, sending the "SendTimeString" message each time, then simulate doing something else for 2.5sec, then I wait for the futures to be completed. This is similar to the way "Wait on Async Call" works.
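As a text-language analogy only (this is not the Messaging Library), the same start-now, collect-later pattern looks roughly like this with Python's `concurrent.futures`. The single-worker executor plays the part of the one slow process, `send_time_string` and `run_queries` are made-up names, and the delays are scaled down from 1 s and 2.5 s so it runs quickly.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def send_time_string(query_id):
    """Stand-in for the process that takes a while to answer each message."""
    time.sleep(0.01)  # scaled down from 1 s per message
    return f"query {query_id}: {time.strftime('%H:%M:%S')}"


def run_queries(n=10):
    # One worker: replies are produced one at a time, like a single process loop.
    with ThreadPoolExecutor(max_workers=1) as process:
        futures = [process.submit(send_time_string, i) for i in range(n)]  # start all queries
        time.sleep(0.025)                      # simulate doing something else meanwhile
        return [f.result() for f in futures]   # now wait for each future to complete


replies = run_queries()
for reply in replies:
    print(reply)
```

Splitting "send the query" from "wait for the reply" is what lets the caller overlap its own work with the process's response time.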

-


Just as an aside, the new "Wait on Asynchronous Call" Node is another example of a type of "future".

-

I actually want my wires to kink in a specific, readable way.

The feature I'm imagining will only snap to a possible position when it is within a couple of pixels. This should be small enough that it will act to help the programmer align things exactly as he/she wants. So, if you want the tops of subVIs to line up instead of straight wires then no problem. Want to bend the wires in a readable way, no problem. Basically, you place the item where you want and the program helps with the micro-positioning.

We can actually do this by using the alignment tools as I wrote on 1) if you select both terminals before. If this is done automatically it will cause headache on my side, since I don't want all my controls to fit together.

You would have to place the terminal within two pixels of alignment to trigger the snap-to; nothing is forced on the programmer. Now, if you actually wanted the terminal one or two pixels off alignment with another, you would need to hit the arrow key once or twice. Hopefully, such situations will be rare. If not, there would be the ability (including a hot key) to turn the feature on/off.
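The snap-to rule itself is tiny. As a one-dimensional sketch in Python, where the function name, the list of candidate guide positions, and the default tolerance of two pixels are all just illustrative choices:

```python
def snap(position, guides, tolerance=2):
    """Return the nearest guide if it is within `tolerance` pixels, else leave position alone."""
    nearest = min(guides, key=lambda g: abs(g - position), default=None)
    if nearest is not None and abs(nearest - position) <= tolerance:
        return nearest
    return position


print(snap(101, [100, 200]))  # close to the guide at 100, so it snaps
print(snap(105, [100, 200]))  # too far from any guide, so it stays put
```

Anything dropped more than the tolerance away from every guide is left exactly where the programmer put it.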

Unfortunately, it's hard to verbally communicate the advantages of CAD-like guides.

-- James

-


Correct me if I'm wrong, but there's no way to set the fill direction, is there?

I had a similar application and could not figure out how to do it. Sorry.

-

Structurally what you're describing is, if I'm understanding correctly, similar to the decorator pattern but it is not (imo) a decorator.

You're right, it doesn't really fit the intention of the "Decorator pattern", but structurally it is very similar.

Forgive me for saying so, but applying that in this particular case smells of too much indirection. I don't see that it provides any real benefit in this use case.

In the mental image I use for my messages, envelopes with contents inside and a label on the outside, having the Messenger place the received envelope inside another envelope and labeling it "This is a message from Slave1" doesn't seem confusing to me. Not much more than altering the label of the original message and then feeding it into a parser, anyway.

Just an idea; as I said, I have never used it yet.

-- James

-

If the state is exported as a flattened string or variant the external code can unflatten it. Not enough data protection. There's also the potential of having the external code send an incorrect flattened type to the component. No type safety. In the case of mementos classes provide a far better combination of data protection, type safety, and decoupling than what is easily available using traditional techniques.

As an aside: is this really true? LabVIEW objects can easily be flattened to a string, allowing the external code to manipulate the string, and then unflattened back to an object. So it's no safer than a variant or flattened data. And, hey, Shaun has an encryption package, so he could provide an encrypted, flattened string, which is more protected than a LabVIEW object.

-

Ok I'll shut up then. Obviously completely over my head

Not over your head, I'm sure, but I know you don't like getting POOP-y

-

This comment got me wondering... Maybe 10% of my app-specific classes use dynamic dispatching to provide the application's functionality. What's the going rate for others?

I've only been using OOP inheritance for less than two years, and my job has never been more than 30% programming, so I don't have meaningful statistics. But I have had one program that involves look-up and display of data records from seven very different measurements from a database. This led to a complex "Record" class with seven children. I also developed my messaging design for this project; it has seven "Plot" actors to display the datasets of the seven record types in a subpanel. Making a matching change in seven subVIs (actually eight, including the parent) is a pain, regardless of what the change is. It only takes one project to learn that lesson.

-- James

-

Hello,

Not entirely following the latest part of the discussion, but there may be some confusion between what Daklu was doing, extending the message identifier with "Slave1:", and the common method of adding parameters into a text command (as, for example, in the JKI statemachine with its ">>" separator). Parameters have to be parsed out of the command when received, thus the need to decide on separating characters and write subVIs to do the parsing. But Daklu's "Slave1:ActionDone" never needs to be parsed, as it is an indivisible, parameterless command that triggers a specific case in his "Master" case structure (see his example diagram).

Now, if he did want to separate the "Slave1" and "ActionDone" parts (for example, to use the same "ActionDone" code with messages from both slaves using the slave name as a parameter) then he could parse the message name on the ":" or other separator. But there is an alternate possibility that avoids any possibility of an error in parsing (Shaun, stop reading, this is getting OOP-y; there's even a pattern used, "Decorator"). Instead of using a "PrefixedMessageQueue", use a variation of that idea that I might call an "OuterEnvelopeMessageQueue". The latter queue takes every message enqueued and encloses it as the data of another message, with the enclosing messages all having the name specified by the Master ("Slave1" for example). When the Master receives any "Slave1" message, it extracts the internal message and then acts on it, with the knowledge of which slave the message came from.

You can use "OuterEnvelopes" to accomplish the same things as prefixes, though it is more work to read the message (though not more work than parsing a prefixed message) and less human-readable (unless you make a custom probe). It may be useful, though.
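Since LabVIEW diagrams can't go in a text post, here is the outer-envelope idea roughed out in Python. It is a sketch of the concept only: `Message`, `OuterEnvelopeQueue`, and the slave names are stand-ins, not the classes in my library or Daklu's.

```python
from dataclasses import dataclass
from queue import Queue
from typing import Any


@dataclass
class Message:
    name: str
    data: Any = None


class OuterEnvelopeQueue:
    """Hypothetical wrapper: encloses every enqueued message inside an outer message."""

    def __init__(self, target: Queue, envelope_name: str):
        self._target = target
        self._envelope_name = envelope_name  # name chosen by the Master, e.g. "Slave1"

    def enqueue(self, message: Message) -> None:
        self._target.put(Message(self._envelope_name, message))


master_inbox = Queue()
slave1_out = OuterEnvelopeQueue(master_inbox, "Slave1")
slave2_out = OuterEnvelopeQueue(master_inbox, "Slave2")

# Each slave sends the same plain message; neither knows about the envelope.
slave1_out.enqueue(Message("ActionDone"))
slave2_out.enqueue(Message("ActionDone"))

handled = []
while not master_inbox.empty():
    outer = master_inbox.get()
    inner = outer.data  # extract the enclosed message; no string parsing involved
    if inner.name == "ActionDone":
        handled.append((outer.name, inner.name))  # same code path, slave name as a parameter

print(handled)
```

The Master gets the sender from the outer name and the command from the inner one, so there is no separator character to agree on and nothing to mis-parse.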

-- James

Note: I put an OuterEnvelope ability in my messaging design, but I've never actually used it yet, so I'm speaking theoretically.

-

Interesting, intermittent problem (in User Interface)

Just a small suggestion, but you could place your code in the TRUE case of a case structure connected to the "NewVal" output of the event case. That would drop any double clicks by the user (as the second would be false). Can't help with the dialogs; though the primitive 2-button can be OKed with the Enter key, your second one has no key bindings and thus should require a very deliberate User action to dismiss.

How do you know the event happened, BTW? Are you sure the action contained isn't being triggered by some other method than the event case?

-- James