Posts: 590
Days Won: 26

Everything posted by ensegre

How to load a base64-encoded image in LabVIEW?

ensegre replied to Harris Hu's topic in LabVIEW General

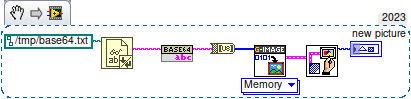

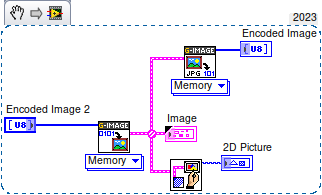

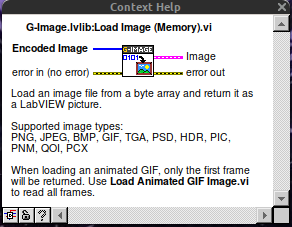

So, in LV >= 20, this can be done with OpenSerializer.Base64 and G-Image. That simple. Linux just does not have IMAQ; but who said that the result should be an IMAQ image?
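
The point is that a base64 payload is just the bytes of an ordinary image file, so nothing ties it to IMAQ. A minimal Python sketch of the first two steps, assuming a PNG payload (this is not the OpenSerializer or G-Image API; the function names are hypothetical): strip an optional data-URI prefix, decode, and read the PNG header to confirm what you got.

```python
import base64
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def decode_base64_image(text: str) -> bytes:
    """Decode a base64 image payload, tolerating an optional data-URI prefix."""
    # e.g. "data:image/png;base64,iVBORw0..." -> keep only what follows the comma
    if text.startswith("data:"):
        text = text.split(",", 1)[1]
    # Drop whitespace and line breaks that often end up in pasted base64 text
    text = "".join(text.split())
    return base64.b64decode(text, validate=True)


def png_size(data: bytes) -> tuple[int, int]:
    """Return (width, height) read from the IHDR chunk of a PNG byte string."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG")
    # After the 8-byte signature: chunk length (u32), b"IHDR", width (u32), height (u32), big-endian
    _length, chunk_type, width, height = struct.unpack(">I4sII", data[8:24])
    if chunk_type != b"IHDR":
        raise ValueError("IHDR chunk not found where expected")
    return width, height
```

The header check only proves the payload is a PNG; turning it into pixels still needs an actual decoder, which is the part left to G-Image here.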

How to load a base64-encoded image in LabVIEW?

ensegre replied to Harris Hu's topic in LabVIEW General

Where do you get that from?

How to load a base64-encoded image in LabVIEW?

ensegre replied to Harris Hu's topic in LabVIEW General

I haven't really looked into it yet, but why not just wrap libjpeg, instead of undertaking the didactic exercise of reinventing the wheel in G?

How to load a base64-encoded image in LabVIEW?

ensegre replied to Harris Hu's topic in LabVIEW General

You could also check https://github.com/ISISSynchGroup/mjpeg-reader, which provides a .NET solution (not tried). So, who volunteers for something that works on Linux?
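
For the Linux case, if the stream is plain concatenated JPEGs (as MJPEG often is), the framing part needs no .NET at all. A hedged Python sketch that only splits frames at their SOI/EOI markers; it is not necessarily how mjpeg-reader does it, the helper name is hypothetical, and each frame still has to go to a JPEG decoder (libjpeg, G-Image, ...).

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_mjpeg(stream: bytes) -> list[bytes]:
    """Split a concatenated MJPEG byte stream into individual JPEG frames.

    Naive sketch: assumes frames are simply concatenated (anything between
    frames, e.g. multipart boundaries, is skipped) and that frames contain no
    embedded thumbnails, since an EXIF thumbnail carries its own SOI/EOI pair.
    """
    frames = []
    pos = 0
    while True:
        start = stream.find(SOI, pos)
        if start < 0:
            break
        end = stream.find(EOI, start + 2)
        if end < 0:
            break  # incomplete trailing frame: keep it for when more data arrives
        frames.append(stream[start:end + 2])
        pos = end + 2
    return frames
```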

How to load a base64-encoded image in LabVIEW?

ensegre replied to Harris Hu's topic in LabVIEW General

As for converting JPEG streams in memory, a very long time ago I used https://forums.ni.com/t5/Example-Code/jpeg-string-to-picture/ta-p/3529632 (Windows only). At the end of the discussion thread there, @dadreamer refers to https://forums.ni.com/t5/Machine-Vision/Convert-JPEG-image-in-memory-to-Imaq-Image/m-p/3786705#M51129, which links to an alternative WinAPI approach.
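
Decoding the pixels is what those Windows-only routes delegate to the OS, but some information, e.g. the image size needed to preallocate a buffer, can be read from an in-memory JPEG directly. A hedged Python sketch (hypothetical helper, not any of the linked VIs) that walks the marker segments up to the SOF header; it assumes a well-formed baseline or progressive file and does not decode anything.

```python
import struct


def jpeg_size(data: bytes) -> tuple[int, int]:
    """Return (width, height) of an in-memory JPEG by walking its marker segments."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError("lost marker sync")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker: skip it
            pos += 1
            continue
        # Segment length is big-endian and includes its own 2 bytes
        (seg_len,) = struct.unpack(">H", data[pos + 2:pos + 4])
        # SOF0..SOF15 carry the frame size, except DHT (C4), JPG (C8) and DAC (CC)
        if 0xC0 <= marker <= 0xCF and marker not in (0xC4, 0xC8, 0xCC):
            # SOF payload: precision (1 byte), height (2 bytes), width (2 bytes)
            height, width = struct.unpack(">HH", data[pos + 5:pos + 9])
            return width, height
        pos += 2 + seg_len
    raise ValueError("no SOF marker found")
```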

How to load a base64-encoded image in LabVIEW?

ensegre replied to Harris Hu's topic in LabVIEW General

I haven't tried any of them, but these are the first 3 results popping up from a web search:
https://forums.ni.com/t5/Example-Code/LabVIEW-Utility-VIs-for-Base64-and-Base32Hex-Encoding-Using/ta-p/3491477
https://www.vipm.io/package/labview_open_source_project_lib_serializer_base64/
https://github.com/LabVIEW-Open-Source/Serializer.Addons (apparently the repository with the code of the previous one)
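
For the curious, the decoding rule all such libraries implement is small: every 4 characters carry 24 bits, i.e. 3 bytes. A Python sketch of standard (RFC 4648) base64 decoding, not the code of any package above; in Python itself one would just call base64.b64decode. It does not validate misplaced '=' characters.

```python
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/"
INDEX = {c: i for i, c in enumerate(ALPHABET)}


def b64decode_by_hand(text: str) -> bytes:
    """Decode standard base64: each group of 4 characters (4 x 6 bits) yields 3 bytes."""
    text = "".join(text.split())  # ignore line breaks and spaces
    if len(text) % 4:
        raise ValueError("length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(text), 4):
        quad = text[i:i + 4]
        pad = quad.count("=")
        # Pack four 6-bit values into one 24-bit integer ('=' padding counts as 0)
        n = 0
        for c in quad:
            n = (n << 6) | (0 if c == "=" else INDEX[c])
        # One '=' drops the last byte of the group, two drop the last two
        out += n.to_bytes(3, "big")[:3 - pad]
    return bytes(out)
```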

What are some choices for producer-consumer protocols?

ensegre replied to Reds's topic in LabVIEW General

Redis is certainly high-performance and well suited to multiple, loosely coupled writers, readers and subscribers, with bindings for many ecosystems. One of its features, which I haven't explored, is Streams. I too would be curious to know whether continuous cross-application data streaming could be implemented efficiently with them.
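
What distinguishes a stream from a plain queue is that reading does not consume: it is an append-only log with entry IDs, and each reader keeps its own cursor. A toy in-memory Python model of just that idea (hypothetical class, in-process only, not a Redis client; with redis-py the real calls would be `xadd` and `xread`):

```python
import threading


class StreamLog:
    """In-memory toy model of an append-only stream (loosely like Redis XADD/XREAD).

    Unlike a queue, reading does not remove entries: each consumer keeps its own
    cursor (the last ID it has seen), so several readers can follow one writer
    at their own pace.
    """

    def __init__(self):
        self._entries = []  # list of (id, fields) tuples, in append order
        self._lock = threading.Lock()

    def append(self, fields: dict) -> int:
        """Append an entry and return its ID (1, 2, 3, ...)."""
        with self._lock:
            entry_id = len(self._entries) + 1
            self._entries.append((entry_id, dict(fields)))
            return entry_id

    def read_after(self, last_id: int, count: int = 100) -> list:
        """Return up to `count` entries whose ID is greater than `last_id`."""
        with self._lock:
            # IDs are dense and start at 1, so the entry with ID k sits at index k - 1
            return self._entries[last_id:last_id + count]
```

A fast consumer calls `read_after(0)` and gets everything; a slow one reads one entry at a time, passing the last ID it saw. Real Redis Streams add persistence, blocking reads (`XREAD BLOCK`), consumer groups and trimming, and that is where the cross-application performance question would actually be decided.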

So I have been given this monster FPGA project to patch. The main target VI alone has a BD of 9034x10913 px, with 72 different loops. Yes, there are some documentation and comments, but that does not really cut it. The logic uses I haven't counted how many tens or hundreds of different FIFOs and memory locations, read and written by this or that loop of the hierarchy. Why, oh why, don't I have a "Find All Instances" for FIFOs, like there is for VIs, to navigate them?

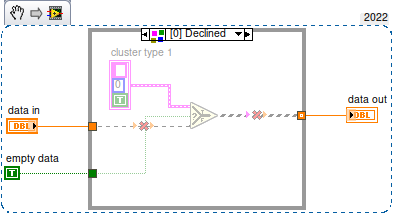

Maybe the question is whether you have to be committed to this array of clusters of string+variant for some specific reason, or whether it is an attempt to implement some architecture which might be implemented differently. I.e., I don't understand whether yours is a general question about design or a specific question about the mechanics of converting variants to data.
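
To make the distinction concrete, a Python analogy (hypothetical names, nothing LabVIEW-specific): the design question is whether the data should live as loosely typed name/value pairs at all, or be a typed record from the start; the mechanics question is only the conversion step in between. The strict type check below is a choice made for illustration; LabVIEW's Variant To Data has its own conversion rules.

```python
from dataclasses import dataclass, fields
from typing import Any

# The "array of clusters of string+variant" style: names paired with loosely typed values
Pairs = list[tuple[str, Any]]


@dataclass
class Settings:  # hypothetical typed record: the alternative design
    gain: float
    channel: int
    label: str


def pairs_to_record(pairs: Pairs) -> Settings:
    """Convert name/value pairs into a typed record, failing loudly on a
    missing entry or on a value whose type does not match the declaration."""
    values = dict(pairs)
    kwargs = {}
    for f in fields(Settings):
        if f.name not in values:
            raise KeyError(f"missing entry {f.name!r}")
        v = values[f.name]
        if not isinstance(v, f.type):
            raise TypeError(f"{f.name!r}: expected {f.type.__name__}, got {type(v).__name__}")
        kwargs[f.name] = v
    return Settings(**kwargs)
```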

Serial Communication Question, Please

ensegre replied to jmltinc's topic in Remote Control, Monitoring and the Internet

I.e., crossover cable vs. null modem cable. But the OP says that the exe succeeds with the command, so that doesn't seem to be the issue here.

Serial Communication Question, Please

ensegre replied to jmltinc's topic in Remote Control, Monitoring and the Internet

Just to make sure: if you read 5 bytes, you get only the first 5 bytes. If you increase the count to 'byte count', you would get a timeout warning if the other program really outputs only 5 bytes, but you would see any trailing bytes if there were some. Also, right-clicking the string indicator and checking 'Hex Display' may come in handy.
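
To illustrate the read semantics, a small Python model with a hypothetical FakePort (not VISA, and not a real serial library): asking for more bytes than the device sent costs a timeout but reveals any trailing bytes, and a hex view makes non-printable bytes visible. Here the timeout is just a boolean flag, for simplicity.

```python
class FakePort:
    """Hypothetical stand-in for a serial port: read(n) returns whatever is
    buffered, up to n bytes, and reports a timeout if fewer than n arrived."""

    def __init__(self, incoming: bytes):
        self._buf = bytearray(incoming)

    def read(self, n: int) -> tuple[bytes, bool]:
        data = bytes(self._buf[:n])
        del self._buf[:n]  # consumed bytes are gone, as with a real port
        timed_out = len(data) < n
        return data, timed_out


def hex_display(data: bytes) -> str:
    """Space-separated uppercase hex, similar in spirit to a string indicator's Hex Display."""
    return data.hex(" ").upper()
```

With a reply of 6 bytes, `read(5)` returns the first 5 without a timeout; a following `read(10)` returns the sixth byte and flags the timeout, which is exactly how a trailing byte would reveal itself.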

Serial Communication Question, Please

ensegre replied to jmltinc's topic in Remote Control, Monitoring and the Internet

Hard to say without seeing the rig's documentation (if it is any good at all) and without some trial and error. These old devices may be quirky when it comes to response times (the internal µP has to interpret and carry out the command) and protocol requirements. Are you sure the message does not require an EOT or a checksum of some sort? Does the rig respond with some kind of ACK or error which you could read back? Are you sure of your BCD encoding? Wikipedia lists many flavours of it: https://en.wikipedia.org/wiki/Binary-coded_decimal If there are read commands returning a known value, like the current frequency or the radio model, I would start with them, to make sure that the handshake works as expected.
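
Two places where BCD commonly goes wrong are the byte order and packed vs. unpacked digits. A Python sketch (hypothetical helpers; the frequency and byte count in the check are arbitrary examples, not any specific rig's format) producing the different flavours side by side, so they can be compared with the example bytes in the manual:

```python
def to_packed_bcd(value: int, n_bytes: int, little_endian: bool = False) -> bytes:
    """Packed BCD: two decimal digits per byte, the more significant digit in the high nibble.

    little_endian=True emits the least significant digit pair first, a byte order
    some radio protocols use; which flavour a given rig expects has to come from its manual.
    """
    if value < 0:
        raise ValueError("BCD here is unsigned")
    digits = str(value).zfill(2 * n_bytes)
    if len(digits) > 2 * n_bytes:
        raise ValueError("value does not fit in n_bytes")
    packed = bytes((int(digits[i]) << 4) | int(digits[i + 1])
                   for i in range(0, len(digits), 2))
    return packed[::-1] if little_endian else packed


def to_unpacked_bcd(value: int, n_digits: int) -> bytes:
    """Unpacked BCD: one decimal digit per byte (0x00..0x09)."""
    if value < 0:
        raise ValueError("BCD here is unsigned")
    digits = str(value).zfill(n_digits)
    if len(digits) > n_digits:
        raise ValueError("value does not fit in n_digits")
    return bytes(int(d) for d in digits)
```

For example, 14250000 in 5 packed bytes gives `00 14 25 00 00`, or `00 00 25 14 00` with the reversed byte order.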

Serial Communication Question, Please

ensegre replied to jmltinc's topic in Remote Control, Monitoring and the Internet

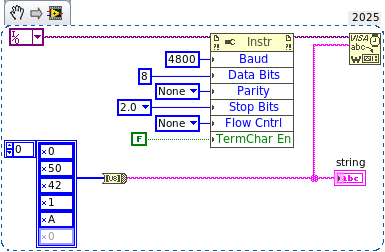

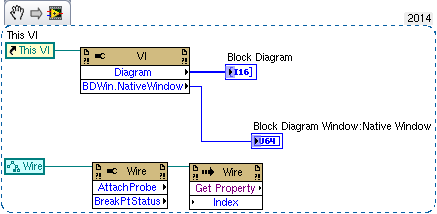

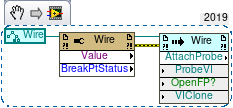

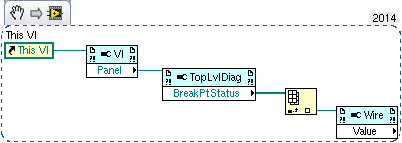

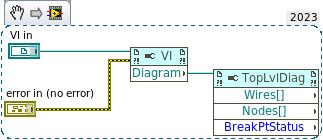

This is the situation in LV14 and LV19 here. In LV14, Wire has some interesting properties, but not Value. Note that, e.g., Attach Probe is accessed as a property in LV14 and as a method in LV19; and BD is an array of integers in LV14.

16 replies

Tagged with: breakpoints, wire values (and 2 more)

Rolf is correct: I forgot to enable scripting in the LV19 I used to check. OTOH, in LV14 "Block Diagram" is indeed present, but it is an array of I6 rather than a ref. I can't guess what its intended use was back then; maybe it was just a half-baked feature.

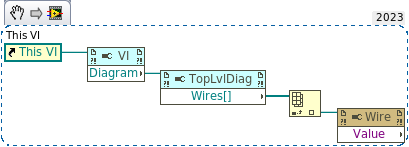

Since you asked, here are my findings:

- LV23: I can create the attached snippet (I don't know whether it works) and save it for LV14 (also attached). All the pull-down menus show the relevant properties.
- LV21 and LV19: they open the VI saved for LV14 and show a correct image, but the first property node lacks the "Block Diagram" entry in its pull-down menu, and the further properties have no menu. ETA: they do show the relevant pull-down menus when "Show VI Scripting" is checked.
- LV14: the first property node menu *has* a "Block Diagram" entry, but the further properties don't match LV23.

Attachments: W14.vi, W23.vi

I thought it had to be read as "the *top-level node* of the BD of that particular instance", not as "the BD of the top (prototype) VI", but I haven't really tried... How do you get the values running on a wire, btw, for my education?

I assumed that if you obtain the clone VI ref externally and just pass it to the VI Block Diagram property, you get the clone's block diagram ref, but I haven't really tried it. Isn't that the case? If it isn't, sorry for misleading you...

There was this thing about getting the VI ref of the actual clone instance; I remember asking about it in the past (it must be somewhere here on LAVA), and besides, there is this nice VI commander tool (I forgot its name; Windows only) which obviously uses it. Once I have a moment I'll look for it and report back. ETA: , of course!

A bit sad to have to say this nowadays, when most of the traffic on this forum is about leaked videos, money rituals and human sacrifices, but isn't this the part where someone starts reposting links to the basic LabVIEW training resources on the NI site?