Everything posted by Rolf Kalbermatter

-

Well, a Windows bitmap file starts with a BITMAPFILEHEADER:

    typedef struct tagBITMAPFILEHEADER {
        WORD  bfType;
        DWORD bfSize;
        WORD  bfReserved1;
        WORD  bfReserved2;
        DWORD bfOffBits;
    } BITMAPFILEHEADER, *LPBITMAPFILEHEADER, *PBITMAPFILEHEADER;

This is a 14-byte structure whose first two bytes contain the characters "BM", which corresponds nicely with our 66 = 'B' and 77 = 'M'. The next 4 bytes are a little-endian 32-bit unsigned integer giving the total size of the file in bytes. So here we have 56 * 65536 + 64 * 256 + 54 = 3,686,454 bytes. Then come two 16-bit integers that are reserved, and then another 32-bit unsigned integer giving the offset of the actual bitmap bits from the start of the byte stream, which, not surprisingly, is 54: the 14 bytes of this structure plus the 40 bytes of the BITMAPINFOHEADER that follows. If you were sure what format of bitmap is in the stream you could jump right there, but that is usually not a good idea. You do want to interpret the bitmap header to find out what format is really in there, and only try to "decode" the data if you understand the format.
After this there is a BITMAPINFO structure (or, in some obscure cases, a BITMAPCOREINFO structure; this was the format used by OS/2 bitmaps long ago. Windows doesn't create such files, but most bitmap functions in Windows can read them). Which of the two you have can be found by interpreting the next 4 bytes as a 32-bit unsigned integer and looking at its value. A BITMAPCOREINFO has 12 in here, the size of the BITMAPCOREHEADER structure. A BITMAPINFO structure has 40 in here, the size of the BITMAPINFOHEADER inside BITMAPINFO. Since you have 40 in there, it must be a BITMAPINFO structure, surprise!

    typedef struct tagBITMAPINFOHEADER {
        DWORD biSize;
        LONG  biWidth;
        LONG  biHeight;
        WORD  biPlanes;
        WORD  biBitCount;
        DWORD biCompression;
        DWORD biSizeImage;
        LONG  biXPelsPerMeter;
        LONG  biYPelsPerMeter;
        DWORD biClrUsed;
        DWORD biClrImportant;
    } BITMAPINFOHEADER, *PBITMAPINFOHEADER;

biWidth and biHeight are clear. biPlanes can be confusing but should be 1. biBitCount is the most interesting right now, as it indicates how many bits a pixel has. If this is 8 or less, a pixel is only an index into the color table that follows directly after the BITMAPINFOHEADER; that table has biClrUsed entries, or the full 2^biBitCount entries if biClrUsed is 0. If it is bigger than 8 there is usually NO color table at all, but you need to check that biClrUsed is 0; if it is not 0, there are biClrUsed elements in the RGBQUAD array that can be used to optimize the color handling. With biBitCount > 8 the pixel values directly encode the color. You probably have either 24 or 32 in here. 24 means that each pixel consists of 3 bytes and each row of pixels is padded to a 4-byte boundary. 32 means that each pixel is 32 bits and, read as a little-endian U32, directly encodes a LabVIEW RGB value, but you should make sure to mask out the uppermost byte by ANDing the pixels with 0xFFFFFF.
biCompression is also important. If this is not BI_RGB (0) you will likely want to abort here, as you would have to start bit shuffling. RLE encoding is fairly doable in LabVIEW, but if the compression indicates a JPEG or PNG format we are back at square one.
Now the nice thing about all this is that there are already VIs in LabVIEW that can deal with BMP files (vi.lib\picture\bmp.llb). The less nice thing is that they are written to work directly on file refnums. Turning them into something that interprets a byte stream array will be some work. A nice exercise in byte shuffling. It's not really complicated, but if you haven't done it before it's a bit of work the first time around.
But a lot easier than trying to get a callback DLL working.
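For readers who want to see the header walk above as code, here is a minimal C sketch, assuming the byte stream starts with a BITMAPFILEHEADER and that only uncompressed (BI_RGB) 24- or 32-bit images with a 40-byte BITMAPINFOHEADER are accepted; `bmp_parse`, `BmpInfo`, `rd_u16le` and `rd_u32le` are hypothetical names. It reads every field byte by byte as little-endian instead of casting the buffer to the Windows structs, which sidesteps struct packing (BITMAPFILEHEADER is 14 bytes, and a compiler would normally pad it).

```c
#include <stddef.h>
#include <stdint.h>

/* BMP headers are always stored little-endian. */
static uint16_t rd_u16le(const uint8_t *p) { return (uint16_t)(p[0] | (p[1] << 8)); }
static uint32_t rd_u32le(const uint8_t *p)
{
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

typedef struct {
    uint32_t file_size;    /* bfSize */
    uint32_t pixel_offset; /* bfOffBits */
    int32_t  width;        /* biWidth */
    int32_t  height;       /* biHeight; > 0 means rows are stored bottom-up */
    uint16_t bit_count;    /* biBitCount: bits (not bytes) per pixel */
    uint32_t compression;  /* biCompression; 0 = BI_RGB */
    uint32_t row_stride;   /* bytes per row including padding to 4 bytes */
} BmpInfo;

/* Returns 0 on success, a negative code for anything this sketch doesn't handle. */
static int bmp_parse(const uint8_t *buf, size_t len, BmpInfo *out)
{
    if (buf == NULL || len < 14 + 40) return -1;      /* both headers must be there */
    if (buf[0] != 'B' || buf[1] != 'M') return -2;    /* bfType: 66, 77 */
    out->file_size    = rd_u32le(buf + 2);
    out->pixel_offset = rd_u32le(buf + 10);           /* after 2 reserved WORDs */
    if (rd_u32le(buf + 14) != 40) return -3;          /* biSize: 12 would be OS/2 core */
    out->width       = (int32_t)rd_u32le(buf + 18);
    out->height      = (int32_t)rd_u32le(buf + 22);
    out->bit_count   = rd_u16le(buf + 28);            /* biPlanes sits at offset 26 */
    out->compression = rd_u32le(buf + 30);
    if (out->compression != 0) return -4;             /* not BI_RGB: RLE, JPEG, PNG... */
    if (out->bit_count != 24 && out->bit_count != 32) return -5;
    if (out->width <= 0 || out->height == 0) return -6;
    /* Every row is padded up to a multiple of 4 bytes. */
    out->row_stride = (((uint32_t)out->width * out->bit_count + 31u) / 32u) * 4u;
    return 0;
}
```

With the bytes discussed above (54, 64, 56, 0 at offset 2, and 54 at offset 10), `bmp_parse` reports a file size of 3,686,454 bytes and a pixel offset of 54.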

-

There is another function in the PlayCtrl.dll called PlayM4_GetBMP(). There is a VI in my last archive that should already get the BMP data using this function. It is supposed to return a Windows bitmap, and yes, that one is fully decoded. But! You currently retrieve a JPEG from the stream, which most likely isn't the same format as what the camera delivers, so this function already does some camera stream decoding and then JPEG encoding, only to have the COM JPEG decoder decode the pixel data back out of the JPEG image anyhow! While you do not know whether the BMP conversion in PlayCtrl.dll is at least as efficient as the COM JPEG decoder, it is actually likely that the detour through the JPEG format costs more performance than going directly to BMP. And the BMP format only prepends a small header (54 bytes in the usual uncompressed 24- or 32-bit case) in front of the bitmap data. So it is not really more than what you get after you have decoded your JPEG image.

-

Well, how many times per second do you call that VI? In this camera application it is called ONLY about 20 to 50 times per second. And MSDN definitely and clearly states that you should balance every call to CoInitialize(Ex) with exactly one call to CoUninitialize. And that of course has to happen in the same thread! If CoInitialize(Ex) did not allocate something, somehow, somewhere, that would not be necessary, as a return value of TRUE (actually S_FALSE) simply indicates that it did not initialize the COM system for the current thread. But MSDN still says you need to call CoUninitialize even when CoInitialize(Ex) returns S_FALSE. That is definitely not for nothing!
And if you do it in the LabVIEW VI you actually have a problem, as you cannot guarantee that CoUninitialize is called in the same thread as CoInitialize was, unless you make the entire VI subroutine priority. This guarantees that LabVIEW will NEVER switch threads for the duration of the entire VI call. If CoInitializeEx always returns TRUE (S_FALSE), even the first time you call it, it simply means that LabVIEW apparently already initialized the COM system for that thread. CoUninitialize should not do much, except maybe deallocate the thread local storage that CoInitializeEx somehow creates to maintain some management information, unless it is the call matching the first CoInitialize(Ex). In that case it does get rather expensive, as it deinitializes the COM system for the current thread.
So to be fully proper without risking allocating new resources with every call, I think the safest approach would be to add a CoUninitialize call at the end of the VI if CoInitializeEx returned 1 (S_FALSE), to make sure nothing accumulates, but not to call it if CoInitializeEx returned 0 (S_OK), as that was the first initialization of COM for the current thread. And make that VI subroutine priority. It's a PITA for debugging, but once it works you simply should guarantee that COM functions execute in the same thread in which an object was created.
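The rule above can be written down as a small C sketch. `must_balance_with_couninitialize`, `decode_with_com` and the `HR_S_*` names are hypothetical; the values S_OK = 0 and S_FALSE = 1 are the documented CoInitializeEx() success codes. The Windows part is guarded by `#ifdef _WIN32`, and the COINIT_MULTITHREADED flag in it is an assumption, since the original code's apartment flag isn't shown. In the VI itself this is simply a Case structure on the returned value.

```c
#include <stdbool.h>

/* CoInitializeEx() success codes as documented by Microsoft. */
#define HR_S_OK    0L  /* this call initialized COM on the current thread */
#define HR_S_FALSE 1L  /* COM was already initialized on the current thread */

/* Rule from the post above:
 *  - S_FALSE: still counts as a successful call, so balance it before leaving.
 *  - S_OK:    first initialization on this thread; deliberately kept so COM
 *             is not torn down and rebuilt on every call.
 *  - failure (negative HRESULT such as RPC_E_CHANGED_MODE): nothing to balance. */
static bool must_balance_with_couninitialize(long hr)
{
    return hr == HR_S_FALSE;
}

#ifdef _WIN32
#include <objbase.h>

/* Inside one C function both calls naturally run on the same thread;
 * in a LabVIEW VI that is what subroutine priority is needed for. */
static void decode_with_com(void)
{
    HRESULT hr = CoInitializeEx(NULL, COINIT_MULTITHREADED);
    /* ... COM calls, e.g. the JPEG decoding ... */
    if (must_balance_with_couninitialize(hr))
        CoUninitialize();
}
#endif
```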
Most COM class implementations are not guaranteed to work reliably in fully multithreaded operation.
And please do NOT try to pass the pointer that the callback function receives directly through the LabVIEW event. That pointer ceases to be valid the moment the callback function returns control to the caller, but the event goes through an event queue and then the event structure, and by the time your event structure sees that event, the buffer behind that pointer has either been reused by the SDK for something else or been completely deallocated. You MUST make a copy of the data the pointer points to if you want to read it outside of the callback function.
I checked the callback function from earlier again and there is of course a memory leak in there! NumericArrayResize() is called twice. This in itself would just be useless but not fatal, if there were not also a new eventData structure declared inside the if block. Without that, the second call would simply be useless; it would not really do much, as the size is the same both times and the DSSetHandleSize() used internally by that function is basically a NO-OP if the new size is the same as what the handle already has.

    extern "C" __declspec(dllexport) void __stdcall DataCallBack(LONG lRealHandle, DWORD dwDataType, BYTE *pBuffer, DWORD dwBufSize, DWORD dwUser)
    {
        if (cbState == LVBooleanTrue)
        {
            LVEventData eventData = { 0 };
            MgErr err = NumericArrayResize(uB, 1, (UHandle*)&(eventData.handle), dwBufSize);
            if (!err) // send callback data if there is no error and the cbstatus is true.
            {
                // LVEventData eventData = { 0 };
                // MgErr err = NumericArrayResize(uB, 1, (UHandle*)&(eventData.handle), dwBufSize); // Not useful
                LVUserEventRef userEvent = (LVUserEventRef)dwUser;
                MoveBlock(pBuffer, (*(eventData.handle))->elm, dwBufSize);
                (*(eventData.handle))->size = (int32_t)dwBufSize;
                eventData.realHandle = lRealHandle;
                eventData.dataType = dwDataType;
                PostLVUserEvent(userEvent, &eventData);
                DSDisposeHandle(eventData.handle);
            }
        }
    }

But with the eventData structure declared again, it in fact creates two handles on each call but only deallocates one of them. Get rid of the first two lines inside the if (!err) block (shown commented out above)!

-

There of course always is. But I have no idea where the memory leak would be. One thing that looks suspicious, and is in fact possibly unnecessary, is the call to CoInitializeEx(). LabVIEW has to do that early on during startup already in order to ever be able to access ActiveX functionality. Of course I'm not sure if LabVIEW does this on every possible thread that it initializes; most likely not. So you run into a potential problem here. The one thread it will certainly call CoInitialize(Ex) on is the UI thread. So if you execute all the COM functions in that decoder VI in the UI thread, you can forget about calling this function. However, that of course has implications for your performance, since the entire decoding is then done in the UI thread. If you want to do it in any arbitrary thread for performance reasons, you may need to call CoInitializeEx anyway just to be sure, BUT!!!!!! Go read the documentation for that function! Your function calls CoInitializeEx() on every invocation but never CoUninitialize(). That certainly has the potential of allocating new resources on every single invocation that are never released again. You will need to add a CoUninitialize() at the end of that function, and not just in the SUCCESS case (return value 0) but also in the Done Nothing case (return value 1) of CoInitializeEx(). Of course, returning a BMP instead likely has the advantage of not only doing away with that entire CoInitialize() and CoUninitialize() business, but also avoiding the potential need for extra resources to decode the MPEG (or with another camera maybe H264) frame, encode it into a JPEG image, and then decode it back into a bitmap. Instead you immediately get a decoded flat bitmap that you only have to index at the right location, by interpreting some of the values in the BITMAPINFOHEADER in front of the pixel data.
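Indexing that flat bitmap could look like the following C sketch, assuming an uncompressed (BI_RGB) 24- or 32-bit image whose width, height and bit count were already read from the BITMAPINFOHEADER; `bmp_pixel_rgb` is a hypothetical name and `pixels` is assumed to point bfOffBits bytes into the stream. It returns the pixel as a LabVIEW-style 0x00RRGGBB value and handles the fact that a positive biHeight means the rows are stored bottom-up.

```c
#include <stddef.h>
#include <stdint.h>

/* Reads pixel (x, y), with y = 0 the top row of the image, and returns it as
 * 0x00RRGGBB. 'len' is the number of bytes available from 'pixels' on.
 * Returns 0 on success, -1 on bad arguments or a too short buffer. */
static int bmp_pixel_rgb(const uint8_t *pixels, size_t len,
                         int32_t width, int32_t height, uint16_t bit_count,
                         int32_t x, int32_t y, uint32_t *rgb)
{
    if (bit_count != 24 && bit_count != 32) return -1;
    uint32_t rows = (uint32_t)(height < 0 ? -(int64_t)height : (int64_t)height);
    if (width <= 0 || x < 0 || y < 0 || x >= width || (uint32_t)y >= rows) return -1;

    /* Rows are padded to a multiple of 4 bytes. */
    size_t stride = (((size_t)width * bit_count + 31u) / 32u) * 4u;
    /* Positive biHeight: bottom-up storage, so the top row is stored last. */
    size_t row = height > 0 ? rows - 1u - (uint32_t)y : (uint32_t)y;
    size_t bytes_pp = bit_count / 8u;
    size_t off = row * stride + (size_t)x * bytes_pp;
    if (off + bytes_pp > len) return -1;

    /* Stored byte order is B, G, R (plus an unused byte at 32 bpp), so taking
     * only three bytes is the same as masking the 32-bit value with 0xFFFFFF. */
    const uint8_t *p = pixels + off;
    *rgb = ((uint32_t)p[2] << 16) | ((uint32_t)p[1] << 8) | (uint32_t)p[0];
    return 0;
}
```

Calling this once per pixel is of course slow; it is only meant to show where each value comes from. A real conversion would walk row by row using the same stride.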

-

What I meant to say is that the callback alone should not leak memory. But the frequent allocation and deallocation will certainly fragment memory over time. That is not the same, although it can look similar. Due to fragmentation, more and more blocks of memory get allocated, and while LabVIEW (or whatever manages the memory behind the LabVIEW memory manager functions) does know about these allocations and hasn't forgotten about them (forgetting them is what a memory leak means), it can't easily reuse those blocks for new memory requests, leaving that memory reserved but unused. That way the memory footprint of an application can slowly increase. It's not a memory leak, since that memory is still accounted for internally, but it causes more and more memory to be allocated. If you however allocate a memory pointer (or handle) and then subsequently forget about it, you have created a true memory leak. In the case of a handle, you can in some instances hand it to LabVIEW for further use, which makes LabVIEW responsible for releasing it, but these cases are fairly limited, usually only to parameters that are passed in from the diagram through Call Library Node parameters and then returned back.

-

The callback itself should not leak memory, but it does two handle allocations and deallocations each time around. The first handle is allocated and deallocated in the callback function itself. The second handle is allocated in PostLVUserEvent() when it copies the incoming data, and released in the callback event frame. I never understood why there is not at least an option to tell PostLVUserEvent() to take ownership of the data. But that can't be changed by now. The optimization would be to store the handle somewhere between callback invocations and only resize it when new data needs a bigger buffer. However, do not attempt to do that yourself! This is fraught with trouble! You can't just store it in a static global variable, since the callback can potentially be called from more than one thread in parallel once you operate two or more cameras at the same time. You would need to store that handle in TLS (Thread Local Storage).
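Purely to illustrate why a static global is not enough (the post above advises against putting this into the real callback), here is a portable C sketch of the grow-only idea. It uses `malloc`/`realloc` and C11 `_Thread_local` instead of LabVIEW handles; a real callback would resize a handle with NumericArrayResize() instead. `scratch_buffer` and `scratch_capacity` are hypothetical names.

```c
#include <stddef.h>
#include <stdlib.h>

/* One reusable scratch buffer per thread, only reallocated when a larger
 * size is requested. _Thread_local gives every thread its own pair of
 * variables, so callbacks for two cameras running in parallel threads never
 * share or resize each other's buffer, which a plain static variable would.
 * Caveat: nothing frees the buffer when a thread exits; that would need a
 * pthread_key_create() destructor, or DLL_THREAD_DETACH handling in DllMain
 * on Windows. */
static _Thread_local unsigned char *tls_buf = NULL;
static _Thread_local size_t tls_cap = 0;

static unsigned char *scratch_buffer(size_t needed)
{
    if (needed > tls_cap) {
        unsigned char *p = realloc(tls_buf, needed);
        if (p == NULL)
            return NULL;  /* old buffer is still valid and still owned */
        tls_buf = p;
        tls_cap = needed;
    }
    return tls_buf;
}

static size_t scratch_capacity(void)
{
    return tls_cap;
}
```

The missing cleanup on thread exit is exactly the kind of trouble the post warns about: LabVIEW owns the threads the callback may run in, so the DLL has no natural place to release these buffers.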

-

As I have said many times before, I can't test the software without the hardware, so this is all a dry-swimming exercise for me.
Hikvision-labview SDK -Test-v1.1.1.zip
But try this version; it should fix this particular problem. I excluded the lib folder, as it is huge and has no changes with respect to the earlier version. So you need to extract this into your previous sources folder (but make sure to delete all LabVIEW VIs in there first to avoid potential conflicts).

-

The Call Library Node itself NEVER switches threads on the fly. When LabVIEW executes VIs it can arbitrarily switch between the multiple threads it has allocated for a particular execution system. But when it executes a Call Library Node, it selects a thread (usually the one it was already executing the VI in, unless that thread is already tied up with something else) and passes control to the shared library function. LabVIEW has no way to take control back until the function returns to the caller. The setting is really called "Run in any thread", not "reentrant", but a function needs to be reentrant or multithreading safe for this to be safe. If a function is not reentrant (for instance because it uses global resources such as a global variable, accesses some hardware, or is simply badly programmed), you should not use the "Run in any thread" setting, since there could be situations where the shared library gets called multiple times in parallel, and the multithreading-unsafe functions could then stomp on each other's global resources and cause race conditions or worse. Instead you can choose to run the nodes in the "UI thread". This is the only execution system in LabVIEW that is guaranteed to use only a single thread. So multiple Call Library Nodes set to run in the UI thread can NEVER execute in parallel and therefore can never stomp on each other's global resources at the same time. But they also have to compete with many other things in LabVIEW that are required to execute in a single thread, including all screen updates. This can lead to very sluggish operation, as each Call Library Node set to run in the UI thread has to wait for that thread to be available before it can call the shared library function. Whether a shared library (or particular functions in it) is multithreading safe is something only the original developer can answer.
However, many developers have, just like you, no good idea what the issues could be, and if asked about this they will simply give you an empty stare, wondering what you are talking about, and then either say that it isn't multithreading safe if they are the cautious type, or boldly claim that of course it is if they prefer to look cool and are not ashamed to lie flat out. In the case of PlayCtrl.dll, the documentation states explicitly that since version 6.1.1.17 the DLL is multithreading safe. It mentions a caveat that it may block if you try to call certain library APIs from within a callback. This is because they seem to have made the library multithreading safe by using mutexes. An API that you call from your application acquires the mutex for a resource, preventing other threads from using that resource for the duration of the call. Before returning from that function the mutex is released. As long as your application only calls APIs, it can't really deadlock. The worst that can happen is that some calls have to wait for the mutex to become available before they can start doing their work. But if such an API causes callback functions to be called synchronously, it may invoke the callback while still holding the mutex. If the callback then calls a library API that tries to acquire that same mutex, it can't get it until the original API has released it, which it can't do until the callback returns. => Fatal deadlock.
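The deadlock described above can be reproduced in a toy model: a library that protects its state with one non-recursive POSIX mutex and calls a user callback synchronously while still holding it. All names here are hypothetical and nothing is the PlayCtrl.dll API. To keep the demo from hanging, the second API uses `pthread_mutex_trylock()`, so instead of blocking forever it reports `EBUSY`.

```c
#include <errno.h>
#include <pthread.h>

static pthread_mutex_t lib_lock = PTHREAD_MUTEX_INITIALIZER;
static void (*user_callback)(void) = NULL;

/* A second library API. A real implementation would call
 * pthread_mutex_lock() here and, when invoked from inside the callback
 * below, block forever. trylock only makes the problem observable. */
static int lib_get_status(void)
{
    int rc = pthread_mutex_trylock(&lib_lock);
    if (rc == 0)
        pthread_mutex_unlock(&lib_lock);
    return rc;  /* 0 = could run, EBUSY = would have deadlocked */
}

/* Library API that fires the user callback synchronously while it still
 * holds the lock. */
static void lib_process(void)
{
    pthread_mutex_lock(&lib_lock);
    if (user_callback != NULL)
        user_callback();  /* lock is still held here */
    pthread_mutex_unlock(&lib_lock);
}

/* User callback that innocently calls back into the library. */
static int status_seen_in_callback = -1;
static void my_callback(void)
{
    status_seen_in_callback = lib_get_status();
}
```

After `user_callback = my_callback; lib_process();`, `status_seen_in_callback` is `EBUSY`, while the same `lib_get_status()` call made from the application outside the callback succeeds.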

-

Sometimes I feel like I must be talking some obscure galactic slang or something! Setting Call Library Nodes to run in the UI thread is indeed safe, but I never said this is required here. What about that English sentence is not clear? Have you looked at the last attachment I added to one of my last messages?

-

No, the opposite, if you have DLL version 6.1.1.17 or newer. In that case it SHOULD be safe to set all Call Library Nodes for the PlayCtrl.dll functions to reentrant. No again! The byte stream coming from PlayM4_GetJPEG() is already a JPEG-formatted byte stream. Dump it into a binary file and it is a JPEG image. On Windows make sure to give it the filename extension .jpg or .jpeg, as otherwise Windows Explorer will not recognize it as a JPEG image, but that is just a Windows thing. Microsoft in its infinite wisdom decided loooooooooong ago that the file extension is the only reliable way to determine what type a file is. Of course that is a very unreliable assumption, but hey, they have gotten away with it for about 40 years. So what!
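A quick way to convince yourself that the returned bytes are already a JPEG file is to check the start of the buffer and write it out unchanged. This is a minimal C sketch with hypothetical names (`looks_like_jpeg`, `dump_jpeg`); it relies only on the fact that every JPEG stream starts with the SOI marker FF D8 followed by another marker beginning with FF.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* A JPEG stream starts with the SOI marker FF D8, immediately followed by
 * the next marker, which again starts with FF. */
static int looks_like_jpeg(const uint8_t *buf, size_t len)
{
    return buf != NULL && len >= 3 && buf[0] == 0xFF && buf[1] == 0xD8 && buf[2] == 0xFF;
}

/* Writes the bytes unchanged. The ".jpg" extension in 'path' only matters
 * to Windows Explorer; the content already is a complete JPEG file. */
static int dump_jpeg(const char *path, const uint8_t *buf, size_t len)
{
    FILE *f = fopen(path, "wb");
    if (f == NULL)
        return -1;
    size_t written = fwrite(buf, 1, len, f);
    int closed_ok = (fclose(f) == 0);
    return (closed_ok && written == len) ? 0 : -1;
}
```

For example, `dump_jpeg("frame.jpg", buf, jpeg_size)`, where `jpeg_size` is assumed to be the size the function reported back, produces a file any image viewer can open. A BMP buffer, starting with "BM", fails the check.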

-

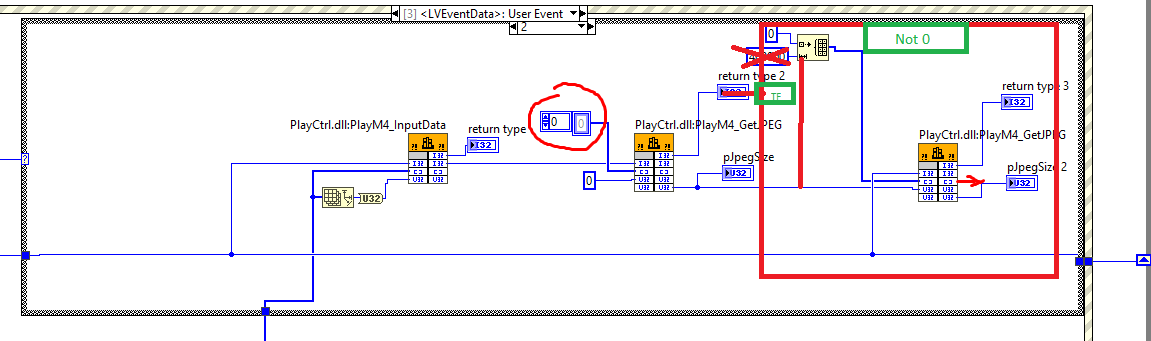

The documentation for the PlayM4 shared library states explicitly that since version 6.1.1.17 the DLL should be multithreading safe. Of course that statement can be as true or false as anything, but it is probably safe to assume for now that it SHOULD work as long as we don't do massive multithreading. And since we actually run pretty much everything in our test VI's event structure, it executes very sequentially, so the multithreading aspect shouldn't even come into play for now. For a more generic library, however, a lot more testing would be needed. And I would actually pull the whole thing apart a bit. I have cleaned up a lot of stuff and put the PlayM4 functions in their own library. My idea was to push the image retrieval into the timeout case, as in the included example. Adding that to the data callback may be a little too taxing. Also, once we know that the callback data seems to be working, I would actually remove the data array from the front panel. That costs quite a bit of performance, as LabVIEW needs to create a copy of that array every time to display it in the control. Depending on how much the callback event taxes the system, it may however never arrive in the timeout case, so possibly there is never any update of the BMP data. If that happens you have anyhow already reached the limit of what your system can do, and there is simply no way to still make this work this way. The main idea is that if the program does get into the timeout event case, I try to retrieve the currently played/decoded frame number, and if that is different from the previous one, the stream should be able to provide a BMP image. Retrieve it, and once we get there we can look further. I chose BMP retrieval because that should already be in 32-bit RGBx format, which should then be fairly easy (but anything but performant) to pull into a LabVIEW pixmap.
184663212_Hikvision-labviewSDK-Test-v1.1.0.zip

-

No, it may not be. Last-error values are notoriously tricky to handle in multithreaded code, and LabVIEW is highly multithreaded. Even if they are stored in thread local storage, as the Microsoft APIs do, they are difficult to handle in LabVIEW, as your call to GetLastError() is potentially executed in a different thread than the previous call that caused the error, and voilà, you are reading the last error from some completely different function call. And if you force all calls into the UI thread, you are in fact calling all the functions in the same thread, but between the call to the error-causing function and the retrieval of the last error there could be a zillion other calls that might have overwritten that last-error value already. The same problem exists, independent of how the functions are called, when the library only maintains a global last-error value. Whether this library has a thread-safe last-error value or not is not documented, as far as I remember. And are you sure that the PlayM4 APIs make use of NET_DVR_GetLastError()? If so, it almost certainly uses thread local storage, and you need to make sure that all the functions execute in the same thread. But with callbacks going on in the background, all kinds of things could happen between calling PlayM4_GetJPEG() and NET_DVR_GetLastError(). No, it has its own PlayM4_GetLastError(long nPort) function! And since it takes the port (handle) as a parameter, it is most likely thread-context safe, as the error is apparently stored in the port somehow.

-

I'm afraid that doesn't work. It was an idea based on the fact that this function returns a size in its last parameter. If it were a Microsoft API it would work like that, and there was a reasonable chance that they had followed the model of the Microsoft APIs, but alas, that seems not to be the case. It would have been too simple. 😃 I think you have to use PlayM4_GetPictureSize() to calculate a reasonably large buffer, allocate that, and pass it directly to the PlayM4_GetJPEG() function to MAYBE make it work.

-

Partly valid. This driver independence should have been there before SystemLink was really a thing. It might have made SystemLink a bit harder to make work comfortably, but still. As to SystemLink itself, when it came out it had this "Silverlight" feeling to it for me. It hasn't gone quite as badly so far, but still. And on top of that it is very expensive. I often combine desktop and multiple real-time target projects. It of course works best with separate compiled code, but I worked that way before separate compiled code was a (reliably working) fact.

-

It absolutely is needed. How else should the decoder get the compressed data to actually produce image data???? You have no idea what you are doing here and will never get anywhere this way. You do not understand what data comes from where, where it should go and how it all relates to each other.
1) The NET_DVR functions are the functions that communicate with the camera.
2) You can let them draw directly into a window handle. Fairly easy, and it works, as already proven.
3) You can instead (or in addition) install a callback function that is called whenever there is a data package from the camera; that data is passed to the callback.
3a) This data is compressed in the camera in some way; in your case it seems to be MPEG4 data, but it could also be another compression.
3b) When you have determined that it is the HKMI (apparently their HikVision Media data Identifier) data stream in the SYSHEAD message, you need to pass this data to their decoder; these are your PlayM4 functions. If you do not pass those data packages to the MPEG4 decoder stream, it has NOTHING to decode and therefore can NEVER return any image data!!!!!
3c) Passing the compressed data packages happens with the PlayM4_InputData() method. This function hands the received data to the decoder, which buffers it until it has a complete frame, then decodes that frame and probably, maybe, possibly, hopefully or something stores it in a different buffer in its port (handle) as a decoded frame buffer.
3d) Only once a complete frame has been received and decoded can you hope to get something back from PlayM4_GetJPEG() or one of the other PlayM4_Get****() functions.
If you did not keep posting only images, I might be tempted to give you a fixed VI every now and then, but I'm not inclined to debug images and fix them. Something like this MIGHT work. But there is no guarantee, and I cannot find any documentation for those functions, not even in bad English.
The idea is that the function is called with an empty array buffer and an nBufSize of 0; on return it MIGHT return a TRUE status if there is a full frame available, or it might NOT. If it returns TRUE, the last parameter is hopefully valid and can be used to determine what size of buffer needs to be allocated, and then the function is called again with that buffer. If it does not return TRUE, you should not even try to interpret the returned last parameter. Another possibility MIGHT be to use the PlayM4_GetPictureSize() function. It returns a width and a height value. Multiply those and multiply the result by 4, which hopefully should be enough for an entire picture buffer. The code in the Client Demo application does a slightly more elaborate calculation for this, namely: size = 3 * (4 * lWidth * lHeight + 100). It then calls PlayM4_GetJPEG() with a buffer allocated to that size, and the size value. And if PlayM4_GetJPEG() does not return TRUE, it did not return an image, no matter what. Maybe because there is not yet a full data frame to decode, maybe because one of the maybes in the text above didn't hold true, or some other bad luck or whatever. However, even if this eventually works you have another problem. You have data in JPEG format, yet another compressed format that you still can't do much with. Sure, you can decompress it with one of the earlier mentioned JPEG stream to LabVIEW pixmap functions, but it is now getting pretty complicated, multi-decoding and multi-layer, and therefore slooooooooooooooooow. There seems to be another method, which uses yet another callback!! Yippee!! Looking at the Client Example JPEGPicDownload.cpp file, you may actually have to use PlayM4_SetDecCallBack() to install a new callback function that is called when a full frame has been decoded. Fuuuuuuuuuun! This full frame seems to be fully decoded, so it COULD be converted into an IMAQ Vision image, for instance. How? How many more weeks do you have available for this?
PS: According to the SDK documentation, the StandardCallback I tried to use in my original code only works if the camera supports RTP, so yours may not support that.
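The Client Demo buffer formula quoted above, as a small C helper. This is a sketch assuming the width and height come from PlayM4_GetPictureSize() and that nBufSize is a 32-bit DWORD; `jpeg_buffer_size` is a hypothetical name. It computes in 64 bits so that large frames cannot overflow, and returns 0 for invalid dimensions or a result that would not fit into nBufSize.

```c
#include <stdint.h>

/* size = 3 * (4 * width * height + 100), as in the Client Demo. */
static uint32_t jpeg_buffer_size(int32_t width, int32_t height)
{
    if (width <= 0 || height <= 0)
        return 0;
    uint64_t size = 3u * (4u * (uint64_t)width * (uint64_t)height + 100u);
    return size > UINT32_MAX ? 0 : (uint32_t)size;
}
```

For a 1280 x 720 stream this gives 11,059,500 bytes. The byte array passed to PlayM4_GetJPEG() must really be allocated to that size (Initialize Array on the diagram, or a Minimum Size in the Call Library Node), and the same value passed as nBufSize.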

-

Interesting Announcements from NI Connect?

Rolf Kalbermatter replied to Reds's topic in LabVIEW General

I think the separate compiled code is only a by-product. It could have been done even without the VIs having been set to use separate compiled code. In the worst case, the VIs would have been compiled the first time you open a project that uses them. So what? Inconvenient? I don't think so, at least not nearly as inconvenient as always having to chase the right drivers.
-

Interesting Announcements from NI Connect?

Rolf Kalbermatter replied to Reds's topic in LabVIEW General

Definitely agree that it should have been done like that many moons ago. But in a way it was maybe easier to do it the old way, as only limited testing was needed, and maybe there was also a bit of intention behind it: if you wanted to use new hardware you had to install the new driver, and that also made a new IDE version necessary if the previous one was too old. So a bit of a hidden upgrade nudge. Now, with the new NI structure where the different software departments are very much decoupled and independent of each other, that proved to be an unmanageable modus operandi. It's not feasible anymore to have to pester the DAQmx (and cRIO and System Control and, and, and) folks to release a new version of their software simply because there is a new LabVIEW version.
-

Unless your data stream consists of very large packets in the beginning, there is absolutely no guarantee that your PlayM4 stream already has enough data the first time around to produce a valid JPEG image. It is very well possible that you must feed it a dozen or more compressed data packages before it is able to create even a single full-frame image. And until then the GetJPEG function will likely return FALSE, as it has no data to return yet. Once it has enough data stored internally it may return a valid image, but not in the buffer size that you passed to the InputData method. That image will typically require quite a few more bytes. What I meant to do is to call GetJPEG() first with a NULL pointer for the data and 0 for nBufSize, and then use the returned value of pJpegSize to allocate a big enough buffer to call the function again. This may or may not work (again!), depending on the PlayM4 API functionality. Currently you are anyhow creating a crasho-mobile (again!!), since you pass in an empty byte array and have not configured that parameter to have a Minimum Size of nBufSize. So you tell the function: look, I have a buffer here and it contains at least nBufSize bytes, but you actually pass in a buffer that contains 0 bytes. Kaboom! Crash! as soon as the function has something to write into that buffer and tries to do so, since you told it that there is a buffer of a certain size it can write into. I have no idea what you are trying to say with this. If you mean to create an array constant with that size, you can, but it is useless. Either you use Initialize Array to create an array of the necessary size, or you configure the Minimum Size of your array parameter in the Call Library Node to be that of one of the other parameters (why not use the nBufSize parameter here, as it very accidentally tells the function what size the byte array parameter has been allocated to). I mean, it is just an idea, but it would seem logical to me. 😃

-

You definitely will need PlayM4_InputData(). Somehow you must provide the decoder with the continuous stream of data packages. Then it may or may not choke on the NULL HWND, and it may or may not store the decoded data somewhere internally in the port, and if it doesn't choke on the NULL HWND AND does store the decoded data somewhere in the port, you can call PlayM4_GetJPEG() to retrieve it. But of course you need to provide a large enough buffer to it, so MAYBE you can first call it with a NULL pJpeg and nBufSize = 0 and use the returned pJpegSize to call it again with a properly allocated buffer. Lots of mays and may nots, and the only one who can find out about that is you!
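The point that the decoder only has something to hand back after enough packages have been fed can be shown with a toy model in C. Everything here (`ToyPort`, `toy_input_data()`, `toy_get_picture()`, the frame and picture sizes) is hypothetical and only mimics the behavior described in these posts; it says nothing about how PlayM4 works internally.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Toy stand-in for a decoder port, NOT the PlayM4 API. */
typedef struct {
    size_t buffered;     /* compressed bytes received so far */
    size_t frame_bytes;  /* assumed compressed size of one frame */
    size_t image_bytes;  /* size of the picture handed back */
    bool   frame_ready;  /* a full frame has been "decoded" */
} ToyPort;

/* Counterpart of feeding one data package (like PlayM4_InputData()). */
static void toy_input_data(ToyPort *port, size_t packet_len)
{
    port->buffered += packet_len;
    if (port->buffered >= port->frame_bytes) {
        port->buffered -= port->frame_bytes;
        port->frame_ready = true;
    }
}

/* Counterpart of a GetJPEG-style call: false until a full frame exists, and
 * false if the caller's buffer is smaller than the picture. It never writes
 * more than buf_size bytes. */
static bool toy_get_picture(ToyPort *port, unsigned char *buf, size_t buf_size,
                            size_t *picture_size)
{
    if (!port->frame_ready || buf == NULL || buf_size < port->image_bytes)
        return false;
    memset(buf, 0xAB, port->image_bytes);  /* placeholder picture data */
    *picture_size = port->image_bytes;
    port->frame_ready = false;
    return true;
}
```

With a frame size of 5000 bytes and 1000-byte packets, the first four "get" attempts fail no matter how big the buffer is; only after the fifth package does a call with a large enough buffer succeed, and the picture size has nothing to do with the size of the packets fed in.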

-

Leaving NI (for real this time)... for SpaceX

Rolf Kalbermatter replied to Aristos Queue's topic in LAVA Lounge

Not sure why you respond in this thread with this. But yes, it has always been like this. Except that the locking goes away if you remove the reference to the class or library from all but one of the targets.
-

What's the calling convention you set for these functions? And no, you can calculate that multiplication by hand, with your pocket calculator, an abacus or explicitly in LabVIEW, and wire the result to the Call Library Node. It does NOT matter. But you will want to store that "port" value somewhere (maybe in a shift register; it's just a suggestion) to use when sending the actual data packages to the decoder.

-

NO! Configure that parameter simply as Array, Element Type U8, Array Format Array Data Pointer! But seriously, you should first learn more C before trying to go further with this! And there is no need to convert the byte array into a string to determine its length to pass to the DLL function. There is also an Array Size function, you know?