-

Posts

3,940 -

Days Won

273

Everything posted by Rolf Kalbermatter

-

That callback will almost certainly never get triggered! The SDK is not aware that it is drawing into a .Net PictureBox. It only sees the window handle that the PictureBox uses for its drawing canvas. And that works on a level way below .Net, in the Windows window manager inside the kernel. If you tried to do anything with that PictureBox, such as drawing lines or anything else into it, you would get very nasty flickering, as the SDK function trying to bitblit into the window's device context (HDC) would fight with the PictureBox functions trying to do GDI drawing into the same HDC. We are simply abusing the PictureBox here as a container to provide a window handle. That way we can let Windows window clipping handle all the issues of making sure that the SDK can't draw beyond the area provided by the PictureBox control. But for the rest we are not really using any functionality of the PictureBox .Net control. Accordingly, when we tried to retrieve the image, that failed, since the PictureBox control is not aware of what was drawn into its window. And the same applies to the LabVIEW Get Image control function.

-

There definitely is data in the "Image Data" cluster. Of course you again forgot to show the part of the screen where it would display the "new picture" to prove your claim that nothing is shown. So we cannot tell what is captured, but something is captured for sure. You may also want to remove the Draw Unflattened function. It no longer adds anything.

-

Leaving NI (for real this time)... for SpaceX

Rolf Kalbermatter replied to Aristos Queue's topic in LAVA Lounge

Fun! Good luck in your new endeavor. But the LabVIEW development team loses a very valuable and important member for sure.

-

Of course you do as you use the VI refnum. Right click on your PictureBox control in the LabVIEW front panel and select Create->Reference. Connect that reference to the Method node instead of the VI reference. And of course you need to use the VI with the PictureBox that you had earlier, not the one trying to use the subPanel.

-

That code does not exist yet (in a public location)! So yes, it is impossible. More precisely: That code does not exist yet in a public location! It has for sure been developed numerous times for various in-house projects, although almost 100% certainly not for the HikVision SDK. But in-house means that it is not feasible or even possible to publish it because of copyright, license and other issues. And even if those issues did not exist, nobody is going to search their project archives for the code to post here.

-

Doesn't matter. The SDK draws asynchronously into the PictureBox window. No matter which window handle you use, the moment you cause the screen capture from the window handle to occur is totally and completely asynchronous to the drawing of the SDK. And there is a high chance that the screen capture operations BitBlt() or PrintWindow() will capture parts of two different images drawn (blitted into the window) by the SDK function. So yes, after all these troubles we may have to accept that what we did so far is perfectly fine for displaying the camera image on a LabVIEW panel. You can also cause the SDK to write the image data into a file on disk. But if you want to get the image data into LabVIEW memory in real time to process it as an image, you won't get around the callback, and that is where I stop. This would be a serious development effort even if I did it all here on my own system for a real project. With the forum back and forth and the fact that it is advanced callback programming that even seasoned C programmers usually struggle with, it's simply unfeasible to continue here. And I already see the next request: You did it with IMAQ Vision images but that comes with a license cost. Please, I want to do it with OpenCV to save some dollars! And a multi-day development job turns into a multi-week development job with one single sentence!

-

Yes, this choppiness is of course expected and there is nothing you can do about it with this method. The SDK function draws into that window handle when it pleases and how it pleases. Your Get Image function copies the screen pixels into its own buffer when it pleases and how it pleases. No synchronization whatsoever. And there is no trivial synchronization possible. If you were old enough you would know a similar effect from television, when a TV screen was captured by a TV camera. Since the two never work at exactly the same picture frequency, you get stripes and flickers across the screen, as each camera shot takes part of one image and then part of the next, and the frequency mismatch causes the stripes and flickers to wander up or down on the screen.

-

The problem is that the .Net PictureBox does not contain an image. It is just used to get a window handle to pass to the SDK library, which then draws the image data directly onto the window surface. The PictureBox knows nothing about that and therefore won't return any pixels for the nonexistent image it has. The LabVIEW control method Get Image, on the other hand, should simply take a screenshot of the control's screen area. That is the only way for LabVIEW to get an image, as it does not know what object is displayed in the container, and most classes do not have a GetBitmap method anyway. For native controls it may use a control method that redraws the control into a bitmap, but for .Net and ActiveX controls that certainly won't work, and the only way to get an image is by doing a screen capture of the area.

-

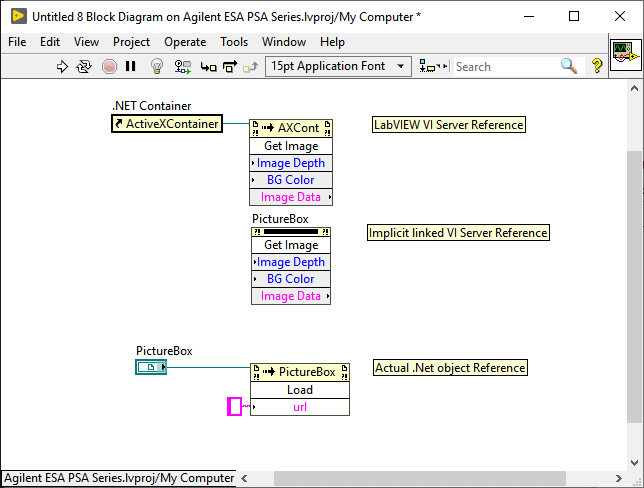

That's definitely the case. He only passes the window handle of the PictureBox into the HikVision camera SDK. And the SDK then bitblits directly into that window surface. It does not know about the PictureBox or anything else, only the window handle. The PictureBox really is just a placeholder to provide a handle. It would likely work better if you actually called the LabVIEW VI Server method "Get Image" on the LabVIEW .Net Container refnum rather than the PictureBox .Net refnum. Yes, it is not exactly intuitive, but it is fairly consistent with other LabVIEW controls. The terminal is the data (for a .Net control, its contained object class refnum), but the VI Server interface has to be retrieved from a Reference node, or the Property/Method node needs to be implicitly linked to the control.

-

Well, in terms of the C signature a handle is simply a pointer-sized integer.

typedef void * HANDLE;

That it is "usually" a 24-bit index into a Windows object table specific to your handle type doesn't mean that every API that "exports" a HANDLE data type also uses this. For one, it only applies to objects that the Windows kernel manages. Second, the functions to create such handles for your own handle type are located in ntoskrnl and as such are considered non-public Windows APIs. While you can call them directly, and some of those APIs are documented at least in the Windows DDK, using them is at your own risk and potentially a compatibility liability, as Microsoft specifically does not guarantee that these APIs will not change in their signature or behaviour, or simply disappear in a new Windows version. Also, that 24-bit index is only part of the truth; the rest of the standard 32-bit value is used to encode the type of handle and allows verifying that the handle actually belongs to the object table the caller claims it does. As such it is almost exactly like a LabVIEW refnum, which implements pretty much the same semantics. Only that LabVIEW refnums are also guaranteed to always be 32-bit entities, as that is how NI publicly defined them, and they did not change that definition in the published API code when adding support for 64-bit LabVIEW in 2009.

Microsoft, on the other hand, declared a HANDLE to be a pointer-sized entity many moons ago. Only very early Windows SDKs defined HANDLE as an alias for DWORD. At some point they consistently changed that to LPVOID, respectively a pointer to an anonymous struct (and in some scenarios even a pointer to a struct containing an int value). So while in most cases a HANDLE is indeed simply a 32-bit integer under the hood, that is always an implementation-specific detail that you rely on for that specific type of handle. It may not hold true for every handle, especially for APIs that do not refer to kernel objects themselves but simply use the HANDLE data type as an opaque parameter to pass their own object identifier between API calls, which in many cases can be a pointer to a struct. And since a HANDLE is defined to be an anonymous identifier whose implementation is implementation specific, that is all very legal. And in programming it is always a bad idea to rely on implementation-specific details behind abstract interfaces.

So if you want to treat your HANDLEs as 32-bit values because it USUALLY works, that is up to you. I prefer to work according to the published definition, and if that wastes 32 bits of memory on a 64-bit platform because the upper 32 bits are never used, so be it. It will guarantee that the software keeps working when the underlying API one day decides to change the private and hence undocumented implementation detail of what the handle really means. Will that cause a compatibility nightmare for all the software which never bothered to follow the actual spec rather than private implementation-specific details? You bet. Is it likely to happen? Maybe not. And for any API using the HANDLE for anything other than Windows kernel objects, it really and absolutely can matter already today. And you may not always know whether an API uses this data type for a real kernel object or for something entirely different in its internal implementation.
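To make the point about pointer-sized handles concrete, here is a minimal C sketch. It does not use the Windows headers; `MY_HANDLE` and the three helper names are hypothetical stand-ins invented for this illustration, and the 64-bit test value is made up. It only shows that storing a handle in a pointer-sized (or wider) integer round-trips, while storing it in a 32-bit integer silently drops whatever the upper 32 bits held.

```c
#include <stdint.h>

/* Hypothetical stand-in for the HANDLE typedef quoted above (not the real Windows type). */
typedef void *MY_HANDLE;

/* Widen a handle to 64 bits; no bits are lost on 32-bit or 64-bit targets. */
uint64_t handle_to_u64(MY_HANDLE h)
{
    return (uint64_t)(uintptr_t)h;
}

/* Convert a value produced by handle_to_u64() back into a handle. */
MY_HANDLE u64_to_handle(uint64_t value)
{
    return (MY_HANDLE)(uintptr_t)value;
}

/* What effectively happens when the handle is kept in a 32-bit integer:
   the upper 32 bits are discarded. */
uint32_t truncate_to_u32(uint64_t value)
{
    return (uint32_t)value;
}
```

A value such as 0x0000000100000ABC comes back as 0x00000ABC after `truncate_to_u32()`, which is the failure mode described above for APIs that really do use the upper half.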

-

Are you sure the handle can not get negative? In fact I'm pretty sure the handle could get 64 bit on LabVIEW 64-bit. It usually shouldn't as Microsoft tried to keep the handle values below the 32 bit boundary by somehow not directly making it a pointer, but I have seen cases where Windows handles did use the upper 32-bit for something and failed if you treated the handle only as 32-bit, effectively clearing out the top most 32 bits. I think the safest here would be to use ToUInt64(). It shouldn't hurt even if Windows never uses more than 32-bit but it may prevent potential problems in the future.
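The sign question can be illustrated in plain C (an analogy only, not the .Net conversion itself). The helper names below are hypothetical. The sketch shows that a 32-bit value with its top bit set widens differently depending on whether it was held as a signed or an unsigned integer, which is why an unsigned 64-bit representation is the less surprising choice.

```c
#include <stdint.h>

/* Widening from a signed 32-bit integer copies the sign bit into the upper 32 bits. */
uint64_t widen_signed(int32_t raw)
{
    return (uint64_t)(int64_t)raw;
}

/* Widening from an unsigned 32-bit integer fills the upper 32 bits with zeros. */
uint64_t widen_unsigned(uint32_t raw)
{
    return (uint64_t)raw;
}
```

For the same bit pattern 0x80000010, `widen_signed()` yields 0xFFFFFFFF80000010 and `widen_unsigned()` yields 0x0000000080000010, so code that compares or passes on the widened value can end up with two different "handles".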

-

Interesting Announcements from NI Connect?

Rolf Kalbermatter replied to Reds's topic in LabVIEW General

Those properties and methods are part of the object manager interface. Despite its name, this is not the same as LabVIEW classes. It was introduced with NI VISA back in LabVIEW 4 or 5 and later improved for other software interfaces. The corresponding files have the extensions .rc and .rch and are located in the resources/objmgr directory in LabVIEW. They define the menu hierarchy in the property and method nodes and the connection between them and the actual shared library (DLL) implementation for that interface. The same object manager infrastructure is in fact also used for ActiveX, .Net and in some way the LabVIEW VI Server interface, but these are not defined by external .rc files but directly in the C++ source code of LabVIEW.

If you want to change what methods and properties are available for a certain class you need to change these files too, but of course the properties and methods defined in those files need to connect to the correct selectors in the corresponding shared library, so adding new entries to the .rc file alone makes little sense. You also need the shared library version that implements them. On the other hand, only changing the shared library doesn't add new properties and methods to the object manager resources. You also need to copy the corresponding .rc and .rch files. But beware, those files can implement different versions of the object manager resource format, and there is a chance that if you copy one meant for a much newer LabVIEW version, an older LabVIEW version will not recognize the file at all because the version is too new for it.

And the actual implementation for this interface is in most cases a separate shared library on top of the more generic driver library. The driver library implements the real business logic and the low-level C interface that can also be used from other programming software. The higher-level library is very much LabVIEW specific, as it needs to directly provide LabVIEW native datatypes such as string handles to plug easily into the object manager interface. For the more esoteric features such as events it must even provide very specific functionality that connects the event to the LabVIEW user event mechanism.

-

Interesting Announcements from NI Connect?

Rolf Kalbermatter replied to Reds's topic in LabVIEW General

I know it is. But I'm not involved in that project. Still find that webpage on the NI site much less than overwhelming! 😀

-

Could this be the Linux kernel refusing to load kernel drivers that are not GNU blessed? This was a pretty controversial topic some time ago. In an effort to force graphics card manufacturers to release open source versions of their drivers, the kernel folks decided that you could only load GNU marked drivers, but in order to mark your driver with the necessary symbols you had to somehow link it to some library that made your driver fall under the GNU GPL license by design. At least one of the graphics chip manufacturers and NI were neither happy nor willing to do that!

-

Interesting Announcements from NI Connect?

Rolf Kalbermatter replied to Reds's topic in LabVIEW General

That is a bit of an overreaching statement! It does not mean that you can call any version of a driver with any version of LabVIEW. For one thing, most driver installers come with LabVIEW VIs, and those have a minimum version in which they are compiled. No dice in trying to install those VIs into a LabVIEW version that is older than the VI format included in the installer. It probably does mean that an installer only comes with one version of the VI libraries (the supported minimum version) and that it will invoke a mass compile after installation. And there is of course the possibility that some of those VIs use features that may get deprecated in a future LabVIEW version and eventually get disabled, so that some driver VIs might not load without a broken error in a far-out version of LabVIEW.

I wondered about TestScale too. The available webpage on the NI site is however underwhelming, to say the least. The text and picture shown would be worthy of a preliminary PowerPoint presentation during an early product design meeting, but not of something that is supposed to get released anytime soon.

-

I know. I traced it to tdcore_xx_x.dll, but that is as far as I'm willing to invest in this. Trying to find out the signature of C++ object dispatch tables is simply too tedious, especially if they are raw dispatch tables and not based on the IUnknown COM architecture.

-

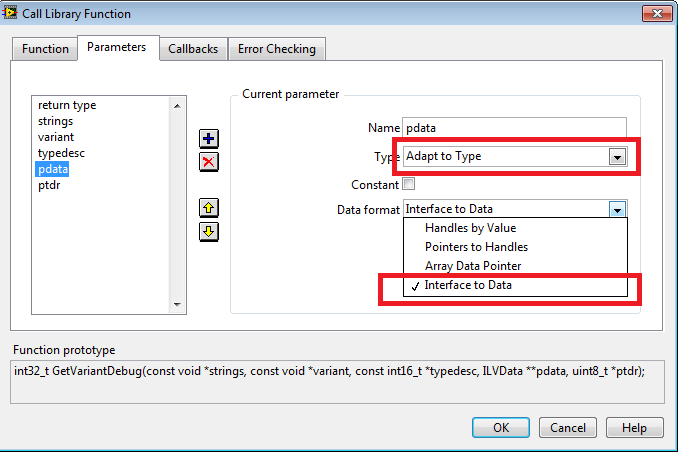

I believe that the correct definition is as follows:

```c
typedef void *TDR;

#include "lv_prolog.h"
typedef struct
{
    void *lpVTable;     // object C++ virtual table
    LVBoolean flag;     // bool
    void *mData;        // the actual non-flat LabVIEW data
    void *mAttr;        // Looks like a C++ object
    int32 refCount;
    int32 transaction;
    TDR mTD;            // Looks like a C++ object too
} LvVariant, *LvVariantPtr;
#include "lv_epilog.h"
```

But accessing this is highly tricky, LabVIEW version and platform dependent, and should therefore be avoided at all costs, even though that layout seems to have held true since at least 7.1 and until at least 2018. Also, the lpVTable is very long and not a formalized COM interface (I was for a moment hoping it might be the ILVDataType from the ILVDataInterface.h header file in cintools, but alas). I'm still stumped about the presence of ILVDataInterface.h and ILVTypeInterface.h in the cintools directory, as they seem to connect to absolutely nothing else in there, so it is a bit hard to see how they would be even remotely useful.

But the interesting elements can be referenced by using these function prototypes (gleaned from an ni_extcode.h file contained in the LabVIEW for CUDA SDK download):

```c
#include "extcode.h"

/* from extcode.h in cintools directory
#ifdef __cplusplus
class LvVariant;
#else
typedef struct LVVARIANT LvVariant;
#endif
typedef LvVariant* LvVariantPtr;
*/

/* from ni_extcode.h from CUDA SDK package */
#ifdef __cplusplus
class TDR;
#else
typedef struct _TDR TDR;
#endif // __cplusplus

TH_REENTRANT EXTERNC const TDR * _FUNCC LvVariantGetType(LvVariantPtr lvVar);
TH_REENTRANT EXTERNC const void *_FUNCC LvVariantGetDataPtr(LvVariantPtr lvVar);
```

You just need to be aware that when you pass a LabVIEW Variant through a Call Library Node with the parameter set to Adapt to Type, LabVIEW actually passes the variant as:

```c
retval FuncName(...., LvVariantPtr *lvVar, ....);
```

The nasty thing is that the returned TDR data pointer indeed seems to be another C++ object with no declared interface anywhere, and no, it does not match the ILVTypeInterface in the above mentioned header file either.

And just after hitting the Save button on this post and having spent many hours researching this, the final eureka moment happened. 💡 Those two header files in cintools are of course related to this selection:

-

Yes, of course it was a little too short in thought. Although what you report sounds wrong. It should give you something like 23 degrees. It scales the decades correctly but also tries to scale the interval in between each decade, which it should not. As a result, values like 1.0, 10.0 and 100.0 come out correct, but values in between get over-scaled, not under-scaled.

-

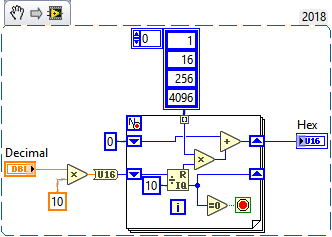

Seems about right. A few comments:

- You don't need the numeric conversion nodes. They do nothing, as the incoming type is already what you convert it to.
- That function returns a Boolean error indicator. If the value is TRUE it was successful. Otherwise you should retrieve the last error code to get an indication of what went wrong. Just use the error handler VI in the same way as I did in "Init Driver.vi" of my little "LabVIEW SDK".

The SDK states:

Actual PTZ value is one-tenth of the got value, for example: if the got P value is 0x1750, the actual P value is 175 degree; if the got T value is 0x0789, the actual T value is 78.9 degree; if the got Z value is 0x1100, the actual Z value is 110 degree.

So use a floating point value as VI input and multiply it by 10. And then the fun starts, as they seem to want hex values whose digits correspond to the actual degree value. I'm sure there is a mathematical formula for that, but my knee-jerk reaction to this would be to convert the result into a string with the %d format specifier and then back into a 16-bit integer number with the %x format specifier. Dirty, non-performant, but it does the job.

Note: After fetching a coffee the enlightening idea came to me. The mathematical formula for this would be

hexval = exp16(log10(inputvalue) + 1)
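The three SDK examples quoted above (0x1750 for 175 degrees, 0x0789 for 78.9, 0x1100 for 110) read as decimal digits packed one per hex nibble, so the %d-then-%x string trick amounts to a packed-decimal conversion of the value in tenths of a degree. Below is a minimal C sketch of that digit-by-digit conversion. The function names are hypothetical, and the assumption that inputs are non-negative and below 1000 degrees (four digits of tenths) is mine, not the SDK's.

```c
#include <stdint.h>

/* Pack degrees into the 16-bit word format shown in the SDK text:
   175.0 degrees -> 1750 tenths -> 0x1750.
   Assumes 0 <= degrees < 1000 (an assumption made for this sketch). */
uint16_t ptz_degrees_to_hex(double degrees)
{
    unsigned tenths = (unsigned)(degrees * 10.0 + 0.5); /* round to nearest tenth */
    uint16_t packed = 0;
    int shift;

    /* Place one decimal digit into each 4-bit nibble, lowest digit first. */
    for (shift = 0; shift < 16; shift += 4) {
        packed |= (uint16_t)((tenths % 10u) << shift);
        tenths /= 10u;
    }
    return packed;
}

/* Reverse direction: read each nibble as a decimal digit and divide by 10. */
double ptz_hex_to_degrees(uint16_t packed)
{
    unsigned tenths = 0;
    int shift;

    for (shift = 12; shift >= 0; shift -= 4)
        tenths = tenths * 10u + ((unsigned)(packed >> shift) & 0xFu);
    return tenths / 10.0;
}
```

Working per digit avoids the problem with a logarithm-based closed form, which only lands on the right value at exact powers of ten.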

-

It seems you have placed transparent nodes in your VI and wired them all up with transparent wires. 😀

That function simply expects specific parameters in lpInBuffer, and what data should be in there depends on the value of dwCommand. So you have to do some manual work here. First you need to figure out what command you want to use. Look up its numeric value in the header file. Then look up in the header file the definition of the data structure that is in the same row behind the command name in your SDK documentation.

As an example, if you want to change PTZPOS you need to find the NET_DVR_SET_PTZPOS value, which is 292, also documented in the last column of the Remarks table. This number must be passed as the dwCommand value. According to the SDK this command requires the NET_DVR_PTZPOS data structure, which is defined like this:

```c
struct{
    WORD wAction;
    WORD wPanPos;
    WORD wTiltPos;
    WORD wZoomPos;
}NET_DVR_PTZPOS, *LPNET_DVR_PTZPOS;
```

You need to build this data structure as a LabVIEW cluster, but EXACTLY. In this specific case you need to create a cluster that contains 4 numeric values of type uInt16. You can pass this directly to the function's lpInBuffer parameter by configuring it as Pass Native Datatype. Then you need to calculate the byte size of that data structure and pass that in as dwInBufferSize. This is 4 times 2 bytes, so 8.
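For comparison with the LabVIEW cluster, here is a small C sketch of the same buffer. It deliberately does not include the HikVision header: the `WORD` typedef and the struct are local re-declarations based on the layout quoted above, `fill_ptzpos` is a hypothetical helper, and the value to put into wAction has to come from the SDK documentation (it is just a parameter here). The sketch only demonstrates the layout and the size that would go into dwInBufferSize.

```c
#include <stdint.h>
#include <string.h>

/* Local stand-ins for this sketch; the real definitions live in the SDK header. */
typedef uint16_t WORD;
#define NET_DVR_SET_PTZPOS 292 /* command value quoted in the post above */

typedef struct {
    WORD wAction;
    WORD wPanPos;
    WORD wTiltPos;
    WORD wZoomPos;
} NET_DVR_PTZPOS;

/* Fill the structure and return the byte count to pass as dwInBufferSize.
   Hypothetical helper, not part of the SDK. */
uint32_t fill_ptzpos(NET_DVR_PTZPOS *pos, WORD action, WORD pan, WORD tilt, WORD zoom)
{
    memset(pos, 0, sizeof(*pos));
    pos->wAction  = action;
    pos->wPanPos  = pan;
    pos->wTiltPos = tilt;
    pos->wZoomPos = zoom;
    return (uint32_t)sizeof(*pos); /* four 16-bit fields, no padding: 8 bytes */
}
```

Because all four members are 16-bit and equally aligned, the compiler inserts no padding, which is why a LabVIEW cluster of four uInt16 values matches the buffer byte for byte.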

-

Yes, when you posted that link I actually had a bit of a look around and Shaun did apparently the same, seeing his comment that nothing beyond LabVIEW 2018 could be found there.

-

Yes there is. 😆 My Chrome browser is configured to simply not download exe files. The problem is that I wasn't looking for those specific files but rather trying to find something else. And there is a difference now. A few days ago you could simply browse that entire directory tree and find out what was on there. Now the entire softlib subdirectory and all its contents is not browsable anymore. Someone shut a door somewhere. 😀 They probably couldn't rename the entire tree as then the download page for all the products might not work anymore. But that might be just a question of time too.