Posts: 3,954

Days Won: 275


Everything posted by Rolf Kalbermatter


Including solicitation of interest from potential acquirers

Rolf Kalbermatter replied to gleichman's topic in LAVA Lounge

And of course it shot to over $54 this morning and has now settled around the Emerson bid price 🙂, while Emerson lost 5%. Of course that is speculation and market overreaction by trigger-happy stock market cowboys.

Including solicitation of interest from potential acquirers

Rolf Kalbermatter replied to gleichman's topic in LAVA Lounge

Well, they make it sound as if not wanting to sell to them is a very bad decision, almost criminal. I always thought that in a free market there is no obligation to sell something to an interested party, even if they offer a premium (which I'm not really sure they did). But they make it sound like that doesn't apply here. 😁

Do you have the source code of that wrapper? If so, you can modify it; otherwise you will have to create a wrapper around this wrapper or around the original lower-level API. Or maybe that wrapper implements this callback functionality itself and the lower-level API is simply a function API where the caller has to implement its own task handling. In that case it may be simpler to go directly to the lower-level API and implement the parallel task that does the log monitoring entirely in LabVIEW.
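A minimal sketch of such a "wrapper around the wrapper", assuming the vendor library lets you register a plain C callback that receives a log message string. The shim buffers the messages in a small queue, so a LabVIEW loop can poll them through a Call Library Node instead of having to handle the callback itself. All names here (`shim_on_message`, `shim_poll`, the queue sizes) are hypothetical, and the spinlock is only there because the callback is assumed to fire on a thread owned by the library.

```c
#include <stdatomic.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical shim between a callback-based logging API and LabVIEW.
   The vendor library is assumed to call shim_on_message() from its own
   thread; LabVIEW polls shim_poll() from a loop via a Call Library Node. */
#define SHIM_SLOTS   64
#define SHIM_MSG_LEN 256

static char shim_queue[SHIM_SLOTS][SHIM_MSG_LEN];
static size_t shim_head = 0, shim_tail = 0, shim_count = 0;
static atomic_flag shim_busy = ATOMIC_FLAG_INIT;

static void shim_lock(void)   { while (atomic_flag_test_and_set(&shim_busy)) { /* spin */ } }
static void shim_unlock(void) { atomic_flag_clear(&shim_busy); }

/* Callback target: copies the message into the queue.
   When the queue is full, the oldest message is dropped. */
void shim_on_message(const char *msg)
{
    shim_lock();
    if (shim_count == SHIM_SLOTS) {
        shim_head = (shim_head + 1) % SHIM_SLOTS;
        shim_count--;
    }
    strncpy(shim_queue[shim_tail], msg, SHIM_MSG_LEN - 1);
    shim_queue[shim_tail][SHIM_MSG_LEN - 1] = '\0';
    shim_tail = (shim_tail + 1) % SHIM_SLOTS;
    shim_count++;
    shim_unlock();
}

/* Called from LabVIEW with a pre-allocated buffer.
   Returns 1 if a message was copied, 0 if nothing was pending. */
int shim_poll(char *buf, size_t buflen)
{
    int got = 0;
    shim_lock();
    if (shim_count > 0 && buflen > 0) {
        strncpy(buf, shim_queue[shim_head], buflen - 1);
        buf[buflen - 1] = '\0';
        shim_head = (shim_head + 1) % SHIM_SLOTS;
        shim_count--;
        got = 1;
    }
    shim_unlock();
    return got;
}
```

The design choice is to keep all thread handling in the DLL and present LabVIEW with a plain function it can call from an ordinary loop, so no callback ever has to cross into LabVIEW code.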


Including solicitation of interest from potential acquirers

Rolf Kalbermatter replied to gleichman's topic in LAVA Lounge

That was actually the first name I came up with when reading that press release from NI. About the dilution of shares: that was exactly my understanding, that this is meant to fight a hostile takeover. But the rest of the press release does not sound like they are trying to avoid being taken over, rather the opposite, and that felt kind of contradictory.

Including solicitation of interest from potential acquirers

Rolf Kalbermatter replied to gleichman's topic in LAVA Lounge

Those were my thoughts too, but I read it on Yahoo. And while they don't usually publish total bullshit like some other news sources, their reporting is usually not very accurate.

Including solicitation of interest from potential acquirers

Rolf Kalbermatter replied to gleichman's topic in LAVA Lounge

I own some. But even expressed in per mille of the outstanding stock, my share has way too many zeros after the decimal point. 😀 I didn't buy them to get rich, but because at the time I believed the company had good products and good morals. Who could want to buy them? No idea really. Technically Keysight might be an option; politically I doubt that would work. It's more likely going to be either a hedge fund of some sort or one of those huge nameless engineering service conglomerates that nobody knows but whose various products everybody uses, sold under different names. It's unlikely that any of those options cares about the products NI made or its software portfolio. It's purely financial: buy theoretical market share, port as many customers as possible over to your own products and then close the operations. And it is certainly not an overnight decision. It has been planned for quite some time, and was really put in motion even before the last original founder retired. I'm not sad that LabVIEW NXG was canceled, but the reasons why it was are, in hindsight, also very sad.

LabVIEW's "hidden" decoration styles

Rolf Kalbermatter replied to Sparkette's topic in LabVIEW General

There is potentially a mix-up with names somewhere. It's a long, long time since I looked at this, and I'm not sure about the exact format of a PICC at this point. LabVIEW also has something that it calls pixmaps (which is in fact the Picture Control data format, with opcodes and parameters, as can be seen when looking at the Picture Control VIs). It may technically not be the same as the PICC resource format, but I'm really not sure at this point and don't feel like doing that sort of archaeology right now. There are in fact several different graphics formats in LabVIEW. Some are LabVIEW native, some are bridges to platform-specific formats. Some are pixel based and others are more vector based. And if I had access to the LabVIEW source code, I would first fix some annoying bugs and shortcomings before looking at that sort of thing.

I worked some more on this over the holidays and made further progress. There is still quite a bit of cleanup to do: the underlying code in the shared library was a work in progress over several phases, and there was some code duplication and inconsistency. But I'm slowly getting there.


gcc should only be required if you intend to recompile the shared library yourself. However, glibc compatibility between different compiler versions is always a PITA. Usually it is best to compile with the oldest version you expect to be used.
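To see which glibc a piece of code was built against versus which one it is actually running on, a small check like the following can help. This is a sketch assuming a Linux system with glibc: `__GLIBC__` and `__GLIBC_MINOR__` are the compile-time macros, and `gnu_get_libc_version()` reports the runtime version. The helper name `glibc_versions` is made up for illustration.

```c
#include <stdio.h>   /* also pulls in <features.h>, which defines __GLIBC__ on glibc */
#include <stddef.h>
#ifdef __GLIBC__
#include <gnu/libc-version.h>
#endif

/* Hypothetical helper: describes the glibc version the code was compiled
   against and the one it is running on. Returns snprintf's result. */
int glibc_versions(char *buf, size_t len)
{
#ifdef __GLIBC__
    return snprintf(buf, len, "built against glibc %d.%d, running on glibc %s",
                    __GLIBC__, __GLIBC_MINOR__, gnu_get_libc_version());
#else
    return snprintf(buf, len, "not built against glibc");
#endif
}
```

A library built against a newer glibc than the target system provides typically fails to load with an error about a missing `GLIBC_2.xx` symbol version, which is why building on the oldest expected system is the usual advice.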


That means that your system is missing some dependencies. To solve this we would need a list of the possible dependencies of this library and their versions. Aside from the obvious dependencies that should be apparent to anyone who has compiled this library, you would also want to know the system and gcc version on which it was compiled. Depending on that, there might be various other dependencies that your Ubuntu system may or may not come with preinstalled in the correct version.
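When LabVIEW only reports that the library could not be loaded, loading it from a tiny C program usually gives a more specific reason, because `dlerror()` names the missing dependency or symbol version. A minimal sketch, assuming a POSIX system; the helper `load_error` is hypothetical, and on glibc versions older than 2.34 you need to link with `-ldl`.

```c
#include <dlfcn.h>
#include <stddef.h>

/* Hypothetical helper: tries to load a shared library and returns NULL on
   success, or the loader's error text (e.g. a missing dependency) on failure.
   The returned string belongs to the loader and is only valid until the next
   dl* call. */
const char *load_error(const char *path)
{
    void *handle = dlopen(path, RTLD_NOW);   /* resolve all symbols immediately */
    if (handle == NULL)
        return dlerror();
    dlclose(handle);
    return NULL;
}
```

Running `ldd` on the library from a shell gives a similar picture: it lists the dependencies and marks the ones it cannot find as "not found".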


This is guesswork, but I could imagine that there is actually a possible situation where the TestStand Editor doesn't know about the friendship between objects, but the user may want to select a Community-scoped class anyhow. If that class is then executed in a context that has a friendship relationship, it still succeeds; otherwise it fails. Bad UX maybe. A feature that makes possible things that should be possible, quite likely. Fixing that may require teaching TestStand about LabVIEW implementation-specific details and presenting its test adapters in a way that forces dependencies into the TestStand paradigm that it doesn't really care about otherwise. Likely weeks or months of extra work, and a brittle interface that can fall flat on its face any time LabVIEW makes subtle changes to something in these implementation-specific private features. Much safer to leave this inconsistency in there, save time, sweat and money, and call it a day.


The 32-bit version may be difficult to test. 2016 was the last version that had a 32-bit version of LabVIEW both on Mac and Linux. After that it was 64-bit only. Not sure how many people still have a 2015 or 2016 installation of those.


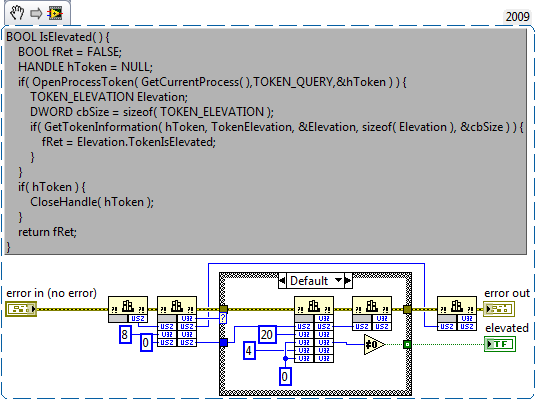

Launch via a VI an executable as administrator

Rolf Kalbermatter replied to CIPLEO's topic in LabVIEW General


Extract all rows from 2d array

Rolf Kalbermatter replied to Thomas Granito's topic in LabVIEW General

I'm not sure what you want to do. All rows from a 2D array sounds like the whole 2D array to me. If you mean a particular column that contains a value for every row, just check out the Index Array function. If you connect a 2D array to its input, it will expand to have one index input per dimension. Wire the index of the particular row or column you want to extract and leave the other one unconnected.
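In C terms, what Index Array does with one of its two indices left unwired can be sketched like this, assuming the 2D data is stored row-major (rows contiguous in memory), which as far as I know is how LabVIEW lays out 2D arrays. The function names are made up for illustration.

```c
#include <stddef.h>

/* Copy row r of a rows x cols row-major matrix into out (length cols). */
void get_row(const double *m, size_t cols, size_t r, double *out)
{
    for (size_t c = 0; c < cols; c++)
        out[c] = m[r * cols + c];      /* a row is contiguous */
}

/* Copy column c of a rows x cols row-major matrix into out (length rows). */
void get_col(const double *m, size_t rows, size_t cols, size_t c, double *out)
{
    for (size_t r = 0; r < rows; r++)
        out[r] = m[r * cols + c];      /* a column is strided by cols */
}
```

Wiring only the row index corresponds to `get_row`; wiring only the column index corresponds to `get_col`.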

Launch via a VI an executable as administrator

Rolf Kalbermatter replied to CIPLEO's topic in LabVIEW General

No, you can't launch VIs as administrator. You need to launch LabVIEW (or VIPM) as administrator in order to have a VI executed with administrator rights. Windows does not allow changing the privileges of a process after it has been launched; or rather, if you find a way to do that, you have found a zero-day exploit that will be closed as soon as Microsoft learns about it. What you could do is add to the installation package an executable that is configured, through a manifest file, to require administrator rights, and then launch that. Launching it will show a privilege elevation dialog and require the user to enter the login credentials of an admin if he isn't already one. The dialog appears even if the user is already an admin, but then he won't have to enter the password again; he just has to confirm that he does want to launch that executable.

While your previous approach might pose problems depending on what you intend to do with the data, as the number of read samples can vary a lot, your current approach honestly sounds like a corner case. What do you mean by samples per channel being 1000? Is that at the Create Task? That is the hint for DAQmx about how much buffer to allocate, and it should actually be higher than the number of samples you want to read per iteration. My experience is that one read every 10 ms is not safe under Windows, but one read every 100 to 200 ms can sustain operation for a long time if you make the internal buffer big enough (I usually make it at least 5 times the intended number of samples read per interval).
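The rule of thumb above can be written down as plain arithmetic: read every 100 to 200 ms and make the buffer at least five times the number of samples read per iteration. This is only a sketch of the sizing calculation; the struct and function are hypothetical and no DAQmx API is involved. For a continuous task, the resulting buffer size is roughly what you would pass as samples per channel when configuring the sample clock timing (DAQmx may still choose a larger buffer).

```c
#include <stddef.h>

/* Hypothetical sizing result for a continuous acquisition. */
typedef struct {
    size_t samples_per_read;   /* samples per channel fetched each loop iteration */
    size_t buffer_samples;     /* samples per channel for the task buffer */
} daq_sizing;

/* rate_hz: sample rate; read_interval_s: time between reads;
   safety_factor: buffer size as a multiple of one read (e.g. 5). */
daq_sizing size_buffer(double rate_hz, double read_interval_s, size_t safety_factor)
{
    daq_sizing s;
    s.samples_per_read = (size_t)(rate_hz * read_interval_s + 0.5);   /* round to nearest */
    if (s.samples_per_read == 0)
        s.samples_per_read = 1;
    s.buffer_samples = s.samples_per_read * safety_factor;
    return s;
}
```

For example, 10 kS/s read every 100 ms gives 1000 samples per read and, with a factor of 5, a 5000-sample buffer.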


Dynamically Copy, Rename, and Use a DLL

Rolf Kalbermatter replied to mcduff's topic in LabVIEW General

It is not! All the language interfaces they have on that page are simply wrappers around the DLL, some more complete than others. The C# one seems to import all the functions (well, at least a lot of them); the LabVIEW wrapper is extremely minimalistic.

That loop looks nice, but I prefer to use Initialize Array. 😀 But I'm pretty sure the generated code performs pretty similarly in both cases. 😁


No! The DLL also operates on native memory, just as LabVIEW itself does. There is no endianness disparity between the two. Only when you start to work with flattened data (with the LabVIEW Typecast, but not a C typecast) do you have to worry about LabVIEW's preferred Big Endian format. The issue is specifically in the LabVIEW Typecast (and in the old flatten functions that did not let you choose the endianness). LabVIEW started on Big Endian platforms, and hence the flatten format is Big Endian. That is needed so LabVIEW can read and write flattened binary data independent of the platform it runs on. All flattened data is endian-denormalized on import, which means it is converted to whatever endianness the current platform has, so that LabVIEW can work on the data in memory without having to worry about the original byte order. And it is normalized again when the data is exported to a flattened format. But all the numbers that you have on your diagram are always in the native format for that platform! Your assumption that LabVIEW somehow always operates in Big Endian format would be a performance nightmare, as it would need to convert every numeric value every time it wants to do some arithmetic or similar on it. That would really suck big time! Instead it defines an external flattened format for data (which happens to be Big Endian) and only does the swapping whenever that data crosses the boundary of the running system. That means when streaming data over some byte channel, be it file I/O, the network, or a memory byte stream. And yes, when writing a VI to disk (or streaming it over the network to download it to a real-time system, for instance), all numeric data in it is in fact normalized to Big Endian, but when loading it into memory everything is converted back to whatever endianness is appropriate for the current platform.
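The difference between native in-memory values and the Big Endian flattened format can be illustrated in C. This is a sketch of the idea, not LabVIEW's actual implementation: values stay native for arithmetic and are only converted at the boundary, and because the conversion uses shifts on the value rather than on memory, it produces the same byte order on any host. The function names are hypothetical.

```c
#include <stdint.h>

/* Normalize: write a native uint32 as 4 Big Endian bytes (flattened form).
   Shifts operate on the value, not on memory, so this works on any host. */
void flatten_u32_be(uint32_t value, uint8_t out[4])
{
    out[0] = (uint8_t)(value >> 24);
    out[1] = (uint8_t)(value >> 16);
    out[2] = (uint8_t)(value >> 8);
    out[3] = (uint8_t)value;
}

/* Denormalize: read 4 Big Endian bytes back into a native uint32. */
uint32_t unflatten_u32_be(const uint8_t in[4])
{
    return ((uint32_t)in[0] << 24) | ((uint32_t)in[1] << 16) |
           ((uint32_t)in[2] << 8)  |  (uint32_t)in[3];
}
```

All arithmetic happens on the native `uint32_t`; the byte array only exists when the data leaves or enters the process.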
And even if you use Typecast, it will only swap bytes if the element size on the input side doesn't match the element size on the output side, for instance from a Byte Array (or String, which unfortunately is still just syntactic sugar for a Byte Array) to something else. Try a Typecast from a (u)int32 to a single-precision float: LabVIEW won't swap bytes, since the element size on both sides is the same! That even applies to an array of (u)int32 to an array of single-precision floats (or between (u)int64 and double-precision floats). Yes, it may seem unintuitive when there is swapping and when not, but it is actually very sane and logical.

Indeed, and no, there is no problem with endianness here at all. The only things you need to make sure of are that the array of clusters is pre-allocated to the size needed to copy the elements into, and that you keep three different sizes apart:
1) the size of the uint64 array, let's call it n;
2) the size of the cluster array, which must be at least (n + e - 1) / e (integer division, i.e. n / e rounded up), with e being the number of uint64 elements in the cluster;
3) the number of bytes to copy, which is n * 8.
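The three sizes mentioned above map directly onto a C sketch. It assumes a hypothetical cluster of three uint64 values (so e = 3, with no padding in the struct) and uses `memcpy` as a stand-in for MoveBlock; note that no byte swapping happens anywhere.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define CLUSTER_FIELDS 3   /* e: uint64 elements per cluster (hypothetical) */

typedef struct {
    uint64_t field[CLUSTER_FIELDS];
} cluster_t;

/* Number of clusters needed to hold n uint64 values: n / e rounded up. */
size_t clusters_needed(size_t n, size_t e)
{
    return (n + e - 1) / e;
}

/* Copies n uint64 values into a freshly allocated, zero-filled cluster array.
   Returns NULL on allocation failure; *count receives the cluster count. */
cluster_t *copy_to_clusters(const uint64_t *src, size_t n, size_t *count)
{
    size_t m = clusters_needed(n, CLUSTER_FIELDS);          /* size 2) */
    cluster_t *dst = calloc(m > 0 ? m : 1, sizeof *dst);    /* pre-allocate */
    if (dst == NULL)
        return NULL;
    memcpy(dst, src, n * sizeof *src);                      /* size 3): n * 8 bytes */
    *count = m;
    return dst;
}
```

With n = 4 and e = 3 you need 2 clusters; the last two fields of the second cluster simply stay zero.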


You can forget about that comment about endianness. MoveBlock is not endianness aware and operates directly on native memory. Only if you incorporate the LabVIEW Typecast do you have to consider LabVIEW's Big Endian preference. For Flatten and Unflatten you can nowadays choose which endianness LabVIEW should use, and the same applies to the Binary File I/O. There used to be an unreleased FlexTCP interface for TCP that worked like the Binary File I/O, but NI never released it, most likely figuring that using Flatten and Unflatten together with TCP Read and Write actually does the same. PS: A little nitpick here: the size parameter for MoveBlock is defined to be size_t. This is a 32-bit unsigned integer in 32-bit LabVIEW and a 64-bit unsigned integer in 64-bit LabVIEW.
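To make the size_t point concrete: the size argument is pointer-sized, so in a Call Library Node it should be configured as an unsigned pointer-sized integer rather than a fixed U32 or U64. The sketch below uses a hypothetical stand-in, `move_block_standin`, built on `memmove`; it mimics what I understand to be MoveBlock's source-first argument order (the opposite of `memcpy`), so check that order against extcode.h before relying on it.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* size_t has the width of a pointer on the usual 32-bit and 64-bit targets,
   so it is 4 bytes in 32-bit LabVIEW and 8 bytes in 64-bit LabVIEW. */
_Static_assert(sizeof(size_t) == sizeof(void *), "size_t is not pointer-sized here");

/* Hypothetical stand-in with MoveBlock-style argument order: source first,
   destination second, then the byte count. memmove tolerates overlap. */
void move_block_standin(const void *src, void *dst, size_t num_bytes)
{
    memmove(dst, src, num_bytes);
}
```

The copy is a plain byte copy in native order, so a value like 0xDEADBEEF arrives unchanged.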


That's hardly efficient, as you actually copy the memory buffer at least twice (but most likely three times): likely once in the .NET function you call, then with memcpy() in your C++/CLI wrapper, and then again with your GetValueByPointer.xnode. Basically you created a complicated solution to supposedly make something performant, but made it anything but. If your C++/CLI DLL instead provides a function where the caller can pass in the pre-allocated array as an actual array (of bytes, integers, doubles, apples or whatever) and request to have the data copied into it, you are already done. No pointer voodoo on the LabVIEW diagram, and at least one memory copy less.
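The interface suggested above could look roughly like this on the native side of the wrapper: the caller passes a pre-allocated array and its length, and the function copies directly into it and reports how many elements it wrote. In the Call Library Node the buffer would be configured as an Array passed as Array Data Pointer. `source_data` and `copy_samples` are hypothetical placeholders for whatever the .NET side actually delivers.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical data source standing in for the managed (.NET) array. */
static const double source_data[] = {0.5, 1.5, 2.5, 3.5};
static const size_t source_len = sizeof source_data / sizeof source_data[0];

/* Copies up to capacity elements into the caller-allocated buffer and
   returns the number of elements actually written. One copy, and no raw
   pointers handed back to the LabVIEW diagram. */
int32_t copy_samples(double *buffer, int32_t capacity)
{
    if (buffer == NULL || capacity <= 0)
        return 0;
    size_t n = source_len < (size_t)capacity ? source_len : (size_t)capacity;
    memcpy(buffer, source_data, n * sizeof *buffer);
    return (int32_t)n;
}
```

Returning the element count lets the LabVIEW side trim the array afterwards if the source delivered fewer elements than the buffer can hold.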


So far it's all guesswork. You haven't shown us an example of what you want to do, nor the corresponding C# code that would do the same. It depends a lot on how this mysterious array data pointer passed by reference is actually defined in the .NET method. Is it a full .NET object, or an IntPtr?


It's definitely a hack. But if it works, it works; it may just be a really nasty surprise for anyone who has to maintain that code after you move on. It would rank very high on my list of obscure coding practices. Shaun's solution is definitely a lot cleaner, without abusing an IMAQ image to achieve your goal. But!!! Is this pointer passed inside a structure (cluster)? If it is passed directly as a function parameter, there really is no reason to try to outsmart LabVIEW. Simply allocate an array of the correct size and data type, pass it as an Array with Pass as: Array Data Pointer, and you are done. If you want to keep this array in memory to avoid having LabVIEW allocate and deallocate it repeatedly, just keep it in a shift register (or feedback node) and loop it through the Call Library Node. The LabVIEW optimizer will then always attempt to reuse that buffer whenever possible (and if you don't branch that wire anywhere out of the VI or to functions that want to modify it, this is ALWAYS).


The thread context switch to the UI thread and back again shouldn't, and won't, take that long by itself; it's more in the range of tenths of microseconds. But that UI thread may be busy doing your front panel drawing, or just about anything else that is UI related or needs to run in the only available single-threaded protected context in LabVIEW, and then the switch to the UI thread has to arbitrate for it. That means the LabVIEW code simply sits there and waits until the UI thread finally becomes available again and can be acquired by this code clump.

Tagged with: labview, memory management (and 1 more)

UI Element Property Nodes ALWAYS execute in the UI thread. This applies to VI Server nodes operating on front panel and control refnums (and almost certainly on diagram refnums too, but that would be pure scripting, so if you do anything time-critical there, you are definitely in very strange territory). CLFN is the Call Library Function Node. These calls can be configured to run either reentrant or in the UI thread. If one is set to run in the UI thread and it is a lengthy function (for instance waiting for some event in the external code), it will consume the UI thread and block it for everybody else, including your nice happy property nodes! Now don't run off and change all CLFNs to run reentrant! If the underlying DLL is not programmed to be multithreading safe (and quite a few are not), you can end up getting all sorts of weird results, from totally wrong computations to outright crashes! So your VI may have worked for many years by chance. But as we all had to learn from those too-good-to-be-true returns on investments, past results are no guarantee for the future! 🙂
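Why "reentrant" is only safe for thread-safe code can be seen in a small C example. The first function keeps its result in a static buffer, so two callers running in parallel (as with a Call Library Node set to run in any thread) can overwrite each other's result; the second writes into a buffer owned by the caller and has no shared state. Both functions are hypothetical illustrations, not taken from any particular DLL.

```c
#include <stdio.h>
#include <stddef.h>

/* NOT thread-safe: every call shares one static buffer, so a second
   concurrent call can overwrite the text before the first caller reads it.
   Only safe if all calls are serialized, e.g. by running them in the UI thread. */
const char *format_reading_unsafe(double volts)
{
    static char text[32];
    snprintf(text, sizeof text, "%.3f V", volts);
    return text;
}

/* Reentrant: all state lives in the caller's buffer, so parallel calls
   cannot interfere with each other. */
int format_reading_r(double volts, char *buf, size_t len)
{
    return snprintf(buf, len, "%.3f V", volts);
}
```

Even without threads the problem shows: two calls to `format_reading_unsafe` return the same pointer, so the first result is silently replaced by the second.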