Posts: 69

Days Won: 2


Everything posted by codcoder

-

Yes, I agree with that. Just because you know JavaScript as a frontend web developer doesn't mean you can transition to C and become an embedded developer. It takes more domain expertise than simply knowing how to code. I am not sure how common it is for programmers to switch fields and programming languages altogether. On another note, for me, being a LabVIEW developer is a unique role. It revolves around seamless integration with hardware; one cannot exist without the other. Ironically, this cross-disciplinary approach poses another challenge in our corporate world: it doesn't align well with predefined roles and resource categories. You're either considered a software engineer or an electronics engineer, rarely both.

-

The discussion here often assumes that all text-based languages are generic and interchangeable. I don't get that.

-

I firmly believe there is a future for LabVIEW. But I have met too many developers who fail to see that they are the odd ones out in a world of text-based programming. They fail to integrate into this world, and in the process, they also fail to demonstrate to other people the value that LabVIEW can bring. This only accelerates the downfall, as they are inevitably seen as burdens. I understand that this is an international forum, and so on, but your first sentence struck a chord: 'These are being slowly replaced by ODMs developing their own test solutions.' My questions to you: Why are these being replaced? What advantages do ODMs see in developing their own test solutions? And why doesn't LabVIEW integrate with this?

-

Where do y'all get your (free) artwork for UI elements?

codcoder replied to David Boyd's topic in User Interface

I've always found it contradictory that LabVIEW itself, which relies so heavily on its GUI, provides such a limited selection of icons and buttons, especially ones useful for electronic testing and similar purposes. Even remixicon.com, which contains over 2000 icons, yields no results for the word 'electronic', and the word 'test' yields only one result: an icon for A/B testing, primarily used in software testing. NI, why do you do this?

Including solicitation of interest from potential acquirers

codcoder replied to gleichman's topic in LAVA Lounge

Two thoughts. One, does this include everyone who still holds licenses from before LabVIEW became a subscription service? And two, does everyone pay full price? Large corporations must surely receive significant discounts through licensing plans, right?

Other people's code lacks comments. Mine is self-documenting.

-

TCP/IP communication using Labview

codcoder replied to dipanwita's topic in Remote Control, Monitoring and the Internet

If you haven't already, I suggest you install Wireshark on your computer. It lets you analyze the Ethernet traffic and will help you debug. As a disclaimer, I'm sure there are many other well-suited protocol analyzers, but Wireshark is my default tool.

When I did my first big LabVIEW OOP project (from scratch) a few years back, I went "all in" on creating accessor nodes. Maybe it works better in other programming languages, but in LabVIEW all those read/write VIs clutter things up. There are a few cases where it can be useful: if you have an underlying property of the class (like time) and you want to read it in different formats (timestamp, string, double), an accessor VI with a little conversion code can be quite neat. Also, I work with a real-time application, and since each accessor is a VI, I'm not sure whether it affects performance. Extra settings like reentrancy need to be taken into account, I guess. So now, when I'm trying to identify performance issues, I replace every accessor node I can with bundle/unbundle, just to be safe. I fully agree with @Neil Pate. Nowadays I'm much more restrictive.
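The one case the post singles out, a single underlying property read in several formats, can be sketched as a rough Python analogy. LabVIEW classes are graphical, so this is not LabVIEW code, and the class and method names are hypothetical. The plain read accessor only returns the stored field, which is the kind of accessor an unbundle could replace; the converting accessors are the ones that carry extra logic.

```python
from datetime import datetime, timezone


class Measurement:
    """Hypothetical class with one underlying time value.

    The private field _time is stored once as seconds since the Unix epoch;
    the accessor methods below only convert it into different formats.
    """

    def __init__(self, seconds: float) -> None:
        self._time = seconds  # underlying property, like a field in class private data

    def time_as_double(self) -> float:
        # Plain read accessor: returns the stored value unchanged.
        return self._time

    def time_as_timestamp(self) -> datetime:
        # Accessor with a small conversion step (UTC is an assumption).
        return datetime.fromtimestamp(self._time, tz=timezone.utc)

    def time_as_string(self) -> str:
        # Accessor that formats the same value as ISO 8601 text.
        return self.time_as_timestamp().isoformat()


m = Measurement(0.0)
print(m.time_as_double())   # 0.0
print(m.time_as_string())   # 1970-01-01T00:00:00+00:00
```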

-

Including solicitation of interest from potential acquirers

codcoder replied to gleichman's topic in LAVA Lounge

It could also be that they simply need to frame it somehow. There is most likely an overlap in the middle: NI is more suited for production, and Emerson at least a little for development (I have no experience with Emerson's offering). But somewhere a line had to be drawn.

Oh, turn that frown upside down! 🙃 The bug tracker for Python isn't exactly short either. LabVIEW is what it is. It isn't mainstream, you can't git merge, and as a developer I will perhaps never be understood by the text programmers, but at least where I work we like it and find it useful. 😊

-

One could argue that LabVIEW has reached such a state of complete perfection that all that is left to do is sort out the kinks, and that those minuscule fixes are all there is left to discuss! 😄

-

Oh, but I like that! 💗 Small stuff like that has always bothered me in LabVIEW. And fixing small stuff is a way for them to show that they care. And it matters to me that a big company on which I hinge my career cares.

-

But this isn't LabVIEW-specific. There are a lot of situations in corporate environments where you simply don't upgrade software to the latest version every time. It's simply too risky.

-

So it's settled then? NI will become a subdivision of Emerson?

-

Thanks for sharing! I guess I'm just trying to stay positive. Every time the future of LabVIEW is brought up here, things get so gloomy so fast! And I'm trying to wrap my head around what is true and what is nothing but mindset. It's ironic, though: in recent months there has been so much hype around AI being used for programming, especially Google's AlphaCode ranking within the top 54% in competitive programming (https://www.deepmind.com/blog/competitive-programming-with-alphacode), writing code from natural-language input. So we're heading towards a future where classic "coding" could quickly become obsolete, leaving the mundane tasks to the AI. And still, there already is a tool that could help you with that, a tool that has been around for 30 years: a graphical programming tool. So how, how, could LabVIEW not fit in this future?

-

Yes, of course. I can't argue with that (and since English isn't my first language and all my experience comes from my small part of the world, it's hard for me to argue in general 😁). We don't use Python where I work. We mainly use TestStand to call C-based drivers that communicate with the instruments. That could have been done in LabVIEW or Python as well, but I assume the guy who was given the task knew C. For other tasks we use LabVIEW FPGA. It's really useful, as it allows us to incorporate more custom functionality into a more COTS architecture. We also use "normal" LabVIEW where we need a GUI (it's still very powerful at that, and GUIs are easy to make). And in a few cases we even use LabVIEW RT where we need that capability. In none of those cases do we plan to throw NI/LabVIEW away. Their approach is the entire reason we use them! I don't really know where I'm heading here. Maybe something like this: if you're used to LabVIEW as a general-purpose software tool, then yes, maybe Python is the best choice these days. Maybe, maybe, "the war is lost". But that shouldn't mean LabVIEW development is stagnating or dying. It's just that the areas where NI excels in general aren't as big and thriving as others, i.e., HW vs. SW.

-

Idk, why should the failure of NXG mark the failure of NI? Aren't there other examples of attempts to reinvent the wheel, discovering that it doesn't work, scrapping it, and then continuing to build on what you have? I can think of XHTML, which the W3C tried to push as a replacement for HTML, but when that failed, HTML5 became the accepted standard (based on HTML 4.01, while XHTML was based more on XML). Maybe the comparison isn't that apt, but maybe a little. And also, maybe I work in a backwater, slow-moving company, but to us, for example, utilizing LabVIEW FPGA and the FPGA module for the PXI is something of a "game changer" in our test equipment. And we are still developing new products and new systems based on NI tech. The major problem for me as an individual, of course, is that the engineering world in general has moved a lot from hardware to software in the last ten years. And working with hardware, whether or not you specialize in the NI ecosystem, gives you fewer opportunities. There are hyped things like app startups, fintech, AI, machine learning (and what not), and if you want to work in those areas, then sure, LabVIEW isn't applicable. But it never has been, and that shouldn't mean it can't thrive in the world it was originally conceived for.

-

Are you asking about taking the exam at a physical location or online? I've taken both the CLD and CLD-R online and I can recommend it. It worked fairly well. And the CLAD is still just multiple-choice questions, right? BUT regarding the online exam, NI is apparently moving to a new provider, so you can't take it right now: https://forums.ni.com/t5/Certification/NI-Certification-Transition-to-a-New-Online-Exam-Delivery-System/td-p/4278030

-

Out of curiosity, in which country do you work/live?

-

Transform JSON text to Cluster using "Unflatten From JSON"

codcoder replied to CIPLEO's topic in LabVIEW General

The short answer about arrays in LabVIEW is that they cannot contain mixed data types. There is a discussion here about using variants as a workaround (https://forums.ni.com/t5/LabVIEW/Creating-an-array-with-different-data-types/td-p/731637), but unless someone beats me to it, I'll need to get back to you on how that translates to your use case with JSON.
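JSON itself allows mixed-type arrays, which is exactly what a LabVIEW array cannot hold. The variant workaround amounts to wrapping every element in one uniform container type that carries both the value and its type. A minimal Python sketch of that idea follows; it is not LabVIEW code, and `Variant` and `to_variants` are hypothetical names used only for illustration.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class Variant:
    """Hypothetical stand-in for a LabVIEW variant: a value plus a type tag."""
    type_name: str
    value: Any


def to_variants(json_text: str) -> list[Variant]:
    """Wrap each element of a top-level JSON array so the list is homogeneous."""
    items = json.loads(json_text)
    if not isinstance(items, list):
        raise ValueError("expected a JSON array at the top level")
    # Every element becomes the same type (Variant), whatever it held originally.
    return [Variant(type(item).__name__, item) for item in items]


for v in to_variants('[1, "two", 3.0, true, null]'):
    print(v.type_name, v.value)
```

The cost of this design is that the consumer has to inspect each element's type tag before converting it back to a concrete type, much like converting a variant back to data in LabVIEW requires knowing the target type.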

Hi, I have a question about the inner workings of the host-to-target FIFO for a setup with a Windows PC and a PXIe-7820R (if the specific hardware is important). But it really isn't so much a question as me trying to understand something. My setup: I transfer data from the host to the target (the FPGA module 7820). At first I simply configured my FIFO to use U8 as its data type and read one element at a time on the FPGA target. It worked, but when I increased the amount of data I ran into a performance issue. To increase the throughput, I both increased the width of the FIFO, packing four bytes into one U32, and reconfigured the target to read two elements at a time. This worked, so there really isn't any issue here that needs to be resolved. But afterwards a thought occurred to me: would I have achieved the same result if I had kept the width at U8 but read eight elements at a time on the FPGA? Since 4*2 and 1*8 are both 8, would I have achieved the same throughput? Or is it better to read fewer but wider integers (and then split them up into U8s)? I've read NI's white paper, but it doesn't cover this specific subject. Thanks for any thoughts on the topic! 😊
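The arithmetic behind the question is that both configurations move the same number of bytes per read: two U32 elements are 2 × 4 = 8 bytes, and eight U8 elements are 8 × 1 = 8 bytes. Whether the throughput is actually equal depends on how the FIFO transfer and the FPGA read logic handle element width, which this sketch does not answer. It only shows the host-side packing of four U8 values into one U32 and the inverse split, in Python; the function names and the big-endian byte order are assumptions, and the real order depends on how the unpacking is wired on the FPGA.

```python
import struct


def pack_u8_to_u32(data: bytes) -> list[int]:
    """Pack a byte stream into U32 words, four bytes per word.

    Assumes len(data) is a multiple of 4 and big-endian order, i.e. the
    first byte ends up in the most significant position of each word.
    """
    if len(data) % 4:
        raise ValueError("length must be a multiple of 4")
    return list(struct.unpack(f">{len(data) // 4}I", data))


def unpack_u32_to_u8(words: list[int]) -> bytes:
    """Inverse of pack_u8_to_u32: split each U32 back into four U8 values."""
    return struct.pack(f">{len(words)}I", *words)


payload = bytes(range(8))        # 8 bytes: 2 U32 elements or 8 U8 elements
words = pack_u8_to_u32(payload)
print([hex(w) for w in words])   # ['0x10203', '0x4050607']
```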

-

I can confirm that I was unable to execute the community-scoped VI inside the packed library from a TestStand sequence. I didn't fill my VI with anything special, just a dialog box. TestStand reported an error saying it was unable to load the VI. I can't remember the exact phrasing; it was something general, and it didn't mention the scope of the VI. So yes, it's good that the VI with community scope at least can't be executed. But it's still bad UX that it is accessible.

-

Thanks for your answer! So the hiding of community-scoped VIs in LabVIEW is cosmetic only? I guess it makes sense that a VI that must be accessible from something outside the library, whether that is the end user through LabVIEW/TestStand or another library, will be treated differently. But I still think it's bad UX that TestStand doesn't hide the community-scoped VIs like LabVIEW does.

-

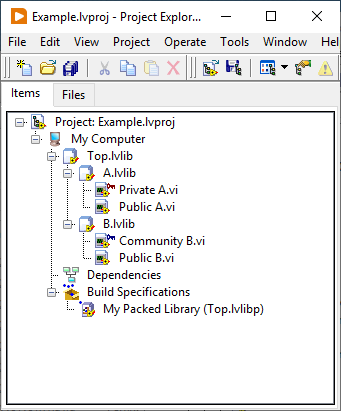

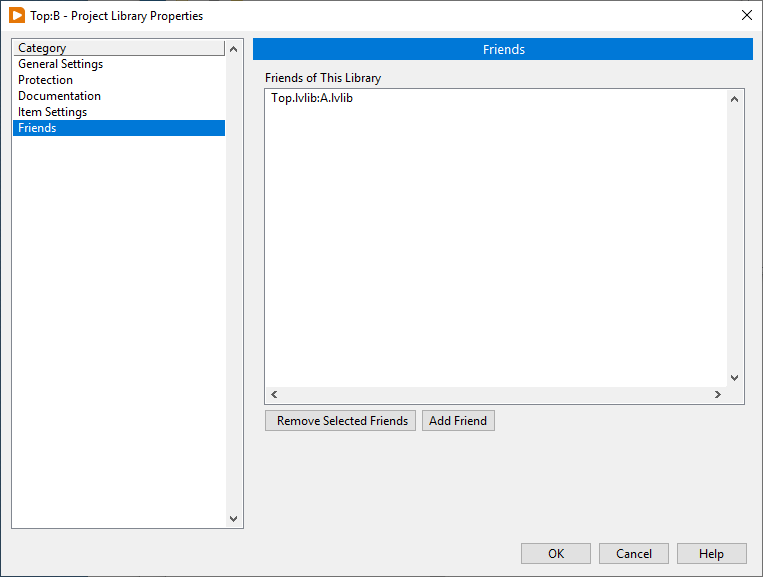

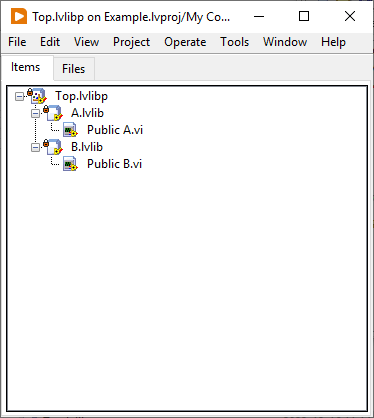

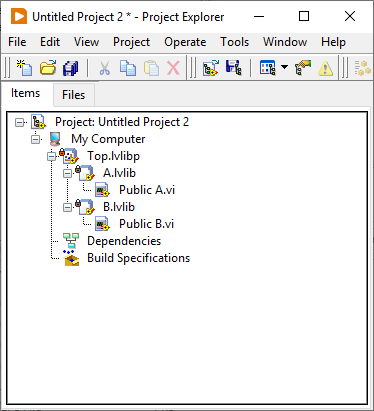

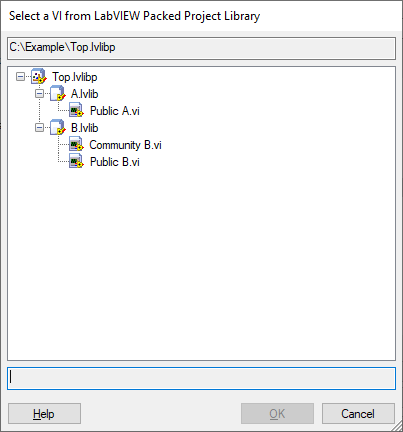

Hi,

This is actually a re-post of a message I posted in the official forum, but since I haven't received any answer there, I'm going to try my luck here as well.

I am having an issue with the community access scope of a VI inside a packed library (hence the title...), and I can't figure out whether I have actually stumbled upon something noteworthy or whether I simply fail to understand what the community access scope implies. Let me exemplify:

Image 1: I create a library with two sub-libraries. I set two of the VIs to public, one to private, and one to community access scope. And I create a build specification for a packed library. This is of course an example, but it resembles my actual library structure.

Image 2: I also make library A a friend of B (although not doing this doesn't seem to affect anything). In practice, I want library A to access certain VIs inside library B, but they should not be visible to the outside world.

Images 3 and 4: If I now build the packed library and open the build, either stand-alone or loaded inside a new LabVIEW project, the packed library behaves as I expect. Only the public VIs are accessible from the outside world; the private and community VIs are not. And, to be clear, this is what I want to achieve.

Image 5: Now this is the strange part: if I load this packed library into TestStand and open it to select a VI, the public VIs are accessible and the private one is not (as expected), but the community VI is also accessible. This is not the expected behaviour, at least not according to my expectations.

So to all of you who know more than me: Is this expected behaviour? Is there something about the community access scope I am not understanding? Is there some setting I should or should not activate? Or have I actually stumbled upon a bug?

BR, CC
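The visibility the post expects can be written down as a small decision rule. The Python sketch below is a hypothetical, simplified model of that expectation, not LabVIEW's or TestStand's actual implementation: public VIs are visible to everyone, private VIs only to their owning library, and community VIs to the owning library plus its friends. Nesting of sub-libraries is deliberately ignored, and `Library` and `is_accessible` are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class Library:
    """Hypothetical, simplified model of a library and its friends."""
    name: str
    friends: set[str] = field(default_factory=set)


def is_accessible(owner: Library, scope: str, caller: str) -> bool:
    """Expected visibility of a VI owned by `owner`, as described in the post.

    caller is the name of the library requesting access, or "outside" for
    anything outside the library hierarchy (e.g. a TestStand sequence).
    Simplification: sub-library nesting is ignored.
    """
    if scope == "public":
        return True
    if scope == "private":
        return caller == owner.name
    if scope == "community":
        return caller == owner.name or caller in owner.friends
    raise ValueError(f"unknown scope: {scope}")


lib_b = Library("B", friends={"A"})
print(is_accessible(lib_b, "community", "A"))        # True: A is a friend of B
print(is_accessible(lib_b, "community", "outside"))  # False: what was expected in TestStand
```

Under this model, the TestStand behaviour in Image 5 corresponds to the last call returning True, which is the discrepancy the post asks about.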

-

I was afraid the answer would be something along the lines of "just do it properly". The thing is, the application does have that feature. It's just that the option to command a reboot at the system level is so appealing, as it works in cases where the application is unresponsive.