Leaderboard

Popular Content

Showing content with the highest reputation on 08/22/2013 in all areas

-

A) No, there's no mechanism for forcing the use of property accessors within a class. As Mikael points out, if you're having problems with that, it's a sign of a class that has grown beyond its mandate. It's usually pretty trivial to make a conscious decision either to use the accessors internally or not. Commonly, multiple methods all need direct access to the fields, using IPE structures to modify values, so such a restriction would have limited use.

B) The property node mechanism is nothing more than syntactic sugar around the subVIs. Since I eliminate the error terminals from my property accessors these days, the property node syntax isn't even an option for me. The dataflow advantages (not forcing fake serialization of operations that don't need serialization) are obvious, and the memory benefits of not having error clusters are *massive* (many, many data copies can be eliminated when the data accessors are unconditional).

I've been trying to broadcast that far and wide: it isn't that property nodes are slow or getting slow. It's that the LV compiler is getting a lot smarter about optimizing unconditional code, which means that if you can eliminate the error cluster check around the property access, LV can often eliminate data copies. This gets even more optimized in LV 2013. Taking advantage of that means creating data accessor VIs that do not have error clusters, which rules out the property node syntax.

2 points

-

Some options:

1. Lock the class so that only people you trust can edit it.
2. Add a VI analyzer test that checks that each element in the cluster is only bundled/unbundled in a single VI.
3. Encrypt the data in the cluster and put the encryption/decryption code in your locked VIs. I'm going to assume you're not going to use this one.

Assuming there are no bugs or performance issues with the property node call, I think the answer to this is very simple: if the VI has more than one input/output, you call the VI, because you can't use the node. Otherwise, it's a matter of taste and personal preference. You would probably call the node if you want to do a direct DVR access or if you want to serialize calls. I often use the node if I don't want to create icons for the VIs and that API isn't one that needs them. Personally, for VIs which have properties, I almost always use the node.

2 points

-

I suggest you all start using the new Event Inspector Window. In the left-most column you'll see a sequence #. This is a globally unique number that each event has and that is used to order the events. It is a new (behind the scenes) feature of LabVIEW 2013 that guarantees that events will now always be handled in the order they are fired.

2 points

-

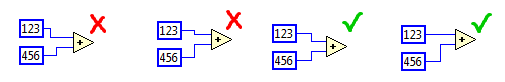

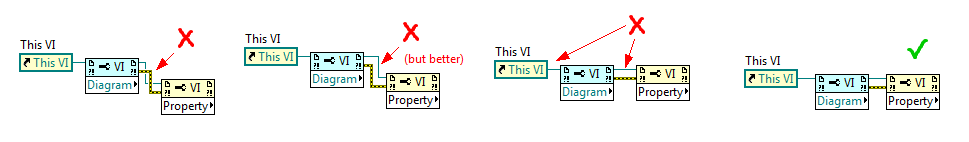

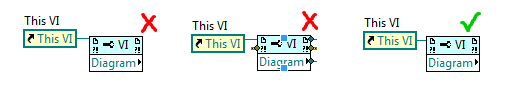

When designing block diagrams, I tend to spend way too much time making sure things are aligned perfectly, down to the pixels. It's really annoying. This is most noticeable with bends in wires. I don't like having "uneven" bends, or bends where they aren't necessarily needed, or wires that cross unnecessarily. For instance: Now you might wonder why I have bends there if I can just move the wires so they look straight. Well then they won't be perfectly aligned with the node's terminal. For instance: Of course, when there are bends, I want them to be even. For instance, these are the steps I might take to "fix" this sequence of property nodes (that I wouldn't likely put together in practice to my knowledge): Does anyone else have issues with trivial things like this? I find myself using the arrow keys a LOT on the block diagram to fix these kinds of things. Am I alone in that?1 point

-

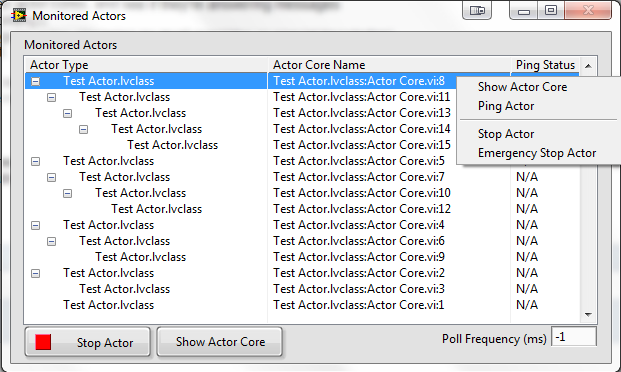

I don't think it takes too long after deciding to do an actor-based project to run into the case where you have an actor spun off without a way to kill it. You'll figure that out and get your solution working so you can pass it off to someone else and forget about it. Soon after, that someone else will come to you and say something like "yeah, those actors are cool and all, but they're really hard to debug."

I ran into some of these problems a while ago, so I decided to write a little tool to help with it. I decided to call it a monitored actor. Here were my design criteria:

1. I want a visual representation of when a monitored actor is spun up, and when it is shut down.
2. From the visual representation, I want to be able to stop actors, open their actor cores, and see if they're answering messages.
3. The visual representation should give you an idea of nested actor relationships.
4. Implementing a monitored actor should be identical to implementing a regular actor (meaning no must-overrides or special knowledge).
5. Monitored actors behave identically to Actors in the runtime environment.

It turns out that you can pretty much accomplish this by creating a child actor class, which I've called Monitored Actor. A monitored actor's Pre-launch Init will try to make sure that the actor monitor window is made aware of the spawned actor, and Stop Core will notify it that the actor is now dead. The actor monitor window contains ways to message the actors, pop up their cores and such.

I think it's fairly obvious what each button does except pinging. Pinging will send a message to the actor and time how long it takes to get a response. This can be used to see if your actor is locked up. The time it takes to respond will be shown in the ping status column. If you want to periodically ping all the actors, set the "poll Frequency" to something greater than 10ms. This will spawn a process that just sends ping messages every X ms.
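The ping idea is easy to sketch outside LabVIEW. The following is only an illustration in Python, not the code in the attached library: a thread with a queue stands in for an actor core, and every name here (`actor_loop`, `ping`, the message tuples) is an assumption made up for the example.

```python
import queue
import threading
import time


def actor_loop(inbox):
    """Stand-in for an actor core: handle messages until told to stop."""
    while True:
        kind, reply_to = inbox.get()
        if kind == "stop":
            break
        if kind == "ping":
            reply_to.put("pong")


def ping(inbox, timeout_s=1.0):
    """Return the round-trip time in seconds, or None if no reply arrives in time."""
    reply_to = queue.Queue()
    start = time.perf_counter()
    inbox.put(("ping", reply_to))
    try:
        reply_to.get(timeout=timeout_s)
    except queue.Empty:
        return None  # the actor is gone or locked up
    return time.perf_counter() - start


inbox = queue.Queue()
worker = threading.Thread(target=actor_loop, args=(inbox,), daemon=True)
worker.start()
elapsed = ping(inbox)      # a responsive actor answers quickly
inbox.put(("stop", None))
worker.join()
```

A ping that times out is the interesting case: it is what a locked-up actor would look like in the ping status column.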
Where I didn't quite meet my criteria: if you were to spawn a new actor and immediately (in Pre-launch Init) spam it with high-priority messages, the actor monitor window will not know about the spawned actor until it has worked through all the high-priority messages that got there first. You probably shouldn't be doing this anyway, so don't.

Download it. Drop the LVLIB into your project. Change your actor's inheritance hierarchy to inherit from Monitored Actor instead of Actor. You shouldn't have to change any of your actor's code. The monitor window will pop up when an actor is launched, and kill itself when all actors are dead.

Final note: this was something I put together for my team's use. It's been working well and has been fairly bug-free for the past few months. It wasn't something I planned on distributing widely. A co-worker went to NIWeek, though, and he said that in every presentation where actors were mentioned, someone brought up something about them being hard to debug, or it being hard to get into the actual running actor core instance. So I decided to post this tool here to get some feedback, maybe find some bugs, and get a nice tool to help spread the actor gospel. Let me know what you think.

Monitored Actor.zip

1 point

-


A gentle reminder: sending in reports when the NI Error Reporting dialog pops up helps us make future versions of LabVIEW more stable!

1 point

-

I've heard it said that Von Neumann's architecture is the single worst thing ever to happen to computer technology. Von Neumann gave us a system that Moore's Law applied to, and so economics pushed every hardware maker into the same basic setup, but there were other hardware architectures that would have given us acceleration even faster than Moore's Law. At the very least, we would have benefited from more hardware research that broke that mold instead of just making that mold go faster. All research into the alternate structures dried up until we exhausted the Von Neumann setup. Now it is hard to break away because of the amount of software that wouldn't carry over to these alternate architectures. Don't know how true it is, but I like blaming the hardware for yet another software problem. ;-)

1 point

-

The slowdown was probably the biggest one, though I believe that was addressed some time ago. I'm still not convinced property nodes were the cause so much as poor architecture on my part; the project that exhibited the behavior was old, and refactoring has since improved it considerably.

I have also seen properties "go bad", wherein they turn black and become unwirable: here and here. I've also had a few cases where using a property node broke the VI, but replacing the property node with the actual VIs worked just fine. This was posted some time ago to LAVA, but my searching is failing me. I have also seen problems with property nodes getting confused about their types, where a VI will suddenly break a wire because of an apparent type mismatch between source and sink, yet deleting the wire and rewiring works just fine.

The kicker about these issues is that they tend to creep up in re-use libraries seemingly at random, and to fix any of them I need to trigger recompiles in those libraries, which sends source code control into a tizzy. My workaround: I no longer use property nodes in re-use libraries, and as a habit I rarely use them in other code either, because who knows when something will get promoted to a library.

1 point

-

As time goes on, this "Windows API" becomes less useful and more dated in its development style, but I still find uses for it. http://zone.ni.com/devzone/cda/epd/p/id/4935

It has a subVI that brings a window to the front given an HWND. Getting the HWND of a VI is now a simple property node away; you no longer need to rely on the window title. http://lavag.org/topic/13803-getting-the-window-handle-for-a-fp-with-no-title-bar/

1 point

-

I've heard that if you name the property folders akin to polymorphic menu items, then you'll have a property node hierarchy. I've never actually tried it though.

Read:Data
Read:Status
Read:Otherstuff
Write:Data
Write:Status

should give you a hierarchy with Read and Write sub-menus when choosing the property in the property node.

1 point
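To make the intended grouping concrete, here is a small Python sketch of how colon-delimited names would fold into sub-menus. It only illustrates the naming idea; it says nothing about how LabVIEW actually builds the menu, and `group_by_prefix` is a made-up helper.

```python
def group_by_prefix(names, sep=":"):
    """Group 'Menu:Item' names into {menu: [items]} in first-seen order."""
    menu = {}
    for name in names:
        head, _, tail = name.partition(sep)
        menu.setdefault(head, []).append(tail)
    return menu


names = ["Read:Data", "Read:Status", "Read:Otherstuff", "Write:Data", "Write:Status"]
menu = group_by_prefix(names)
```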

-

I've been bitten a few times by having to edit a class such that some of its data has to be coerced/validated on access (be it reading or writing). It is far easier to do this if you've stuck to the rule of using accessors from the beginning: all you need to do is place the new code in one place rather than tracking down every place which operates on the data. This is the main reason I use accessors, except when inplaceness is required/desired. I love the idea of a VI analyzer test.

As for properties, I've largely stopped using them due to buggy behavior I've observed over the years and have continued to see as of LabVIEW 2012. I also see no reason to wrap all my access in error logic.

1 point
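The "one place" argument carries over to text languages as well. Below is a minimal Python sketch, assuming a hypothetical `Heater` class whose setpoint later needs to be clamped; none of these names come from the thread.

```python
class Heater:
    """Hypothetical class whose setpoint must stay within a safe range."""

    MIN_C = 0.0
    MAX_C = 150.0

    def __init__(self, setpoint_c=20.0):
        self._setpoint_c = 0.0
        self.setpoint_c = setpoint_c  # go through the accessor, even internally

    @property
    def setpoint_c(self):
        return self._setpoint_c

    @setpoint_c.setter
    def setpoint_c(self, value):
        # Coercion added later lives only here; callers are unchanged.
        self._setpoint_c = min(max(float(value), self.MIN_C), self.MAX_C)

    def bump(self, delta_c):
        # Uses the accessor rather than the private field, so the clamp applies.
        self.setpoint_c = self.setpoint_c + delta_c


heater = Heater()
heater.setpoint_c = 500   # coerced to MAX_C
high = heater.setpoint_c
heater.bump(-1000)        # coerced to MIN_C
low = heater.setpoint_c
```

Had `bump` written to `_setpoint_c` directly, the clamp would have needed to be copied there too, which is the maintenance problem described above.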

-

There is no easy solution. The only proper way would be to implement a DLL wrapper that uses the Call Library Node callback methods to register any session that gets opened in a private global queue in the wrapper DLL. The close function then removes the session from the queue. The CLN callback function for abort checks the queue for the session parameter and, if it is found, closes it as well. That CLN callback mechanism is the only way to properly receive the LabVIEW Abort event in external code.

1 point
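The bookkeeping itself is simple; the hard part is hooking it to the CLN callbacks, which has to be done in the wrapper DLL. The Python sketch below only models the register / remove / close-on-abort logic under stated assumptions: `SessionRegistry`, `close_fn` and `on_abort` are invented names, a set stands in for the queue, and nothing here is LabVIEW or driver API.

```python
import threading


class SessionRegistry:
    """Tracks open sessions so an abort hook can close whatever is left."""

    def __init__(self, close_fn):
        self._close_fn = close_fn      # hypothetical driver close routine
        self._open = set()
        self._lock = threading.Lock()  # callbacks may arrive from other threads

    def open_session(self, session):
        with self._lock:
            self._open.add(session)
        return session

    def close_session(self, session):
        with self._lock:
            known = session in self._open
            self._open.discard(session)
        if known:
            self._close_fn(session)

    def on_abort(self, session):
        # Stand-in for the abort callback: close only if still registered.
        self.close_session(session)


closed = []
registry = SessionRegistry(close_fn=closed.append)
registry.open_session(1)
registry.open_session(2)
registry.close_session(1)  # normal close
registry.on_abort(1)       # already closed: nothing happens
registry.on_abort(2)       # left open by the abort: closed here
```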

-

I use accessors in all cases except where I'm making specific attempts to work in-place, often when manipulating large arrays or variants, for example. It goes without saying that most of my accessors are declared as inline, such that there's no functional difference between the accessor and an unbundle proper (this is the default for VIs created via the project explorer's template). I doubt there's a way to prevent using the bundling primitives.

1 point

-

I like this tool; I'll definitely give it a go once the bugs are worked out. I've also wished that this functionality were built into the Project Explorer. When a new class is generated and the inheritance assigned, we would see the static parent class method VIs already populated in the child. The VIs could be grayed or italicized to visually indicate that they are inherited methods. These wouldn't need to be actual VIs stored on disk, just visual pointers back to the parent methods. We could also have dynamic dispatch VIs come in italicized and in a red font or something, to indicate they can be overridden...

1 point

-


Both FrontPanelWindow:OSWindow and FP.NativeWindow are in the Run Time Engine. They are not in RT (Real Time).

- Mike

1 point

-

This is a topic I've been experimenting with for a while. Basically, I've found it's insufficient to have just a central error handler or just a local error handler. I think you need to have strategies for both.

If you do just central error handling, it becomes difficult to do things like retry an operation, because it requires a lot of code for the central error handler to communicate with the specific section of code that threw the error. You also have to deal with the behavior of other code as you pass the error around, which is difficult, because different VIs and APIs treat incoming errors in different ways (which means that for any sufficiently complex section of code, the behavior on an incoming error is essentially undefined). If you do just local error handling (I use the term "specific"), you end up calling dialogs or accessing files from loops you probably shouldn't be accessing them from (I do a lot of RT programming).

My strategy has been to create a specific error handler which you call after each functional segment of code (which can be a loop iteration, a subVI, or something more granular), and which can take actions based on specific error codes that happen in that segment (much like exception handling in other languages like Java). The specific error handler can take actions like retrying code, ignoring the error, converting it to a warning, or categorizing it. Ideally, at the end of any functional segment of code, the errors from that segment have been handled if possible and categorized if not. You can then avoid passing them to other segments of code, which gets around the problem with undefined behavior that I mentioned before. For usability's sake, my specific error handler is an Express VI that lets you configure a list of error codes or ranges and actions for each.

I categorize errors by using the <append> tag in the source field, which keeps them fully compatible with all of the normal error handling functions. (One drawback is that this requires string manipulation, which is kind of a no-no in time-critical RT code. I haven't yet come up with an alternative I'm comfortable with, though.) A categorized error feeds into the central error handler (you can pass them with queues, events, FGs, or whatever you like), which can take actions based on categories of error. Each error category can take multiple actions; examples of actions are notifying the user, logging, placing outputs in a safe state, and system shutdown/reboot. Of course, there is always the case of an error code you've never seen before, which I usually treat as a critical error that puts the system in a safe state, logs, and notifies the user.

At some point I'll get the kinks ironed out of my code to the point where I feel comfortable posting it (at that point it will probably show up as a reference design on ni.com), but I think the concepts are solid no matter what implementation you use.

Regards, Ryan K.

1 point
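For readers who think better in text code, the two-level scheme can be sketched roughly as follows. This is a Python illustration of the concept only, not Ryan's Express VI: the `Rule`, `Error` and `Action` types, the `category=` text placed after the `<append>` tag, the error codes, and the action names are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    RETRY = "retry"
    IGNORE = "ignore"
    WARN = "warn"
    CATEGORIZE = "categorize"


@dataclass
class Rule:
    codes: range        # error codes this rule covers
    action: Action
    category: str = ""  # only used with Action.CATEGORIZE


@dataclass
class Error:
    code: int
    source: str
    is_warning: bool = False


def handle_specific(error, rules):
    """Apply the first matching rule. Returns (action, error_or_None)."""
    for rule in rules:
        if error.code in rule.codes:
            if rule.action is Action.IGNORE:
                return rule.action, None
            if rule.action is Action.WARN:
                return rule.action, Error(error.code, error.source, is_warning=True)
            if rule.action is Action.CATEGORIZE:
                tagged = f"{error.source}<append>category={rule.category}"
                return rule.action, Error(error.code, tagged)
            return rule.action, error  # RETRY: the caller re-runs the segment
    # Unknown code: treat as critical, as the post suggests.
    tagged = f"{error.source}<append>category=critical"
    return Action.CATEGORIZE, Error(error.code, tagged)


# Central handler configuration: one category can map to several actions.
CENTRAL_ACTIONS = {
    "critical": ["safe_state", "log", "notify_user"],
    "comms": ["log"],
}


def handle_central(error):
    """Look up the actions configured for the error's category."""
    category = error.source.rsplit("category=", 1)[-1]
    return CENTRAL_ACTIONS.get(category, CENTRAL_ACTIONS["critical"])


# Example codes are arbitrary placeholders.
rules = [Rule(range(56, 57), Action.RETRY), Rule(range(60, 67), Action.CATEGORIZE, "comms")]
action, categorized = handle_specific(Error(63, "TCP Read"), rules)
central = handle_central(categorized)
```

The split mirrors the post: the specific handler decides per segment and per code, while the central handler only ever sees categories.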

-

QUOTE (Anders Björk @ May 25 2009, 11:51 AM)

Great ideas, Anders. That's very close to what we'll be presenting at NIWeek.

1 point