Leaderboard

Popular Content

Showing content with the highest reputation on 10/24/2011 in all areas

-

Name: State Machine Follower
Submitter: jcarmody
Submitted: 19 Oct 2011
File Updated: 20 Oct 2011
Category: JKI Right-Click Framework Plugins
LabVIEW Version: 2009
License Type: Creative Commons Attribution 3.0

This JKI RCF Plugin sets the Visible Frame property of a Case Structure while the owning VI is running. Use it to follow the execution through the cases in your string-based State Machine/Sequencer when Execution Highlighting isn't fast enough (and, it's never fast enough).

The use-case I wrote this to improve is setting a Breakpoint on the Error wire coming out of the main Case Structure and probing the Case Selector terminal so I can float-probe wires during execution. The problem with this is that I had to manually select the current frame every time. This is boring, so I developed this plugin to automatically set the Visible Frame to the case most recently executed.

Use - Select the String wire connected to the main Case Structure's selector terminal, invoke the JKI RCF and select StateFollower.

Special thanks to - AristosQueue, for his help over here.

LabVIEW versions - tested in 2009 & 2011; it should work in 2010 as well.

Installation - use VIPM to install the VI Package.

License - Creative Commons 3.0 Attribution (Really, do whatever you want. I don't care.)

1 point

-

The idea is that you don't do the same processing in each slave. In the navigation system you may have several unrelated processes that act on the same command, "get directions home." One loop might search for the most direct route; another loop might try to find a route that results in the highest average speed. The results are then collected, and the system picks the correct route based on the desired parameters (most direct route, least amount of time in car, avoiding highways, etc.).

Would the actual implementation use this kind of transport layer? That I'm not so sure of. The actual implementation is more complex than that, so who knows if what you'd end up with would look anything like the original description of how the system is "supposed" to work.

~Jon

1 point

-

I read both Atlas Shrugged and The Fountainhead soon after I started my business. Those books made me feel I was on to something... I couldn't make it through Zen and the Art of Motorcycle Maintenance. Most of the time I was thinking "Get to the Freeking point!" IMHO the worst book to read when you're in a bad situation is Catch-22. I was working at a crappy job at the time, and it made me feel hopeless... It's a good book; I just read it at the wrong time.

1 point

-

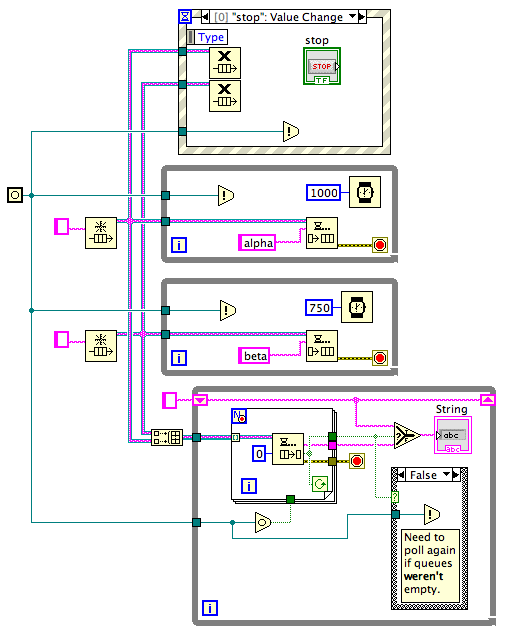

Here's a better example of the use of Occurrences. Here they are used to implement an efficient dequeue from multiple queues. It was built using the instructions: "Build the system with polling first, then add the occurrences to avoid polling."

Note that you shouldn't actually use this example directly if you are going to wait on multiple queues because there's a starvation issue -- if the first queue gets heavy with elements, the consumer loop will never get around to dequeuing from the second queue. You'd need to put some fairness into the consumer's behavior to make sure it checks each queue equally often for elements, maybe by rotating the queue array on every iteration of the consumer while loop, or something like that. Left as an exercise to the reader... this post is just about Occurrences.

What's that you say? There are times when the loop will execute a poll when there's nothing to do? There's an extra iteration after every dequeued element just to prove that the queues are empty? Yes, you're correct. That's how occurrences work. They are not the signal that there *is* data waiting. They are the signal that there *might* be data waiting and it's a good time to go check. This is their intended design: they are deliberately as lightweight as possible so that they can be the atomic unit of locking in LabVIEW. Consider what would happen if the two producer loops both did "Set Occurrence" at the same time without the consumer getting an iteration in between, something that will happen every once in a while. Handling that case is why the consumer loop has to do its own secondary polling.

The intention is that occurrences are used to write higher-level APIs, and those higher-level APIs give the caller the illusion of "I'll actually wait until data is available". And this is why occurrences are almost never used by LabVIEW customers -- those higher-level APIs generally already exist.

Here's the VI, saved in LV 2011: Wait For Multiple Queues.vi

1 point
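For readers without LabVIEW at hand, the same "signal means there *might* be data, so go check" pattern can be sketched in Python. This is only an analogy, not a translation of the attached VI: `threading.Event` stands in for the occurrence (its semantics are similar but not identical), and the names `consume`, `produce`, and `run_demo` are made up for illustration.

```python
import queue
import threading


def consume(queues, signal, stop, out):
    """Drain several queues, sleeping on one shared event instead of polling."""
    while True:
        signal.wait()               # sleep until a producer says "there might be data"
        signal.clear()              # clear BEFORE polling so a set during the poll is kept
        stopping = stop.is_set()    # read the stop flag before draining, not after
        got_any = True
        while got_any:              # secondary polling: repeat until a full pass is empty
            got_any = False
            for q in queues:        # one element per queue per pass, so no queue starves
                try:
                    out.append(q.get_nowait())
                    got_any = True
                except queue.Empty:
                    pass
        if stopping:
            return


def produce(q, signal, items):
    for item in items:
        q.put(item)                 # enqueue first...
        signal.set()                # ...then signal; back-to-back sets collapse into one


def run_demo():
    queues = [queue.Queue(), queue.Queue()]
    signal, stop, out = threading.Event(), threading.Event(), []
    consumer = threading.Thread(target=consume, args=(queues, signal, stop, out))
    consumer.start()
    producers = [
        threading.Thread(target=produce, args=(queues[0], signal, ["a1", "a2", "a3"])),
        threading.Thread(target=produce, args=(queues[1], signal, ["b1", "b2"])),
    ]
    for p in producers:
        p.start()
    for p in producers:
        p.join()
    stop.set()
    signal.set()                    # final wake-up so the consumer notices the stop flag
    consumer.join()
    return out
```

The final, empty pass through the queues is the "extra iteration" discussed above: because two sets can collapse into a single wake-up, the consumer cannot trust the signal count and has to poll until it proves the queues are empty.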

-

One thing to think about is the possibility of organizing instruments into "subsystems", so the higher-level program controls subsystems and each subsystem deals with a limited number of instruments that work together. Then no part of the program is trying to juggle too many parts, and you can test the subsystems independently. It depends on whether your application can be organized into subsystems ("VacuumSystem", "PowerSystem"). I think that is called the "Facade Pattern" in OOP.

Personally, I usually find it worthwhile to give each instrument a VI running in parallel that handles all communication with it. Then any feature like connection monitoring (as Daklu mentioned) can be made a part of this VI; this can include things like alarm conditions, or statistics gathering, or even active things like PID control (the first instrument I ever did this way was a PID temperature controller). Think of this as a higher-level driver for the instrument, which the rest of the program manipulates.

You can use a class to represent or proxy for each subsystem or instrument; this class would mainly contain the communication method to the parallel-running VI. Daklu's "Slave loop" is an example. You can either write API methods for this class (as Daklu does) or send "messages" to this object (which I've been experimenting with recently).

1 point
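A rough text-language sketch of that structure, assuming Python threads in place of parallel-running VIs: each instrument gets a proxy object that owns a worker thread and a message queue, and a facade groups the proxies into a subsystem. Everything here (`InstrumentProxy`, `read_fn`, the fake gauge and pump values) is hypothetical and only illustrates the shape of the design.

```python
import queue
import threading


class InstrumentProxy:
    """Owns one worker thread that does all communication with one instrument."""

    def __init__(self, name, read_fn):
        self.name = name
        self._read_fn = read_fn      # hypothetical stand-in for the real driver call
        self._inbox = queue.Queue()
        self.readings = 0            # simple statistics gathered by the worker
        self._worker = threading.Thread(target=self._run)
        self._worker.start()

    def _run(self):
        # The worker is the only code that touches the instrument.
        while True:
            msg, reply = self._inbox.get()
            if msg == "stop":
                reply.put(None)
                return
            if msg == "read":
                self.readings += 1
                reply.put(self._read_fn())
            else:
                reply.put(None)      # unknown messages are ignored in this sketch

    def send(self, msg):
        """Message-style interface: post a message and wait for the reply."""
        reply = queue.Queue(maxsize=1)
        self._inbox.put((msg, reply))
        return reply.get()

    def read(self):
        """API-method-style wrapper around the same message."""
        return self.send("read")

    def stop(self):
        self.send("stop")
        self._worker.join()


class VacuumSystem:
    """Facade: the top-level program talks to this, not to individual instruments."""

    def __init__(self):
        self.gauge = InstrumentProxy("gauge", lambda: 1.2e-6)
        self.pump = InstrumentProxy("pump", lambda: "running")

    def status(self):
        return {"pressure": self.gauge.read(), "pump": self.pump.read()}

    def shutdown(self):
        self.gauge.stop()
        self.pump.stop()
```

`read()` and `send("read")` show the two options mentioned above side by side: a method-based API layered over the same message transport.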

-

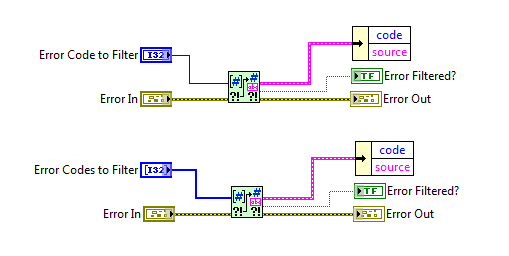

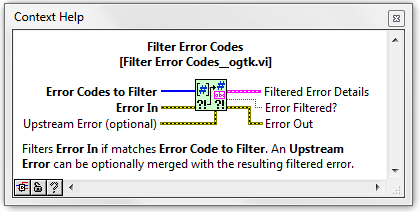

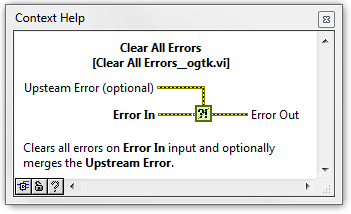

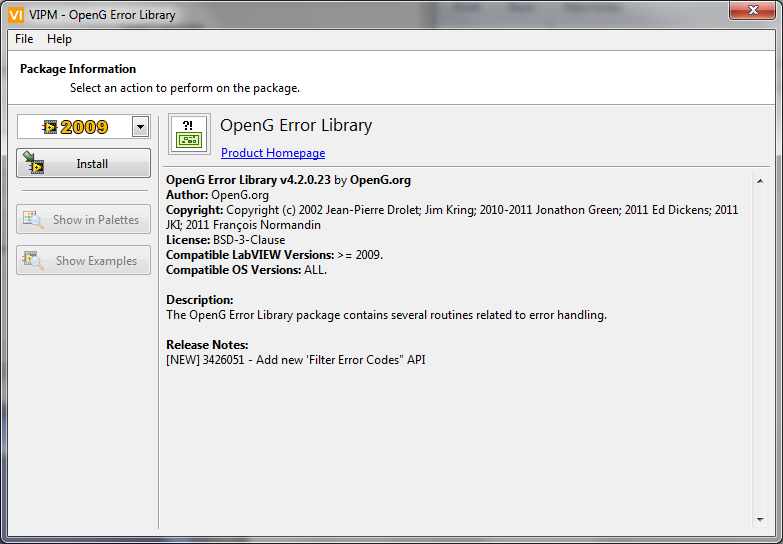

This package will be available for download through VIPM in a few days and covers some new VIs donated by JKI.

[NEW] 3426051 - Add new "Filter Error Codes" API

- New Palette
- New Filter Error Codes VIs that support Scalar and Array Error Code inputs
- New smaller Clear Errors VI (compared to NI) with additional Merge Upstream Error functionality

Kind regards

Jonathon Green
OpenG Manager

I would also like to thank Ed Dickens for reviewing this package for release.

1 point

-

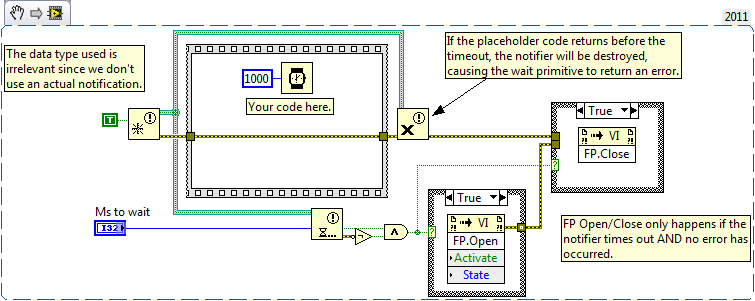

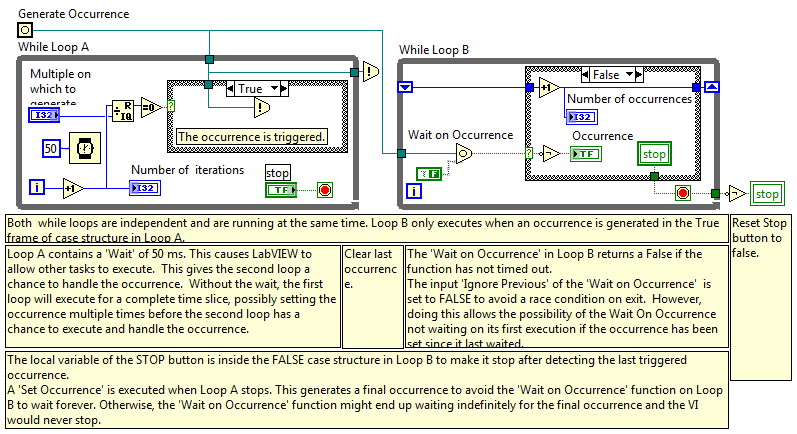

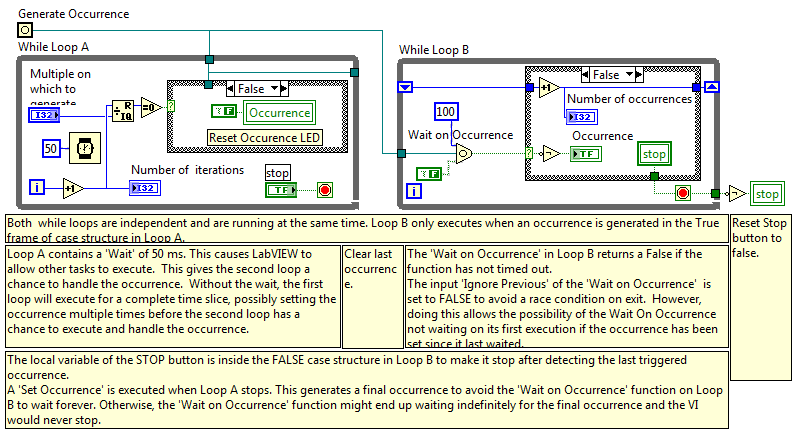

Thanks Rolf. As always, your knowledge of the lesser-known aspects of LabVIEW leaves me slack-jawed. (I had never heard of "LabVIEW Manager" and had to Google it.)

Here's the "Continuously Generate Occurrences" example that ships with 2009. Every 10th iteration of loop A generates an occurrence and causes loop B to execute. I thought it was interesting to see a Set Occurrence after loop A exits. I understand why it's needed; it's just not the first route I would have tried. (But as you can see from my earlier posts, I have very little understanding of this construct.) This causes loop B to get an extra iteration when you exit loop A.

I started wondering how to prevent the extra occurrence, so I added a timeout and removed the trailing Set Occurrence. On the surface it seems like that should work, but there's a subtle race condition. Because the WoO (Wait on Occurrence) has a timeout, it is possible for loop B to read the stop button, exit, and reset it before loop A has an opportunity to read it. With an infinite timeout that will never happen. I suppose you could put the button reset in a sequence structure and wire outputs from both loop A and B to it, but that feels kind of klunky to me. The other alternative is to set the timeout longer than the time between occurrences, so it will only time out once the occurrences have stopped being generated. That doesn't seem like a very robust solution.

I guess I'm left thinking they're okay for simple cases--such as Tim posted--where the occurrence will only fire once, telling the receiving loop to stop. But at the same time I can't think of any reason (outside of interacting with external code) I'd want to bother with them. Notifiers and queues have the same functionality, provide more options for expansion, and the overhead is insignificant.

1 point
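The role of the trailing Set Occurrence is easier to see in a text sketch. The following is a loose Python analogy, not the shipped example: `threading.Event` plays the occurrence, a second event plays the stop button, and `run_loops` is a made-up name. Loop B waits with no timeout, so the only thing that can wake it after loop A finishes is that one last set.

```python
import threading


def run_loops(iterations=30):
    occurrence = threading.Event()   # stand-in for the LabVIEW occurrence
    stop = threading.Event()         # stand-in for the stop button
    wakeups = []                     # one entry per iteration of loop B

    def loop_b():
        while True:
            occurrence.wait()        # infinite timeout, as in the shipped example
            occurrence.clear()
            wakeups.append(stop.is_set())
            if wakeups[-1]:
                return               # stop was requested: exit loop B

    b = threading.Thread(target=loop_b)
    b.start()

    # Loop A: generate an occurrence on every 10th iteration.
    for i in range(iterations):
        if i % 10 == 9:
            occurrence.set()

    # Loop A has exited. Request the stop first, then set one last time so
    # loop B wakes up, sees the stop request, and exits. Without this trailing
    # set, loop B would sleep forever once it had consumed the last occurrence.
    stop.set()
    occurrence.set()

    b.join(timeout=5)
    return len(wakeups), b.is_alive()
```

The number of loop B iterations is not fixed: sets that arrive before loop B wakes collapse into one, so with 30 iterations it runs between one and four times. What the trailing set guarantees is only that loop B runs at least once after the stop request and therefore terminates.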