fabric

Members-

Posts

29 -

Days Won

2

Everything posted by fabric

-

I've enjoyed this hack for many years, but noticed it is not working in LV2023Q1. See here for problem description: https://forums.ni.com/t5/LabVIEW/Darren-s-Weekly-Nugget-05-10-2010/m-p/4360614/highlight/true#M1280554

-

Anyone else OCD about alignment and positioning in block diagrams?

fabric replied to Sparkette's topic in LabVIEW General

FWIW, here is an idea I recently posted that addresses one of the major sources of unnecessary bends...

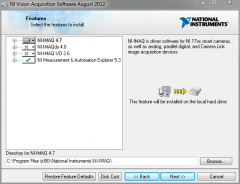

Actually it is a little better than you think... If you install the Vision Acquisition Software (i.e. the dev toolkit) then you don't need a license at all, provided you UNCHECK the IMAQdx component... Magic! Of course, this will limit you to basic Image Management functions, but if that's all you need then you don't need to pay half a year's salary for a full Vision license.

A handy side effect of this is that you can acquire images using IMAQ with a 3rd party CameraLink board without any license (since camera link uses plain IMAQ, rather than IMAQdx), but if you use a GigE camera then you need IMAQdx and therefore you need a license. Something to think about if you are building a vision system and have a choice of hardware interfaces!

I tested this a little while back. Let's say you have a 1024x1024 RGB image:

- The IMAQ control uses 4MB to display the image, as expected since the underlying data type is u32 (4 bytes per pixel). Pretty efficient.
- The picture control uses 7MB, which includes 4MB to display the image plus an additional 3MB for the input data.

Why 3MB for the data? Well, the picture data is smart enough to know that an RGB image only has three bytes per pixel that are worth encoding. Note that the IMAQ control doesn't have the overhead for the input data since IMAQ images are by-ref.
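The memory figures above can be checked with a few lines of arithmetic. This is only a sketch in Python (not LabVIEW); the function and variable names are made up for illustration, and it assumes "MB" in the post means 1024 x 1024 bytes.

```python
MIB = 1024 * 1024  # assumption: "MB" in the post means 2**20 bytes


def image_bytes(width, height, bytes_per_pixel):
    """Size of an uncompressed image buffer in bytes."""
    return width * height * bytes_per_pixel


width = height = 1024

# Display buffer: u32 pixels, 4 bytes each (both controls need this).
display_buffer = image_bytes(width, height, 4)

# Picture control input data: only the 3 RGB bytes per pixel are encoded.
picture_input = image_bytes(width, height, 3)

imaq_total = display_buffer                     # by-ref image, no input copy
picture_total = display_buffer + picture_input  # display buffer plus input data

print(imaq_total // MIB, "MB for the IMAQ control")
print(picture_total // MIB, "MB for the picture control")
```

The numbers come out to 4 and 7, matching the measurements quoted in the post.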

-

The Vision Common Resources install is FREE and allows you to use the IMAQ image control in your application. You don't get any image processing but it is fine for loading images and displaying them with all the ROI tools. I believe the only bit you need a license for is IMAQdx...

-

-

You forgot "Inability to use in arrays"

-

Yes - my compiled code is separated... By the way, my current workaround for this is the old "pre-save, change, check, re-save" routine. Pre-saving the project before making any changes is starting to become second nature before editing any enum typedefs. Checking is a pain if you're paranoid, but usually I can tell if morphing has occurred by looking at any one instance. There seems to be an all-or-nothing pattern here... If there is suspicion, then I have found that saving the enum and then reverting the entire project usually does the trick, i.e. the morphing seems to be an edit-time effect and generally does not occur if the project is re-opened with a modified enum. (At least, this is what I have seen when adding values or deleting unused values...)

-

Yeah. I guess empathy was what I was shooting for. There might very well be something in this. Today I added a value in the middle and I think I often do that when the morphing occurs.

-

Grrr... It's happening more often now. I'm sure I've read about it here before but maybe it's time to table the issue again:

- I'm working on a large project, and I'm running LV2012 32 and 64 bit on Windows.
- I have a typedef enum inside a class. The enum happens to be the sole member of the class private data.
- The enum is public and is used here and there all over my application.
- I decide to add another value to the enum and ... BAM! ... All instances throughout my project are reset to the first value of the enum.

Kind of defeats the purpose of the enum, don't you think?!

What do other people do?

- Define all enums fully during the design phase and never change them again?
- Label each instance of the enum with the desired value to provide some insurance against morphing?
- Don't use typedefs and update them all manually?

-

A simple solution is to put a collection of buttons in a cluster and set to arrange horizontally. Then you can show/hide buttons according to context using property nodes. Just use an event handler to detect clicks. How much easier can it be??

-

Organizing Actors for a hardware interface

fabric replied to Mike Le's topic in Object-Oriented Programming

Mike Le wrote: "This makes sense, but does that mean EVERY time the UI queries a setting, it has to go through the Controller to query the Hardware Actor?"

The preferred way to think about your actors is that they post information, rather than requesting it. That turns your issue around slightly:

- The hardware actor stores its own settings. If they change then it notifies someone who cares... probably the controller, which in turn notifies the UI.
- The UI then does what it wants with the information.
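To make the "post, don't request" idea concrete, here is a rough sketch in Python rather than LabVIEW. All class and method names are hypothetical, and plain synchronous callbacks stand in for actor messages, which in a real actor system would be sent asynchronously.

```python
from typing import Callable, Dict, List

Listener = Callable[[str, object], None]


class HardwareActor:
    """Owns its settings and posts every change to whoever subscribed."""

    def __init__(self) -> None:
        self._settings: Dict[str, object] = {}
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        self._listeners.append(listener)

    def set_setting(self, name: str, value: object) -> None:
        # Only post when the stored value actually changes.
        if name in self._settings and self._settings[name] == value:
            return
        self._settings[name] = value
        for listener in self._listeners:
            listener(name, value)


class UI:
    """Keeps its own copy of what it was told; never queries the hardware."""

    def __init__(self) -> None:
        self.displayed: Dict[str, object] = {}

    def show_setting(self, name: str, value: object) -> None:
        self.displayed[name] = value


class Controller:
    """Cares about hardware changes and forwards them to the UI."""

    def __init__(self, hardware: HardwareActor, ui: UI) -> None:
        self._ui = ui
        hardware.subscribe(self._on_hardware_changed)

    def _on_hardware_changed(self, name: str, value: object) -> None:
        self._ui.show_setting(name, value)


hardware = HardwareActor()
ui = UI()
controller = Controller(hardware, ui)
hardware.set_setting("exposure_ms", 20)  # hypothetical setting name
```

After the last line the UI already holds the new value, so it has no reason to go back through the controller to ask for it.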

Sequencing alternatives to the QSM

fabric replied to PHarris's topic in Application Design & Architecture

Does the sequence need to change dynamically? If not, then a chain of sub VIs is hard to beat :-)

- 17 replies

Tagged with: qsm, queued state machine (and 2 more)

-

I have done something similar using win API calls:

- Use an event structure (with timeout = -1) to detect the original click on the thing you want to drag.
- When a Mouse down is detected, store the starting position and toggle the event timeout to ~10ms.
- In the Timeout case of the event structure, do two things:
  - Poll the mouse button (e.g. user32:GetAsyncKeyState) to know when the drag has ended.
  - Manually move the floating panel into position (I use user32:SetWindowPos).
- When the mouse button is released, reset the timeout back to -1.
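The steps above can be sketched as a small state machine. This is Python, not LabVIEW, and every name in it is hypothetical; the cursor position and button state are passed in as arguments so the logic can be followed (and tested) without touching the Windows API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[int, int]

WAIT_FOREVER = -1  # event timeout while idle
POLL_MS = 10       # event timeout while a drag is in progress


@dataclass
class DragState:
    timeout_ms: int = WAIT_FOREVER
    grab_offset: Optional[Point] = None  # cursor minus panel origin at mouse down


def on_mouse_down(state: DragState, cursor: Point, panel_origin: Point) -> None:
    """Mouse down case: remember where the panel was grabbed, start polling."""
    state.grab_offset = (cursor[0] - panel_origin[0], cursor[1] - panel_origin[1])
    state.timeout_ms = POLL_MS


def on_timeout(state: DragState, cursor: Point, button_down: bool) -> Optional[Point]:
    """Timeout case: return the new panel origin, or None if there is nothing to move."""
    if state.grab_offset is None:
        return None
    if not button_down:
        # Drag has ended: stop polling and go back to waiting for events.
        state.grab_offset = None
        state.timeout_ms = WAIT_FOREVER
        return None
    return (cursor[0] - state.grab_offset[0], cursor[1] - state.grab_offset[1])


state = DragState()
on_mouse_down(state, cursor=(110, 120), panel_origin=(100, 100))
new_origin = on_timeout(state, cursor=(150, 160), button_down=True)
```

In a real Windows program, `button_down` would come from polling user32 `GetAsyncKeyState` for the left mouse button, and the returned origin would be handed to user32 `SetWindowPos`, as described in the post. That wiring is left out here because it only runs on Windows.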

-

Just years? Excellent!! I was thinking it would be somewhere between decades and never...

-

Really?! Then can I put on a request for "CTRL +" and "CTRL -"? It works amazingly on adobe apps, and after a few hours of icon editing I'm always disappointed to come back to LV and find that my muscle memory has disappointed me. Reminds me of all those touchscreen projects... After a few hours of testing I've been caught out tapping the "normal" monitor back at my desk...

-

Best practises: organize large application

fabric replied to downwhere's topic in Application Design & Architecture

I'd say the single best thing you can do to fix all (yes, all!) the issues you mentioned is to restructure your code with the intent of simplifying the dependency tree and reducing cross linking.

If many of your libraries are statically dependent on other libraries then you can quickly end up with a very complex dependency tree. This is compounded when using classes since loading any class member causes the entire class to load, as well as any owning libraries. (Sticking a bunch of classes in a .lvlib can cause major IDE slowdowns...)

Many of us have seen great benefit in using interfaces to abstract the low-level layers from the application layers. This is a big plus for multi-developer environments and for improving load time and IDE performance. You might want to read up on the Dependency Inversion Principle.

A few lava links:

- http://lavag.org/top...d-vi-templates/
- http://lavag.org/top...existing-class/

And you might want to vote for this idea over on the blue side - you could be vote #100! http://forums.ni.com...s/idi-p/2150332
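For anyone unfamiliar with the Dependency Inversion Principle, here is a minimal illustration. It is written in Python rather than LabVIEW and all names are invented for the example; the point is only that the application layer depends on an interface, and the low-level code depends on that same interface instead of the other way round.

```python
from abc import ABC, abstractmethod


class Camera(ABC):
    """Interface that the application layer depends on (hypothetical name)."""

    @abstractmethod
    def grab(self) -> bytes:
        """Return one frame of raw image data."""


class Application:
    """High-level code: knows only the interface, never a concrete driver."""

    def __init__(self, camera: Camera) -> None:
        self._camera = camera

    def snapshot_size(self) -> int:
        return len(self._camera.grab())


class SimulatedCamera(Camera):
    """Low-level implementation; it depends on the interface, not on Application."""

    def grab(self) -> bytes:
        return bytes(16)  # 16 zero bytes stand in for a frame


app = Application(SimulatedCamera())
frame_size = app.snapshot_size()
```

Because `Application` never names `SimulatedCamera`, loading or editing the application layer does not drag in the concrete driver code, which is the effect on the dependency tree described above.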

Cross-posted to the blue side: http://forums.ni.com/t5/LabVIEW/VI-object-cache-shared-between-32-and-64-bit/td-p/2230316

-

Right.... but if there was a separate VI obj cache for each platform, then most VIs would NOT need to be recompiled when switching versions. At least that's how I think it should be! My current project contains around 5000 VIs, so the unwanted recompile is a bit of an inconvenience...

-

Maybe... How do I do that?? (I'm running LV 2012, by the way)

-

Ok... here's my test:

- Open LV32
- Make a new VI, save it
- Close LV32
- Check timestamp of "objFileDB.vidb" file.
- Open LV64
- Open previous VI and run it (but don't save it)
- Close LV64
- Check timestamp again and notice that it has changed.

If I open my VI in a different version of LV but don't run it, then the timestamp of my obj cache file doesn't change. Conclusive? Maybe not... but interesting!
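The two "check timestamp" steps can be scripted if you want to repeat the test. This is a small Python sketch with hypothetical helper names; the location of "objFileDB.vidb" is not given in the post, so the path is left for the caller to supply.

```python
from pathlib import Path


def read_mtime_ns(path: Path) -> int:
    """Return the file's last-modified time in nanoseconds."""
    return path.stat().st_mtime_ns


def was_modified(path: Path, earlier_mtime_ns: int) -> bool:
    """True if the file's timestamp differs from an earlier reading."""
    return read_mtime_ns(path) != earlier_mtime_ns


# Usage outline (the cache path must be filled in for your own machine):
#   cache = Path("...") / "objFileDB.vidb"
#   before = read_mtime_ns(cache)      # after closing LV32
#   ... open LV64, open and run the VI without saving, close LV64 ...
#   print(was_modified(cache, before))
```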

-

I am currently working on a large project that needs to be deployed in both 32-bit and 64-bit flavours. This means I need to periodically switch between installed LV versions to build. It *seems* that if I have been working in 32-bit LV, and then I close everything and reopen my project in 64-bit LV, everything needs to recompile... even if I had only changed a few items since I last worked in 64-bit. It appears that while LV does keep a separate VI Object Cache for each LV version, it does NOT keep a separate cache for the 32-bit and 64-bit versions. Is this really the case?