-

Posts

1,068 -

Joined

-

Last visited

-

Days Won

48

Everything posted by mje

-

Good point, I was totally wrong on that part. Not even going to try to fake my way through justifying what I wrote. I guess that's the magic of Windows on Windows: the wizardry that happens behind the scenes allows you to make calls like that and have them just work automatically.

I've not really dealt with this. The overlapped calls were just something I ran into a few times but never attempted, because synchronized access worked just fine in either case.

So basically there is no real use for what I've outlined above. I can just throw my cluster in a conditional disable structure and be done with it. Depending on the IDE, it will pick the right cluster and then we're off to the races. Well, that was far easier than I thought.

-

It's not so rare that I deal with operating system calls directly via the call library function node (CLFN), and I'm sure many of us break out these calls from time to time. When dealing with pointers, LabVIEW conveniently allows us to declare a CLFN terminal as a pointer sized integer (SZ) or an unsigned pointer sized integer (USZ). The behavior of such terminals is that at run time the value that is passed in is coerced to the right size depending on the architecture of the host operating system.

For those who are unfamiliar, this is not something that can be resolved beforehand in all cases. A 32-bit LabVIEW application may indeed be run on a 64-bit operating system, and it's not until run time that this can be resolved. When using SZ or USZ terminals, LabVIEW treats them all as 64-bit numbers on the block diagram, and coerces them down to 32 bits if necessary, depending on the host operating system, when the node executes. To be clear, this works excellently.

But what to do when you need to pass pointers around as part of non-native types? Take for example the OVERLAPPED structure, which bears the following typedef:

    typedef struct _OVERLAPPED {
      ULONG_PTR Internal;
      ULONG_PTR InternalHigh;
      union {
        struct {
          DWORD Offset;
          DWORD OffsetHigh;
        };
        PVOID Pointer;
      };
      HANDLE hEvent;
    } OVERLAPPED, *LPOVERLAPPED;

Not to get into details of the Windows API, but the ULONG_PTR, PVOID, and HANDLE types are all pointer sized values, while the DWORD is a "double word" and always a 32-bit value. So if we know we're dealing with a 64-bit host operating system, one possible way to represent this structure in LabVIEW is a cluster of the form:

    { U64 Internal; U64 InternalHigh; U32 Offset; U32 OffsetHigh; I64 hEvent; }

This assumes that you'd rather interact with the union as a pair of Offset/OffsetHigh values rather than a single Pointer value. On a 32-bit host operating system though, we need a different cluster:

    { U32 Internal; U32 InternalHigh; U32 Offset; U32 OffsetHigh; I32 hEvent; }

So what's the problem here? Well, basically we need to duplicate code for each of these situations. If I have a string of CLFN calls, I need to have one case for each host OS type. This seems error prone to me because the two cases would be identical apart from the typedef I'm stringing around for the cluster/struct.

So at long last, do you think there would be value in being able to directly have a pointer sized type we can put in a cluster?

    { USZ Internal; USZ InternalHigh; U32 Offset; U32 OffsetHigh; SZ hEvent; }

The behavior of these would be similar to how they behave in the actual CLFN. For all intents and purposes in LabVIEW they're 64-bit numbers. However, when passing through the CLFN, their size is coerced when necessary. I'd also go so far as to expect their size to be properly coerced when, for example, typecasting, flattening, etc.

Am I way off base here or would there be an actual use case for this?
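For readers who want to see the size difference outside LabVIEW, here is a minimal sketch using Python's `ctypes`. It is only an illustration of the layout discussed above, not anything LabVIEW does internally. The class names are made up for this example, and using `c_size_t` for ULONG_PTR and `c_void_p` for HANDLE is an assumption that holds on common platforms. The sizes follow the pointer size of the running process.

```python
import ctypes


class OffsetPair(ctypes.Structure):
    # DWORD is always 32 bits, regardless of pointer size.
    _fields_ = [("Offset", ctypes.c_uint32), ("OffsetHigh", ctypes.c_uint32)]


class OffsetOrPointer(ctypes.Union):
    # The union is as large as its largest member: 8 bytes either way.
    _fields_ = [("offsets", OffsetPair), ("Pointer", ctypes.c_void_p)]


class Overlapped(ctypes.Structure):
    # ASSUMPTION: c_size_t stands in for ULONG_PTR and c_void_p for HANDLE;
    # both are pointer sized on common platforms.
    _fields_ = [
        ("Internal", ctypes.c_size_t),
        ("InternalHigh", ctypes.c_size_t),
        ("u", OffsetOrPointer),
        ("hEvent", ctypes.c_void_p),
    ]


POINTER_BYTES = ctypes.sizeof(ctypes.c_void_p)
OVERLAPPED_BYTES = ctypes.sizeof(Overlapped)

if __name__ == "__main__":
    print(f"pointer: {POINTER_BYTES} bytes, OVERLAPPED: {OVERLAPPED_BYTES} bytes")
```

The three pointer sized members grow or shrink with the process, while the union stays at 8 bytes, which is why the 32-bit and 64-bit clusters above differ only in the Internal, InternalHigh, and hEvent fields.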

-

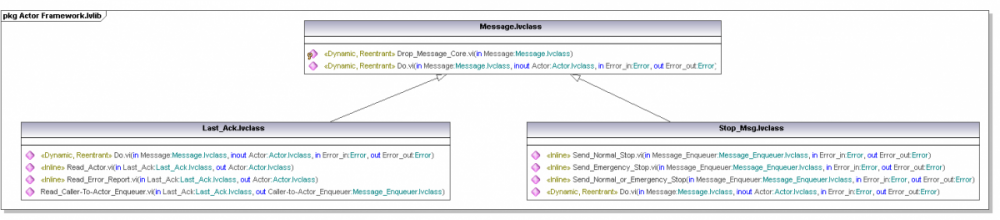

Dataflow outputs and ParameterDirectionKind

mje replied to PaulL's topic in Object-Oriented Programming

Hah, yeah. Error I/O omitted for the sake of brevity (read: laziness).

Declarations can be looooooooong when you start enumerating the terminals. Often to the point where I don't see the point of a diagram at all: Do you really think this would be readable if this diagram were anything but a simple class? Really? When 4+ terminals start to be listed in any method, a class takes up the whole width of a reasonably sized diagram. Have a class where most terminals on your 4x4 are used up? Good luck with that!

To be honest, I find myself using pseudocode more than UML class diagrams. I end up with something that looks like a mutant offspring of C++ and UML. It's faster and just as legible when looking at any individual class, but it does lose the ability to easily diagram class relationships.

I always specify class I/O because not all methods have both in and out; see the Message.lvclass hierarchy above as an example.

Anyways, really trying hard not to derail this. Sorry Paul! But I do believe the desire to use inout versus explicit in/out, as well as, for example, Mikael's desire to move class terminals to stereotypes, are all related to the topic at hand: UML can be way too verbose to be useful as a diagram in my opinion, but I absolutely recognize the need for the verbosity. Perhaps these competing forces are why I find myself never really getting serious about UML...

-

Dataflow outputs and ParameterDirectionKind

mje replied to PaulL's topic in Object-Oriented Programming

I'm in a very similar school. I think "return" values are meaningless in LabVIEW, so I use in/out/inout exclusively. Disclosure: my UML use isn't exactly rigorous. I use it for my own personal mapping of our projects; it's not used in official documentation or anything.

My usage of in/out is pretty basic, corresponding to control/indicator terminals. I don't think this needs any real explanation. As for "inout", after a few iterations (including ditching it completely only to revive its use) I've managed to settle on only using this for terminal pairs for which type propagation is guaranteed. The obvious example would be dynamic dispatch terminals, but there are other cases (explicit use of the PRTC primitive).

Random stream of thought that landed me here. Take for example:

    Foo(in MyClass_in:MyClass, out MyClass_out:MyClass)

(Pardon the pseudo code.) I don't like this for two reasons: it doesn't make it clear there's a type propagation between MyClass_in and MyClass_out, and it doesn't show the dynamic dispatch relationship. My solution is to use the "inout" enumeration to show that indeed, there is a relationship between input and output:

    Foo(inout MyClass:MyClass)

Here this implies a pair of terminals in LabVIEW, but still doesn't imply dynamic dispatch, nor should it (again, PRTC comes to mind). So for DD I adopt the use of a <<Dynamic>> stereotype. Thus the following three prototypes are indeed distinct:

    <<Dynamic>> Foo(inout MyClass:MyClass)
    <<Dynamic>> Foo(in MyClass_in:MyClass, out MyClass_out:MyClass)
    Foo(in MyClass_in:MyClass, out MyClass_out:MyClass)

Now straight away, I admit this isn't ideal. One point I don't like is how my use of the single inout parameter maps to a pair of terminals in LabVIEW. I also realize propagation is not the intention of "inout". But I see no other way of, for example, distinguishing a prototype function that has a pair of dynamic dispatch terminals from one that has a pair of class terminals of which only the input is dynamic dispatch (see for example state machine implementations, or more recently the actor framework message core).

Can we extend/restrict the ParameterDirectionKind enumeration? I have not found a way to do so in the tool I use (UModel) and I'm honestly too much of an amateur with regards to UML to know. I'm thinking along the lines of XSL: I'd really like to apply a LabVIEW profile which mutates the enumeration to something like:

    in, out, dynamic_in, dynamic_out, prtc_in (?), prtc_out (?)

Nomenclature aside, I see a use for all six of them. Ideally I don't think inout and return apply in LabVIEW.

Also, while I don't mean to derail, in addition to the <<Dynamic>> stereotype, I also have ones for <<Reentrant>> and <<Property>>. I don't use property nodes but I recognize they exist and feel defining properties at the design level is important if the class indeed implements them. Still on the fence regarding a <<Deprecated>> stereotype.

-

type def automatic changes not being saved in LV2013

mje replied to John Lokanis's topic in LabVIEW General

Interesting. I've been dealing with this for years on one of our projects. The project has seen major development bouts in versions 2009, 2011, and now 2013, but also went through the intermediate versions, and some of the code is legacy which pre-dates 2009.

The only difference with my problem is that once saved on a computer it is good: the full project can be opened/closed as many times as you like without anything appearing dirty. Commit the changes though and update your source on another machine and you're SOL: dozens of VIs (not ctl or lvclass files) give the dreaded and oh-so-helpful "Type definition modified" message.

It wreaks havoc on source code control. I've lost the ability to develop on multiple machines for that project because it's just impossible to untangle the legitimate changes from the other ones. To allow multiple developers, each library is "owned" by a person and never worked on in tandem. Major libraries are pushed not through source code control but through VIPM repository updates. I *hate* this solution, but I've literally invested hundreds of hours into trying to make LabVIEW work right and have frankly given up.

I have big projects which are fine in LabVIEW, but I also have ones like this. I'm a firm believer in LabVIEW for large application development, but shenanigans like this make it a hard sell at times. I'm at the point in my career where a language is frankly just the grammar I use to get my ideas down. I may be more proficient in LabVIEW now, but that's just because it's been the tool du jour for the last few years. It's the development environment I care more and more about, and I'm not sure NI is winning that battle. To be fair, they have made fantastic advances, and it's not like other IDEs/languages are all rainbows and such.

Anyways, sorry for the rant. You hit a nerve: this is one standing issue I have no patience for, and I am a very patient man. Hopefully this reply makes some semblance of sense; it's late and a little hard to proof through lava's mobile interface.

-

LabVIEW 2013 Favorite features and improvements

mje replied to John Lokanis's topic in LabVIEW General

Ditto. It's really hard for anything NI offers on the FPGA level to compete with our ASIC, which means we tend to re-purpose and hack a lot of our old boards when prototyping next generation or new products. myRIO may be cost effective enough to consider using. I really want to do more than just dabble in LabVIEW RT/FPGA.

-

Yes, there is a difference. By saving the queue refnum and re-using it you avoid the overhead of having to do a name based look-up every iteration. Depending on the frequency of your loop this may or may not be significant. Regardless, obtaining a refnum before a loop, using that refnum over and over again on each iteration, then finally releasing the refnum after you're done with it is pretty standard practice. You may also consider passing the refnum directly into the subVI and not having to obtain it in the first place.

-

Norm, that is a fantastic walk through. I can't thank you enough. Cheers!

-

Indeed. I have some proof of principle code lying around that takes XML data from one of our applications and produces an HTML report with embedded SVG for things like chart data (via some stylesheet transform-fu). Slap that in the right browser and it was beautiful. Not so much that the graphics looked that good (it was only a proof after all), but beautiful from an elegance standpoint: you had a portable (single file) report with all the styles and graphics directly embedded, which could be rendered potentially on any target with any resolution to high fidelity.

I'm so on board with what is possible with an overhaul of the picture palette. I find most of my displays now revolve around pictures. Even my graphs really only use the graph/chart element for the cheap anti-aliasing and scale rendering, then a whole mess of business dropped into the plot image layers. Not that I'm belittling graphs and charts: it's so nice to be able to drop an array of doubles/clusters/whatever into a control and just have a plot work with no worry. However, the rest of the functional elements are a little rough around the edges. I don't really like the way cursors, annotations, digital displays, etc. are implemented, so I usually roll my own with supplementary plot images and mouse tracking.

-

Hi Norm, that's great news! It's just a combination of the existing line/text/shape operations, there's no image or bitmap data. I can't imagine there would be more than 100 elements in the string since I'd be handling the translations at a pretty low level before too many of the picture strings are concatenated. Also, LV2013.

-

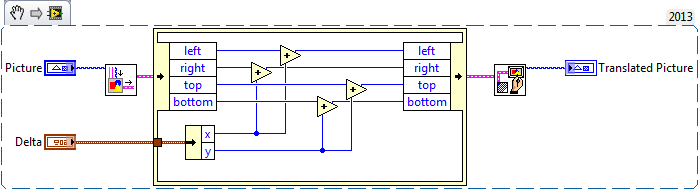

I have a situation where I have some pre-rendered picture data and I wish to translate it by some number of pixels. This is what I came up with: Problem is though the intermediate pixmap format completely flattens the data, thus removing any trace of vector based content that was in the original picture (blue wire). Is there some operation I can do that will translate all coordinates in an existing picture string?

-

I'm just glad we can identify what's causing the issue. Hard to create work-arounds if you don't even know what to work around. I imagine manually setting the scale marker values array may fix the problem (then again it may also trigger it just the same). Rendering grid lines manually via the plot image layers also comes to mind if one doesn't want a grid at each division. Reported to R&D with CAR 427628.

-

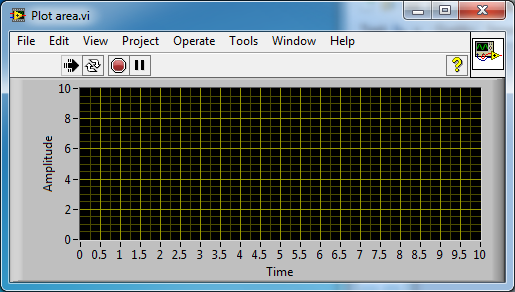

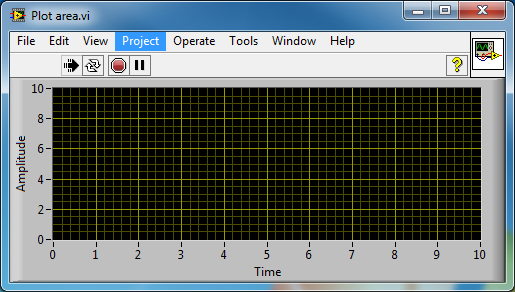

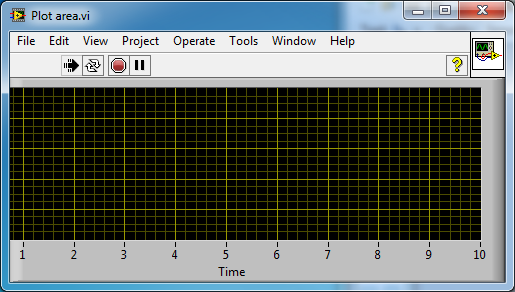

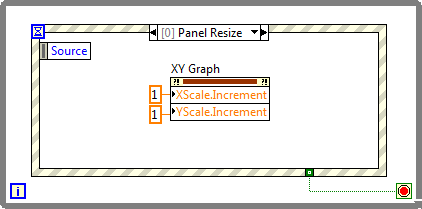

I've had a standing defect on one of our products for a few years now where under some situations one of our plots would slowly march off screen on each window resize event. Well, I finally tracked this one down. Attached is code to reproduce (LV 2013): Plot area.vi

If you open the VI and run it, then proceed to do multiple window resize events, you'll notice the left edge of the plot area slowly marches over. My recommendation is to maximize/restore/repeat a few times to see the effect. Take for example this series of images:

The offending code is simple, I'm setting the scale increment on a resize event:

If the VI is edited such that the property node is removed, the behavior vanishes. I wouldn't expect setting this property to cause the issue; I think I smell a bug in here somewhere. Confirmation?

-

Yep, that's pretty much what I was planning on doing should I get the time. I think it would be rewarding as well, but I've done a few things like this already in the app so I'm well aware of the time sink. I hope to get to it, but there are far more important things to tackle if it boils down to having to invent something like that. And talk about a nugget on that GDI resize. I love it!

-

I'm looking to create a UI element something like so: That is, I want to present a bunch of images in a pulldown menu for selection. The number of selections could potentially be large, so a scroll bar would have to appear depending on size etc.

I don't particularly care for the built-in picture rings because of their selection mechanism (bringing up a poorly sampled square thumbnail for preview), let alone that a non-system styled control will really look out of place on my UIs. It seems half of this application's UI implementation basically boils down to getting creative with picture controls, and boy can you do a lot with them.

Before I have to relegate this feature's importance to "only if everything else is done", does anyone have any bright ideas that may make this reasonably trivial to implement? Win32/.NET objects are OK for this application, but I don't think I have time to bust out any C#/WPF level programming for creating my own widget; otherwise I'd likely continue down the well beaten path of abusing picture controls and bending them to my will.

-

Built Application Occasionally Freezes on Windows Open/Save Dialog

mje replied to mje's topic in LabVIEW General

Well. This morning I got into work, fired up my application and tried to open a document, and sure enough the dialog froze. Excellent, I'll use procmon to try to figure out what's wrong. Right. Open my app. Fire up procmon. Start capturing. Try opening a document. And everything works.

I. Just. Don't. Know. I reproduced this bug dozens of times over the last few days; in fact I have not been able to use my application on our workstation all week due to the bug. And on the first honest attempt at capturing information about where things are going wrong, the bug vanishes. Sigh...

Well, procmon did capture literally tens of thousands of events when I triggered the dialog, so at least if the problem creeps up again I now have a needle in a haystack to look for, rather than nothing at all to go on.

Still not sure how to debug this next time a customer has this issue. I really want to know if it's something I'm triggering from my LabVIEW code. I suspect not, since it crept up on a system that had functioned for over a year, and the problem survives reinstalls, reboots, and clearing any serialized data that I (directly) access.

-

Built Application Occasionally Freezes on Windows Open/Save Dialog

mje replied to mje's topic in LabVIEW General

Nice read indeed, thanks! More info:

Renaming the executable "fixes" the problem. If I run the application once I've renamed the exe and I present the open dialog, it appears at the default size, in the default position. However, shutting the application down, renaming it back to the original name and trying again results in the same problem.

Changing the path of the executable (but not the name) has no effect. If I rename the folder containing the application, the freezing behavior persists. It appears the serialized data is tied to the image name.

Uninstalling the application, ensuring the folder which contained the application is gone, rebooting, then reinstalling does not clear the problem either. So it appears whatever data is being serialized is not removed during uninstallation. I'll add that our installer/uninstaller is created by a third party application due to a defect in the NI product which prevents us from using it.

At this point I'm hesitant to call renaming the executable a workaround: yes, it clears the bug, but should a customer inquire about this, is telling them to go to their PF folder and rename an EXE really a good "solution"? Obviously no... I'll post here if further spelunking turns up clues and/or fixes.

-

Built Application Occasionally Freezes on Windows Open/Save Dialog

mje replied to mje's topic in LabVIEW General

If only it were that easy. The first thing I did was unmap all of my network locations and disconnect everything I could with a "net use" command. We have a "My Documents" library which is remote and which I left intact.

Out of desperation I've also run the System File Checker tool (sfc /scannow from an elevated command prompt) but nothing was found. Later today I'll try to do a full uninstall, clean the application's file system location out manually if it remains, and reinstall in hopes that whatever that serialized information is will get nuked.

An hour of google searching has turned up no other hints. I can't find any documentation on what data is stored, other than people pointing out the same thing as me: that data must be stored somewhere and it appears to be application and folder specific.

-

Built Application Occasionally Freezes on Windows Open/Save Dialog

mje replied to mje's topic in LabVIEW General

Well, this is interesting: on my development computer, my built application has been rendered unusable by this bug. The word "built" is important because that means nothing has changed with respect to the logic that makes the call to the dialog.

I now start my application, do a File Open command, and the dialog freezes. The behavior persists even after a full system reboot. It is obvious that some information is serialized permanently for the dialog because, for example, it opens to a non-default size. I'm not in control of this information, so either Windows or LabVIEW is serializing it, and something about it is corrupt and freezing the modal dialog, in turn freezing my application.

Argh. This is going to be annoying to debug since it's a released application with no debug info.

-

Returning to state where you left off

mje replied to GregFreeman's topic in Object-Oriented Programming

Perhaps. Many ways to skin the state machine. My preferred implementation is a loop with two shift registers: one for the Context and one for the State. Each iteration of the loop calls the State:Act method, which has a dynamic dispatch in but static dispatch out, as well as a pair of Context in/out terminals. The goal of State:Act is to operate on the Context by whatever means makes sense for the State, and to return what State will be used on the next iteration.

The fact that the output is static dispatch means the method is free to switch out any State on the output: while an Act implementation may return the same State out as was passed in, it can also return any other state depending on whatever conditions apply.

For your situation, you'll likely be dealing with a stack of states. Store the stack where you like, the idea being that at some time an Act implementation will decide that it's reached a terminal iteration and has no next state, at which point it may wish to operate on the stack, returning the State popped from the top as the next State.

-
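The State:Act loop from the reply above can be sketched in text form. This is a Python analogy under stated assumptions, not the LabVIEW implementation: the class names (`CountTo`, `Interrupt`, `Done`), the dictionary used as the Context, and keeping the stack inside the Context are all choices made for this example.

```python
class State:
    def act(self, context):
        """Operate on the context and return (context, next_state)."""
        raise NotImplementedError


class Done(State):
    """Hypothetical marker state that tells the loop to stop."""

    def act(self, context):
        return context, self


def next_from_stack(context):
    # A state with no successor of its own resumes whatever was pushed last.
    stack = context["stack"]
    return stack.pop() if stack else Done()


class CountTo(State):
    def __init__(self, limit):
        self.limit = limit

    def act(self, context):
        context["count"] += 1
        context["log"].append(f"count {context['count']}")
        if context["count"] < self.limit:
            return context, self          # same State again next iteration
        return context, next_from_stack(context)


class Interrupt(State):
    """Runs for one iteration, then hands back to the stacked State."""

    def act(self, context):
        context["log"].append("interrupt")
        return context, next_from_stack(context)


def run(context, state, max_iterations=100):
    # The two loop variables play the role of the two shift registers.
    for _ in range(max_iterations):
        if isinstance(state, Done):
            break
        context, state = state.act(context)
    return context
```

For example, pushing `CountTo(3)` onto the stack and starting the loop in `Interrupt()` runs the interrupt once and then resumes counting, which is the "return to where you left off" behavior the thread asks about.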

Returning to state where you left off

mje replied to GregFreeman's topic in Object-Oriented Programming

This is my default implementation for state machines. Works wonderfully.

-

The system controls resize depending on font scale settings as well, even if they don't have text (I'm looking at you, booleans with custom decals and no text). I don't have a solution to this; my only retort to our testers has been to "deal with it". Crank the font sizes up to 150% and most of my UIs become an overlapped mess of text and controls, unfortunately.

-

The slowdown was probably the biggest one, though I believe that was addressed some time ago. I'm still not convinced property nodes were the cause so much as poor architecture on my part; the project that exhibited the behavior was old and refactoring has since improved it considerably.

I have also seen properties "go bad", wherein they turn black and become unwirable: here and here. I've also had a few cases where using a property node broke the VI, but replacing the property node with the actual VIs worked just fine. This was posted some time ago to lava but my searching is failing me.

I have also seen problems with property nodes getting confused about their types, where a VI will suddenly break a wire because of an apparent type mismatch between source and sink, yet deleting the wire and rewiring works just fine.

The kicker about these issues is they tend to creep up in re-use libraries seemingly randomly, and to fix any of them I need to trigger recompiles in those libraries, which sends source code control into a tizzy. My workaround: I no longer use property nodes in re-use libraries, and as a habit I rarely use them in other code either, because who knows when something will get promoted to a library.