Posts posted by ShaunR

-

-

I haven't tried porting mine to Mac yet, but I figured out how to get the calls to work. If I remember all this right (I don't have access to a Mac right now and it's been a couple of weeks), you need to create a "sqlite3.framework" folder and copy a sqlite3.dylib file into it (you can get one from /usr/lib or extract it from Firefox). You set the CLN in LabVIEW to point to the framework directory, and you have to type the function names in (the function name ring didn't work for me).

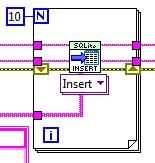

I hacked together a get_table implementation (barely tested on LV2010f2 WinXP and not documented) to see if there was any speed improvement. There wasn't much, since get_table is just a wrapper around all the other functions.

GetTable

Insert 141 Dump 49

Sweet. Nice work on that.

I think perhaps blobs may be an issue with this, as they are with straight text. But still, it's definitely worth exploring further. You've shown me the way forward, so I will definitely be looking at this later.

I'm not sure why you think it's not much of an improvement. For select queries it's a ~60% increase on mine and a ~40% increase on yours (using your benchmark results). I wouldn't expect much on inserts since no data is returned, so you don't have to iterate over the result rows.

Incidentally, these VIs run faster on LV 2009 (for some reason). Your "get_table" example on LV64/32 2009 inserts at ~220 ms and dumps at ~32 ms (averaged over 100 executions). On LV2010 I get roughly the same as you. Similarly, my 1.1 version inserts at 220 ms and dumps at 77 ms (averaged over 100 executions). Again, I get similar results to you in 2010. Of course, a dramatic insert improvement can be obtained by turning off synchronization. Then you are down to an insert speed of ~45 ms.
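For anyone who wants to see the effect of the synchronization setting outside LabVIEW, here is a rough sketch using Python's built-in sqlite3 module rather than the API discussed in this thread. The table name, file name and row count are made up for illustration, and the timings will depend heavily on your disk. Bear in mind that with synchronous set to OFF, SQLite no longer waits for data to reach the disk, so a power loss or OS crash can corrupt the database.

```python
import os
import sqlite3
import tempfile
import time


def timed_insert(synchronous: str, rows: int = 200) -> float:
    """Insert `rows` rows, committing after each one, and return elapsed seconds."""
    if synchronous not in ("OFF", "NORMAL", "FULL"):
        raise ValueError(f"unsupported synchronous mode: {synchronous}")
    with tempfile.TemporaryDirectory() as folder:
        # "speed_test.db" is a hypothetical file name used only for this sketch.
        con = sqlite3.connect(os.path.join(folder, "speed_test.db"))
        # The mode is validated above, so it is safe to place in the statement.
        con.execute(f"PRAGMA synchronous = {synchronous}")
        con.execute("CREATE TABLE samples (x REAL, y REAL)")
        con.commit()
        start = time.perf_counter()
        for i in range(rows):
            con.execute("INSERT INTO samples VALUES (?, ?)", (i, i * 0.5))
            con.commit()  # with FULL, each commit waits for the disk
        elapsed = time.perf_counter() - start
        con.close()
    return elapsed


if __name__ == "__main__":
    for mode in ("FULL", "OFF"):
        print(mode, f"{timed_insert(mode) * 1000:.0f} ms")
```

Committing after every row is deliberate here: it is the per-commit disk wait that the synchronous setting removes, so that is where the difference shows up.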

My next goal is to get it working on the Mac. I have another release lined up and am just holding off so that I can include Mac as well. So I will have a go with your suggestion, but it looks like a lot of work if you cannot simply select the lib. Do you know of a web page (for newbies) that explains the "Framework" directory (e.g. why it needs to be like this, what its purpose is, etc.)?

-

Hi all.

Not really a "Labview" question....but related.

I've "zero" experience with Macs so, apart from a huge learning curve, I'm getting bogged down with multiple tool chains and a severe lack of understanding of the Mac (which I think is BSD based).

I've released an API which currently supports Windows (x32 and x64) and has been reported to work with Linux x32. These work because I'm able to compile a dll and .so for those targets. I'd really like to include Mac in the list of support but am having difficulty compiling a shared library (dynlib?) that LabVIEW will accept.

I have set up a Mac OS X Leopard 10.5 virtual machine with LabVIEW and Code::Blocks. It all runs (very slowly) but I'm able to compile a shared library in both x32 and x64 (well, I think I can at least: the gcc compiler is using -m32 for the 32 bit build and won't compile if I have the wrong targets). The trial version of LabVIEW I downloaded I know is 32 bit (I got that from a conditional disable structure) and I think the Mac is 64 bit.

So I have the tool chains set up and can produce outputs which I name with a "dynlib" extension. However, no matter what compiler options I try, whenever I try to load a successful build using the LabVIEW library function (i.e. select it in the dialogue) it always says it's "Not a valid library".

Does anyone know what the build requirements (compiler options, architecture, switches etc) are for a Mac shared library? There are a plethora of them and I'm really in the dark as to what is required (i386?, Intel?, AMD? all of the above?, -m32?, BUILD_DLL?, shared?)

Any help would be appreciated.

-

I suggest you create a thread in the mac section to discuss this issue.

On a side note, I think there are some mac maniacs on info-LabVIEW, dunno if you use it...

I didn't even know LAVA had a Mac section

Thanks for that. I'll give it a whirl.

You're not doing anything wrong. You'll just have to wait for the next release. All that's happened is I've wrapped the original in a polymorphic VI, since there is another insert which should make it easier to insert tables of data without the "clunky" addition of fields (which is fine for single row iterative inserts).

-

The difference between a patch and a stability and performance release is largely in the integration between features and how deep into the corners we sweep. A patch fixes very targeted bugs, bugs that crash, bugs that have been specifically noted by a large number of customers, or bugs affecting high profile features which have no workarounds available. This release is going after a lot of non-critical issues.

A fair comment. Although I do subscribe to the premise that the corners are swept away on every release and a product up-issue is an extension of a rugged base. But then again, I'm more involved with mission critical software where even "minor" annoyances are unacceptable.

Let's hope the "Tabs Panel" resizing is finally fixed.

-

I finished up the last (hopefully) pass through my library a week or two ago. I got permission from my employer to post my SQLite library (I need to decide on a license and mention the funding used to create it), and when I went to figure out how to make an OpenG library (which I still haven't done yet) I saw the SQLiteVIEW library in VIPM, which is similar to mine. But mine has a few advantages:

Gives meaningful errors

Handles strings with \00 in them

Can store any type of LabVIEW value (LVOOP classes, variant with attributes)

Can handle multistatement queries

Has a caching system to help manage prepared queries

As for benchmarks, I modified your speed example to use my code (using the string based API I have added to mine since the last comparison) and the SQLiteVIEW demo from VIPM. This is on LV2010f2 WinXP.

Yours

Insert 255 Dump 107

A library that is no longer available, which I had been using up until my new one (I'm surprised how fast this is; I think it's from having a wrapper dll handling the memory allocations instead of LabVIEW)

Insert 158 Dump 45

SQLiteVIEW

Insert 153 Dump 43

Mine

Insert 67 Dump 73

Splendid.

The wrapper (if it's the one I'm thinking of) uses sqlite3_get_table. I wanted to use this to save all the fetches in LabVIEW, but it requires a char *** and I don't know how to reference that. The advantage of the wrapper is that you can easily use the "helper" functions (e.g. exec, get_table), which would vastly improve performance. But I'm happy with those results for now. I'll review the performance in detail in a later release.

Did you manage to compile SQLite for the Mac?

I managed to compile sqlite on a virtual machine, but whatever I tried, LabVIEW always said it was an invalid library.

Even the Mac library I downloaded from the sqlite site wouldn't load into LabVIEW.

I think it probably has something to do with the "bitness".

Any Mac gurus out there?

-

Partial INSERTs would be nice.

What do you mean?

You can insert a single column if multiple columns have been defined. The others just get populated with blanks (unless you have defined a field as NOT NULL).

Can you elaborate?
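To make the point about single-column inserts concrete, here is a small sketch in plain SQL, driven from Python's built-in sqlite3 module instead of the LabVIEW API. The table and column names are invented for the example. In SQLite the "blanks" are NULLs, and a column declared NOT NULL without a default rejects the insert.

```python
import sqlite3

con = sqlite3.connect(":memory:")

# Three columns defined, but only one supplied on insert.
con.execute("CREATE TABLE readings (name TEXT, value REAL, note TEXT)")
con.execute("INSERT INTO readings (name) VALUES (?)", ("probe1",))
row = con.execute("SELECT name, value, note FROM readings").fetchone()
# The columns that were left out come back as NULL (None in Python).

# The same insert fails when an omitted column is declared NOT NULL.
con.execute("CREATE TABLE strict_readings (name TEXT, value REAL NOT NULL)")
try:
    con.execute("INSERT INTO strict_readings (name) VALUES (?)", ("probe1",))
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

con.close()
```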

-


I remember seeing an example (can't remember where it is now) which represented RGB colour correction, and you could grab points on the line of the graph and move them around to change the colour profile. It may have been in the Vision stuff.

-

other SQLite tools.

Such as? LV tools for SQLite are few and far between, hence the reason for publishing this API.

If you look back, Matt W has done some benchmarking against his implementation. There is a "Speed" demo, which means anyone can try it for themselves against their tools (post them here folks).

There are a few tweaks in the next release, but the improvements are nowhere near the same as between versions 1.0 and 1.1. Now it's more to do with what hard disk you are using, what queries you are performing, and whether you can tolerate less robust settings of the SQLite dll.

-

What exactly is meant by "stability release" anyway?

Can we expect that it won't require patches?

Can we expect all previously reported bugs to be fixed?

Do we have to pay for a more stable environment?

What is the difference between a "stability release" and a "patch"?

-

Thanks guys.

No wish list? Gripes? Suggestions?

-

Anyone actually using this? (apart from me)

I've another release almost ready so if you want any additional features / changes... now is the time to mention it (no promises).

-


Posting the command reference manual would be helpful

-

If the above suggestions don't work, then try forcing the libs into the exe:

-

-

Hi Shaun,

I can find my USB camera in MAX, but if I try to stop the grab in MAX after capturing the image, the whole of MAX closes. The same thing happens in LabVIEW (whenever I call the USB close.vi function). Is this a camera issue, or do I have to do something else? Please give your ideas. (I am using a Mightex USB camera.)

Regards,

Kalanga

MAX uses NI-IMAQdx so you should be using the IMAQdx functions instead of the USB driver.llb functions (see the link I posted earlier). The USB driver.llb functions are notoriously problematic. Make sure they are uninstalled / removed from your system.

Also, make sure you have the latest IMAQdx drivers installed.

-

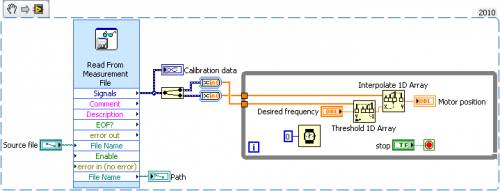

Interpolation (as the name suggests) is for creating intermediate values where none exist. I don't think this is what you are trying to do (maybe wrong).

From the VI implementation and description it looks like you are trying to find a motor position that is a close match to the desired frequency, since the motor position cannot be fractions (discrete steps). I.e. you have a look-up table.

So based on that I have modified your VI to do this.
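In text form, the look-up idea is roughly the following. This is only a sketch in Python, not the modified VI itself, and the calibration values are invented for illustration: instead of interpolating, you pick the table entry whose frequency is nearest the one you want.

```python
def nearest_position(table, target_hz):
    """Return the (position, frequency) entry whose frequency is closest to target_hz."""
    if not table:
        raise ValueError("look-up table is empty")
    return min(table, key=lambda entry: abs(entry[1] - target_hz))


# Hypothetical calibration data: (motor step, measured frequency in Hz).
calibration = [(0, 100.0), (1, 112.5), (2, 126.0), (3, 141.0)]

best = nearest_position(calibration, 120.0)
```

The motor only ever gets sent a position that exists in the table, which is the point of using a look-up rather than interpolation.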

-

The .vi and .lvm files are attached.

Post them and we'll take a look

-

You may wish to look at this - http://lavag.org/fil...ol-inheritance/

I haven't used it myself, so I can't comment on it.

Thanks Yair. Unfortunately it uses OGlibs so I cannot try it. It talks about inheriting "daughters'" properties, which might be the reverse of what I was seeking. But should we really use a tool to do this? I did briefly look at scripting to generate the VIs for the properties and methods, but couldn't get a list of them from the original control. But still, that's a lot of VIs to do what the control already does.

-

I have been playing with them more and more lately and getting a good feel for the style that's required to use them.

I have a few gripes too that make me stay away from them, e.g. I can't include its reference as a member of a Class (works in src, not in build) etc...

But some really powerful stuff there - they just need a little make-over IMHO.

Indeed. My gripes are a bit more fundamental than that though.

All I wanted to do to a multicolumn list box was add a "Clear" method, add alternating row colours, and make it accept arrays of strings instead of using the "Item Strings" property. But the amount of effort in re-implementing all the standard stuff just doesn't make it worth it. It only took me 15 minutes to create the control and make the changes I wanted; then it looked like another 2 days to re-implement the standard stuff.

-

ShaunR - I appreciate the replies. At this point I have NI Tech Support performing a lot of the same head-scratching that I've been doing.

I like the use of the shift register to read additional elements.

The CPU pegged at 100% usage, which isn't surprising since there is not a wait in the loop. I opened and closed various windows and was still able to create the timeout condition in the FPGA FIFO. The timeout didn't consistently happen when I minimized or restored the Windows Task Manager as before, but it does seem to be repeatable if I switch to the block diagram of the PC Main VI and then minimize the block diagram window. I charted the elements remaining instead of the data; the backlog had jumped to ~27,800 elements.

I dropped the "sample rate" of the FPGA side to 54 kHz from 250 kHz. I was able to run an antivirus scan as well as minimize and restore various windows without the timeout occurring. That is good, though the CPU usage is bad as there are other loops that have to run in my final system.

Tim

There is not supposed to be a wait. The read function will wait until it either has the number of elements requested or there is a time-out. In this respect you change the loop execution time by reading more samples. If you are pegging the CPU, then increase the number of samples (say to 15000) and increase your PC's buffer appropriately (I usually use 2x the FPGA size). 5000 data points was an arbitrary choice to give a couple of ms between iterations, but if the PC is still struggling (i.e. there is some left over in the buffer at the end of every read) then it may still not be able to keep up when other stuff is happening.

-

Yer, it still blows my mind at how crazy it went with just a 4MB array!

And don't worry, I still have another installment (X-Control) waiting for me tomorrow!

At first glance there are no Build Arrays etc., but there are options to transpose the buffer, then the buffer gets reformatted to an XY Graph.

That should be fun, can't wait!

Spooky

I was playing with XControls this weekend too (not for buffer stuff though).

I shelved it in the end since I was really annoyed that an XControl doesn't inherit all the properties and methods of the base data type, meaning you have to re-implement all the built in stuff.

-

Except then the PowerMeter class is dependent on the PowerSensor class. You have to drag the PowerSensor code around every time you want to use the PowerMeter class. (Maybe a power meter without a power sensor is meaningless, in which case that would be okay. Otherwise I try to break up dependencies in potential reuse code.)

Not really. The PowerMeter class has no dependencies; it's just a container used to manipulate the object you pass in, to give the results context and meaning. PowerSensor could just as easily be an RTDSensor. You put it more eloquently than I in your previous paragraphs, but basically we are thinking along the same lines.
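As a rough text-language illustration of the "container" idea (Python here, since LVOOP doesn't paste well into a post), the meter below only knows that whatever it is handed has a read method. All the class bodies, the method names and the readings are made up for the sketch.

```python
from typing import Protocol


class Sensor(Protocol):
    """Anything with a read() method can be passed to the meter."""

    def read(self) -> float: ...


class PowerSensor:
    def read(self) -> float:
        return 1.5  # placeholder reading, in watts


class RTDSensor:
    def read(self) -> float:
        return 21.0  # placeholder reading, in degrees C


class PowerMeter:
    """Container that gives a raw reading context; it names no concrete sensor class."""

    def __init__(self, sensor: Sensor, units: str) -> None:
        self._sensor = sensor
        self._units = units

    def measure(self) -> str:
        return f"{self._sensor.read():.1f} {self._units}"


power = PowerMeter(PowerSensor(), "W").measure()
temperature = PowerMeter(RTDSensor(), "degC").measure()
```

Because the meter depends only on the read interface, reusing it does not drag a particular sensor class along with it.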

And BTW, I thought you didn't like OOP...

LVOOP! My other language is Delphi

Horses for courses.


-

I am going to sidestep my original statement that I cannot use the preserve run time class VIs. Any place where I would perform that downcasting I wouldn't be changing the class on the wire (I would be getting downcast errors). As far as that replacement goes, it did eliminate my errors in the development environment (no crashes, no data type losses). But in a built application it still crashes.

Could you build one of your own applications where you are using property nodes?

I didn't consistently see the error until I built my application for the first time. Furthermore, it was mostly displaying bad data in fixed data types like U32s or some other scalar. It wasn't until I used a datatype like a string or array that the crash became consistent (I think it's still a matter of probability; it just gets a lot higher the more memory you require for a datatype).

The "Othello" contribution in the "Examples Competition" uses property nodes.

-

In other words: Go Mad!

In other words free resource

[CR] lvODE

in Code Repository (Certified)


Impressive.

I don't have a valid use for this, but it is obvious you have spent a lot of time on this product. Many thanks for sharing it with the LabVIEW community.