Everything posted by Mads

-

You should have used Grok...


Discussion forums like LAVA and ni.com are challenged these days by LLMs that can answer in a well-structured manner very quickly and handle follow-up questions on the spot. Forums have their strengths, but when it comes to basic questions that LLMs can find a lot of material about, spread across multiple forums, the LLMs win. It is a bit of a catch-22 though, as the LLMs need the forums alive to stay up to date 😮

------------------------------

Here is Grok answering your question of what a DVR is:

- DVRs as References: In LabVIEW, DVRs (Data Value References) are a mechanism to access data by reference rather than by value. This means that instead of creating copies of the data (as is typical in LabVIEW's dataflow paradigm), a DVR provides a pointer-like reference to a single memory location where the data resides. This avoids unnecessary data duplication, which can be critical for large datasets or performance-sensitive applications.
- Avoiding Copies: By using DVRs, you can manipulate the same data in memory without creating copies, which is especially useful for large arrays, clusters, or other complex data structures. This reduces memory usage and improves performance.
- Parallel Operations and Race Conditions: When multiple parallel operations (e.g., parallel loops or VIs) access the same DVR, there is a potential for race conditions if the access is not properly synchronized. LabVIEW provides the In-Place Element Structure to safely access and modify DVR data. This structure ensures that only one operation can read or write to the DVR at a time, preventing race conditions. Without this, simultaneous read/write operations could lead to unpredictable results or data corruption.

Key Points to Add:

- Thread Safety: DVRs are not inherently thread-safe. You must use the In-Place Element Structure (or other synchronization mechanisms like semaphores) to avoid race conditions when multiple parallel tasks access the same DVR.
- Use Cases: DVRs are commonly used in scenarios where you need to share data between parallel loops, modules, or processes without copying, such as in real-time systems or when managing shared resources.
- Limitations: DVRs are only valid within the application instance where they are created, and the data they reference is freed when the reference is deleted or the application stops.

---------------------

Before DVRs we typically had to resort to cloning functional globals (VIs), but DVRs make this a little more dynamic and slick. You can also have a single malleable VI operate on multiple types of DVRs, which opens up a lot of nice reuse. If you have an object that requires multiple circular buffers, for example, you can include circular buffer objects in the private data of that object, with each circular buffer object containing a DVR to an array acting as that buffer...

--------------------

Here is ChatGPT comparing functional globals with DVRs:

Functional Globals (FGs) and Data Value References (DVRs) are both techniques used in programming (particularly in LabVIEW) to manage shared data, but they offer different approaches and have different strengths and weaknesses. FGs encapsulate data within a VI that provides access methods, while DVRs provide a reference to a shared memory location.

Functional Globals (FGs):

- Encapsulation: FGs encapsulate data within a VI, often a subVI, that acts as an interface for accessing and modifying the data. This encapsulation can help prevent unintended modifications and promote better code organization.
- Control over Access: The FG's VI provides explicit methods (e.g., "Get" and "Set" operations) for interacting with the data, allowing for controlled access and potential validation or error handling.
- Potential for Race Conditions: While FGs can help avoid some race conditions associated with traditional global variables, they can still be susceptible if not implemented carefully, particularly if the access methods themselves are not synchronized.
- Performance: FGs can introduce some overhead due to the VI calls, but this can be mitigated by using techniques like inlining and careful design.
- Example: An FG could be used to manage a configuration setting, with a "Get Configuration" and "Set Configuration" VI providing access to the settings.

Data Value References (DVRs):

- Shared Memory Reference: DVRs are references to a memory location, allowing multiple VIs to access and modify the same data. This is a more direct way of sharing data than FGs.
- Flexibility: DVRs can be used with various data types, including complex data structures, and can be passed as parameters to subVIs.
- Potential for Race Conditions: DVRs, like traditional globals, can be prone to race conditions if not handled carefully. Proper synchronization mechanisms (e.g., queues, semaphores, or action engines) are often needed to prevent data corruption.
- Performance: DVRs can be very efficient, especially when used with optimized data access patterns.
- Example: A DVR could be used to share a large array between different parts of an application, with one VI writing to the array and another reading from it.

Key Differences and Considerations:

- Control vs. Flexibility: FGs offer more control over data access through their defined interface, while DVRs offer more flexibility in terms of the data types and structures that can be shared.
- Race Condition Mitigation: While both can be susceptible, FGs can be designed with built-in synchronization mechanisms (like action engines), while DVRs require explicit synchronization mechanisms to prevent race conditions.
- Performance Trade-offs: DVRs can offer better performance in many cases, especially when dealing with large data sets, but this can be offset by the complexity of managing synchronization.
- Code Readability and Maintainability: FGs can make code more readable and maintainable by encapsulating data access logic, but poorly designed FGs can also lead to confusion.

In summary:

- Use Functional Globals when: You need controlled access to data, want to encapsulate data management logic, or need to ensure some level of synchronization.
- Use Data Value References when: You need to share data efficiently between multiple parts of your application, need flexibility in the data types you are sharing, or when performance is critical and synchronization can be handled externally.

It's worth noting that in many cases, a combination of both techniques might be used to leverage the strengths of each approach. For example, a DVR might be used to share data, while a functional global (or an action engine) is used to manage access to that data in a controlled and synchronized manner.
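For readers coming from text-based languages, here is a rough Python analogy of the DVR points in this post. It is not LabVIEW code and not how LabVIEW implements DVRs internally. The hypothetical `DataValueRef` class holds one shared value and only exposes it inside a lock, loosely like an In-Place Element Structure with a DVR Read/Write border node, where other accessors wait until the structure finishes. The hypothetical `CircularBuffer` class keeps its storage behind such a reference, in the spirit of the circular buffer objects mentioned in the post. It also shows a caveat the quoted answers gloss over: each locked access is atomic, but reading in one access and writing back in a separate one can still lose updates.

```python
import threading
from contextlib import contextmanager


class DataValueRef:
    """Hypothetical stand-in for a DVR: one shared value guarded by one lock."""

    def __init__(self, value):
        self._value = value
        self._lock = threading.Lock()

    @contextmanager
    def in_place(self):
        # Loosely like an In-Place Element Structure with a DVR read/write
        # border node: other callers block until this block exits.
        with self._lock:
            box = [self._value]  # box[0] may be mutated or replaced
            yield box
            self._value = box[0]  # write back (skipped if the block raised)


def increment_atomic(ref, n):
    for _ in range(n):
        with ref.in_place() as box:
            box[0] += 1  # read-modify-write inside one locked access


def increment_split(ref, n):
    for _ in range(n):
        with ref.in_place() as box:
            current = box[0]  # read in one access...
        with ref.in_place() as box:
            box[0] = current + 1  # ...write in another: updates can be lost


class CircularBuffer:
    """Hypothetical fixed-size ring buffer whose storage lives behind a ref."""

    def __init__(self, size):
        self._size = size
        self._ref = DataValueRef({"data": [0.0] * size, "head": 0, "count": 0})

    def push(self, x):
        with self._ref.in_place() as box:
            state = box[0]
            state["data"][state["head"]] = x  # overwrite the oldest slot
            state["head"] = (state["head"] + 1) % self._size
            state["count"] = min(state["count"] + 1, self._size)

    def snapshot(self):
        """Return a copy of the contents, oldest sample first."""
        with self._ref.in_place() as box:
            state = box[0]
            start = (state["head"] - state["count"]) % self._size
            return [state["data"][(start + k) % self._size]
                    for k in range(state["count"])]
```

Running `increment_atomic` from several threads always ends at the expected total, while `increment_split` may not. Keeping the whole read-modify-write inside one locked access is the pattern that corresponds to doing the update within a single In-Place Element Structure.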

-

Well, it seems to be sufficient to set the dialog to modal *and* set its front panel to frontmost. As long as it is both, the VI stays modal and blocks access to all other windows.

-

In various parts of my application I want to allow users to open a setup dialog, and while that setup dialog is open they should not be able to switch to another window, so the dialog should be modal... However, I also want the callers to continue to update, as the user might discover something critical in those displays while in the setup and then exit the setup. The easy (/correct) solution to this is to have a separate loop in the callers that calls these setup dialogs, because then the setup dialog can be called statically/synchronously and have its window set to modal... I then get both updates in the caller's display and blocked access to GUI operations in the caller window. The downside is that you need such a dedicated loop, and for various reasons some of the updates here happen in the same loop, so instead I thought I could call the dialog dynamically. This invalidates the modal behaviour though, as it will no longer be modal in relation to the caller. Setting the dialog to "Always on top" is one option, but then it will stay on top even of the file dialogs it calls(!), so unless you turn off the always-on-top setting every time you call a file dialog you have another problem. Setting the dialog to floating, on the other hand, does not block access to the caller's window. I could live with that by adding code to close the dialog if the parent gets closed etc., but true modal behaviour would remove the need for such additional code and would be preferable. So, the question is: is there a way to call a VI asynchronously, but still force it to behave modally in relation to its caller (or "globally" (for the application))? Or should I just capitulate and make sure these calls happen statically in a loop that can wait?

-

Just to share how I got around this: by deleting one front panel item at a time I found that a single control was causing PaneRelief to crash: an XY graph. Temporarily setting it to not scale and replacing it with a standard XY graph (the one I had had some colours set to transparent etc.) was enough to keep PaneRelief from crashing LabVIEW, but it would now just present a timeout error. I found a way around this too, though: the VI in question was a member of a DQMH lvlib, which probably added a lot of complexity for PaneRelief. With a copy saved as a non-member it worked: I could replace the graph, edit the splitters with PaneRelief without the timeout error (even setting the size to 0), then copy back the original graph replacing the temporary one, and finally move the copy back into the lvlib and swap it with the original. Voila! What a Relief... 😉 I will probably have to repeat this whole ordeal if I ever need to readjust the splitters in that VI with PaneRelief though 😮

-

The Pane Relief tool is great, but sometimes VIs seem to become incompatible with it, and any change of the splitters will lead to a LabVIEW crash (the cursor goes into busy mode for a while after the change, then poof). Even if you then remove almost all other controls and splitters and recreate a single splitter on its front panel, a normally simple edit of it (nothing special like setting the splitter size to 0 or anything) will crash too... (removing *all* controls does work though...). When this happens there is often an awful lot to recreate if the VI has to be recreated or all controls deleted (especially since copying diagrams does not replicate front panels or vice versa...). Has anyone experienced this and found a way to fix it? (I have the source code of the tool available, so one possible step is to start debugging the crash in that, but perhaps someone has been down that rabbit hole already?)

-

You are doing it right, yes, but just use the method you used at the bottom. It is simpler and just as "in-place" as the top method. If the number of rows were huge and you mainly needed to save memory, it might make sense to convert and replace one element at a time instead (avoiding a full copy of the column to be converted, which you still have in both of your solutions (verified quickly using ArrayMemInfo...)), but that would be different code.

-

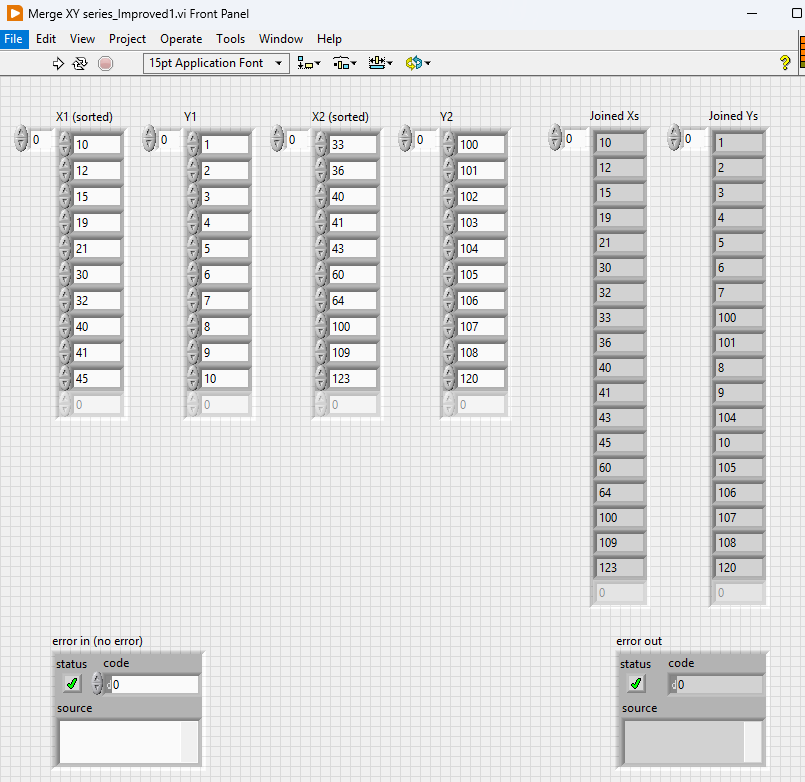

To analyze large time series remotely, I have a client application that splits the data transfer using two methods: it breaks the periods down into subperiods that are then assembled into the full period by the receiver, *and* if the subperiods are too large as well, they are retrieved gradually (interlaced) by transferring decimated sets with a decimation offset. As a result, I need to merge multiple overlapping fragments of time series (X and Y array sets). My base solution for this just concatenates two sets, sorts the result based on time (X values), and finally removes duplicate samples (XY pairs) from it. This is simple to do with OpenG array VIs (the sort 1D and remove duplicates VIs) or the improved VIM versions from @hooovahh, but it is not optimal. My first optimized version instead runs through the two XY sets in a for loop that picks consecutive unique values from either of the two sets until there are no such entries left. This solution is typically 12-15 times faster than the base case (e.g. <10 ms to merge two sets into one set with 250 000 samples on my computer). Has anyone else made or seen a solution for this before? It is not as generic as the array operations covered by the OpenG array library, but it still seems like something many people might need to do here and there... (I had VIs in my collection to stitch consecutive time series together with some overlap, but none that handle interlacing as well.) It would be interesting to see how optimized and/or generalized a solution could be. I have attached the two mentioned examples here; they are not thoroughly tested yet, but are just meant as a reference (VIMs included just in case...).
Below is a picture of the front panel showing an example of the result they produce with two given XY sets (in this case overlapping samples do not share the same value, but this is done just to illustrate which Y value is picked when both sets have entries for the same X (time) value): Merging XY series LV2022.zip
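The single-pass approach described here is essentially the merge step of merge sort. Below is a rough Python sketch of that idea; the attached VIs are LabVIEW, so this is an illustration, not a port of them. It assumes each fragment is already sorted by ascending X, and, as an assumption, keeps the sample from the first set when both sets contain the same X. `merge_xy_sorted` and `merge_xy_baseline` are hypothetical names. The baseline mirrors the concatenate/sort/dedupe approach, but deduplicates on X only (rather than on XY pairs) so that both functions give the same result.

```python
def merge_xy_sorted(xa, ya, xb, yb):
    """Single pass over two X-sorted XY series; returns a unique-X series.
    Assumption: on equal X, the sample from series A wins."""
    x_out, y_out = [], []
    i, j = 0, 0
    na, nb = len(xa), len(xb)
    while i < na or j < nb:
        if j >= nb or (i < na and xa[i] <= xb[j]):
            x, y = xa[i], ya[i]
            if j < nb and xb[j] == x:
                j += 1  # same timestamp in B: drop it, A wins
            i += 1
        else:
            x, y = xb[j], yb[j]
            j += 1
        # Skip X values already emitted (duplicates within one series).
        if not x_out or x != x_out[-1]:
            x_out.append(x)
            y_out.append(y)
    return x_out, y_out


def merge_xy_baseline(xa, ya, xb, yb):
    """Concatenate, stable-sort on X, keep the first sample per X."""
    pairs = sorted(zip(list(xa) + list(xb), list(ya) + list(yb)),
                   key=lambda p: p[0])
    x_out, y_out = [], []
    for x, y in pairs:
        if not x_out or x != x_out[-1]:
            x_out.append(x)
            y_out.append(y)
    return x_out, y_out
```

For example, merging `[0, 2, 4, 6]` with `[1, 2, 3, 4, 5]` yields X values `0..6`, taking the Y values at X = 2 and X = 4 from the first set. Since each input is walked once, the merge is O(n + m), while the baseline pays for a full sort of the concatenated data, which is consistent with the single-pass version being the faster of the two.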

-

Ah, yes, thanks. The attachment in the original post is now without the OpenG dependency and has been converted back to LabVIEW 2018.

-

Many years ago I made a demo for myself on how to drag and drop clones of a graph. I wanted to show a transparent picture of the new graph window as soon as the drag started, to give the user immediate feedback on what the drag does and to let the window be placed exactly where it is wanted. I think I found inspiration for that on ni.com or here back then, but now I cannot find my old demo, nor the examples that inspired me. Now I have an application where I want to spawn trends of a tag if you drag the tag out of a listbox, and I had to remake the code... (see video below). At first I tried to use mouse events to position the window, but I was unable to get a smooth movement that way. I searched the web for similar solutions and found one that used the Input Device API to read mouse positions to move a window without a title bar, and that seemed to be much smoother. The first demo I made for myself is attached here (run the demo and drag from the list...). It lacks a way to cancel the drag though; once you start the drag you get a clone no matter what. dragtrends.mp4 Has anyone else made a similar feature? Perhaps one where cancelling is handled too, and/or with a more generic design / framework? Drag window out of listbox - Saved in LV2018.zip

-

Getting from UID to object reference when owning VI was opened outside project

Mads replied to Mads's topic in VI Scripting

I was wrong, the reason this did not work was something else... So, I now have it working. 👍 Thanks for all the help @Darren and @Rolf Kalbermatter

-


For some reason that does not seem to be the right application reference either... Open VI Reference for the owning VI in that context still returns error 1004.

-

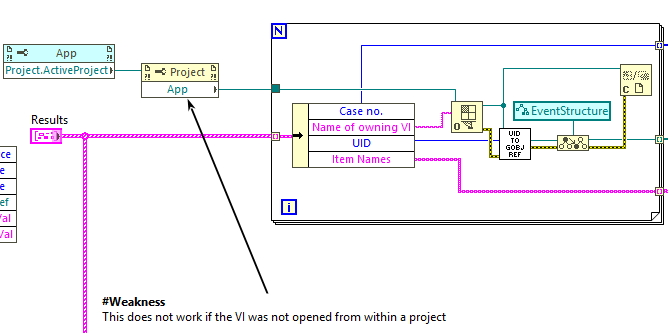

Perhaps not entirely a scripting question, but I am not sure where it fits better... (and perhaps easy to answer for anyone with a bit more scripting experience than me): Upgrading from LV2022 to LV2024, I noticed that some shortcut menu plugins no longer worked, and the reason is that NI has for some reason redesigned the framework to always nullify any references a plugin might try to hand off to a dynamically called VI... To get around this you will either have to avoid using dynamic VIs (but this will prevent you from making plugins that stay open without blocking access to anything else until you close the plugin window...), or hand off UIDs instead of references. This is where I ran into an issue though: I can pass on UIDs, but to get from UIDs to a reference (an event structure ref in this case) within a specific instance of a VI I only found this method: I successfully use the scripting function UID to GOBJ Reference.vi (found in the hidden gems palette e.g.) by feeding it the UIDs of the event structures I found. This also requires a reference to the owning VI though (which the plugin framework also will not let me pass without nullifying it), so I pass on the name of the owning VI and use Open VI Reference on that to get the right VI instance... For Open VI Reference to get the right VI instance, I use the Project.ActiveProject node, read the app property of that and feed it to Open VI Reference... and this works. The problem is that if the owning VI was opened outside a project, the ActiveProject node will not provide anything... (I think LabVIEW could have solved this by having a "virtual"/"root" project for such VIs, which would allow all code relying on project refs to work even in such VIs... but no). So... how do I get the proper application instance ref to feed into Open VI Reference if the VI does not belong to a project?
Or alternatively: is there a way to get from UID to GObj Ref (in this case event structure references) that bypasses this issue? PS: The changed shortcut plugin framework in 2024 seems to work in a slightly mysterious way, as the references I might create based on UIDs received from it will *also* be nullified when the plugin finishes (not just references handed over directly), so the dynamically called VI that tries to work via the UIDs will have to make sure it creates those references after the plugin has finished this cleanup... 😮

-

There is no change when it comes to Real-Time support now with 5.0.4, right? We still need to stick to 4.2 to use it on RIO targets?

-

I agree with the former 😉 It is time to pause the plans to redecorate the kitchen when there is a fire in the living room (no matter how fire-retardant the new paint is going to be).

-

Here is one quick draft of a VI for 2D searches. You might also save it as a VIM to use it on other types of 2D arrays... Search 2D Array.vi
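The attached VI is not reproduced here, so as a rough idea of what such a 2D search might do, here is a Python sketch. The behaviour is an assumption, not taken from the VI: the hypothetical `search_2d` scans row by row from an optional start position, returns the (row, column) of the first match, and returns (-1, -1) when nothing is found, following the -1 convention of LabVIEW's Search 1D Array. Python's duck typing lets the same function work on any element type, loosely like saving the VI as a VIM.

```python
def search_2d(array2d, value, start_row=0, start_col=0):
    """Hypothetical 2D search: first (row, col) where array2d[row][col] == value,
    scanning row-major from (start_row, start_col); (-1, -1) if not found."""
    for r in range(start_row, len(array2d)):
        row = array2d[r]
        # Only the first scanned row starts at start_col; later rows start at 0.
        c0 = start_col if r == start_row else 0
        for c in range(c0, len(row)):
            if row[c] == value:
                return r, c
    return -1, -1
```

To find all matches, the caller can restart the search one column after the previous hit until (-1, -1) comes back.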

-

One important thing to note is that if the application was built with the "Pass all command line arguments to application" key checked, the OS Open Document event is never triggered 🙁 (come to think of it, this might be why it has not worked as expected in the past 🤔). This might be a showstopper in cases where you *sometimes* want to be able to launch the app with additional/other arguments, e.g. to trigger some special behaviour. We do that to allow system administrators to disable some features in the application depending on the user's access, or to point the application to an alternative configuration, e.g.

-

Yes, it does work 🙌 When that tip first got out way back it did not seem to do the job properly, but it does now, in LabVIEW 2022 at least. I could not get the filter version of the event to give me anything for some reason, but the regular one does, and it picks up opening multiple files together. (Looking at the issue again earlier, I noticed that VIPM, e.g., still uses the helper app (VIPM File Handler.exe), but perhaps that is just a legacy / if-it-ain't-broke-don't-fix-it thing. This is "supersecretstuff" too though, so trusting that it will continue to work is another issue.)

-

The "LVShellOpen" helper executable seems to be the de facto solution for this, but it seems everyone builds their own. Or has someone made a template for this and published it? Seems like a good candidate for a VIPM package 🙂 (The downside might be that NI then never bothers making a more integrated solution, but then again, such basic features have not been on the roadmap for a very long time 🤦‍♂️...)

-

Did you figure out this crash @smarlow?

-

Here is a good thread that covers various related scenarios: https://forums.ni.com/t5/LabVIEW/why-open-new-clone/td-p/2976973

Regarding the queue: is it not used anywhere else (a regular dequeue, e.g.) where the close could be detected? If it is polled in the timeout case, you probably do not need to run that timeout every 10 ms, as that will put a high load on the CPU. For users to perceive things as "real-time"/instant, anything below 300 ms or so will do. You can also wire the error wire directly to the stop terminal, unless you have other cases there that generate a boolean; then using the status alone is more practical.
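As a rough Python analogy of the advice in this reply (not LabVIEW code), the consumer below blocks on the queue with a 300 ms timeout instead of waking every 10 ms. Python's `queue.Queue` has no "release queue" that makes a waiting dequeue return an error, so a hypothetical `CLOSED` sentinel stands in for that: receiving it ends the loop, loosely like wiring the dequeue's error straight to the stop terminal. `consumer`, `run_demo` and `CLOSED` are illustrative names only.

```python
import queue
import threading

CLOSED = object()  # hypothetical sentinel standing in for "queue released"


def consumer(q, handled, poll_s=0.3):
    """Block on the queue instead of busy-polling every 10 ms.

    The timeout only wakes the loop for periodic work; ~0.3 s still feels
    instant to users. Receiving CLOSED stops the loop."""
    while True:
        try:
            item = q.get(timeout=poll_s)
        except queue.Empty:
            continue  # periodic/timeout work would go here
        if item is CLOSED:
            break
        handled.append(item)


def run_demo(items):
    q = queue.Queue()
    handled = []
    worker = threading.Thread(target=consumer, args=(q, handled))
    worker.start()
    for item in items:
        q.put(item)
    q.put(CLOSED)  # "release" the queue: the consumer stops on its next get
    worker.join()
    return handled
```

Because the loop sleeps inside `get` until data or the timeout arrives, it costs almost no CPU while idle, and shutdown latency is bounded by how quickly the sentinel is dequeued rather than by the polling interval.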