Posts: 469

Days Won: 33

Everything posted by Mads

I definitely prefer the pre-SP1 colors and icons. The "20" marking on the SP1 LabVIEW icon is completely unreadable, and the fonts, font sizes and layout of the welcome screen are all over the place. I do not understand how these things pass quality control 🤮 Post-sigh: as always, after upgrading to SP1 the license is no longer supported by our Volume License Server (even though our SSP agreement runs for another year...), so a manual request for an updated license is once again required... Rinse and repeat later for the 2021 release... 😒

NI abandons future LabVIEW NXG development

Mads replied to Michael Aivaliotis's topic in Announcements

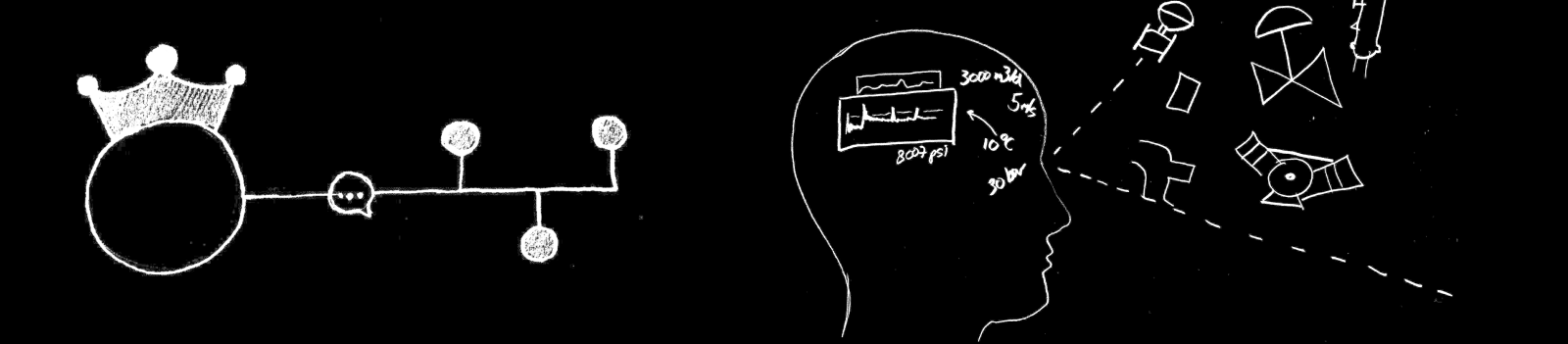

Who said anything about debugging a built application? It's about seeing what you get without having to build it, because WYSIWYG. Many applications have multiple windows that run in parallel, and I want to see them like that during development. And I want multiple diagrams and front panels open while tracking the data flow and/or inserting debug values. I even want to be able to have panels open just to see them while I am working on something related, because it helps me maintain the full mental model of the thing I am working on. I do not want to be bothered with minimizing windows all the time just to clear space either; there is enough of that in current LabVIEW. With NXG, having more than one front panel or diagram per display was barely possible, no matter how small the actual front panel or diagram was.

NI abandons future LabVIEW NXG development

Mads replied to Michael Aivaliotis's topic in Announcements

In the first versions of NXG it was not possible. Later it allowed you to open multiple instances of the VI, and hence to see both the diagram and the front panel at the same time, but each window had so much development clutter surrounding it that it was not practical to have more than one or two open. Hiding any of it to free up space, or to see something closer to what you would see in the built application, was not an option.

NI abandons future LabVIEW NXG development

Mads replied to Michael Aivaliotis's topic in Announcements

Having the ability to work with multiple front panels viewed as they will look in the built application, and looking at multiple diagrams at the same time, has very little to do with breakpoints and reentrancy. It's about WYSIWYG, testing, and having a good understanding of multiple interacting parts of your system. Having a thin line between what you see in edit mode and what you get when running is invaluable, not just for beginners' understanding (which is a great plus), but for anyone wanting to avoid surprises because they lost the connection between the code and the result... As for breakpoints in reentrant VIs, that is something to avoid and handle in a different way... that's a minor issue.

I see a lot of people wanting this, but why? We code graphically, after all. The way to make the underlying code make sense to a G programmer is to present it as G code, not text... Ideally we would have an SCC system made specifically for graphical code, but I do not expect that to become a reality (unless perhaps someone builds one on top of an existing system). Personally I live relatively comfortably with the solutions we can already set up, but would prefer to see them better integrated into LabVIEW and/or to have out-of-the-box solutions for getting started with the various major SCC alternatives. If VIs were human-readable, though, it might make it easier to create more powerful alternatives to VI Scripting, and to generate code at run-time in built applications... That is something else.

NI abandons future LabVIEW NXG development

Mads replied to Michael Aivaliotis's topic in Announcements

This mistreatment of WYSIWYG was the worst part of NXG. Having multiple front panels and block diagrams open at the same time, and being able to jump quickly from run to edit mode for debugging and GUI testing, is one of the core strengths of LabVIEW. The lack of understanding of this was also reflected in other changes, like the removal of the Run Continuously button. The front panels need to present themselves as close to what they will be at run-time as possible (this greatly lowers the threshold for new users in understanding things, but also helps experienced developers maintain a healthy relationship with their GUI, and with testing(!), during the whole development), with a minimum of development-related real estate wasted around them. It can be nice to have lots of stuff available around a window under active development, but only on that window and only when you want it.

According to VI Package Manager, the library is compatible with LabVIEW 2013 and later. SQLite can support multiple users, but it locks the whole database when writing, so if you have lots of concurrent writes it is not the database you want. Usually the database is only locked for a few milliseconds, though, so for most uses this does not matter.
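The database-wide write lock is easy to observe outside LabVIEW as well. The sketch below uses Python's built-in `sqlite3` module (not the LabVIEW library discussed here) on a throwaway file; the table `log`, the function name `demo_write_lock` and the 0.1 s busy timeout are hypothetical choices for illustration. One connection holds an open write transaction while a second connection tries to insert an unrelated row:

```python
import os
import sqlite3
import tempfile
from typing import Tuple


def demo_write_lock(path: str) -> Tuple[str, int]:
    """Show that one open write transaction blocks writers on other connections.

    Returns the error message seen by the blocked writer and the final row count.
    """
    # Two independent connections to the same file. There is no server process;
    # each connection works on the database file directly.
    # isolation_level=None means autocommit; transactions are started explicitly.
    writer = sqlite3.connect(path, timeout=0.1, isolation_level=None)
    other = sqlite3.connect(path, timeout=0.1, isolation_level=None)
    try:
        writer.execute("CREATE TABLE IF NOT EXISTS log (msg TEXT)")

        # BEGIN IMMEDIATE takes the write lock for the whole database up front.
        writer.execute("BEGIN IMMEDIATE")
        writer.execute("INSERT INTO log VALUES ('from writer')")

        # The second connection waits up to `timeout` seconds for the lock and
        # then gives up, even though it only wants to add its own row.
        try:
            other.execute("INSERT INTO log VALUES ('from other')")
            message = "second write succeeded"
        except sqlite3.OperationalError as exc:
            message = str(exc)  # expected: "database is locked"

        writer.execute("COMMIT")  # releases the write lock

        # With the first transaction committed, the second writer gets through.
        other.execute("INSERT INTO log VALUES ('from other')")
        rows = other.execute("SELECT COUNT(*) FROM log").fetchone()[0]
        return message, rows
    finally:
        writer.close()
        other.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(demo_write_lock(os.path.join(tmp, "demo.db")))
```

A longer busy timeout makes a waiting connection retry instead of failing, so short write transactions simply queue up behind each other; that is why a lock held for a few milliseconds rarely matters unless writes are both frequent and concurrent.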

The library operates directly on the database file; there is no ODBC involved.

NI abandons future LabVIEW NXG development

Mads replied to Michael Aivaliotis's topic in Announcements

The new branding is not my cup of tea, so hopefully it does not say too much about the new management. Reducing (the need for) administrative positions could be a good thing. As for raising the quality and speed of LabVIEW development, I hope they use their savings to keep and build a highly skilled, tight-knit, centralized team. The developers should all worship graphical programming 🧚‍♂️🧚‍♀️, even though many of them have to be proficient with many an awful text-based tool. If they have seen the light from the many lessons about the uniqueness of graphical vs. textual programming embedded in current LabVIEW, they might not stray down the dark path of NXG 😀 Steal the best (the graphics) from the textual worlds, and keep the spirit and innovations of G. The first letter graphic in the next LabVIEW should be G. 👼

NI abandons future LabVIEW NXG development

Mads replied to Michael Aivaliotis's topic in Announcements

Here's hoping the right lessons have been learned, and that things will jump and move in a better direction from now on.

Tip on how to copy RT build specs to new type of target

Mads replied to Mads Toppe's topic in Real-Time

That would make sense if the question were whether they would support a third party creating a tool based on this type of manipulation. I do not understand why they do not support it within the Project Explorer, though. When they control both the file format and the editor, supporting this type of target copying would just be a matter of updating it to their new format. They already convert the project file to new versions, so that part would be taken care of.

I am sure it is possible to mess up any type of SCC system that way 🙃 My main complaint with SVN really is the slowness, mainly related to locking (it can be sped up *a lot* if you choose not to show the lock status in the repo browser, though), and the occasional need for lock cleanups (when someone has checked in a whole project folder that did not contain all of the necessary files, for example...).

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

Slightly related topic: I wonder what the trend looks like for the share of questions in the NI discussion forums marked as resolved. Based on my own posts there, it seems to be getting harder to find solutions to the issues I run into. I am not sure if that is just because the things I do in LabVIEW are closer to the borders of regular use / getting quirkier, or if it is a sign of declining quality in the products involved. I suspect it is a mix of both. It would be cool if such statistics were readily available. A trend of the posting rate per forum/tag, for example, could reveal shifts in the interests of the users (the mentioned shift towards configurable turnkey solutions vs. general application development, for example) and/or the quality of the product. The latter might partially be separated from the former by looking at the mentioned share of resolved issues, adjusted for the number of years of experience the askers had (which could perhaps be inferred from how long they have been registered at ni.com and/or the number of posts or solutions made by that user...).

Sure, that's basic (always dangerous to say, though, in case I have overlooked something else silly after all; it happens 😉). The lvlib and its contents are set to always be included, and the destination is set (in Source File Settings) to the executable. The same goes for the general Dependencies group. The dynamically called caller of some of the lvlib functions, on the other hand, is destined for a subdirectory outside the executable, and ends up there as it should. But so does a lot of the lvlib content, seemingly disregarding that its destination is explicitly set to the executable. Correcting other usual suspects (read: additional exclusions) does not produce a repeatable solution either. Perhaps someone else here has observed similar voodoo, though, and figured it out?

I happen to have some JKI JSON calls, among other things, in a dynamically called plugin, and whatever I do in the application build specification to get all those support functions (members of lvlibs) included in the executable, the build insists on putting them as separate files next to the plugin (the plugin is a VI included in the same build, destined for a separate plugins folder). (Sometimes I wonder if there is a race condition in the builder: what does it do, for example, if two plugins included in the build call the same VI and the destination is set to Same as caller? 🤔 That's not the case right now, though; the only caller is one dynamically called external VI.) In an ideal world I think I would create a destination for the lvlibs that plugins use in a single external support container file like an llb. In the case of this JSON library, though, there are classes involved too, so an llb does not work (name collisions etc.). Packed libraries are too cumbersome to use, I think, for various reasons (having to support multiple targets, for example). So as a second-best solution I want everything (all lvlibs that plugins may need something from) packed into the executable (it works as a single-file container, and supports classes as long as it is not using the 8.x file format), but then the application build seems to behave very unpredictably. Initially I expected that if I put the lvlib on the Always Included list and set its destination to the executable, I would be fine, but no. Suspecting that this only applies to the lvlib container file itself and not the VIs it owns, I then also set the destination for all dependencies and packed and shared libraries to the executable. Still the builder puts lvlib VIs together with the plugin.
I have tried this with and without excluding unused members of the library (with and without modification), and with and without disconnecting type definitions. In the end I returned to the original build setup (this was noticed after a conversion from 2018 to 2020), tried a build, got a Bad VI error on a couple of VIs used by an XControl, set those to include the block diagram... and voilà, magically this affected where the JSON lvlib ended up as well, even though they have no links in the code. This problem keeps popping up, though, and once it is there it seems like getting the lvlib files to the wanted destination always involves some voodoo... 😧 Or does it?

Sounds like a philosophy not exactly aligned with using LabVIEW... I found it now, though; it is called the GPM Browser. Re-reading the documentation I noticed a sentence about it that I had overlooked. It is not marketed much, though, no; you have to RTFM 🙁

One useful tip when troubleshooting VIPM is that there is an error log in %programdata%\JKI\VIPM\error. When you first ran it, did you get the user logon dialog? I had that but was unable to log on, and when I then chose to continue without logging on it never showed any windows... I ended up uninstalling it, deleting the JKI/VIPM folder in ProgramData, and then reinstalling it, and this time I chose to register a new account and got it to work. That was at home, though; at work I ran into additional issues because the firewall would not accept the certificates of the server used by VIPM. I just noticed that Jim Kring announced a fix for this on the discussion forum here: https://forums.jki.net/forum/5-vi-package-manager-vipm/ , but unfortunately that is not out yet...
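If you want to check that error log from a script rather than browsing to it, a small sketch like the one below lists the newest files in the folder. It assumes the folder is `%programdata%\JKI\VIPM\error` as stated above (falling back to `C:\ProgramData` if the `PROGRAMDATA` variable is missing, which is an assumption) and makes no assumptions about the log file names or extensions; the helper names are hypothetical.

```python
import os
from pathlib import Path
from typing import List


def vipm_error_log_dir() -> Path:
    r"""Return the VIPM error-log folder, %programdata%\JKI\VIPM\error."""
    # Assumption: fall back to the default ProgramData location on Windows.
    program_data = os.environ.get("PROGRAMDATA", r"C:\ProgramData")
    return Path(program_data) / "JKI" / "VIPM" / "error"


def newest_logs(folder: Path, limit: int = 5) -> List[Path]:
    """List up to `limit` files in `folder`, newest first by modification time."""
    if not folder.is_dir():
        return []  # VIPM has not written any error logs yet (or folder missing)
    files = [p for p in folder.iterdir() if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:limit]


if __name__ == "__main__":
    for log in newest_logs(vipm_error_log_dir()):
        print(log)
```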

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

Sure, the focus of NI has always been on hardware sales, and that has directed where much of the development effort in LabVIEW goes and how it is marketed. I often think that in this respect NI's customers show more respect for the power of LabVIEW/G than NI does. There is an underlying uncertainty in the use of LabVIEW due to this, and the growth of LabVIEW and G as a programming language is perhaps limited by it. It goes both ways, though; the hardware sales have also supported the continued development of LabVIEW, and LabVIEW draws strength from the ecosystem it is part of. I would not be surprised if NI now (wrongly) thinks that they do not need LabVIEW to make their hardware unique (spending so much time and money on NXG might make it all look like a waste...). I hope I am dead wrong, though, and that they consider themselves just as much a software company ("the software is the instrument", after all!). If not, I hope they at least spin off a separate company soon enough that will. The latter would be riskier for the future of LabVIEW/G than the former (having to establish a different sustainable business model), but might also leave us developers with a higher reward, especially if the alternative is a dwindling LabVIEW / NXG that is geared towards configuration only.

Having had lots of connection issues with VIPM (it turned out to be an issue with the JKI server certificate, as I found out on my own...), I just took another look at G Package Manager... Is it seriously based on people having to use the command line to install packages? 🤦‍♂️ The main selling point of LabVIEW is that it is *graphical*... and it is trying to force everyone to use the command line?? At least integrate it into Windows Explorer so that I can right-click my project folder and choose packages to install then and there. Or have a graphical browser that displays the packages, and let me choose a bunch of them there and then point them to the project directory for one batch install. Installing one package at a time is terrible; it would mean that I would need to install 10-20 packages in every project. If so, at least make it possible to create package groups, so that we can install a bunch of the usual suspects with one click. *Ideally* you would have the packages installed in your user.lib or somewhere else centralized instead, and then if you use anything from any of the packages, it is automatically copied into the project... (yes, this is one of the few places where NXG might be on the right track...).

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

The idea that customization is enough seems like a variant of the 80-20 fallacy... 🤦‍♂️ To me LabVIEW is the thing that makes NI unique. It is their greatest product. It happens to also sell hardware because of the limitless possibilities introduced by the concept of virtual instrumentation. The RIO concept strengthens that package, and is why most of the hardware we buy from NI consists of sbRIOs and cRIOs. Without LabVIEW and RIO we would choose cheaper hardware options. (Unfortunately, the hardware ties might also be one of the things that prevent LabVIEW from becoming all it could and deserves to be: the graphical champion of all kinds of programming.)

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

But that is why I do care, because the success of NI is important to us all. Everyone who has invested in their ecosystem is hurt if NI fails.

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

When it comes to color it is generally a mistake to be "unique". Most cars (and clothes, and houses and...) come in one of 4-5 colors. They have their specific effects on the human mind, and you cannot avoid them if you need that effect, which NI does. If you want to project a sustainable image and sell, you need those colors, no matter how many other companies use the same ones. When it comes to shades, most are unusable as they come off as drab, muddy or garish. You can choose a light and "fresh" shade, but move a little too far and you step into the ugly. Choosing green is generally risky, although there are some fresh tones that work in some amounts (like in the logo in this case). The darker greens, used on much of the NI web site, are over that cliff, though. I think a majority of the population will tell you that for free. And the screen resolution requirements of the layout suck on PCs, which is what people shopping for or working on NI-related things will use most of the time. The designers have gotten their priorities wrong there. As for the logo, that's totally fine, for many of the reasons you mention. The package manager logo, though... 🤣

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

If I have to say one positive thing about the new look, it's that the color palette is probably very, very rare...

What do you think of the new NI logo and marketing push?

Mads replied to Michael Aivaliotis's topic in LAVA Lounge

My train of thought about it: Do I have a browser or display issue?... Someone must have hacked the site and messed it up really good. Yuck... Wow, they are serious... this is even worse than NXG, someone should turn the ship around... Is this an attempt to appear more "green"? What screen resolution do they think people have? I have to double mine to read this properly... I wonder what this looks like for people who are color blind... (let me try on https://www.toptal.com/designers/colorfilter, nah, the welcome dialog blocks that...) This is making me depressed, let me close this thing...