Everything posted by Rolf Kalbermatter

-

OpenG Package: LabPython weirdness

Rolf Kalbermatter replied to gb119's topic in OpenG General Discussions

That's pretty harsh! The source code is on SourceForge and nobody is preventing other people from accessing it and attempting to port it to 64-bit LabVIEW. It won't be easy, but given enough determination it is certainly doable. -

OpenG Package: LabPython weirdness

Rolf Kalbermatter replied to gb119's topic in OpenG General Discussions

It might be possible, but it is far from just a recompilation of the code. The code was written at a time when nobody was thinking about 64-bit CPUs and no standards existed for how one should prepare for that eventuality. It is also possible that the script interface in LabVIEW has been cleansed of support for older API standards in the 64-bit version. LabPython uses the first version of that API, and if the 64-bit version of LabVIEW doesn't support it anymore, the documentation for the new version would need to be obtained from NI. This is not an official public API. -

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

As Shaun already elaborated, the issue is always about the LabVIEW bitness, not the underlying OS (well, you can't run 64-bit LabVIEW on 32-bit Windows, but that is beside the point here). -

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

Yes. See his comment to add 32 bit support later on. -

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

The problem is certainly not endianness. LabVIEW internally uses whatever endianness is the preferred one for the platform. Endianness only comes into the picture when you flatten/unflatten data (and typecast is a special case of flatten/unflatten) into differently sized values. This is why you need to byte swap here. Your issue with seemingly random data is most likely alignment. LabVIEW on x86 always packs data as tightly as possible. Visual C uses a default alignment of 8 bytes. You can change that with #pragma pack() though. -
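To illustrate the alignment point, here is a minimal sketch in C. The two-field record and its names are made up for the illustration and are not taken from the structure discussed in this thread. It only shows how the same fields end up at different offsets with default alignment versus byte packing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record, laid out with the compiler's default alignment. */
typedef struct {
    uint8_t  flag;
    uint32_t value;   /* typically preceded by 3 padding bytes */
} DefaultAligned;

/* The same fields packed as tightly as possible, which is the layout
 * a caller that never inserts padding would produce. */
#pragma pack(push, 1)
typedef struct {
    uint8_t  flag;
    uint32_t value;   /* directly follows flag, no padding */
} TightlyPacked;
#pragma pack(pop)

/* With byte packing the record is exactly 1 + 4 bytes long. */
static_assert(sizeof(TightlyPacked) == 5, "packed layout must have no padding");

/* Offset of 'value' in each layout; a mismatch here is what makes
 * data look random on the receiving side. */
size_t default_value_offset(void) { return offsetof(DefaultAligned, value); }
size_t packed_value_offset(void)  { return offsetof(TightlyPacked, value); }
```

If the DLL side reads `value` at the default-aligned offset while the caller wrote it at the packed offset, the bytes it picks up straddle two fields, which looks like garbage rather than a clean byte-order reversal.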

Well, I'm not sure what your hourly rate is. But a LabVIEW upgrade is almost certainly cheaper than trying to recreate that effort for yourself, if you intend to use the result in anything that is even remotely commercial.

-

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

You wouldn't need all that swapping if you had placed the naturally sized element in that cluster: an int64 for 64-bit LabVIEW and an int32 for 32-bit LabVIEW. For the 0 elements in your cluster it doesn't matter anyhow. Now you have a dialog with a title and a single string in it and it already looks complicated. The next step is to make it actually useful by filling in the right data in all the other structure elements! -

Of course that won't work! That sqlite3 **ppDb parameter is a pointer to a pointer. The inner pointer is really a pointer to a structure that sqlite uses to maintain information and state for a database, but you as the caller of that API should not be concerned about its contents. It's enough to treat that pointer as a pointer-sized integer that is passed to other sqlite APIs. However, in order for the function to be able to return that pointer, it has to be passed by reference, hence the pointer to pointer. Change that parameter to be a pointer-sized integer, passed by reference (as pointer), and things look a lot different. However, seeing you struggle with such basic details, it might be a good idea to check out this here on this site. Someone else has already done all the hard work of figuring out how to call the sqlite API in LabVIEW.
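The pointer-to-pointer idea can be sketched in plain C without linking against sqlite at all. Everything named `mock_*` below is a hypothetical stand-in with the same parameter shape as an open function that returns an opaque handle, and the two wrapper functions are only an assumption about how a caller limited to integers would see it.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Stand-in for an opaque library type; callers never look inside. */
typedef struct mock_db { int is_open; } mock_db;

/* Hypothetical open function: the handle comes back through a
 * pointer to a pointer, so the callee can store it for the caller. */
int mock_open(const char *filename, mock_db **ppDb)
{
    (void)filename;
    *ppDb = calloc(1, sizeof(mock_db));
    if (*ppDb == NULL)
        return 1;
    (*ppDb)->is_open = 1;
    return 0;
}

int mock_close(mock_db *db)
{
    free(db);
    return 0;
}

/* The caller's view when it only has integers: a pointer-sized
 * integer passed by reference receives the handle value. */
int open_as_integer(const char *filename, uintptr_t *handle)
{
    mock_db *db = NULL;
    assert(handle != NULL);
    int rc = mock_open(filename, &db);
    *handle = (uintptr_t)db;
    return rc;
}

/* Later calls pass the same integer back by value. */
int close_from_integer(uintptr_t handle)
{
    return mock_close((mock_db *)handle);
}
```

The integer is never interpreted by the caller; it is just stored and handed back to the library, which is why its size has to follow the pointer size of the process.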

-

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

I would tag it übernasty. Really, it's an API that I find nasty to use even from C directly. Very likely there is. With all its own complexities, such as the correct .Net version that needs to be installed and instantiated by LabVIEW on startup. But the interfacing is made pretty easy, since .Net provides a standard that describes the API sufficiently for LabVIEW to do the nasty interface conversion automatically. -

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

The problem is that this structure is pretty unwieldy, uses Unicode strings throughout and contains many bitness-sensitive data elements. Basically, each LP<something> and H<something> in there is a pointer-sized element, meaning a 32-bit integer on 32-bit LabVIEW and a 64-bit integer on 64-bit LabVIEW. The same goes for the P<something> and <datatype> *<elementname> things. And yes, they have to be treated as integers on the LabVIEW diagram level, since LabVIEW doesn't have other datatypes on its diagram level that directly correspond to these C pointers. Not to mention the unions! So you end up with at least two totally different LabVIEW clusters to use for the two different platforms (and no, don't tell me you are sure to only use this on one specific platform, you won't!). Trying to do this on the LabVIEW diagram level basically means that you not only have to figure out how to use all those different structure elements properly (a pretty demanding task already) but also have to play C compiler, by translating between LabVIEW datatypes and their C counterparts properly. You have just signed yourself up for a pretty in-depth crash course in low-level C compiler details. IMHO that isn't worth it just to avoid a little unhappiness that LabVIEW dialogs don't look exactly like some hyped de facto Microsoft user interface standard that keeps changing with every new Windows version, and that I personally find changes for the worse with almost every version. -
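As a rough sketch of what "pointer-sized element" means in practice, the following C fragment uses a heavily shortened, hypothetical stand-in for such a structure. The field names are illustrative only, and the compiler's default alignment is assumed; the packing of the real structure has to be checked in its header.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, shortened stand-in for a Windows-style config structure. */
typedef struct {
    uint32_t       cbSize;      /* plain 32-bit integer on both bitnesses */
    void          *hwndParent;  /* H<something>: a handle, pointer sized  */
    const wchar_t *pszTitle;    /* P<something>: a pointer, pointer sized */
} NativeConfig;

/* The same layout described with integers only, which is all a
 * diagram-level cluster can offer. */
typedef struct {
    uint32_t  cbSize;
    uintptr_t hwndParent;
    uintptr_t pszTitle;
} IntegerMirror;

/* The whole approach relies on this holding for the target platform. */
static_assert(sizeof(uintptr_t) == sizeof(void *),
              "uintptr_t must match the pointer size");

/* 4 in a 32-bit process, 8 in a 64-bit process. */
size_t pointer_field_bytes(void) { return sizeof(uintptr_t); }

/* Returns 1 when the integer-only mirror has the same size and
 * field offsets as the native structure. */
int layouts_match(void)
{
    return sizeof(NativeConfig) == sizeof(IntegerMirror)
        && offsetof(NativeConfig, hwndParent) == offsetof(IntegerMirror, hwndParent)
        && offsetof(NativeConfig, pszTitle) == offsetof(IntegerMirror, pszTitle);
}
```

Because `pointer_field_bytes()` changes with the bitness of the process, a cluster built from fixed int32 or fixed int64 elements can only ever match one of the two platforms.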

[CR] TaskDialogIndirect (win-api comctl32.dll)

Rolf Kalbermatter replied to peterp's topic in Code Repository (Uncertified)

What are you trying to do? This is a pretty hairy structure to interface to from the LabVIEW diagram. I definitely think the only reasonable approach is to write a wrapper DLL that translates from proper LabVIEW datatypes to the corresponding elements in this structure. A major piece of work, but the only maintainable solution! So is there anything you hope to achieve with this that you couldn't do with native LabVIEW elements? -

Problem with dll for Image processing

Rolf Kalbermatter replied to S_1's topic in Machine Vision and Imaging

You definitely need to show your DLL function prototype and the diagram that calls it, preferably in VI form and not just as an image. What you are doing right now is showing us a picture of your car and asking why its motor doesn't run! -

I understand your concerns, but the reality is that any multiplatform widget library (be it wxWidgets, Qt, Java, LabVIEW or whatever else you can come up with) is always going to be limited to whatever is the least common denominator for all supported platforms. Anything that doesn't exist natively on even one single platform has to be either left out or simulated in usually very involved and complicated ways that make the code difficult to understand and maintain. And the LabVIEW window manager does contain quite a few such hacks to make things like subwindows, Z ordering, menus and common message handling at least somewhat uniform to the upper layers of LabVIEW. Hacky enough, at least, to make opening the low-level APIs of this manager to other users a pretty nasty business. I'm sure there are other, marketing-driven reasons too why Apple didn't include a cfWindow interface to NSWindow in its CoreFoundation libraries, but even if they had, they certainly wouldn't have tried to map that to the WinAPI as they did with most other CoreFoundation libraries.

-

And that is exactly what LabVIEW does. Its window manager component is a somewhat hairy piece of code dealing with the very platform-specific issues and trying to merge highly diverging paradigms into one common interface. It makes part of that functionality available through VI Server, and a little more through an undocumented C interface which lacks a few fundamental accessors that would make it useful outside of LabVIEW's internal needs. Yes, "one" could! And what you describe is mostly how it would need to be done. But are you volunteering? Be prepared to deal with highly diverging paradigms of what a window means on the different platforms and how it is managed by the OS and/or your application! And many hours of solitude in an ivory tower with lots of caffeine, torn-out hair and swearing when dealing with threading issues like the LabVIEW root loop and other platform-specific limitations. I don't see the benefit of that exercise and won't go there! What for? For MDI applications? Sorry, but I find MDI one of the worst implementation choices for almost every application out there.

-

"/usr/lib" should be in the standard search path for libraries, and if the library was properly installed with ldconfig (which I suppose should be done automatically by the OPKG script) then LabVIEW should be able to find it. Note that on Linux platforms LabVIEW will automatically try to prepend "lib" to the library name if it can't find the library with its wildcard name. So even "sqlite3.*" should work. However, I'm not entirely sure whether that also happens when you explicitly wire the library path to the Call Library Node. But I can guarantee you that it is done for library names directly entered in the Call Library Node configuration.

-

Ahh licensing! Well, I'm also in the last stage of finalizing a license solution for all LabVIEW platforms. Yes, including all possible NI RT targets!

-

Good luck with that! On Linux the native handle is supposedly the X Window System Window datatype. On Mac I would guess (I haven't looked at it, so this is based entirely on assumptions) that for the 32-bit version it is the Carbon WindowPtr and for the 64-bit version it is an NSWindow pointer (and yes, this seems to require Objective C; there doesn't seem to be a CoreFoundation C API for this on 64-bit). So you have at least 4 ENTIRELY different datatypes for the native window handle, with correspondingly ENTIRELY different APIs to deal with! And no, the Call Library Node can not access Objective C APIs, for the same reason it can't access C++ APIs: there is no publicly accepted standard for the ABI of OOP compiled object code. I guess the Objective C interface could be sort of regarded as a standard on the Mac at least, since anyone doing it differently from what the GNU C toolchain included in Xcode does is going to make himself an outcast. For C++ there unfortunately exists no such unofficial standard.

-

That's actually covered in that document. Any child references of the front panel are "static" references. When VI Server was pretty new (around LabVIEW 5.1) those were in fact dynamic references that always had to be closed explicitly. However, in a later version of LabVIEW that was changed for performance reasons. If you attempt to close such a reference, the Close Reference node is in fact simply a no-op. This is documented, and some people like to go to great lengths to always know exactly which references need to be closed and which don't, in order not to use the Close Reference node unnecessarily. My personal opinion is that this is a pretty useless way of spending your time and energy, and that simply always using the Close Reference node anyway is the quickest and simplest solution. One way to reliably detect whether a returned refnum is static or dynamic is to place the node that produces the refnum into a loop that executes at least twice and typecast the refnum into an int32. If the numeric value stays the same then it is a static refnum; otherwise it is dynamic and needs to be closed explicitly in order to avoid unnecessary resource hogging of the system.

-

As if that would be any better! I have seen very bizarre behaviour with customers using both McAfee and Norton and have had a few pretty bad experiences with McAfee myself. Bad enough that we did not renew the license. On the one hand they like to be notoriously present in anything you do, on the other hand their UI allows little to no configuration anymore. LabVIEW tends to do all kinds of things when starting up, which opens many files all over the place. Virus scanners like Norton and McAfee like to intercept that every time, and the performance cost of that is not negligible. It works fine if only a few files are involved but goes completely awry when a high number of files is queried during a programmatic operation. Unfortunately it is not something that seems to be consistent from machine to machine. These virus scanners cause trouble on some machines while the same version seems to work fine on others, and it is almost impossible to analyze why. But removing them also makes such problems go away, so go figure.

-

One reason why EtherCAT slave support isn't trivial is that you need to license it from the EtherCAT consortium. EtherCAT masters are pretty trivial to do with standard network interfaces and a little low-level programming, but EtherCAT slave interfaces require special circuitry in the Ethernet hardware to work properly. One way to fairly easily incorporate EtherCAT slave functionality into a device is to buy the specific EtherCAT silicon chips from Beckhoff and others, which also include the license to use that standard. However, those chips are designed to be used in devices, not controllers, so there is no trivial way of using them as generic Ethernet interfaces. That makes it pretty hard to support EtherCAT slave functionality on a controller device that might also need general Ethernet connectivity, unless you add a specific EtherCAT slave port in addition to the generic Ethernet interface, which is a pretty high additional cost for something that is seldom used by the majority of the users of such PC-type controllers.

-

How to use camera not listed under MAX

Rolf Kalbermatter replied to Sharon_'s topic in LabVIEW General

I wasn't aware of the Toshiba Teli product line. Googling "TeliCam Toshiba" didn't bring up any relevant links :-) and "TeliCam" alone only showed some analog cameras! Since it's indeed an entire range of cameras with all kinds of interfaces, we definitely need to know more about the model actually used before we can say anything more specific about the best way to use it from within LabVIEW. -

How to use camera not listed under MAX

Rolf Kalbermatter replied to Sharon_'s topic in LabVIEW General

To add to what Jordan and Tomas already said, the camera is pretty unimportant here. Since it is an analog camera you also need some sort of image frame grabber interface that converts the analog signal to a digital computer image. This is what determines how you can interface to your camera. Unfortunately NI has discontinued all their analog frame grabber interfaces, otherwise the simplest solution would have been to buy an NI IMAQ device and connect your camera to that. Instead, there are supposedly still some Alliance Members that sell third party analog frame grabbers with LabVIEW drivers. Other possible interfaces that claim to have LabVIEW support: http://www.theimagingsource.com/de_DE/products/grabbers/dfgmc4pcie/ http://www.bitflow.com/products/details/alta-an http://www.i-cubeinc.com/pdf/frame%20grabbers/TIS-DFGUSB2.pdf And as has been mentioned, if the frame grabber has a DirectX driver you should be able to access it from IMAQdx too, possibly with a little configuration effort. -

Calling GetValueByPointer.xnode in executable

Rolf Kalbermatter replied to EricLarsen's topic in Calling External Code

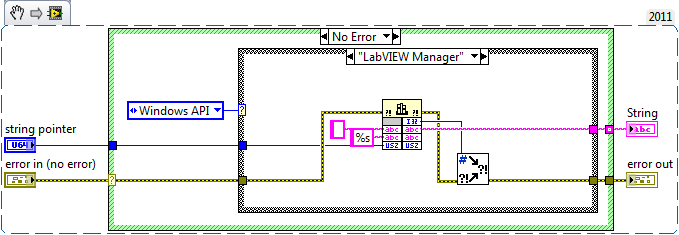

This appears to call libtiff, and there the function TIFFGetField() for one of the string tags. This would return a pointer to a character string, and as such it indeed cannot be configured directly in the LabVIEW Call Library Node. The libtiff documentation is not clear about whether the returned memory should be deallocated explicitly afterwards or whether it is managed by libtiff and properly deallocated when you close the handle. Most likely, if it doesn't mention it, the second is true, but it is definitely something to keep in mind, or otherwise you might create deep memory leaks! As to the task of returning the string information in that pointer, there are in fact many solutions to the problem. The attached VI snippet shows two of them. "LabVIEW Manager" calls a LabVIEW manager function very much like the ominous MoveBlock() function and has the advantage that it does not require any extra DLL beyond what is already present in the LabVIEW runtime itself. "Windows API" calls similar Windows API functions instead.
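Expressed in plain C, both variants come down to the same two steps: determine the length of the string behind the pointer, then block-copy that many bytes into a buffer the caller owns. The helper below is a hypothetical sketch of that idea using only the C standard library; it is not the code inside the VI snippet and it calls neither libtiff nor the LabVIEW manager functions.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Copies the NUL-terminated string at 'src' into a newly allocated
 * buffer owned by the caller. 'src' itself stays untouched, so whoever
 * owns it (the library, in the case discussed above) remains
 * responsible for freeing it. */
char *copy_c_string(const char *src, size_t *length)
{
    assert(src != NULL);

    size_t n = strlen(src);        /* step 1: find the string length   */
    char *copy = malloc(n + 1);
    if (copy == NULL)
        return NULL;

    memcpy(copy, src, n + 1);      /* step 2: block copy, incl. the NUL */
    if (length != NULL)
        *length = n;
    return copy;
}
```

After the copy, the caller's buffer no longer depends on the lifetime of the library's memory, which sidesteps the question of when the original pointer becomes invalid.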

-


Get Volume Info, but of which volume?

Rolf Kalbermatter replied to ThomasGutzler's topic in LabVIEW General

The problem is that you are using reparse points (the Microsoft equivalent, or more precisely the attempt to create an equivalent, of Unix symbolic or hard links). Reparse points were only really added with NTFS 3.0 (W2K), but Windows itself only sort of learned to work with them in XP, and even in W7 support is pretty limited and they are far from being recognized properly by many Windows components that aren't directly part of the Windows kernel. LabVIEW has never heard about them and logically treats them as whatever the reparse point is used for, namely the file or directory they point at. LabVIEW for Linux and Mac OS X can properly deal with symbolic links (hard links are fully transparent anyhow as far as applications are concerned). On Windows LabVIEW does actually support shortcuts (the Win95 placebo for path redirection support) but does not offer functionality to allow an application to have any influence on how LabVIEW deals with them. When you pass a path that contains one or more shortcuts to the File Open/Create or the File/Directory Info function, LabVIEW will automatically resolve every shortcut in the path and access the actual file or directory. But it will not attempt to do anything special for reparse points and doesn't really need to, as that is done automatically by the Windows kernel when a path containing reparse points is passed to it. It only gets complicated when you want to do something in LabVIEW that needs to be aware of the real nature of those symbolic links/reparse points, such as the OpenG ZIP Library. That is what I'm currently working on, and it seems the only way to do it is by accessing the underlying operating system functions directly, since LabVIEW abstracts too much away here. But it shouldn't be a problem for any normal LabVIEW application as long as you are mostly interested in the contents of files and not the underlying hierarchy of the file system.
Incidentally, my tests with the List Folder function showed that LabVIEW properly places the elements that point to directories (reparse points, symbolic links and shortcuts) into the folder name list, while elements that point to files are placed into the filenames list. That is true for LabVIEW versions as far back as 7.0. But there is an exported C function FListDir(), which is even (poorly) documented in the external code reference online documentation, that returns shortcuts as files but also returns an extra file types array which indicates them to be of type 'slnk' for softlink, which was the MacOS signature for alias files. Supposedly List Folder uses FListDir() internally to do its work and properly processes the returned information to place these softlinks into the right list. Unfortunately FListDir() doesn't know about reparse points, which is quite understandable if you realize that even Windows 8 only has one single API to create a symbolic link. If one wants to create hard links or retrieve the actual redirection information of a symbolic or hard link, one has to call directly into kernel space with pretty sparsely documented information to do those things. -

Keeping track of licenses of OpenG components

Rolf Kalbermatter replied to Mellroth's topic in OpenG General Discussions

While this might be a possible option, and is done in other software components to get around the problem of changing authors for different components, I do think it is made more complicated by the fact that there would have to be some sort of body that actually incorporates the "OpenG Group". For several open source projects that I know of and which use such a catch-all copyright notice, there actually exists some registered non-profit organization under that name that can function as a copyright holder umbrella for the project. Just making up an OpenG Group without some organizational entity may legally not be enough. Personally I would be fine with letting my copyright on OpenG components be represented by such an entity. Now, even if such an entity existed, there would be one serious problem at the moment. You can't just declare that any code provided to OpenG in the past falls under this new regime. Every author would have to be contacted and would have to give approval to be represented in such a way through the entity. Code from authors who don't give permission or can't be contacted would need to remain as is or be removed from the next distribution that uses this new copyright notice. And there would need to be some agreement, which every new submitter would have to agree to, that any newly submitted code falls under this rule. All in all, it's doable, but quite a bit of work, and I'm not sure the OpenG community is still active enough that anyone would really care enough to pick this up.