-

Posts

27 -

Joined

-

Last visited

-

Days Won

1

Everything posted by _Mike_

-

Great news @Michael Aivaliotis! @LogMAN thanks for supporting the process and publishing the transformation code. Should we then hold off on potential forking and merge requests until all the git housekeeping of the repo is done?

-

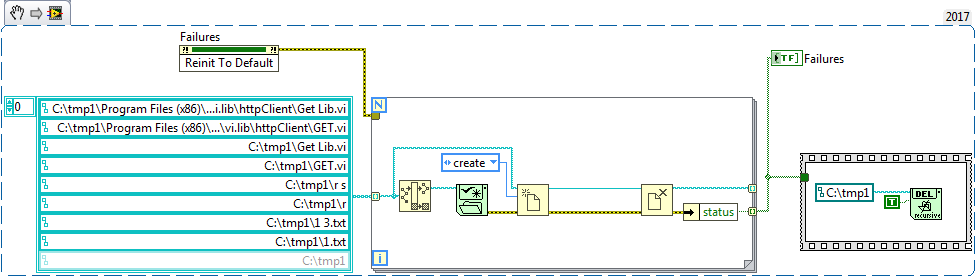

Hello, I have recently hit a peculiar issue when deploying a source distribution (SD) on a PXI target running PharLap. The source of the SD contained VIs from NI's httpClient library, so the SD itself contained (among many others) two files, Get Lib.vi and GET.vi, in one folder. When I uploaded the SD to the target via FTP I saw no errors and no warnings, but dynamically launching a file from the SD that used httpClient resulted in a thread stack overflow. On close inspection I found that GET.vi was missing on the target. Moreover, I was unable to upload this file by FTP. When I then ran the code from the snippet on the target, I saw Error 10 (duplicate path) each time I tried to create a file whose name matches the part before the space in the name of an existing file. This "feature" prevents me from using the httpClient library, which I depend on heavily, on the RT target. Is there a way to overcome this problem?

-

After consulting @Steen Schmidt I've learned that there is no API to do it, and that the way to create a project including a target with a few C-modules from a limited pool would be to work directly with the project's XML file. I will go that way.

-

Thanks for the reply. However, it's not that I want to detect the actual layout. I have a textual description from another team of which modules should be in which slots, and I would like to programmatically create a project and build a bitfile.

-

Hi all! Does anyone know how one can programmatically add a given chassis to a target and fill it with a set of C-modules and FIFOs? I am trying to programmatically create a bitfile for a target when I know the set of modules I want to install in an e-rio based remote I/O system. I do have standardized VIs for interfacing each module (via FIFOs).

-

especially when it comes to week numbering ;]

-

Lost UDP packets due to ARP

_Mike_ replied to Michael Aivaliotis's topic in Remote Control, Monitoring and the Internet

Considering your slow rate, why do you want to depend on the generally unreliable UDP instead of TCP? At such a rate TCP's overhead should not matter, so, if I may ask, what benefits are you looking for? Or is it more a matter of curiosity, in that UDP should work in principle but the ARP protocol interferes with it?

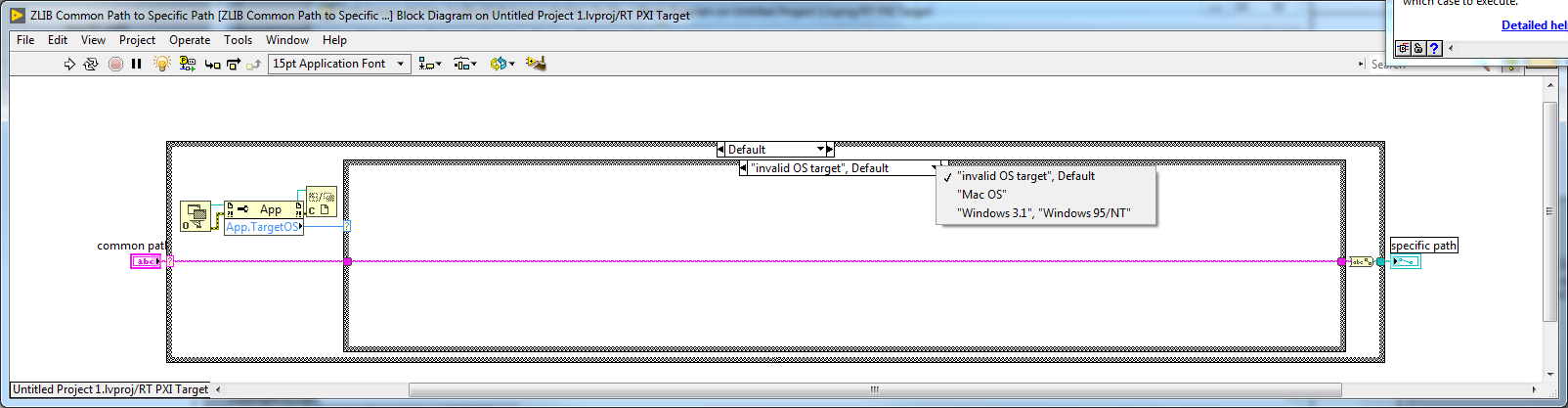

Hello, I am having a big problem unpacking a zip file containing subfolders on PharLap. Unpacking the attached zip file results in an error:

Open/Create/Replace File in ZLIB Read Compressed File__ogtk.vi->ZLIB Get File__ogtk.vi->ZLIB Extract All Files To Dir__ogtk.vi->Untitled 2.vi<APPEND> C:\q\qqqqqq/IOExport.csv

It seems that the separator decoding does not work properly, so I have looked inside lvzip.llb\ZLIB Common Path to Specific Path__ogtk.vi. Here I see no case for PharLap (nor for modern Windows), while I guess that it should convert the common path "/" separator to PharLap's "\". Is my guess correct? I am using ZLIB version 1.2.3 and LabVIEW 2017.

q.zip
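To make the guess about the separator concrete, here is a minimal Python sketch of the conversion I would expect. It is not the OpenG implementation; the function name `zip_entry_to_windows_path` is made up for illustration, and it only assumes that zip entry names use "/" and that the target wants "\".

```python
from pathlib import PureWindowsPath


def zip_entry_to_windows_path(dest_dir, entry_name):
    """Join a zip entry name (always '/'-separated) onto a Windows-style directory.

    Hypothetical helper, only meant to show the expected separator handling.
    """
    # Zip archives store entry names with forward slashes regardless of platform.
    parts = [part for part in entry_name.split("/") if part]
    # PureWindowsPath builds a backslash-separated path on any host OS.
    return str(PureWindowsPath(dest_dir, *parts))


if __name__ == "__main__":
    print(zip_entry_to_windows_path(r"C:\q", "qqqqqq/IOExport.csv"))
```

With the entry from the error message above, the result has backslashes throughout instead of the mixed C:\q\qqqqqq/IOExport.csv.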

-

The sole advantage, in my opinion, is the ability to connect a LabVIEW application with systems that use MQTT. I see no point in using MQTT for connecting two LV applications.

-

I have worked extensively with LabVIEW and MQTT. Indeed, LabVIEW does not have any really good library for that. I have worked a bit with @cowen71 to put together some good functionality. We wanted to make it more "reusable" and release it to the broader public, but once it reached the set of features that served our private purposes, the momentum stopped. Here is the library: https://github.com/mradziwo/mqtt-LabVIEW/ It is not perfect, as I say, but it allows for asynchronous calls to MQTT and some event-based callbacks. If you would be willing to join the team to bring it to a "publication" state, we would be more than happy to collaborate on that.

-

Put your LabVIEW in the Tray -- Once and for all

_Mike_ replied to Stinus Olsen's topic in Code In-Development

Most likely library calls, or names of .NET classes. In the first case make sure that all pointers are 64-bit; in the latter one needs to make a switch case or an object-based solution to choose the proper constructors based on the bitness of the LV execution system.

Hello Neil, I do some DL programming, mostly based on TensorFlow through Python. If an ML/AI group is created, I would definitely like to join it!

-

Hidden terminals on the .NET Constructor Node?

_Mike_ replied to LogMAN's topic in Calling External Code

I can also report similar behavior. What is more, by a completely random choice of constructor, I got an I32 output rather than a reference.

-

That indeed sounds like a gap-filling tool when it comes to calling LabVIEW code from Python! I am eager to try it out ASAP, as I see that the romance between LabVIEW and Python has already started but still lacks the spark. TestScript indeed promises a lot to kindle this fire.

-

Is there a possibility in your library to get a sub-element as a JSON-formatted string? I mean, given the JSON string: { "field1": 1, "field2": { "item1":"ss", "item2":"dd"} } to get the item field2 and have it return a string containing: { "item1":"ss", "item2":"dd" }
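To show the behavior I am after, here it is sketched in Python with the standard `json` module rather than with the library in question. The helper name `get_subelement_as_json` is only illustrative.

```python
import json


def get_subelement_as_json(json_text, key):
    """Return the value stored under `key` re-serialized as a JSON string."""
    document = json.loads(json_text)   # parse the whole document
    return json.dumps(document[key])   # serialize only the requested sub-element


source = '{ "field1": 1, "field2": { "item1":"ss", "item2":"dd"} }'
field2_text = get_subelement_as_json(source, "field2")
print(field2_text)
```

The returned string is equivalent to the original sub-object, although whitespace may differ from the input because the sub-element is re-serialized.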

-

So apparently IMAQdx is pretty rigid when it comes to registering new events. I have failed to find any documentation on this topic, but I have learned that PlugAndPlay events work only on FireWire cameras. Nevertheless, I have managed to get event-like behavior from the Genie Nano camera by setting up the camera to issue the events I was interested in, even though LabVIEW cannot handle them. The Genie Nano does provide GenICam attributes for the timestamp of the last triggered event; this allowed me to register for the "Attribute updated" event on those attributes and, by that trick, receive asynchronous updates from the camera.

-

That's the fear I'm facing. However, is there a way to "hook up" onto those events, as they are standardized GigE Vision events?

-

Hello all! I have a problem with registering for GigE Vision events with LabVIEW and NI-IMAQdx. I work with a Teledyne Dalsa Genie Nano G3-GC10-C1280IF camera with a GigE Vision interface. Its documentation describes several events I can enable on the camera that will be "... sent on control channel when ..." a particular situation occurs at the camera, e.g. at exposure end. However, when I wire the session wire to the Register Events node, I still have options only for "Attribute Updated", "Frame done" and "Plug and Play". I would like to ask for your help in finding out how GigE Vision events can be read, discovered, or registered for through NI-IMAQdx.

-

IMAQdx session "Attribute updated" event

_Mike_ replied to _Mike_'s topic in Machine Vision and Imaging

Also, I have no need to separate the process of parameter setting into independent loops. I will be perfectly content with a blocking call each time.

IMAQdx session "Attribute updated" event

_Mike_ replied to _Mike_'s topic in Machine Vision and Imaging

Thank you a lot! I will experiment a bit with that, but I guess the way to eliminate the possibility of a race condition would be to place an assertion on the exposure time between setting the exposure time and issuing a software trigger. I imagine such an assertion in two forms: asynchronous - using the "AttributeUpdated" event (then I need to use it preferably in all places where I change any attributes, to ensure that the event I get confirms the change I invoked); synchronous - polling IMAQdx for the values of the attributes in question while waiting for the update to happen.
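The synchronous form could look roughly like the Python sketch below. It is only an outline of the polling logic: `read_attribute` is a hypothetical callable standing in for whatever reads the attribute back through the driver, and the timeout and poll interval are arbitrary assumptions.

```python
import time


def wait_for_attribute(read_attribute, expected, timeout_s=0.5, poll_interval_s=0.005):
    """Poll `read_attribute()` until it returns `expected` or the timeout expires.

    `read_attribute` is a hypothetical stand-in for a driver read-back call.
    Returns True if the expected value was observed, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if read_attribute() == expected:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval_s)


if __name__ == "__main__":
    # Simulated read-back: the old value is reported twice before the new one.
    simulated_reads = iter([100, 100, 2000])
    updated = wait_for_attribute(lambda: next(simulated_reads), expected=2000)
    print("safe to trigger" if updated else "attribute did not update in time")
```

A successful read-back only shows that the reported value has changed; whether that guarantees the next exposure uses it still depends on when the camera applies the setting.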

IMAQdx session "Attribute updated" event

_Mike_ replied to _Mike_'s topic in Machine Vision and Imaging

Thank you smithd! I use continuous mode solely because setting up the camera otherwise takes a relatively long time (100 ms+), so it would be a hassle for me to trigger it correctly. I have not come across the software trigger in LV before; apparently it is not advertised strongly enough. I will dive into the topic and come back if anything remains unclear.

IMAQdx session "Attribute updated" event

_Mike_ replied to _Mike_'s topic in Machine Vision and Imaging

That indeed sounds interesting! I am using Basler ace and Teledyne Dalsa Genie cameras. I could find some information on the "software acquisition trigger" for Basler; I need more insight into the Teledyne Dalsa hardware. What does software triggering look like from the LabVIEW/IMAQdx perspective? I have seen neither examples nor palette VIs that support such functionality. Could you tell me a bit more or point me to appropriate materials?

Hello! I am running a system with a GigE camera and an NI framegrabber in continuous acquisition mode, in which I change some attributes every now and then. So far I have been using the following scheme to assert that I am getting the correct image after an attribute change:

- change the attribute
- read the "Last buffer number" property -> n
- run IMAQdx get image.vi set to "buffer" with parameter n+2 (+2 because I assumed the attribute change arrives at the camera during a running exposure that will be unaffected by the change, hence I needed to discard the "next" buffer)

Unfortunately, every now and then I still acquired an image that was obviously acquired with the previous attributes (e.g. I had dramatically increased/decreased the exposure rate, while the acquired image was very similar to the previously acquired one). Guessing that it may have something to do with buffers, I increased the "magic number" from +2 to +3. It did help, but after longer usage I discovered that it only reduced the frequency of the error. Hence I need to design a more "bulletproof" solution that will satisfy my timing requirements (stopping and starting the acquisition is out of the question, as it takes more than 100 ms, which is unacceptable for me).

I would like to:

- change the attribute
- acquire information from the camera that will allow me to fetch an image acquired with the changed attribute

For this purpose I have discovered IMAQdx events, especially "Attribute updated" and "frameDone". Unfortunately, I could not find any detailed documentation about those functions. Therefore I would like to ask for your help in determining when "Attribute updated" is triggered. Is it when:

- the driver receives the command and pushes it to the camera? (pretty useless for me, as I cannot relate it to any particular exposure)
- the camera confirms getting it? (then, assuming it arrives during an ongoing exposure, I'll discard the "next" image and expect the second next to be correct)
- the camera confirms applying it? (then I assume that the next image should be obtained with the correct settings)
- the camera confirms it managed to acquire a frame with the new parameter? (a pretty unlikely scenario, but then I'd expect the correct image to reside in the "last" buffer)

Could you help me determine which case is true? Also, should I be concerned about the fact that there may be a frame "in transition" between the camera and the IMAQdx driver which will desynchronize my efforts here?

-

Try this one: readmultiformatdata.zip. Here is an example of reading three different files with different formats and different data; it converts each log file to a common style and creates one joined log.

-

That sounds like a perfect task for a visualization tool such as Grafana: https://grafana.com/ Though if you insist on using LV for that, I'd recommend importing all the different data using the "Read Delimited Spreadsheet VI" http://zone.ni.com/reference/en-XX/help/371361P-01/glang/read_delimited_spreadsheet/. Then you should merge the data into one array with a common format. Having all the information in one array will allow you to perform a whole variety of analyses.
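The import-then-merge idea is language independent, so here is a minimal sketch of it in Python instead of LabVIEW. The two sample logs, their column names and the common record layout (time, source, value) are made up for illustration; your real files will need their own mapping.

```python
import csv
import io


def read_delimited(text, delimiter, time_column, value_column, source):
    """Parse one delimited log and convert each row to a common record layout."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    return [
        {"time": row[time_column], "source": source, "value": float(row[value_column])}
        for row in reader
    ]


# Hypothetical logs with different delimiters and column names.
log_a = "time,temp\n2020-01-01T00:00:00,21.5\n2020-01-01T00:00:02,21.7\n"
log_b = "timestamp;pressure\n2020-01-01T00:00:01;1013.2\n"

# Merge everything into one list; ISO 8601 timestamps sort correctly as text.
merged = sorted(
    read_delimited(log_a, ",", "time", "temp", "a")
    + read_delimited(log_b, ";", "timestamp", "pressure", "b"),
    key=lambda record: record["time"],
)

for record in merged:
    print(record["time"], record["source"], record["value"])
```

Once every file is reduced to the same record layout, sorting, filtering and plotting can all work on the single merged list.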