Posts: 3,463

Days Won: 298


Everything posted by hooovahh

-

Yes, a Value Change event does not necessarily mean the value differs from the previous one. If you use the Value (Signaling) property to set a control to its current value, the event is still triggered, and the same applies here. The solution seems like an easy one: if the old value is equal to the new value, do nothing. The old and new values are both available in the data of the event that is triggered.
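Since LabVIEW code is graphical, here is a minimal Python sketch of the "compare old and new, then do nothing if equal" check. `ValueChangeEvent` and `handle_value_change` are hypothetical names that only mirror the old and new values available in the event data; they are not a LabVIEW API.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ValueChangeEvent:
    """Hypothetical stand-in for the event data: the old and new values."""
    old_value: Any
    new_value: Any


def handle_value_change(event: ValueChangeEvent, on_change: Callable[[Any], None]) -> bool:
    """Run on_change only when the value really changed; return True if it ran."""
    if event.old_value == event.new_value:
        # Fired without a real change (e.g. writing the same value through
        # Value (Signaling)), so do nothing.
        return False
    on_change(event.new_value)
    return True


if __name__ == "__main__":
    changes = []
    for event in [ValueChangeEvent(0, 5), ValueChangeEvent(5, 5), ValueChangeEvent(5, 7)]:
        handle_value_change(event, changes.append)
    print(changes)  # [5, 7]
```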

-

Filthy Casual. But seriously, yes, I type with my hands on the home keys (stupid Mavis Beacon made sure of that). Swapping too many keys can be dangerous when I go to use literally any other keyboard in the world. The other issue is retraining my brain to use keys differently. CTRL has always been that very lower-left key; I can feel around for the edge and know which one it is. Swapping CTRL and ALT sounds dangerous. As I mentioned, I already replaced Caps Lock with CTRL because I just don't use that key.

-

Okay, a quick update. I tried some behavior changes to make my life easier. I got one of those Kinesis keyboards I linked to earlier (used, because who wants to pay that much), along with the adapter that lifts the center of the keyboard and has the wrist guard. It is a bit difficult to get used to, but I like it.

I also bought an industrial foot pedal like this one and wired it to a Teensy LC (because I already had one). Using the internal pull-up, I have it activate the CTRL key when pressed, so I don't need to stretch my pinky finger. This change is harder to get used to, because I need to keep my foot in a resting position that is still ready to press, and then force myself to use the pedal instead of my hand. It's doable, and I use it for about 50% of my CTRL presses.

I also went to the doctor, and they said I have tendinitis, which is a catch-all term; the specific injury might have a better classification. I'm now going to physical therapy for a month or so for some hand and wrist treatments. I'm also doing some hand stretches, and I'm doing better.

As for the desk, I'm sort of in a lab environment without much extra space. The desk is a solid one that doesn't adjust, and it's probably a bit too high. I don't think changing it would be easy.
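For anyone wanting to copy the pedal idea, this minimal Python sketch shows only the edge-detection logic, assuming the pedal switch connects the input pin to ground so that the internal pull-up makes an idle pedal read HIGH. Real Teensy firmware would be written in Teensyduino/Arduino C++ and send the key through the board's USB keyboard support; `pedal_to_key_events` and the list of readings are hypothetical. It also does no debouncing, which a real mechanical switch usually needs.

```python
from typing import Iterable, List

# With the pin's internal pull-up enabled, an open pedal switch reads HIGH (1);
# pressing the pedal connects the pin to ground, so it reads LOW (0).
RELEASED = 1
PRESSED = 0


def pedal_to_key_events(readings: Iterable[int]) -> List[str]:
    """Turn a stream of pin readings into CTRL press/release events on each edge."""
    events: List[str] = []
    previous = RELEASED
    for level in readings:
        if previous == RELEASED and level == PRESSED:
            events.append("press CTRL")      # falling edge: pedal went down
        elif previous == PRESSED and level == RELEASED:
            events.append("release CTRL")    # rising edge: pedal came back up
        previous = level
    return events


if __name__ == "__main__":
    print(pedal_to_key_events([1, 1, 0, 0, 0, 1, 1]))  # ['press CTRL', 'release CTRL']
```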

-

When are events queued/registered?

hooovahh replied to Scatterplot's topic in Certification and Training

Oh, that reminds me: XControls have some kind of limitation when it comes to user events. I believe you can't use user events created outside the XControl at all, and I wonder whether the point at which the XControl executes and registers has something to do with this limitation.

-

When are events queued/registered?

hooovahh replied to Scatterplot's topic in Certification and Training

Thank you very much for the correction; I've edited my previous post. For several of the questions in this thread, I wrote code to confirm my assumptions. For that question, though, I just assumed I knew what it would do, and as you mentioned, I gave the right answer to a different question.

-

When are events queued/registered?

hooovahh replied to Scatterplot's topic in Certification and Training

I never really messed with this feature much, so I had to write some test code to see. It appears that for static events, the front panel is locked when the event occurs, not when it is dequeued. The same goes for dynamically registered control references, if "Lock Panel Until Handler Completes" is enabled by right-clicking the Register For Events node. As for user events, this doesn't appear to be an option, because user events don't involve UI controls.

-

Yeah, I opened these in LabVIEW 2015 32-bit and I don't even get prompted to save on close: no dirty dot, no recompile. I'm not convinced bitness is even saved in the VI. EDIT: Reading the block data, the only thing that changed between the two is the FPHb block, which I doubt has anything to do with bitness.

-

To me this seems like a matter of opinion on how this tool should behave. If the purpose is to show the various versions of LabVIEW that are compatible with the VI, then I would think it should show both the 32-bit and 64-bit versions of LabVIEW, because after all, both can open the VI. But I understand that showing the user the version it was last saved in might be helpful. To help out, could you post 32-bit and 64-bit versions of the same VI, and possibly two more versions with and without separate compiled code? Looking at the private methods, nothing jumps out at me as what you want, but there are some bit flags whose values I can't predict for other VIs. The other option is to look at the VI file structure at the binary level and find the differences. I suspect there might be something in there, but again, without any VIs to look at I can't say for sure. There is also the real possibility that this information just isn't in the VI. The source code might not care what bitness it was saved in, so maybe NI doesn't store that information in the VI, only in the compiled code.
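Comparing two saves of the same VI at the binary level could start with something like this minimal Python sketch, which just reports the byte ranges that differ. It does not parse the VI file format; mapping an offset to a named block such as FPHb would need that parsing, which is not shown. `differing_ranges`, `compare_files`, and any file names you pass in are hypothetical.

```python
from typing import List, Tuple


def differing_ranges(a: bytes, b: bytes) -> List[Tuple[int, int]]:
    """Return (start, end) byte ranges, end exclusive, where a and b differ.
    If one input is longer, the extra tail is reported as a differing range."""
    ranges: List[Tuple[int, int]] = []
    common = min(len(a), len(b))
    start = None
    for i in range(common):
        if a[i] != b[i]:
            if start is None:
                start = i                  # a run of differences begins
        elif start is not None:
            ranges.append((start, i))      # the run ended at the previous byte
            start = None
    if len(a) != len(b):
        # Extend any open run (or start a new one) through the longer input's tail.
        ranges.append((common if start is None else start, max(len(a), len(b))))
    elif start is not None:
        ranges.append((start, common))
    return ranges


def compare_files(path_a: str, path_b: str) -> List[Tuple[int, int]]:
    """Read two files as raw bytes and report where they differ."""
    with open(path_a, "rb") as fa, open(path_b, "rb") as fb:
        return differing_ranges(fa.read(), fb.read())


if __name__ == "__main__":
    print(differing_ranges(b"abcdef", b"abXdeY"))  # [(2, 3), (5, 6)]
```

Calling `compare_files` on a 32-bit save and a 64-bit save of the same VI would show whether anything besides the block mentioned above changes.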

-

When are events queued/registered?

hooovahh replied to Scatterplot's topic in Certification and Training

Yeah, I suspect new users think of an event structure as a type of interrupt or goto, and when dataflow is the name of the game, that just isn't the case.

-

When are events queued/registered?

hooovahh replied to Scatterplot's topic in Certification and Training

When it comes to dynamically registered events, you are correct. If I generate a bunch of user events and then register for them, my event structure won't see the previously generated events, because it wasn't registered for them when they were generated. Only events generated after registering will be seen and handled. It's like enqueueing elements when the queue hasn't been initialized yet.

Static events are different, because LabVIEW knows what it needs to register for. While I can't say for certain, I always assumed it registers for static events when the VI starts, before any code executes. It might actually register before the VI is even run, but for this discussion it doesn't really matter. All that matters is that static events are registered before code execution, presumably because LabVIEW knows what to register for and does it for you. With dynamic events you have more control over when to register and unregister, so that isn't the case.

As for your other question: each event structure does get its own queue to stack events onto. If you have two event structures that both register for the same user event (remember, both must register; don't register once and wire that one registration to both), then firing that one user event will be handled separately by each. This can be useful for 1-to-N communication, where one action is handled in N places, like a Quit event.

When two event structures share the same static event, I think things are handled inconsistently. By that I mean if you have two event structures handling the Value Change of the same Boolean control, sometimes they both get the event, sometimes the first structure gets it and the second doesn't, and sometimes the second gets it but not the first. Basically, avoid this situation in all LabVIEW code.
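The user-event registration behavior described above (per-structure queues, no delivery of events generated before registering, 1-to-N delivery when each structure registers) can be modeled in a few lines of Python. This is a minimal sketch of the behavior, not LabVIEW's implementation; `EventStructure` and `UserEvent` are hypothetical names.

```python
from collections import deque
from typing import Any, Deque, List


class EventStructure:
    """Stand-in for one event structure and the event queue it owns."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.queue: Deque[Any] = deque()


class UserEvent:
    def __init__(self) -> None:
        self.registered: List[EventStructure] = []

    def register(self, structure: EventStructure) -> None:
        # Each structure registers separately and so gets its own copy of events.
        self.registered.append(structure)

    def generate(self, data: Any) -> None:
        # Delivered only to structures registered right now; nothing is
        # buffered for a structure that registers later.
        for structure in self.registered:
            structure.queue.append(data)


if __name__ == "__main__":
    quit_event = UserEvent()
    ui_loop, logger_loop = EventStructure("UI"), EventStructure("Logger")
    quit_event.generate("too early")   # no registrations yet, so nobody sees it
    quit_event.register(ui_loop)
    quit_event.register(logger_loop)
    quit_event.generate("Quit")        # 1-to-N: both structures get it
    print(list(ui_loop.queue), list(logger_loop.queue))  # ['Quit'] ['Quit']
```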
Because shared static events are handled this inconsistently, and for code simplicity, it is highly recommended that you have only one event structure per VI, though having more than one is not bad for your code in itself. EDIT: Still avoid the described situation, but as Jack said, I was confused about when this situation would happen.

-

Not sure it is really an issue. I can't say for sure, but I suspect that the first time you do something like this, some DLL dependencies are loaded, causing a one-time delay that subsequent writes don't have.
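A first-call delay like this is typical of lazy loading, and this minimal Python sketch shows the pattern plus the usual way to check for it: time each call separately and compare the first against the rest. `LazyDriver` is hypothetical and only simulates the load with a sleep; it makes no claim about which DLLs LabVIEW actually loads.

```python
import time
from typing import List, Optional


class LazyDriver:
    """Hypothetical wrapper whose first call pays a one-time load cost,
    standing in for DLL dependencies that are loaded on first use."""

    def __init__(self, load_seconds: float = 0.05) -> None:
        self._library: Optional[object] = None
        self._load_seconds = load_seconds
        self.load_count = 0

    def _ensure_loaded(self) -> None:
        if self._library is None:
            time.sleep(self._load_seconds)  # simulated load time
            self._library = object()
            self.load_count += 1

    def write(self, value: int) -> int:
        self._ensure_loaded()
        return value


def time_writes(driver: LazyDriver, count: int) -> List[float]:
    """Time each write separately so the first call can be compared with the rest."""
    durations = []
    for i in range(count):
        start = time.perf_counter()
        driver.write(i)
        durations.append(time.perf_counter() - start)
    return durations


if __name__ == "__main__":
    times = time_writes(LazyDriver(), 5)
    print(f"first: {times[0] * 1000:.1f} ms, slowest of the rest: {max(times[1:]) * 1000:.3f} ms")
```

If only the first timing stands out, the delay is a one-time cost rather than something that affects every write.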

-

So a VI might not store its bitness, because bitness concerns the compiled code, which might not be in the VI file. http://forums.ni.com/t5/LabVIEW-Idea-Exchange/Property-to-get-LabView-Editor-bitness-32bit-64bit-and-patch/idi-p/2978707 That being said, I suspect this information is available somewhere, either through a private function or in the VI file structure itself. But for this tool I don't think it matters, because VIs saved in 64-bit LabVIEW can be opened in 32-bit LabVIEW and vice versa. So your tool can list both the 32-bit and 64-bit versions installed as compatible. Of course, I don't have a 64-bit version installed at the moment to test with.
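The suggestion to ignore bitness could look like this minimal Python sketch of the filtering step. It assumes versions can be compared as plain year numbers and that a VI opens in the same or a newer version; it ignores service packs and the limit on how old a VI a given LabVIEW version can load. `Install` and `compatible_installs` are hypothetical names, not part of the tool being discussed.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Install:
    version: int  # simplified to the release year, e.g. 2015
    bits: int     # 32 or 64


def compatible_installs(saved_version: int, installs: List[Install]) -> List[Install]:
    """List installs that should open a VI saved in saved_version.
    Bitness is deliberately ignored: a VI saved in 64-bit LabVIEW opens in
    32-bit LabVIEW and vice versa, so only the version number matters here."""
    matches = [i for i in installs if i.version >= saved_version]
    return sorted(matches, key=lambda i: (i.version, i.bits))


if __name__ == "__main__":
    installed = [Install(2014, 32), Install(2015, 64), Install(2015, 32), Install(2016, 64)]
    for install in compatible_installs(2015, installed):
        print(f"LabVIEW {install.version} {install.bits}-bit")
```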

-

How do these FPGA XNodes work without all the abilities?

hooovahh replied to Sparkette's topic in VI Scripting

Nope, I'm not. I didn't make the connection that you were attempting to use scripting to change XNodes, sorry.

-

How do these FPGA XNodes work without all the abilities?

hooovahh replied to Sparkette's topic in VI Scripting

Are you sure? Because nothing in your reply has anything to do with XNodes, Hybrid XNodes, abilities, or anything else this thread is about. If you have a question that isn't related to the topic, please don't reply to that topic; make your own thread on LAVA, or on the dark side (forums.ni.com) in the appropriate subforum. As for your specific question, I've never done that with scripting, but I always assumed it was possible.

-

Yeah, I have used these OpenG functions on clusters of arrays of clusters, etc. They store and open the data fine (other than the issues the OP mentions), but they tend to get very slow. The OpenG read/write INI functions use the NI INI functions under the hood, so each line in the INI is a call to that NI function, which gets slow quickly. We found MGI Read/Write Anything to be a much faster solution, since they rewrote the INI parsing and use the normal File I/O instead of the NI INI functions. As for the original question, this is to be expected. You aren't saving the data in a binary format; you are converting it to ASCII and back, and that kind of conversion can lose precision. One thing that might help is to use the Floating Point Format input to specify the number of digits of precision used on the read and write. Or convert the number to a string of your own choosing and write that instead of the number.
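The precision loss from converting to ASCII and back is easy to demonstrate outside LabVIEW. This minimal Python sketch writes a double with a given number of significant digits and reads it back; the `fmt` argument plays roughly the role the Floating Point Format input plays in the INI functions, though LabVIEW's format syntax is not shown here. `round_trip` is a hypothetical helper.

```python
def round_trip(x: float, fmt: str) -> float:
    """Write x as text using a format spec, then parse it back."""
    return float(format(x, fmt))


value = 0.1 + 0.2  # stored as 0.30000000000000004

# 6 significant digits writes "0.3", so the value read back is different.
print(round_trip(value, ".6g") == value)   # False
# 17 significant digits is always enough for an IEEE 754 double to round-trip.
print(round_trip(value, ".17g") == value)  # True
```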

-

Why does it matter? If the answer is that you want to send frames at a periodic rate, then I'd say you are doing it wrong. If timing matters, don't use software timing. The same goes for writing to DAQmx analog outputs. If you are trying to make a nice clean sine wave on an analog output, you wouldn't do it by writing each value as a single-point AO write in a while loop; your timing just won't be consistent due to jitter. What you would do instead is build the full waveform, put the whole output in the hardware buffer, and then tell the hardware to play that waveform and repeat it. Here the hardware controls the timing.

You can do similar things with XNet. If you must send a periodic message every 10 ms, then tell XNet to send the signal (or frame) every 10 ms, and update its value whenever you want; the next time it sends, it will send the new value. Or if you want to build a queue of signals (or frames) to go out one after another 10 ms apart, you can do that too, adding more to the queue before it is empty. These different CAN modes are Stream, Queued, and Single-Point. Open the context help and it shows nice diagrams of how signals and frames are read or written in the different modes. The database you select defines the rates of the signals (or frames).
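The "build the whole waveform first" step for the analog output case might look like this minimal Python sketch, which computes one full sine cycle at a given sample rate. Writing the buffer to a DAQmx task and enabling regeneration is not shown; `sine_cycle` and the example rates are hypothetical. Choosing rates so one cycle is a whole number of samples keeps the repeated buffer free of a seam.

```python
import math
from typing import List


def sine_cycle(amplitude: float, frequency_hz: float, sample_rate_hz: float) -> List[float]:
    """One full cycle of a sine wave, sampled at sample_rate_hz.
    Once this is in the hardware buffer and regenerated, the device's sample
    clock (not a software loop) sets the timing of every point."""
    samples_per_cycle = sample_rate_hz / frequency_hz
    if samples_per_cycle != int(samples_per_cycle):
        raise ValueError("choose rates so one cycle is a whole number of samples")
    n = int(samples_per_cycle)
    return [amplitude * math.sin(2 * math.pi * k / n) for k in range(n)]


if __name__ == "__main__":
    buffer = sine_cycle(amplitude=2.0, frequency_hz=100.0, sample_rate_hz=10_000.0)
    print(len(buffer), "samples per cycle")
```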

-

The ViewPoint Systems one works fine and is newer, not that this means it is better. But honestly, I think using a toolkit is optional. I've generally just used TortoiseSVN, which adds right-click menus in Windows Explorer. I also work in the Lock, Edit, Commit scheme instead of Edit, Merge. This means files are read-only until you get a lock. Once you get the lock, the VI is no longer read-only. You make the changes you want and then commit them, which by default unlocks the file and sets it back to read-only, allowing someone else to update and lock it.

So if I'm working on a VI and I don't have the lock, I hit save, LabVIEW says it can't save because the file is read-only, and it opens a file dialog asking me where to save it. In this window I right-click my file and get the lock. I then cancel that window and save again, and this time it saves because I have the lock and the file isn't read-only. I can do all the SVN operations I want from the Explorer window and don't need to invoke another tool like ViewPoint. The only exceptions are things like invoking a compare, and renames, which generally need to happen both in SVN and in the open project. Note that if you have 32-bit LabVIEW on 64-bit Windows, you'll want to run this additional installer after installing the 64-bit TortoiseSVN client, so the right-click menus appear in 32-bit applications. This is mentioned on the TortoiseSVN download page.
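The Lock, Edit, Commit rules above can be summarized as a small state model. In Subversion, the `svn:needs-lock` property is what keeps working-copy files read-only until you lock them, and a commit releases your locks unless you ask it not to. This minimal Python sketch only models those rules in memory; `LockedFile` is a hypothetical class and does not call Subversion.

```python
from typing import Optional


class LockedFile:
    """Toy model of one file under the Lock, Edit, Commit workflow."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.lock_owner: Optional[str] = None
        self.content = ""

    def is_read_only_for(self, user: str) -> bool:
        # Read-only for everyone except the current lock holder.
        return self.lock_owner != user

    def lock(self, user: str) -> None:
        if self.lock_owner not in (None, user):
            raise PermissionError(f"{self.name} is locked by {self.lock_owner}")
        self.lock_owner = user

    def save(self, user: str, content: str) -> None:
        if self.is_read_only_for(user):
            raise PermissionError(f"{self.name} is read-only; get the lock first")
        self.content = content

    def commit(self, user: str, keep_lock: bool = False) -> None:
        if self.lock_owner != user:
            raise PermissionError(f"{user} does not hold the lock on {self.name}")
        if not keep_lock:
            self.lock_owner = None  # commit releases the lock by default


if __name__ == "__main__":
    vi = LockedFile("Main.vi")
    try:
        vi.save("me", "edit 1")
    except PermissionError as err:
        print(err)  # save refused until the lock is taken
    vi.lock("me")
    vi.save("me", "edit 1")
    vi.commit("me")
    print(vi.is_read_only_for("me"), vi.is_read_only_for("colleague"))  # True True
```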

-

I don't. In a good number of cases the computers aren't on a network, other than one between a few other PCs, all of which I control. In other cases they are put on the internet, but generally so they can be remoted into and have data uploaded to a network location automatically. These PCs are then put on the domain and controlled by IT. I don't. What kind of attack are you talking about? None. Never used encryption. Never needed a cloud provider; if I did, I would prefer one that used encryption.

-

To me, I don't think it does. Being part of the class may give the VI special privileges it otherwise wouldn't have. Being a member might give it access to private data, letting it do things that a user of your toolkit couldn't do without modifying the class. In a project, the folder structure doesn't have to mirror your files on disk, so some people place an examples folder in the project that links to files deep in other folders. That is another option. I'd suggest looking at other toolkits and posted code to get a feel for how the community organizes code and examples.

In LabVIEW there are about 3 or 4 ways to sort of get around the strictness of the language, but none of them are satisfying: polymorphic VIs, generics, VI macros, XNodes, and variants could all work with varying levels of success. I don't see the purpose of the OptionType class at all. It takes a variant and turns it into a cluster of a string and an array of words, and can then reverse this... isn't this what To Variant and Variant To Data do for you? Your unwrap also just clears the error and doesn't pass it out. What if there was a problem with the conversion? How will I know from using the API?

To help with variants, you can get the type of a variant and other information using some OpenG functions, or the native ones in vi.lib. Install Hidden Gems, or use 2015, which has them on the Variant palette. I suspect this could eliminate the OptionType class altogether.
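The point about unwrap swallowing the error can be illustrated in Python, with a dynamically typed value standing in for a variant and an `isinstance` check standing in for the type check. This is a minimal sketch of the "report the failure instead of clearing it" idea, not a port of the OptionType class; `unwrap` here is a hypothetical helper. Note that in Python `bool` is a subclass of `int`, so `unwrap(True, int)` passes.

```python
from typing import Any, Type, TypeVar

T = TypeVar("T")


def unwrap(value: Any, expected: Type[T]) -> T:
    """Convert a dynamically typed value back to a concrete type.
    On a mismatch it raises instead of clearing the error, so the caller
    finds out the conversion failed."""
    if not isinstance(value, expected):
        raise TypeError(f"expected {expected.__name__}, got {type(value).__name__}")
    return value


if __name__ == "__main__":
    print(unwrap(42, int))
    try:
        unwrap("42", int)
    except TypeError as err:
        print(f"conversion failed: {err}")
```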

3 replies

Tagged with: lazy lists, option types (and 1 more)

-

I would suggest both of these things. Maybe something like a laser pointer shining through it would make levels easier to detect. What is the lighting in the room going to be like? Can it be controlled? Lighting in vision is basically an art form, and not having control over it can make things difficult or impossible.

-

Yeah, I made them up, but I've often thought about what you could name higher dimensions like this. It is hard to conceptualize that many dimensions, and I think putting them in these terms makes it easier to describe to people: go to Library 4, go to Aisle 1, get Bookshelf 3 and the first shelf on it, grab the second book, turn to page 4, and you'll find the 2D array of data you want.
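The library analogy maps directly onto index arithmetic. This minimal Python sketch computes where an element sits in the flat, row-major storage of an N-dimensional array (the layout C uses), then applies it to the "Library 4, Aisle 1, Bookshelf 3, first shelf, second book, page 4" example with zero-based indices. The sizes in `SHAPE` are made up for illustration, and `row_major_offset` is a hypothetical helper.

```python
from math import prod
from typing import Sequence

# Hypothetical sizes for each dimension, outermost first:
# libraries, aisles, bookshelves, shelves, books, pages, rows, columns
SHAPE = (5, 2, 4, 3, 2, 5, 3, 3)


def row_major_offset(index: Sequence[int], shape: Sequence[int]) -> int:
    """Position of an element in the flat, row-major storage of an N-D array."""
    if len(index) != len(shape):
        raise ValueError("need one index per dimension")
    offset = 0
    for i, size in zip(index, shape):
        if not 0 <= i < size:
            raise IndexError(f"index {i} out of range for size {size}")
        offset = offset * size + i
    return offset


if __name__ == "__main__":
    # Library 4, Aisle 1, Bookshelf 3, first shelf, second book, page 4,
    # as zero-based indices, then row 0, column 0 of the 2D page.
    page_start = row_major_offset((3, 0, 2, 0, 1, 3, 0, 0), SHAPE)
    page_size = SHAPE[-2] * SHAPE[-1]
    print(f"the 2D page occupies elements {page_start}..{page_start + page_size - 1}"
          f" of {prod(SHAPE)}")
```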

-

Yeah, reading it again, I suspect this is really all the assignment is.

-

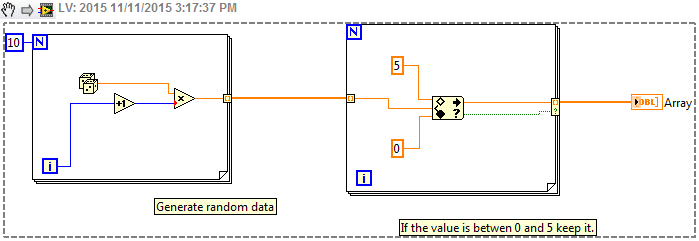

You made a 12-dimensional array. That is not what you want. Rarely do you need more than two dimensions, and this problem really only needs two: rows and columns. You have Rows, Columns, Pages, Books, Shelves, Bookshelves, Aisles, Libraries, Towns, States, Countries, and Planets. There are many ways to go about this. They will all involve some type of sorting mechanism, maybe calling In Range and Coerce for the 12 different ranges, then using conditional, concatenating tunnels to build them into the rows. Here is a quick example of getting data in a range. This is a snippet and can be brought into LabVIEW as executable code. Also, here is some free online training that explains the basics of LabVIEW, though I assume that's why you are taking the course. Links at the bottom. https://decibel.ni.com/content/docs/DOC-40451
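The "one row per range" idea can be sketched in Python for anyone reading along without LabVIEW. For each (low, high) range, the comprehension keeps only the values inside it, which is roughly what a range check feeding a conditional tunnel does in a For Loop. Treating both limits as inclusive is an assumption, and `bin_into_rows` is a hypothetical helper. The rows come out with different lengths; a LabVIEW 2D array built from them would pad the shorter rows.

```python
from typing import List, Sequence, Tuple


def bin_into_rows(values: Sequence[float],
                  ranges: Sequence[Tuple[float, float]]) -> List[List[float]]:
    """Build one row per (low, high) range holding the values that fall inside it.
    Both limits are treated as inclusive, which is an assumption; adjust to match
    the assignment."""
    rows: List[List[float]] = []
    for low, high in ranges:
        # Keep only in-range values, like a conditional tunnel in a For Loop.
        rows.append([v for v in values if low <= v <= high])
    return rows


if __name__ == "__main__":
    data = [3, 17, 8, 25, 12, 1]
    for row in bin_into_rows(data, [(0, 9), (10, 19), (20, 29)]):
        print(row)
```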

-

Also, did you take a picture of a piece of paper, of a printed front panel, of a VI? Is this an assignment? Not that we won't help with assignments, but we won't just do the work for you.