All Activity

- Today

-

BevisChan joined the community

-

windbear joined the community

- Yesterday

-

bartek8118 joined the community

-

Soumik1506 joined the community

-

Hello Rolf, thank you for the help. I did download the source files for HDF5 and HDF5Labview. You're right that it can take some time to get it working. For that reason I'll start by streaming data from the cRIO to the Windows GUI PC and use that for now; at least that reduces some risk. Meanwhile I'll try to get the cross compiler working. I should at least be able to get a "hello world" running on the cRIO and take some further steps when I find the time. I found the following site: https://nilrt-docs.ni.com/cross_compile/cross_compile_index.html Do you think this site is still up to date, and is it a good starting point? I am only targeting an NI-9056 for now. Thanks!

-

DenisLog joined the community

-

Knox joined the community

-

yingfanxing joined the community

-

Tree control how to add custom icon to column

FANBIN86 replied to Vandy_Gan's topic in LabVIEW Community Edition

This 2D picture is overlaid transparently on the tree, and the graphics are drawn at the (row, col) coordinates you need for display.

- Last week

-

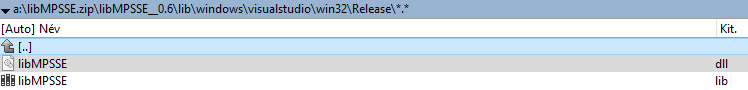

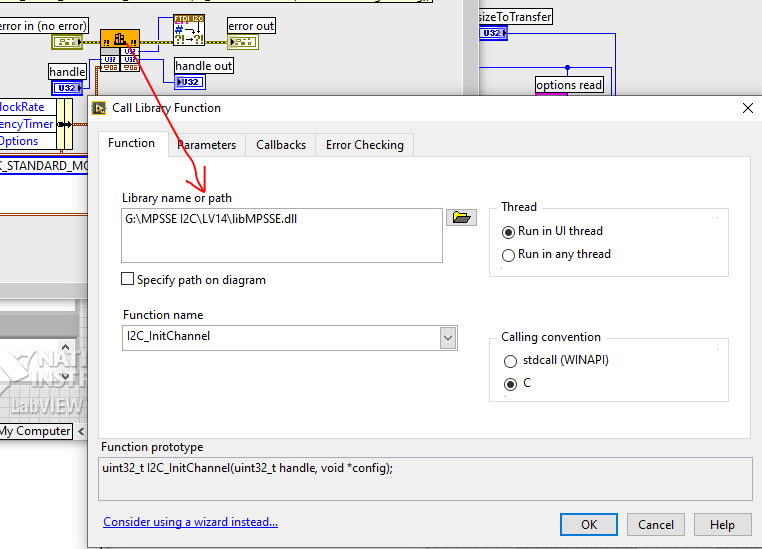

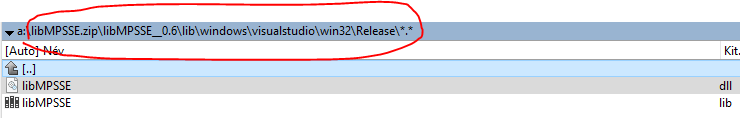

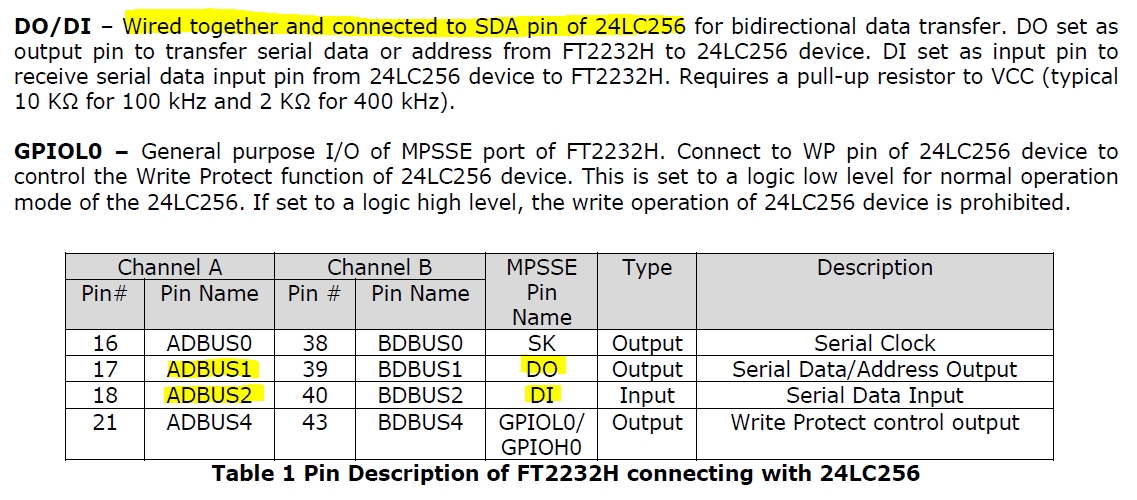

There is an updated LibMPSSE library on FTDI's website: LibMPSSE-I2C Examples https://www.ftdichip.com/old2020/Support/SoftwareExamples/MPSSE/libMPSSE.zip If you replace the libMPSSE.dll in the project folder with the one from that archive, it should work. When you open the VI, the run arrow may be broken. In that case you need to set the Library Name for the Call Library Function Node in each subVI. I've just tried it, and it works.

-

LabVIEW FTDI MPSSE I2C DeviceRead always returning 0xFF

gammaray replied to Arj's topic in Code Repository (Uncertified)

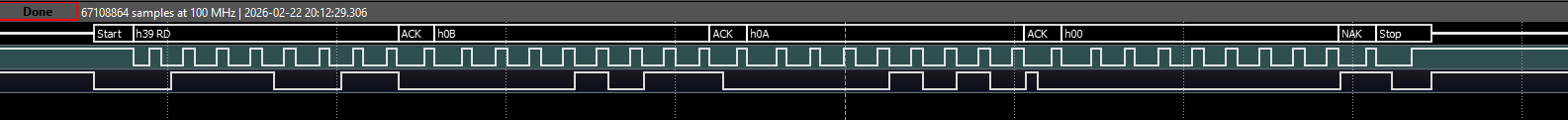

By the way, this library is a bit weird. I haven't yet been able to get the master to send an ACK after each byte when more bytes need to be read, and a NAK after the last byte has been received.

-
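The behaviour gammaray is trying to get is the standard I2C master-receiver convention: the master acknowledges every byte it still wants and refuses (NAKs) the last one so the slave releases the bus. A minimal Python sketch of that rule only; it models the bus protocol, not the libMPSSE API, and the function names are hypothetical:

```python
# I2C master-receiver acknowledge rule (bus-level model, not a libMPSSE call).
# After each byte read from the slave, the master drives the 9th clock bit:
#   ACK (SDA pulled low)      -> "send me another byte"
#   NAK (SDA released, high)  -> "that was the last byte"
ACK = 0
NAK = 1

def ack_bits_for_read(num_bytes: int) -> list[int]:
    """Acknowledge bit the master should send after each received byte."""
    if num_bytes < 1:
        raise ValueError("an I2C read transfers at least one byte")
    return [ACK] * (num_bytes - 1) + [NAK]

def describe(num_bytes: int) -> str:
    """Human-readable version, e.g. for checking a logic-analyzer capture."""
    return " ".join("ACK" if bit == ACK else "NAK" for bit in ack_bits_for_read(num_bytes))

if __name__ == "__main__":
    for n in (1, 3):
        print(n, describe(n))
```

A logic-analyzer capture of a multi-byte read can be checked against `describe(n)` to see whether the library is really sending a NAK only after the final byte.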

LabVIEW FTDI MPSSE I2C DeviceRead always returning 0xFF

gammaray replied to Arj's topic in Code Repository (Uncertified)

Hi, it was long ago when you asked, and you've probably already solved it. I just started using the FT2232H in LabVIEW and noticed your question. Connect the DO and DI pins to each other and it will work.

-
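Why tying DO and DI together helps can be illustrated with a toy model of the open-drain SDA line. It rests on two assumptions not stated in the thread: that the MPSSE drives SDA on its DO pin but samples it on its DI pin, and that an unconnected DI input reads high. Under those assumptions every read returns 0xFF, matching the symptom in the topic title. All names are hypothetical:

```python
# Toy model of an open-drain I2C data line (SDA) read through the FT2232H MPSSE.
# Assumptions: the master samples SDA on DI, and a floating DI reads as 1.

def sda_level(drivers_pulling_low: list[bool]) -> int:
    """Wired-AND bus: low (0) if any device pulls it low, otherwise the pull-up gives 1."""
    return 0 if any(drivers_pulling_low) else 1

def read_byte(slave_bits: list[int], di_connected_to_sda: bool) -> int:
    """Sample 8 bits MSB-first on the master's DI pin while the slave transmits."""
    if len(slave_bits) != 8:
        raise ValueError("expected exactly 8 bits")
    value = 0
    for bit in slave_bits:
        # The master has released SDA; the slave pulls low for 0 and releases for 1.
        line = sda_level([bit == 0])
        # If DI is not wired to SDA it never sees the slave, only its own high level.
        sampled = line if di_connected_to_sda else 1
        value = (value << 1) | sampled
    return value

if __name__ == "__main__":
    bits = [1, 0, 1, 0, 0, 1, 0, 1]
    print(hex(read_byte(bits, di_connected_to_sda=True)))
    print(hex(read_byte(bits, di_connected_to_sda=False)))
```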

Never used them or had a need for them.

-

Unfortunately, I don’t have that privileged access, no… 😄 A very simple example: it would allow consistent display between a table and its corresponding intensity chart, right? But also for any display that needs to be right-to-left, such as numbers, etc.

-

And what is your point of view about Q Controls?

-

Rolf Kalbermatter started following About X-Controls... :-D and Growing direction of arrays at edit time

-

Growing direction of arrays at edit time

Rolf Kalbermatter replied to ManuBzh's topic in LabVIEW General

I can't really remember ever having seen such a request, and to be honest I never felt the need for it. Thinking about it, it makes some sense to support it, and it would probably not be that much work for the LabVIEW programmers, but development priorities are always a bitch. I can think of several dozen other things that I would rather have and that NI has pushed down the priority list for many years. The best chance to have something like this ever considered is to add it to the LabVIEW Idea Exchange https://forums.ni.com/t5/LabVIEW-Idea-Exchange/idb-p/labviewideas. That is, unless you know one of the LabVIEW developers personally and have some leverage to pressure them into doing it. 😁

-

I didn't know this site, Shaun, thank you. All three of us in this topic started with LabVIEW a loooonnnggg time ago, it seems...

-

M. Darren Tiger does ban them publicly! 😄

-

Absolutely echo what Shaun says. Nobody banned them. But most who tried to use them have, after a more or less short time, run from them, with many hairs ripped out of their heads, a few nervous tics from too much caffeine, and a vow never to try them again. The idea is not really bad, and if you are willing to suffer through it you can make pretty impressive things with them, but the execution of that idea is anything but ideal and in many places feels like a half-thought-out idea that was abandoned once it was kind of working, but before it became a really easy-to-use feature.

-

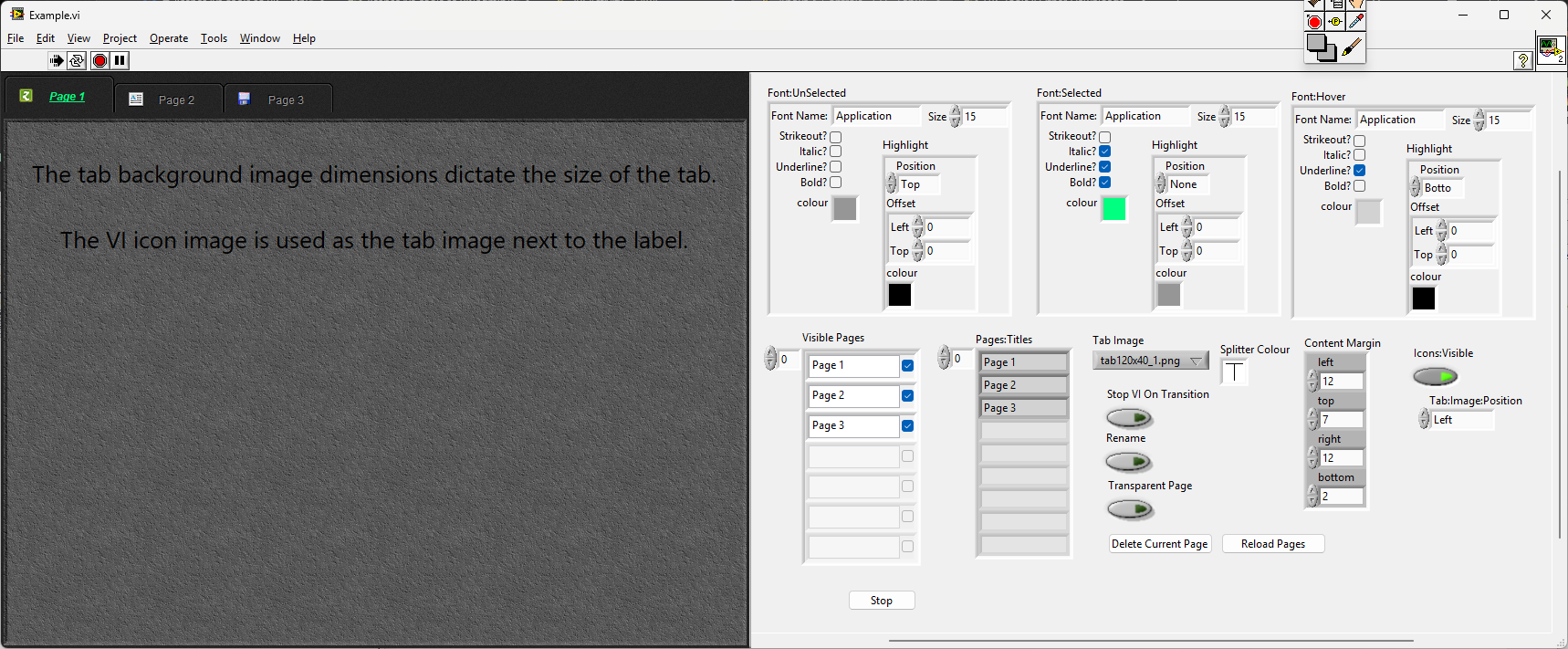

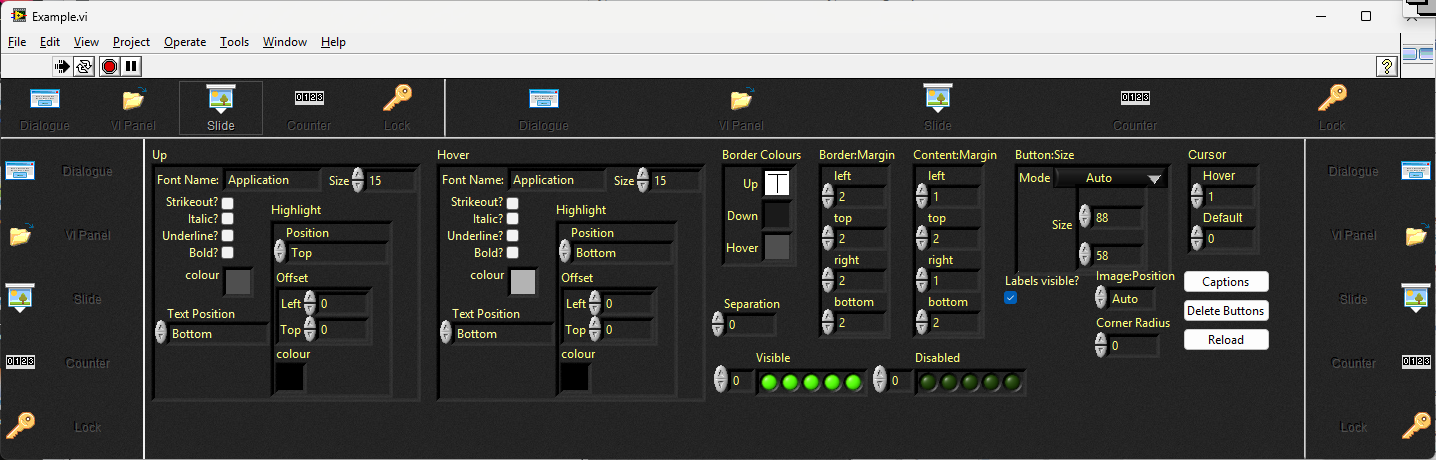

They aren't banned. They are just very hard to debug when they don't work, and they have some unintuitive behaviours. I have Toolbar and Tab pages XControls that I use all the time, and there is a markup string XControl here. If you are a real glutton for punishment, you can play with XNodes too.

-

For sure, this must have been debated here over and over... but: what are the reasons why X-Controls are banned? Is it because they're buggy, or because people do not understand their purpose or philosophy and code them incorrectly, leading to problems?

-

I would find it useful (maybe it already exists) to be able to choose the growing direction of an array at edit time. It's usually from left to right and/or top to bottom, but other directions could be useful. Yes, I can do it programmatically, but it's cumbersome and it's useless computation, since it could be done directly by the graphical engine. Is it possible (and I forgot about it) or already requested on the wishlist? (I'm quite sure it's one of the two!) Emmanuel
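The "programmatic" workaround Emmanuel mentions boils down to simple index arithmetic. A hypothetical Python sketch (in LabVIEW the same reversal would be done with array functions before writing to the indicator) that reorders a 2D array so it appears to grow right-to-left and/or bottom-to-top, plus the inverse mapping from a displayed cell back to the data index, e.g. to keep a table and an intensity chart consistent:

```python
# Hypothetical helpers: choose which corner element [0][0] appears in when displayed.

def display_order(data, grow_right_to_left=False, grow_bottom_to_top=False):
    """Return a copy of a 2D list reordered for display in the chosen direction."""
    rows = [list(r) for r in data]
    if grow_right_to_left:
        rows = [r[::-1] for r in rows]   # mirror each row horizontally
    if grow_bottom_to_top:
        rows = rows[::-1]                # mirror the row order vertically
    return rows

def display_to_data_index(row, col, n_rows, n_cols,
                          grow_right_to_left=False, grow_bottom_to_top=False):
    """Map a clicked/displayed cell back to the index in the underlying data."""
    data_row = n_rows - 1 - row if grow_bottom_to_top else row
    data_col = n_cols - 1 - col if grow_right_to_left else col
    return data_row, data_col

if __name__ == "__main__":
    grid = [[1, 2, 3], [4, 5, 6]]
    for r in display_order(grid, grow_right_to_left=True, grow_bottom_to_top=True):
        print(r)
```

The cost Emmanuel objects to is visible here: every update copies and reverses the data, which a built-in display option would avoid.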

-

carsten19 joined the community

-

X___ started following rtm files not copied when releasing a source distribution?

-

Using LV 2021 SP1, I am trying to release my source code but realized that, in addition to the problem described in this thread, I am missing .rtm files that I took great care to move into their respective libraries (so that they would be included in the release). They are simply ignored when the source code distribution is created; otherwise they would show up in the list of files generated during the build (which I am using in the script described in the quoted thread above). Note that the Source Files tab of the source code distribution properties dialog does not show which files of a library are included, only that the library itself (XXX.lvlib) is included. I suppose a workaround would be to remove this type of file (and other non-.vi(m), .ctl, etc. files) from the libraries and mark them individually as always included, but that kind of defeats the purpose of using libraries to provide self-contained code and asset packages. Is this documented behavior or a missing feature?
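Since the build already produces a file list, one way to at least detect the dropped files automatically is to compare it against the library's members. A Python sketch under several assumptions: that an .lvlib is an XML file whose `Item` elements carry a `URL` attribute with each member's relative path (as it appears when opened in a text editor), that the build's file list is available as a list of paths, and that the set of "code" extensions below is the right one. The sample library content is hypothetical:

```python
import xml.etree.ElementTree as ET
from pathlib import PurePosixPath

# Assumption: extensions the build is expected to handle on its own.
CODE_EXTENSIONS = {".vi", ".vim", ".ctl", ".lvclass", ".lvlib"}

# Hypothetical, trimmed-down .lvlib content for illustration.
SAMPLE_LVLIB = """<Library>
  <Item Name="Main.vi" Type="VI" URL="../Main.vi"/>
  <Item Name="Strings.rtm" Type="Document" URL="../Strings.rtm"/>
  <Item Name="Assets" Type="Folder">
    <Item Name="Logo.png" Type="Document" URL="../Assets/Logo.png"/>
  </Item>
</Library>"""

def library_member_names(lvlib_xml: str) -> set[str]:
    """File names of all library members, including those inside virtual folders."""
    root = ET.fromstring(lvlib_xml)
    names = set()
    for item in root.iter("Item"):          # iter() also walks nested folder Items
        url = item.get("URL")
        if url:                             # folders have no URL attribute
            names.add(PurePosixPath(url).name)
    return names

def missing_non_code_files(lvlib_xml: str, built_files: list[str]) -> list[str]:
    """Non-code library members whose file name does not appear in the build output.

    Matching is by file name only, so two members with the same name in
    different folders would not be told apart.
    """
    built_names = {PurePosixPath(p.replace("\\", "/")).name for p in built_files}
    return sorted(
        name for name in library_member_names(lvlib_xml)
        if PurePosixPath(name).suffix.lower() not in CODE_EXTENSIONS
        and name not in built_names
    )

if __name__ == "__main__":
    built = [r"C:\build\src\Main.vi", r"C:\build\src\Assets\Logo.png"]
    print(missing_non_code_files(SAMPLE_LVLIB, built))
```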

-

By Evan joined the community

-

Andre Aronis joined the community

- Earlier

-

Rolf Kalbermatter started following Ctl reedited is invalid after saved and h5labview on cRIO?

-

It's a little more complicated than that. You don't just need an *.o file; you need an *.o file for every C/C++ source file in that library, which you then link into a *.so file (the Linux equivalent of a Windows DLL). Also, there are two different cRIO families: the 906x, which runs Linux compiled for an ARM CPU, and the 903x, 904x, 905x and 908x, which all run Linux compiled for a 64-bit Intel (x86-64) CPU. Your *.so needs to be compiled for one of these two, depending on the cRIO chassis you want to run it on. Then you need to install that *.so file onto the cRIO. In addition, you would have to review all the VIs to make sure they still match the functions as exported by this *.so file. I haven't checked the h5F library, but there is always a chance that the library differs between platforms because of the features each platform provides. The thread you mentioned already showed that alignment was obviously a problem. But if you haven't done any C programming, it is not very likely that you will get this working in a reasonable time.
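Rolf's two points (pick the architecture from the chassis family, then compile every source file to an object and link all objects into one shared library) can be sketched as a small Python helper that only builds the command lines. The family table follows the post; `CROSS_GCC` is a placeholder for whatever gcc driver the NI Linux Real-Time cross-compile toolchain installs, not its real name; `-fPIC`, `-c` and `-shared` are the usual GCC flags for building a shared object. A real HDF5 build would also need include paths and link flags for the HDF5 library itself, which are omitted here:

```python
from pathlib import PurePosixPath

# cRIO families as described in the post: 906x is ARM, the others are 64-bit Intel.
FAMILY_ARCH = {
    "903": "x86_64",
    "904": "x86_64",
    "905": "x86_64",
    "906": "arm",
    "908": "x86_64",
}

def target_arch(model: str) -> str:
    """Map a chassis name such as 'NI-9056' or 'cRIO-9068' to its CPU architecture."""
    digits = "".join(ch for ch in model if ch.isdigit())
    family = digits[:3]
    if len(digits) != 4 or family not in FAMILY_ARCH:
        raise ValueError(f"unknown cRIO model: {model!r}")
    return FAMILY_ARCH[family]

def build_commands(sources: list[str], cc: str, output: str) -> list[list[str]]:
    """One compile command per source file, then a single link step into a .so."""
    commands = []
    objects = []
    for src in sources:
        obj = str(PurePosixPath(src).with_suffix(".o"))
        objects.append(obj)
        # -fPIC: position-independent code, needed for objects linked into a shared library
        commands.append([cc, "-fPIC", "-c", src, "-o", obj])
    # -shared: link all objects into one shared library (the *.so loaded by LabVIEW)
    commands.append([cc, "-shared", "-o", output, *objects])
    return commands

if __name__ == "__main__":
    print(target_arch("NI-9056"))
    # CROSS_GCC is a placeholder, not the toolchain's actual binary name.
    for cmd in build_commands(["hello.c"], "CROSS_GCC", "libhello.so"):
        print(" ".join(cmd))
```

For the NI-9056 mentioned earlier in the thread, this picks the 64-bit Intel toolchain; a "hello world" library built this way is a reasonable first test before attempting HDF5.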

-

Hello, I am using h5labview on a Windows PC connected to a cRIO. This seems to work fine. I would rather have the file saving run on the cRIO, though. Does anyone know if there is a how-to on this subject? The most recent link I can find is this one: https://sourceforge.net/p/h5labview/discussion/general/thread/479d0cdf61/?limit=25#c81d That thread is a few years old, and even if I were able to compile and get a *.o file, I would not know how to use it on the cRIO. I have some Linux experience and can set up a Linux VM. I use LabVIEW 2024. Any help is appreciated, thank you!

-

Ctl reedited is invalid after saved

ensegre replied to Vandy_Gan's topic in LabVIEW Community Edition

And must you really do it in a compiled executable? Cosmetic properties can be changed programmatically on the fly, even in an exe.

-

Ctl reedited is invalid after saved

Rolf Kalbermatter replied to Vandy_Gan's topic in LabVIEW Community Edition

Many functions in LabVIEW that are related to editing VIs are restricted to run only in the development environment (the editor). That generally also includes almost all VI Server functions that modify LabVIEW objects, except UI things (so editing the text of your UI Boolean is safe), but saving such a control is not supported. And all the brown nodes are private anyway, which means they may work, or not, or stop working, or be removed in a future version of LabVIEW at NI's sole discretion. Using them is fun in your trials and exercises, but a no-go in any end-user application unless you want to risk breaking your app for several possible reasons, such as building an executable from it, upgrading the LabVIEW version, or simply bad luck.

-

Thanks from 2026 for that Dropbox-Link ! 🤩

-

Ctl reedited is invalid after saved

Vandy_Gan replied to Vandy_Gan's topic in LabVIEW Community Edition

@ensegre I had watched a video on YouTube that demonstrated this function, but I don't remember the link.

-

Ctl reedited is invalid after saved

Vandy_Gan replied to Vandy_Gan's topic in LabVIEW Community Edition

@Zou I would also like to modify the text's properties, e.g. font, size and position, because I want to batch-modify many controls.

-

It's just Boolean text. Do you really need to save it to a file?