Leaderboard

Popular Content

Showing content with the highest reputation on 06/29/2012 in all areas

-

Last year I had a product mostly ready to go that made heavy use of this functionality, but I had to rip it out fairly late in the process because of some fundamental flaws (mainly #1 in asbo's list, but also some other issues). Please don't rely on this functionality in any app that you're not planning to throw away.

-

Over the past few years, I have advocated that several private properties/methods that could be useful to the wider LabVIEW audience be made public or scripting. I have been largely successful. Please let me know (on this thread, with PMs, or whatever) if y'all come across private entries that you think would be useful, and I'll see what I can do to make them official in a future LabVIEW version.

-

In general, unlike the scripting stuff, these are more general methods and properties that were added for various reasons during the development of LabVIEW, usually to allow a certain LabVIEW tool to do something. While those methods are there, they do not receive the same attention in terms of maintenance, unit test coverage and, of course, documentation. They can, and sometimes do, break in use cases other than the intended ones, they are left out in the cold when NI creates a new LabVIEW version, and they are simply the unloved stepchild in terms of care and maintenance in general. Whoever added them for their specific tool is responsible for making sure they keep working in newer releases, but it's very likely that a developer adding a new feature to LabVIEW isn't aware of some of them in the first place and in the process breaks them horribly, and because of the limited unit test coverage, such breakage may not get discovered. So, in conclusion: play with them, have fun, and enjoy the feeling of having a privileged inside view into LabVIEW, but DON'T use them for anything that you want to keep working across new LabVIEW versions, especially if you plan to develop something that might end up being used by people other than yourself.

-

Easy answer: instead of calling Quit LabVIEW, use System Exec to run "taskkill /f /im {appname}.exe". Hard answer: break down all of the technologies you use (DAQ, TCP, Shared Variables, etc.) and figure out which one isn't shutting down properly. You didn't mention the version of LabVIEW you're using, but I'll assume it's LV2011. Try turning off SSE2 optimizations in your build.
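As a hedged illustration of the "easy answer", here is a small Python sketch of the command line that System Exec would run. taskkill selects a process by executable (image) name with its `/im` switch and force-terminates it with `/f`. The helper names `taskkill_args` and `kill_app` are hypothetical, and the kill step only runs on Windows.

```python
from __future__ import annotations

import subprocess
import sys


def taskkill_args(appname: str) -> list[str]:
    """Build the argument list that force-kills a process by its image name.

    /f forces termination; /im selects the process by executable name.
    """
    image = appname if appname.lower().endswith(".exe") else appname + ".exe"
    return ["taskkill", "/f", "/im", image]


def kill_app(appname: str) -> int:
    """Run taskkill (Windows only) and return its exit code."""
    if sys.platform != "win32":
        raise OSError("taskkill is only available on Windows")
    result = subprocess.run(taskkill_args(appname), capture_output=True, text=True)
    return result.returncode


if __name__ == "__main__":
    print(taskkill_args("MyApp"))  # ['taskkill', '/f', '/im', 'MyApp.exe']
```

This is a blunt instrument: it skips any cleanup the application would normally do, which is why the "hard answer" is still worth pursuing.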

-

V I Engineering, Inc. is donating 2 x Microsoft Kinects for Windows, compatible with LabVIEW! Upgrade your test systems to a virtually real* HMI! Track gestures and objects in the room and control your virtual world through the power of Kinect and LabVIEW (you can find out more about communicating with the Microsoft Kinect here and here, and you can download drivers here). *This door prize will not make you look like Tom Cruise. Unless, of course, you already look like Tom Cruise. In that case, sure, let's say that this door prize will make you look like Tom Cruise. Yeah, that sounds pretty good - put that in the post. Wait, are you writing that last bit down? No, the "...let's say that this door prize will make you look like Tom Cruise" bit. No, don't put that part in the post - I don't want anyone to think we're actually promising to make them look like Tom Cruise. I mean, c'mon, they'll never fall for that. Will they? You know what, maybe you're right - put it in there and let's see if anyone falls for it. Wait, why are you still writing?

-

Any USB device is going to be limited in its current capability (both sourcing and sinking), and usually only 5 V. You didn't say which relays you're using (5 V/12 V/24 V). You are much better off going for a PCI solution such as the NI PCI-6517, which can drive 12 V and 24 V relays directly without intermediary hardware (32 x 125 mA max, or 425 mA for a single activated relay). You'll also have more than enough current headroom to add LEDs that can burn retinas at 100 paces. If it is a 5 V relay, you can still use the same card, but depending on the relay you may have to put a resistor in-line to drop the lower (off) threshold. Most of the time you can get away without this, however.

-

Here are some options: It's possible someone has already done this. I seem to have a vague recollection of it, but I'm not sure. It might be easier to just build a new project containing only the VIs you want. I usually try to keep only the VIs I actually need to access in the project. You might be able to drag virtual folders from one project window to another to speed this up, although I'm not sure about this. I would expect that writing a VI like the one you want shouldn't be that hard, assuming you have a small number of main VIs that hold the others in memory. Basically, you need to open the main VI and get all its dependencies (either through the Get VI Dependencies method or by recursively using the Callees property on all the subVIs). Once you have that list, you need to iterate through all the VIs in the project and check whether each one is on that list. If it isn't, it's either orphaned or a top-level VI, and you should already have a list of top-level VIs (in fact, they can be part of the first list). I'm assuming there's a method you can use to remove VIs from the project at that point, but I haven't looked for it. Of course, one more thing you would want to consider at this point is deleting the VI (presumably you don't need it anymore if nothing is calling it). And one more "of course": you would want SCC so that you can revert if something goes wrong.
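The dependency walk described above is essentially a reachability search over the call graph. The Python sketch below only models it: the `callees` dictionary is a hypothetical stand-in for what the Callees property or Get VI Dependencies would report, and the VI names are made up. Anything in the project that is not reachable from a listed top-level VI is a candidate orphan (or a top-level VI you forgot to list).

```python
from __future__ import annotations

from collections.abc import Iterable, Mapping


def reachable_vis(top_level: Iterable[str], callees: Mapping[str, Iterable[str]]) -> set[str]:
    """Collect every VI reachable from the top-level VIs, including the top-level VIs.

    `callees` maps a VI name to the subVIs it calls (hypothetical data model).
    """
    seen: set[str] = set()
    stack = list(top_level)
    while stack:
        vi = stack.pop()
        if vi in seen:
            continue  # already visited; also stops cycles in the call graph
        seen.add(vi)
        stack.extend(callees.get(vi, ()))
    return seen


def orphaned_vis(project_vis: Iterable[str], top_level: Iterable[str],
                 callees: Mapping[str, Iterable[str]]) -> set[str]:
    """VIs that are in the project but not reachable from any top-level VI."""
    return set(project_vis) - reachable_vis(top_level, callees)


if __name__ == "__main__":
    callees = {
        "Main.vi": ["Init.vi", "Process.vi"],
        "Process.vi": ["Helper.vi"],
        "Old Tool.vi": ["Helper.vi"],
    }
    project = ["Main.vi", "Init.vi", "Process.vi", "Helper.vi", "Old Tool.vi", "Unused.vi"]
    print(sorted(orphaned_vis(project, ["Main.vi"], callees)))  # ['Old Tool.vi', 'Unused.vi']
```

Note that `Old Tool.vi` shows up even though it calls something: that is exactly the "orphaned or top-level" ambiguity mentioned above, so a human (and SCC) should review the list before anything is deleted.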

-

If you think about it for a few seconds, you will recognize that this is true. When a path is passed in, LabVIEW has to verify on every call that the path has not changed with respect to the last call. That is not a lot of CPU cycles for a single call, but it can add up if you call many Call Library Nodes like that, especially in loops. So if the DLL name doesn't really change, it's a lot better to enter the library name in the configuration dialog, as LabVIEW then evaluates the path only once, at load time, and never again afterwards. If LabVIEW didn't do this check, the performance of the Call Library Node would be abominably bad, since loading and unloading DLLs is a real performance killer; the path comparison is just a micro-delay by comparison. If I had to guess, an unchanging diagram path adds maybe 100 µs, maybe a bit more, but compare that to the overhead of the Call Library Node itself, which is in the range of single µs. Comparing paths for equality is the most expensive comparison case, as you can only determine equality after comparing every single element and character. Inequality takes on average half the execution time, since you can break out of the comparison at the first difference.
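A rough Python model of the trade-off described above, assuming (for illustration only) that the node remembers the last path and reloads the library only when the wired path differs. `PathCachedCall` and `paths_equal` are hypothetical names, and this is not LabVIEW's actual internal implementation. The point it shows: an unchanged path pays a full character-by-character comparison on every call, while a different path can be rejected at the first mismatching character.

```python
from typing import Optional, Tuple


def paths_equal(a: str, b: str) -> Tuple[bool, int]:
    """Compare two paths character by character.

    Returns (equal, characters_examined). Equality needs every character;
    inequality stops at the first difference.
    """
    examined = 0
    for ca, cb in zip(a, b):
        examined += 1
        if ca != cb:
            return False, examined
    return len(a) == len(b), examined


class PathCachedCall:
    """Hypothetical model of a Call Library Node with the DLL path wired on the diagram."""

    def __init__(self) -> None:
        self.loaded_path: Optional[str] = None
        self.loads = 0           # expensive library (re)loads
        self.chars_compared = 0  # cheap per-call comparison work

    def call(self, path: str) -> None:
        if self.loaded_path is not None:
            same, examined = paths_equal(self.loaded_path, path)
            self.chars_compared += examined
            if same:
                return  # library already loaded: only the comparison was paid
        # First call or changed path: model an unload/load of the library.
        self.loaded_path = path
        self.loads += 1


if __name__ == "__main__":
    path = r"C:\libs\sqlite3.dll"
    node = PathCachedCall()
    for _ in range(1000):
        node.call(path)
    print(node.loads, node.chars_compared)  # 1 18981
```

Configuring the library name in the dialog instead corresponds to doing the load once and dropping the per-call comparison entirely.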

-

I've written my own SQLite implementation, making this the fifth I'm aware of: yours, mine, ShaunR's, SmartSQLView, and a much older one written by someone at Philips. Handling Variants can be done (mine handles them), but there are several gotchas to deal with. SQLite's strings can contain binary data, like LabVIEW strings. It looks like your functions are set up to handle the \0s in text, so that's not a problem. So you can just write strings as text and flattened data as blobs, then use the type of the column to determine how to read the data back.
The next trick is how to handle NULLs. As your code is written now, NaNs, empty strings and NULLs will all be saved as SQLite NULLs. The empty strings become NULL because an empty string is passed to bind text as a 0 pointer, so when you have an empty string you need to set the number of bytes to 0 but pass in a non-empty string. I never figured out an ideal solution for NaNs. Since I treat NULLs as empty variants, I couldn't store NaNs as NULLs. The way I handled NaNs was to flatten them and store them as blobs. I also flattened empty variants that have attributes instead of storing them as NULLs (otherwise the attribute information would be lost). Be aware of type affinity, since that can screw this up. I like how you used property nodes to handle the binding and reading of different types. If you don't mind, I might try to integrate that idea into my implementation.
If you want to improve performance: passing the DLL path to every CLN can add a lot of overhead to simple functions (at least when I last checked). I use wildcards in the library name instead (see http://zone.ni.com/reference/en-XX/help/371361H-01/lvexcodeconcepts/configuring_the_clf_node/). If you're executing multiple statements from one SQL string, you can avoid making multiple string copies by converting the SQL string to a pointer (DSNewPtr and MoveBlock; be sure to release it with DSDisposePtr even if an error has occurred). Then you can just use prepare_v2 with pointers directly. You might want to add the output of sqlite3_errmsg to "SQLite Error.vi"; I've found it helpful.
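The NULL / empty-string / NaN distinction can be tried out quickly with Python's built-in `sqlite3` module, which binds an empty Python string as empty TEXT rather than NULL. This is a sketch of the idea, not either poster's LabVIEW code: the one-byte `b"D"` tag marking a flattened double is a hypothetical convention, and the column is declared without a type (giving it BLOB affinity) so that SQLite doesn't coerce stored values. NaN goes into a blob because SQLite stores a NaN bound as a double as NULL.

```python
import math
import sqlite3
import struct

NAN_TAG = b"D"  # hypothetical 1-byte tag marking a flattened IEEE 754 double


def encode(value):
    """Map a Python value to what gets bound; NaN is flattened into a tagged blob."""
    if isinstance(value, float) and math.isnan(value):
        return NAN_TAG + struct.pack("<d", value)
    return value  # None -> NULL, str (including "") -> TEXT, float -> REAL


def decode(value):
    """Undo encode(): tagged 9-byte blobs become doubles again."""
    if isinstance(value, bytes) and len(value) == 9 and value[:1] == NAN_TAG:
        return struct.unpack("<d", value[1:])[0]
    return value


def roundtrip(values):
    """Store values in an in-memory table and read them back with their storage class."""
    con = sqlite3.connect(":memory:")
    try:
        # No declared type on v: BLOB affinity, so values are stored as bound.
        con.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, v)")
        con.executemany("INSERT INTO t (v) VALUES (?)", [(encode(x),) for x in values])
        rows = con.execute("SELECT typeof(v), v FROM t ORDER BY id").fetchall()
    finally:
        con.close()
    return [(storage, decode(v)) for storage, v in rows]


if __name__ == "__main__":
    for storage, v in roundtrip([None, "", "abc", 1.5, float("nan")]):
        print(storage, repr(v))
```

Reading back by storage class (`typeof`) mirrors the "use the type of the column to determine how to read the data back" suggestion: the empty string returns as TEXT, a real NULL stays NULL, and the NaN survives as a blob.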

-

No. There is no chance at all that we'll release that. I will tell you that it's titled Primitives for Dummies and has at least a dozen pages. The only part you're wrong about is the watermark on every page. I should point out that the document starts with "Tremble young seeker for thou art entering a secret chamber in the LabVIEW shop of horrors." I don't have much more to say about this, except that we keep parts of LabVIEW private because they are not ready for use by the general public or even by experimenters. If you want to experiment, I suggest you check out NI Labs. I think it's great that you want to experiment, but there are a lot of Bad Things™ that can happen. You can corrupt VIs and not get them back. Or the corruption may have no effect for a long time, and then suddenly things start to break in unexpected ways. I'm not telling you this to protect you or because I feel NI is threatened; I'm telling you because there's the potential to break things for you, and for other people if you release tools that use unstable, unreleased features.

-

Hi, so thanks to a very, very kind "insider", I am in a position to offer a very rare door prize to a lucky LAVA BBQ attendee: a LabVIEW iPhone hard plastic cover. This is for a 3G/3GS model. Cheers, Chris Roebuck, CLA, CTA and all-round nice guy

-

Ano Ano: These sorts of questions always work best if you can post your best attempt so far and let us help you fix it. If nothing else, go ahead and build the front panel that you want so that everyone is clear on what data types you're trying to build up. This sounds like a homework question; the community will help, but it won't write it for you. Give us something to start with, and then we can point you in the right direction.

-

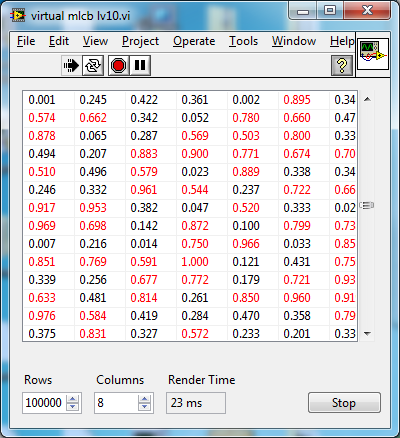

One of my UIs has a problem where it can bog down if you throw too much data at it. The underlying problem is the formatting I'm applying to a native LabVIEW multicolumn listbox (MCLB). I'm not aware of any way to get events out of the MCLB when an item is scrolled into view, etc., so the application just blindly applies formatting to the entire list. That wasn't a problem when I wrote the application, because the data sets were bounded to perhaps 1000 rows at most. Being in R&D, though, we're never happy, and my colleagues who use the application started throwing data sets at it that can have something like 100,000 rows. Yeah, the UI bogs down for minutes at that point, even with UI updates deferred, etc. Simply performing hundreds of thousands of operations on the MCLB, even with no UI updates, takes patience. Ideally I'd like better support in the native MCLB, but for now I need to work with what I've got, so I figured I'd kludge together a pseudo-virtual MCLB in native G code. Here's a proof of principle:
virtual mlcb lv10.vi
If you run the VI and generate some stupidly large data sets, say 100,000 x 8, you'll see that the render time hopefully stays constant as you scroll through the data and, with a little luck, is reasonably fast. Now if I could make a virtual tree view...
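The core idea of a pseudo-virtual listbox is to keep a fixed number of display rows and format only the slice of data currently scrolled into view, so the cost per scroll step depends on the visible rows rather than on the size of the data set. Here is a minimal Python sketch of that windowing logic. It is not the attached VI, and `fmt` is a hypothetical callback standing in for whatever per-cell formatting the MCLB applies.

```python
def visible_window(total_rows, first_visible, visible_rows):
    """Clamp the scroll position and return the [start, stop) row range to render."""
    if total_rows <= 0 or visible_rows <= 0:
        return 0, 0
    max_start = max(0, total_rows - visible_rows)
    start = min(max(0, first_visible), max_start)
    stop = min(start + visible_rows, total_rows)
    return start, stop


def render_page(data, first_visible, visible_rows, fmt):
    """Format only the rows in view; work is O(visible_rows), not O(len(data))."""
    start, stop = visible_window(len(data), first_visible, visible_rows)
    return [[fmt(cell) for cell in row] for row in data[start:stop]]


if __name__ == "__main__":
    data = [[r * 8 + c for c in range(8)] for r in range(100_000)]  # 100,000 x 8
    page = render_page(data, 50_000, 20, str)
    print(len(page), page[0][0])  # 20 400000
```

Clamping `first_visible` keeps the last page full when the scrollbar is dragged to the end, so the display never shows a partial page followed by empty rows.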