Leaderboard

Popular Content

Showing content with the highest reputation on 02/26/2013 in all areas

-

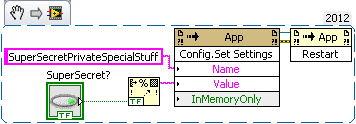

I'm considering a third iteration of a messaging library we use at my company, and I'm taking a close look at what I feel are the limitations of all the frameworks I use, including the Actor Framework. What are the perceived limitations? How can they be addressed? What are the implications of addressing them or not addressing them? I have a good laundry list for my own library, but since it's not widely used I'd like to focus on the Actor Framework in this post.

I'm wondering why the communication mechanism with an Actor is so locked down. As it stands, the Message Priority Queue is completely sealed off from the outside world, since it's a private class of the Actor Framework library. The classes that are exposed, Message Enqueuer and Message Dequeuer, do not define a dynamic interface that can be extended. This seems entirely by design given how the classes are architected, and it's one of the reasons I've resisted applying the AF wholesale to everything I do. Well, that and there's a fair amount of inertia on projects that predate the AF.

Consider a task where responding to a message takes considerable time, enough that one worries about the communication queue backing up. I don't mean anything specific by "backing up" other than that, for whatever reason, the programmer expects that messages deposited into the communication queue could go unanswered for longer than expected. There are a few ways to tackle this:

1) Prioritize. This already seems built into the AF by virtue of the priority queue, so I won't elaborate on implementation. However, prioritizing isn't always feasible. Maybe the possibility of piling up low-priority messages is prohibitive from a memory or latency perspective. Or what if the priority of a message can change as a function of its age?

2) Timeouts. Upon receipt of a message the task only records some state information, then lets the message processing loop continue to spin with a zero or calculated timeout. When retrieving a message from the communication queue finally does produce a timeout, the expensive work is done and the state info is updated, which causes the next timeout calculation to produce a longer or indefinite value. I use this mechanism a lot with UI-related tasks, but it can prove useful for interacting with hardware, among other things.

3) Drop messages when dequeuing. Maybe my task only cares about the latest message of a given class, so when the task gets one of these messages during processing it peeks at the next message in the communication queue and discards messages until it has the last of the series of messages of this class. This can minimize the latency problem but may still allow a significant backup of the communication queue. The backup might be desired, for example if deciding whether to discard messages depends on state, but if your goal is to minimize the backup, dropping when dequeuing might not work.

4) Drop messages when enqueuing. Similar to the previous option, maybe our task only cares about the latest message of a given class, but it can say with certainty that this behavior is state invariant. In that case, during enqueuing the previous message in the communication queue is peeked at and discarded if it's an instance of the same class, before the new message is enqueued.

These items aren't exhaustive, but they frame the problem I've had with the AF: how do we extend the relationship between an Actor and its queues? I'd argue one of the things we ought to be able to do is have an Actor specify the implementation of the queue it wishes to use. As it stands the programmer can't, at least not without a significant investment: queues are statically instantiated in Actor.vi, which is statically executed from Launch Actor.vi, and the enqueue/dequeue VIs are also static. Bummer.
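Options 3 and 4 can be sketched outside LabVIEW as a plain FIFO queue with two kinds of coalescing. This is a minimal Python illustration under stated assumptions, not Actor Framework code: the `Message` type and its `kind` field are hypothetical stand-ins for LabVIEW message classes, and `CoalescingQueue` is deliberately not a priority queue.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical stand-in for a message class; `kind` plays the class's role."""
    kind: str
    payload: object = None


class CoalescingQueue:
    """FIFO queue that can drop superseded messages of the same kind."""

    def __init__(self, coalesce_on_enqueue=()):
        self._items = deque()
        # Kinds for which option 4 (drop when enqueuing) applies.
        self._coalesce_on_enqueue = set(coalesce_on_enqueue)

    def enqueue(self, msg):
        # Option 4: peek at the newest queued message and discard it if it is
        # the same kind, then enqueue the new one.
        if (msg.kind in self._coalesce_on_enqueue
                and self._items
                and self._items[-1].kind == msg.kind):
            self._items.pop()
        self._items.append(msg)

    def dequeue(self, coalesce=False):
        msg = self._items.popleft()
        # Option 3: while the next message is the same kind, discard the
        # current one and take the next, so only the last of the run is returned.
        while coalesce and self._items and self._items[0].kind == msg.kind:
            msg = self._items.popleft()
        return msg

    def __len__(self):
        return len(self._items)


if __name__ == "__main__":
    q = CoalescingQueue(coalesce_on_enqueue={"position"})
    for x in (1, 2, 3):
        q.enqueue(Message("position", x))
    q.enqueue(Message("stop"))
    print(len(q))               # 2: only the latest "position" survived
    print(q.dequeue().payload)  # 3
```

The trade-off from the text shows up directly: dropping on dequeue (`coalesce=True`) still lets all the messages pile up in the queue until they are read, while dropping on enqueue keeps the queue short but has to be decided without knowing the receiver's state.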
Basically, to do any of this you're redefining what an Actor is. This seems like a good time to consider such things, though, since I'm planning on iterating an entire framework. How would I do this? Tough to say. At first glance, Actor.vi is already supplied with an Actor object, so why couldn't it call a dynamic dispatch method that returns a Message Priority Queue rather than statically creating one? The default implementation of the dynamic method could simply call Obtain Priority Queue.vi as is already done, but it would allow an Actor's override to return an extended queue class if it desired, assuming the queue classes are given dynamic interfaces themselves. Allowing an Actor to determine its timeout for dequeuing would also be nice.

I'm not saying these changes are required to implement any of the behaviors I've outlined above; adding another layer of non-Actor asynchronicity can get reasonably equivalent behavior into the existing AF in most cases. However, the need to do this seems inelegant to me compared to defining the behavior through inheritance, and it can raise the question of whether the original task even needs to be an Actor.

The argument can also be made: why should an Actor care about the communication queue? All an Actor cares about is protecting the queue, and it does so by exposing only an Enqueuer, not the raw queue. If a task that uses an Actor gums up the queue with spam by abusing the Enqueuer, that's its own fault, and it should be the responsibility of the owning task to make sure this doesn't happen. This is a valid premise and I'm not arguing against it.

All for now, I'm just thinking out loud...
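The dynamic-dispatch idea can be illustrated with a factory method plus an overridable timeout. In this Python sketch, `make_queue()` and `dequeue_timeout()` are hypothetical hooks standing in for the dynamic methods Actor.vi could call; they are not part of the Actor Framework. The subclass implements option 2 (timeouts): it only records the latest value when a message arrives and does the slow work when a zero-timeout dequeue comes back empty.

```python
import itertools
import queue


class Actor:
    """Actor whose inbox and dequeue timeout come from overridable methods.

    make_queue() and dequeue_timeout() are hypothetical hooks playing the
    role of dynamic-dispatch methods; they are not Actor Framework APIs.
    """

    def __init__(self):
        self._seq = itertools.count()   # tie-breaker: equal priorities stay FIFO
        self.inbox = self.make_queue()  # factory method instead of a static create
        self.running = True

    def make_queue(self):
        # Default keeps the existing behavior: a priority queue.
        return queue.PriorityQueue()

    def dequeue_timeout(self):
        # None blocks indefinitely, the usual actor behavior.
        return None

    def send(self, msg, priority=0):
        # Lower numbers are dequeued first, as with queue.PriorityQueue.
        self.inbox.put((priority, next(self._seq), msg))

    def handle(self, msg):
        if msg == "stop":
            self.running = False

    def handle_timeout(self):
        pass

    def step(self):
        """Handle one message, or one timeout if nothing arrives in time."""
        try:
            _priority, _seq, msg = self.inbox.get(timeout=self.dequeue_timeout())
        except queue.Empty:
            self.handle_timeout()
        else:
            self.handle(msg)

    def run(self):
        while self.running:
            self.step()


class DeferredWorkActor(Actor):
    """Option 2: record state cheaply on receipt, do slow work on timeout."""

    def __init__(self):
        super().__init__()
        self.pending = None   # latest value still waiting for the slow work
        self.completed = []   # values the slow work has been run for

    def dequeue_timeout(self):
        # Zero timeout while work is pending, otherwise wait indefinitely.
        return 0 if self.pending is not None else None

    def handle(self, msg):
        super().handle(msg)
        if msg != "stop":
            self.pending = msg  # cheap: just remember the latest value

    def handle_timeout(self):
        self.completed.append(self.pending)  # stand-in for the expensive work
        self.pending = None


if __name__ == "__main__":
    actor = DeferredWorkActor()
    for value in ("a", "b", "c"):
        actor.send(value)
    for _ in range(3):
        actor.step()        # each message only updates `pending`
    actor.step()            # queue empty, zero timeout expires: slow work runs once
    print(actor.completed)  # ['c']
    actor.send("stop")
    actor.run()             # handles "stop" and returns
```

A subclass that wanted to drop superseded messages would override `make_queue()` instead, returning a queue with that behavior, provided it accepts the same `(priority, sequence, message)` items and raises `queue.Empty` on timeout.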

-

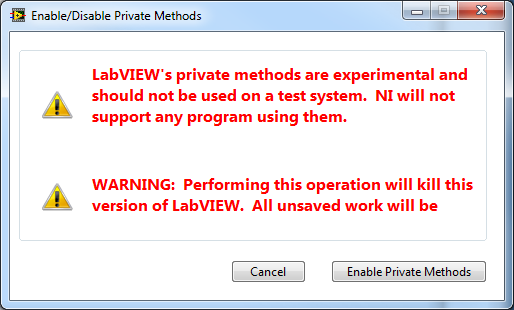

I don't use private methods often, and as a result I don't know what other methods are available. When I do turn on private methods I find that there are a ton of them, and they sometimes slow down my development because there are so many that I won't use 99% of the time. For this reason I find myself enabling and disabling private methods, and I wanted an easier way to turn them on and off. I made this VI (and its supporting code) to run from the Tools menu of LabVIEW (place it in the <LabVIEW>\Projects folder); it changes the LabVIEW.ini key and then restarts LabVIEW.

Disclaimer 1: Private methods are not supported by NI, should not be used in built applications, and are generally only used for development tools.
Disclaimer 2: This set of code contains an EXE (pv.exe), which will be saved to and executed from the system temporary folder %temp%. Information about this EXE can be found here.
Disclaimer 3: As stated in the main VI, this will taskkill the running version of LabVIEW, and all unsaved work will be lost.

Any feedback is appreciated, thanks.

EDIT: This code uses the OpenG File Library.

Enable Disable Private Methods.zip
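The core of such a tool is a small edit to LabVIEW.ini. The Python sketch below only transforms the file's text; reading and writing the file, and restarting LabVIEW, are left out. The key name is an assumption (it is the token commonly cited for exposing private properties and methods), so check it against your LabVIEW version before relying on it.

```python
import re

# Assumption: the LabVIEW.ini key commonly cited for showing private
# properties/methods. Verify it for your LabVIEW version.
PRIVATE_KEY = "SuperSecretPrivateSpecialStuff"


def _key_pattern(key):
    # Matches a whole "key=value" line; group 1 is the value.
    return re.compile(rf"^{re.escape(key)}\s*=(.*)$", re.MULTILINE)


def is_enabled(ini_text, key=PRIVATE_KEY):
    match = _key_pattern(key).search(ini_text)
    return bool(match) and match.group(1).strip().lower() == "true"


def set_flag(ini_text, enabled, key=PRIVATE_KEY):
    """Return ini_text with key set to True/False, appending it if absent.

    Line-based on purpose: every other line of the file is left untouched.
    Assumes the text was read in text mode (newlines normalized to "\n").
    """
    line = f"{key}={'True' if enabled else 'False'}"
    pattern = _key_pattern(key)
    if pattern.search(ini_text):
        # A function replacement avoids backslash-escape surprises in re.sub.
        return pattern.sub(lambda _m: line, ini_text)
    if ini_text and not ini_text.endswith("\n"):
        ini_text += "\n"
    return ini_text + line + "\n"


def toggle(ini_text, key=PRIVATE_KEY):
    return set_flag(ini_text, not is_enabled(ini_text, key), key)


if __name__ == "__main__":
    text = toggle("[LabVIEW]\notherKey=1\n")
    print(is_enabled(text))  # True
```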

-

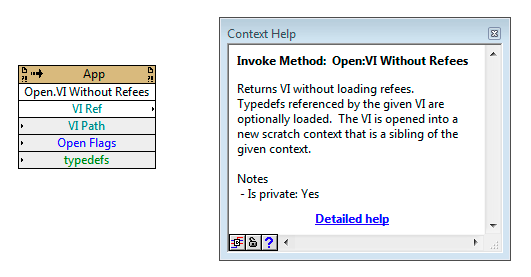

Check! Idea posted here: http://forums.ni.com/t5/LabVIEW-Idea-Exchange/Open-VI-reference-without-refees/idi-p/2329706 Let's see if it catches on. Take care! /Steen

-

With regard to the straitjacket, this finally triggered a synapse none of us has keyed onto yet: unit testing. It's a way for the parent class to supply a bread crumb trail as to what is expected of a child class, yet the child class can choose to fail or ignore these tests. Can we think of ways to incorporate unit tests as more of a first-class feature of class definitions? Perhaps even making ad hoc execution of the tests as simple as right-clicking a ProjectItem that's part of the class?
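In a text language, one common way to get this is an inherited contract test: the parent's author writes tests against an abstract factory method, and each child's test class inherits them and supplies a concrete instance. This Python `unittest` sketch uses hypothetical `Shape`/`Square` classes; it is an analogy for LabVIEW classes, not an existing LabVIEW feature.

```python
import unittest


class Shape:
    """Hypothetical parent class."""

    def area(self):
        raise NotImplementedError


class Square(Shape):
    """Hypothetical child class."""

    def __init__(self, side=2.0):
        self.side = side

    def area(self):
        return self.side * self.side


class ShapeContract:
    """Tests the parent's author ships for every child to inherit.

    Not a TestCase itself, so a test runner won't try to run it without a
    concrete class to test.
    """

    def make_instance(self):
        raise NotImplementedError  # each child's test class supplies this

    def test_area_is_non_negative(self):
        self.assertGreaterEqual(self.make_instance().area(), 0)

    def test_area_is_a_number(self):
        self.assertIsInstance(self.make_instance().area(), (int, float))


class SquareTests(ShapeContract, unittest.TestCase):
    def make_instance(self):
        return Square()

    def test_area_value(self):  # a child-specific test alongside the contract
        self.assertEqual(Square(3).area(), 9)


if __name__ == "__main__":
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SquareTests)
    unittest.TextTestRunner(verbosity=2).run(suite)
```

A child that chooses to ignore an inherited test can override it, for example by redefining the method under `@unittest.skip(...)`, which matches the point that children may fail or ignore these tests.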

-

Rather than my adding it to my (lossy) IdEx queue: by all means, I would gladly vote for your post. And remember, etiquette there would suggest not showing a brown banner on an invoke node :-)

-

I particularly second this. Actors should endeavor to read their mail promptly, and the message queue should not double as a job queue.

-

Because (I believe) Stephen's focus was to create a framework that prevented users from making common mistakes he's seen, like having someone other than the actor close its queue. I'm not criticizing his decision to go that route, but the consequence is that end users are limited in the kinds of customizations they're able to do easily.

Lack of extensibility and customizability. More generally, it forces me to adapt how I work to fit the tool instead of letting me adapt the tool to fit how I work. It encourages a lot of static coupling. It requires excessive indirection to keep actors loosely coupled. The learning curve is somewhat steep. There's a lot of added complexity that isn't necessary to do actor-oriented programming, and it uses features and patterns that are completely foreign to many LV developers.

Ditto. I've talked to several advanced developers who express similar frustrations.

This is typically only an issue when your message handling loop also does all the processing required when the message is received. Unfortunately the command pattern kind of encourages that type of design. I think you can get around it, but it's a pain. My designs use option 5:

5) Delegate longer tasks to worker loops, which may or may not be subactors, so the queue doesn't back up.

The need for priority queues is a carryover from the QSM mindset of queueing up a bunch of future actions. I think that's a mistake. Design your actor so that anyone sending messages to it can safely assume each message starts being processed instantly.

Agreed, though I'd generalize it a bit and say an actor should be able to specify its message transport, whether or not it is a queue. I'd do it by creating a messaging system and building up actors manually according to the specific needs. (Oh wait, I already do that.)

That kind of customization perhaps should be available to the developer for special situations, but I'd heavily question any actor that has a timeout on the dequeue. Actors shouldn't need a dequeue timeout to function correctly.
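Option 5 can be sketched in Python with two queues: the actor's inbox carries only messages, and a separate job queue feeds a worker thread, which reports completion back to the actor as an ordinary message. All names (`DelegatingActor` and the `"work"`, `"ping"`, `"done"`, `"stop"` message kinds) are hypothetical; the point is that the dequeue needs no timeout, and a `"ping"` sent right after a slow job is handled before the job finishes.

```python
import queue
import threading
import time


class DelegatingActor:
    """Option 5 sketch: slow jobs go to a worker thread so the actor's
    inbox is always read promptly. All names here are hypothetical."""

    def __init__(self):
        self.inbox = queue.Queue()  # the actor's mailbox: messages only
        self.jobs = queue.Queue()   # the worker's job queue, kept separate
        self.handled = []           # log of messages the actor processed
        self.worker = threading.Thread(target=self._work, daemon=True)

    def _work(self):
        while True:
            job = self.jobs.get()
            if job is None:         # sentinel: shut the worker down
                return
            time.sleep(job)         # stand-in for a long-running task
            # Report completion back to the actor as an ordinary message.
            self.inbox.put(("done", job))

    def run(self):
        self.worker.start()
        while True:
            kind, payload = self.inbox.get()  # blocks; no timeout needed
            self.handled.append((kind, payload))
            if kind == "work":
                self.jobs.put(payload)        # delegate and return at once
            elif kind == "stop":
                self.jobs.put(None)
                self.worker.join()
                return


if __name__ == "__main__":
    actor = DelegatingActor()
    t = threading.Thread(target=actor.run)
    t.start()
    actor.inbox.put(("work", 0.2))  # slow job is handed off
    actor.inbox.put(("ping", 1))    # handled without waiting for the job
    time.sleep(0.5)
    actor.inbox.put(("stop", None))
    t.join()
    print([kind for kind, _ in actor.handled])
```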

-

I have run across wacky users who do things like delete .ini files. What I have taken to doing is not installing the .ini file, but recreating the default one within the program when it cannot find one. This can be as simple as keeping the default ini file inside a string constant, or, if I have a configuration page, just saving the default configuration as if the user had changed nothing from the default values. Along these lines, I can also detect an ini file from a previous version. In that case I can provide mutation code to update some values and toss others. I do this inside the app; it's an interesting thought to make it part of the installer, but I guess you only have one shot at mutation that way. Again, I have seen (and perpetrated) copying an old ini file after installing an app, and that is why I chose to make it part of the app. NI does something like this to you (each year a new version with a new ini file). How do you like it? I usually copy and hope nothing blows up.
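Here is a minimal Python sketch of that approach, using `configparser`: the default file lives in a string constant and is written out when the file is missing, and an older file is mutated before any missing keys are filled from the defaults. The section, keys, version numbers and the `tcp_port` to `port` rename are all hypothetical examples.

```python
import configparser
import os
import tempfile

# The default file lives in the program as a string constant.
DEFAULT_INI = """\
[app]
config_version = 2
log_level = info
port = 5000
"""
CURRENT_VERSION = 2


def _write(cfg, path):
    with open(path, "w") as f:
        cfg.write(f)


def _mutate(cfg):
    """Upgrade an older file in place; returns True if anything changed.

    The v1 -> v2 rule (tcp_port renamed to port) is a hypothetical example.
    """
    if not cfg.has_section("app"):
        cfg.add_section("app")
    version = cfg.getint("app", "config_version", fallback=1)
    if version >= CURRENT_VERSION:
        return False
    if cfg.has_option("app", "tcp_port"):
        cfg.set("app", "port", cfg.get("app", "tcp_port"))  # keep the user's value
        cfg.remove_option("app", "tcp_port")                 # toss the old key
    cfg.set("app", "config_version", str(CURRENT_VERSION))
    return True


def load_config(path):
    defaults = configparser.ConfigParser()
    defaults.read_string(DEFAULT_INI)
    if not os.path.exists(path):
        # Deleted (or never installed): recreate it from the defaults.
        _write(defaults, path)
        return defaults
    cfg = configparser.ConfigParser()
    cfg.read(path)
    # Mutate first, so default values cannot mask an old file's version.
    changed = _mutate(cfg)
    for section in defaults.sections():
        if not cfg.has_section(section):
            cfg.add_section(section)
            changed = True
        for key, value in defaults.items(section):
            if not cfg.has_option(section, key):
                cfg.set(section, key, value)  # fill keys the old file lacked
                changed = True
    if changed:
        _write(cfg, path)
    return cfg


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "app.ini")
    print(load_config(path).get("app", "port"))  # 5000, recreated from defaults
```

Mutating before filling defaults matters: otherwise the default `config_version` would be copied into an old file and hide the fact that it needs upgrading.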

-

I just did a manual test (I pasted the generated image into Paint.NET) and it does seem to work - feeding 24 into the Image Depth input generates an image where the boolean terminal is 0,127,0.

-

I'm pretty sure that the default color depth for the method is 8 bits, so you get a limited palette. Try using 24 bits.

-

There is a lot of funny business going on with the terminal bounds and with the Draw Rectangle VI. On the surface, it appears the TermBnds[] property is wrong because it returns values between 0,0 and 32,32, which implies a size of 33x33. However, Draw Rectangle does not really use the right and bottom values as the last pixel; it actually draws rectangles with the width and height specified (for line width = 1). For example, if you draw the rectangle from (0,0) to (8,8) and look at the 1-bit pixmap, you will see that the pixel indices are 0,0 to 7,7. When you then draw a second rectangle from (8,0) to (16,8), you get a second line at column 8 in the 1-bit pixmap, i.e. a double-width line. What a mess. An easy "fix" is to compensate by adding 1 to the right and bottom values. Now you get a nice con pane outline with all single-pixel lines. The only problem is that it is now 33x33 pixels (if you care).
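The off-by-one can be reproduced with a toy model in Python, assuming (as observed above) that right and bottom act as exclusive bounds, so a rectangle covers columns `left` through `right - 1`. `draw_rect_outline` and `demo` are hypothetical helpers, not LabVIEW's Draw Rectangle VI.

```python
def draw_rect_outline(grid, left, top, right, bottom):
    """1-pixel outline covering columns left..right-1 and rows top..bottom-1.

    Models right/bottom as exclusive bounds (width = right - left).
    """
    for x in range(left, right):
        grid[top][x] = 1
        grid[bottom - 1][x] = 1
    for y in range(top, bottom):
        grid[y][left] = 1
        grid[y][right - 1] = 1


def outline_columns(grid, row):
    """Columns that are set in one row, to spot double-width lines."""
    return [x for x, v in enumerate(grid[row]) if v]


def demo(pad):
    """Two side-by-side 8x8 boxes; pad=1 applies the 'add 1' fix."""
    grid = [[0] * 20 for _ in range(10)]
    draw_rect_outline(grid, 0, 0, 8 + pad, 8 + pad)
    draw_rect_outline(grid, 8, 0, 16 + pad, 8 + pad)
    return outline_columns(grid, 4)  # a row through the middle of both boxes


if __name__ == "__main__":
    print(demo(0))  # [0, 7, 8, 15]: columns 7 and 8 form a double-width line
    print(demo(1))  # [0, 8, 16]: the shared edge is a single column
```

With the +1, neighbouring boxes share their edge column, which is exactly why bounds of 0 to 32 end up covering 33 pixels.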

-

This post is kind of old and I don't know if JGCode released his project API, so my apologies if this is already covered somewhere else. I just wanted to add a note here in case someone else tries to use the project API: the tags used in the build specification for the version have changed. Inside "Executable Get Version_jgcode_lib_labview_project_api.vi", JGCode uses App_fileVersion.major, App_fileVersion.minor, App_fileVersion.patch and App_fileVersion.build, but at least in the version I am working with now (LabVIEW 2012) the tags are TgtF_fileVersion.major, TgtF_fileVersion.minor, TgtF_fileVersion.patch and TgtF_fileVersion.build. Also, this is only useful in development mode; at run time, as MJE showed in this post: http://lavag.org/topic/15473-getting-the-version-of-a-build-from-the-project/?p=93474 you can use that VI to extract the actual EXE version.
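If you read these values straight from the project file, it may be worth checking both prefixes. This Python sketch assumes each tag is stored as a `<Property Name="...">` element somewhere under the build-spec element, which you should verify against your own .lvproj; `build_version` is a hypothetical helper, not part of JGCode's API.

```python
import xml.etree.ElementTree as ET

# Prefixes reported above: LabVIEW 2012 uses TgtF_fileVersion.*, while the
# older code used App_fileVersion.*. The newer prefix is tried first.
PREFIXES = ("TgtF_fileVersion", "App_fileVersion")
FIELDS = ("major", "minor", "patch", "build")


def build_version(build_spec):
    """Return (major, minor, patch, build) from a build-spec XML element.

    Assumption: tags are stored as <Property Name="...">value</Property>
    somewhere under the build-spec element. Missing fields default to 0.
    Returns None if neither prefix is present.
    """
    props = {p.get("Name"): (p.text or "").strip()
             for p in build_spec.iter("Property")}
    for prefix in PREFIXES:
        keys = [f"{prefix}.{field}" for field in FIELDS]
        if any(k in props for k in keys):
            return tuple(int(props.get(k) or 0) for k in keys)
    return None


if __name__ == "__main__":
    # Illustrative XML only; not copied from a real project file.
    spec = ET.fromstring(
        '<Item Name="My App" Type="EXE">'
        '<Property Name="TgtF_fileVersion.major" Type="Int">1</Property>'
        '<Property Name="TgtF_fileVersion.minor" Type="Int">2</Property>'
        '</Item>'
    )
    print(build_version(spec))  # (1, 2, 0, 0)
```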

-

I wrote this VI a while back for a colleague. It's not perfect, but it's the closest thing I've got. Saved in LabVIEW 8.6. Get Connector Pane Data Type Image.vi

-

There is another option I can think of, but I don't know if its performance will be any better: get the VI's connector pane reference and use it to get references to the controls and the pattern. Then use those references with the Application class method Data Type Color to get the color for each terminal, and draw the connector yourself based on the pattern. I'm assuming that color is the one that actually appears in the con pane. You might be able to use code people have already uploaded to make editing the con pane easier (I believe Mark Balla has one in the CR).