
Posts posted by ShaunR


-

Sorry, shoneill. We must have been posting simultaneously and I didn't see your post.

QUOTE (shoneill @ May 14 2009, 09:32 AM)


I have also implemented a LVOOP driver for a spectrometer which, while it has a LVOOP backend running in a parallel process, provides an interface via User Events. A spectrometer is pretty much like any other, so defining the classes was not too hard. In order to incorporate a new spectrometer I simply create a new class and feed this into my background process (which, thanks to LVOOP, runs with the new class without any code changes). Daklu's idea takes this a step further. If I have understood correctly, you do something similar but then provide a way of broadcasting which interfaces are available for a device. So I could have my spectrometer servicing a Spectrometer, a Colorimeter and a Temperature sensor interface. Is that correct? Is that the idea behind the examples posted? I have to confess I've read the document but not tried out the code.

In addition, I could jerry-rig a filter wheel (RS-232), a monochromator (GPIB) and a photomultiplier (DAQ) together to create an interface for a spectrometer using three different devices with three different protocols. My top-level software doesn't care; it just calls the "Get Spectrum" method and (after a little while for a scanning spectrometer) delivers the data.

This is what I see too. The abstraction can be a functional abstraction of the task rather than an abstraction of the devices.

-

QUOTE (Daklu @ May 14 2009, 10:36 AM)

Download File: post-15232-1242328643.zip

Different manufacturers, different command sets, 2 are RS485, 1 is TCPIP and of course the 34401 is GPIB. All I had to do to incorporate them was copy and paste from the examples in the user manuals to the files (and add some comments) and add a couple of entries in the lookup table...Job done. Probably took me longer to find the manuals on our network...lol.

QUOTE (Daklu @ May 14 2009, 10:36 AM)


It isn't my intent for the Exterfaces Architecture to be based around a hardware device. That's what burned me in the first place. Interface definitions, like interfaces in text languages, are based on a set of common functions. The IVoltmeter interface can be applied to any device that can measure or calculate voltages: DVMs, oscilloscopes, DACs. It doesn't even have to be a physical instrument. In theory you could implement an exterface that reads current and resistance measurements from a text file and returns the calculated voltage. (Though it would be a bit tricky to implement that in this particular interface definition.) The example I've included happens to have 4 instruments that are fairly narrow in their capabilities, so I can see how it would look as if that is what I was doing.

Great! Back on topic.

Indeed. And this is why I think it's far more useful than anything else I've seen in classes based around instruments, which are always peddled as infinitely extensible IF you write 100 similar snippets of code to wrap already existing functions (which is what I first thought of yours). Add a new DVM? Write another 20 function wrappers like the last lot, only a bit different. But if I use your exterfaces (I think) I can wrap 1 piece of code and use the exterfaces to define higher-level functions like entire tests. Instead of an IVoltmeter, I (could) have an IRiseTime and choose which subsystem to run it on (for the one-to-many case). Or maybe I'm just barking up the wrong tree...lol.
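Daklu's point that an interface is defined by a set of functions rather than by a device can be sketched outside LabVIEW with a Python `typing.Protocol`. This is only an illustration of the idea, not the Exterfaces code; `IVoltmeter`, `read_volts`, `FakeDvm` and `FileVoltmeter` are hypothetical names, and the file-based meter assumes a made-up "current,resistance" line format to mirror the text-file example Daklu mentions.

```python
from typing import List, Protocol


class IVoltmeter(Protocol):
    """Hypothetical functional interface: anything that can produce a voltage."""

    def read_volts(self) -> float: ...


class FakeDvm:
    """Stand-in for a real DVM driver; returns a canned reading."""

    def __init__(self, reading: float) -> None:
        self._reading = reading

    def read_volts(self) -> float:
        return self._reading


class FileVoltmeter:
    """Not an instrument at all: derives voltage from logged current and resistance."""

    def __init__(self, lines: List[str]) -> None:
        self._lines = lines  # assumed format: "amps,ohms" per line

    def read_volts(self) -> float:
        current, resistance = (float(x) for x in self._lines[-1].split(","))
        return current * resistance  # Ohm's law: V = I * R


def measure(meter: IVoltmeter) -> float:
    # The test code depends only on the interface, never on the device behind it.
    return meter.read_volts()
```

An `IRiseTime`-style interface would follow the same pattern: define the function once, then let whichever subsystem can perform it implement it.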

QUOTE (Daklu @ May 14 2009, 10:36 AM)


Yep. (Well, not so much enable multiple inheritance as simulate multiple inheritance.)

A nod's as good as a wink to a blind man.

Semantics.

QUOTE (Daklu @ May 14 2009, 10:36 AM)

USB DVMs? Maybe they just supply a lead which is a USB->RS232 converter.

QUOTE (Daklu @ May 14 2009, 10:36 AM)

I didn't say it was good, I said it's not necessarily bad. I have several small utility VIs that I routinely copy and use in projects. Why? Several reasons:

If another developer checks out my source code the file will be there and he won't have to worry about finding and installing my reuse library. (This was not a mature Labview development house; it was a bunch of engineers working on (for the most part) quickie tools.)

You mean like not having to download the JKI Test Toolkit, eh?

QUOTE (Daklu @ May 14 2009, 10:36 AM)

I tend to store single VIs in directories. I never use libs and never use LLBs, so this isn't an issue for me or anyone else who uses my toolkits.

QUOTE (Daklu @ May 14 2009, 10:36 AM)

Managing shared reuse code tends to take a lot of time. Copying and pasting takes very little time.

It does? I find it the other way round, not to mention the fact that you have to re-arrange everything and get all the wires straight again.

QUOTE (Daklu @ May 14 2009, 10:36 AM)


See, I'd use the explicit calls. It would make debugging easier. 'Auto' would be the default though.

Your baby, your call. It just means you get a load of idiots like me asking why it's not working when they've created instead of linked.


-

QUOTE (Daklu @ May 14 2009, 02:22 AM)

Hmm... I don't necessarily dislike it, but it seems like it's just a manual way to get dynamic dispatching type functionality (polyconfigurism?) except less universal and more complicated. If I grant you those three assumptions sending commands to the device I think would be pretty straightforward. Your Read.vi could get very messy with parsing and special cases. Do all the instruments you're ever going to use to measure voltages return string values in the same format? I suppose you could encode regex strings in the config file and use them to extract the value you're interested in. Workable? Maybe, but why bother when classes and inheritance already do that? (The one case I can see for doing this is if you have a shortage of Labview licenses and really smart technicians who don't mind writing obscure codes in config files.)I changed my mind. I don't like it.

It might work with a known set of instruments that fit your assumptions but as a general solution I think it gets way too complicated way too quickly.

Told you you wouldn't like it.

The config files have straight strings (no regex). Config files don't extract anything. They are just a way to stream multiple commands to the instrument. The vast majority of instruments (DVMs, temperature controllers, drives...you name it) come with an ASCII command set, and the commands are usually the same regardless of HW interface (whether it be RS232/485, TCPIP or Bluetooth). If it's someone like Agilent, Keithley etc., then they are SCPI-compliant, which means you can pretty much use the same files for similar devices from each manufacturer, and you can support any device from them by just peeking at their driver (which is really a parser) and extracting the strings (in fact I have a VI that does that and generates the files from their examples).

QUOTE (Daklu @ May 14 2009, 02:22 AM)

My 'application' actually was designed as a toolkit of top-level VIs that would be sequenced using TestStand. (And it would have worked great if it weren't for those pesky design engineers!) The problem is that the top-level toolkit was built on several other layers of toolkits I was developing in parallel. I haven't worked through how to set up the entire architecture using exterfaces instead of the design I did use, but it looks promising.

I'm not sure it will help for what you are envisioning. But if your exterfaces are based around the function the engineers are trying to achieve, rather than the devices they "may" be using, I think it will work great. But you know your design and your target. I've also found that giving the engineers a (slight) ability to affect the software (like my technicians example) means that they end up making the changes and not you.

QUOTE (Daklu @ May 14 2009, 02:22 AM)

I don't use the Agilent, so I cannot say whether it works or not, but it loaded and compiled fine. I was just interested in seeing how you integrate a previously defined driver in your architecture and chose one that you have.

QUOTE (Daklu @ May 14 2009, 02:22 AM)

I'm not sure why you expected the 34401 exterface to be listed in the device drivers section of the project. An exterface isn't a device driver to my way of thinking. In this project the interfaces are an abstraction of a particular type of measurement. (Voltage measurements and current measurements.) The exterfaces implement the abstraction for a specific piece of hardware using the device driver supplied by the vendor. The files in the device drivers folder represent the drivers that are supplied by the instrument vendors and would normally reside in <instr.lib>.

Because it's a DVM in the same light as your ACE or BAM. I had expected the Agilent to appear in the list of "Device Drivers" and a simpler "Exterface" wrapper to interface to the architecture.

QUOTE (Daklu @ May 14 2009, 02:22 AM)


When you say "a class implementation of my previous example," do you mean a class implementation of polyconfigurism? Isn't the point of polyconfigurism to avoid classes so you can add instruments without writing G code? Can you show me what you mean, maybe by stubbing out a simple example? (Text is fine, or if you're particularly ambitious you could try ASCII art.)

I meant a base class implementation of the hardware abstraction, where you could have (for example) a class that takes an HW interface (TCPIP, SERIAL etc.) and methods such as "Read", "Write" and "Configure From File", sitting below your BAM, ACE and Agilent. Your instruments can inherit from that (basically your parser), and your exterfaces would be the equivalent of the alias lookup (maybe).
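A minimal Python sketch of that layering, assuming a write-then-read string protocol: the base class owns a transport and provides Write, Read and Configure From File, and a device class only adds its own queries. `Transport`, `LoopbackTransport`, `Instrument` and `Dvm34401` are hypothetical names, and `MEAS:VOLT:DC?` is an assumed SCPI query used purely for illustration.

```python
from abc import ABC, abstractmethod
from typing import List


class Transport(ABC):
    """Hypothetical HW interface layer (TCPIP, SERIAL, GPIB...)."""

    @abstractmethod
    def write(self, command: str) -> None: ...

    @abstractmethod
    def read(self) -> str: ...


class LoopbackTransport(Transport):
    """Fake transport for illustration: replies 'ACK <last command>'."""

    def __init__(self) -> None:
        self._last = ""

    def write(self, command: str) -> None:
        self._last = command

    def read(self) -> str:
        return f"ACK {self._last}"


class Instrument:
    """Base class: owns the transport, knows nothing about a specific device."""

    def __init__(self, transport: Transport) -> None:
        self.transport = transport

    def write(self, command: str) -> None:
        self.transport.write(command)

    def read(self, command: str) -> str:
        # Write-then-read, the basic exchange of most string-based instruments.
        self.transport.write(command)
        return self.transport.read()

    def configure_from_file(self, lines: List[str]) -> List[str]:
        # Stream every non-blank line of a config file and keep the replies.
        return [self.read(line.strip()) for line in lines if line.strip()]


class Dvm34401(Instrument):
    """Hypothetical child class: adds only the device-specific queries."""

    def dc_volts(self) -> str:
        return self.read("MEAS:VOLT:DC?")  # assumed SCPI query string
```

Swapping the transport (serial, TCP, GPIB) never touches the device classes, which is the point of putting it at the bottom of the hierarchy.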

QUOTE (Daklu @ May 14 2009, 02:22 AM)


You lost me again. Do you mean this is a bad case in the Exterface Architecture class implementation strategy or in the Labview class implementation strategy?

Labview.

QUOTE (Daklu @ May 14 2009, 02:22 AM)

Not without changing the overrides (I think)

QUOTE (Daklu @ May 14 2009, 02:22 AM)

The interface definition provides the application with the appropriate instrument control resolution. At one extreme we have a simple, high-level interface with Open, Read, and Close methods. At the other extreme is a very low-level interface that defines the superset of all instrument commands. Different applications require different resolutions of instrument control. I can't define an interface that is suitable for all future applications, so I don't even bother trying. The small changes I make are simply to customize the interface's resolution for the application's specific needs.

Indeed. But the ideal scenario is that all features of all devices are exposed and available, and you just choose which ones to use at the higher level. This is the same problem that IVI tries to address. Just because we use classes doesn't make this any easier.

QUOTE (Daklu @ May 14 2009, 02:22 AM)

I'd go for the "Auto" only. Doesn't give people the opportunity to get it wrong then.

QUOTE (Daklu @ May 14 2009, 02:22 AM)

There's nothing in the architecture that prevents the developer from creating a 5th instance. What happens depends on the instrument and the vendor's device driver. If the instrument is connection-based and a connection has already been established with another instance, the driver will probably return an error. If the instrument is not connection-based then yeah, an inattentive developer could screw things up.

Well, there is no reason that the instrument shouldn't give a result from the request, as long as the "object" ensures that the instrument is in an appropriate state to give a correct response. I'm thinking here of, say, creating an "Ammeter" instance and a "Voltmeter" instance rather than an "ACE" instance.
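One way to read that suggestion, sketched in Python under stated assumptions: a single shared device object, and several function objects (an "Ammeter" and a "Voltmeter") that each put the instrument into the mode they need before measuring. `SharedMeter` and `MeterFunction` are hypothetical names, and the string returned by `measure` stands in for a real query.

```python
import threading


class SharedMeter:
    """Hypothetical single physical multimeter shared by several function objects."""

    def __init__(self) -> None:
        self.lock = threading.Lock()
        self.mode = None        # last function configured on the instrument
        self.mode_changes = 0   # how many times the instrument was reconfigured

    def set_mode(self, mode: str) -> None:
        if mode != self.mode:   # only reconfigure when the function actually changes
            self.mode = mode
            self.mode_changes += 1

    def measure(self) -> str:
        return f"reading in {self.mode} mode"  # stand-in for the real query


class MeterFunction:
    """An 'Ammeter' or 'Voltmeter' object: puts the device in the right state first."""

    def __init__(self, device: SharedMeter, mode: str) -> None:
        self.device = device
        self.mode = mode

    def read(self) -> str:
        # Configure and measure under one lock so another function object
        # cannot switch the instrument's mode between the two steps.
        with self.device.lock:
            self.device.set_mode(self.mode)
            return self.device.measure()
```

Holding one lock across configure-and-measure is what stops two function objects from racing each other on the one physical instrument.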


-

QUOTE (angel_22 @ May 14 2009, 01:55 AM)

Inserting Images, vis etc: http://forums.lavag.org/How-to-Insert-Images-in-your-Posts-t771.html

-

You don't have enough data points above and below the threshold to complete the "Peak Measurement" analysis.

Either

1. Change the "percent level settings" method from "Auto Select" to "histogram" to force that method

or

2. Change the threshold levels to something like 40%,50% and 60%
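To see why too few points beyond the thresholds causes the failure, here is a rough Python sketch. It is not NI's algorithm; the min/max and histogram state estimates, the bin count and all function names are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def reference_levels(low: float, high: float,
                     percents: Sequence[float] = (10, 50, 90)) -> List[float]:
    """Turn percent settings into absolute thresholds between the low and high states."""
    return [low + (high - low) * p / 100 for p in percents]


def states_minmax(signal: Sequence[float]) -> Tuple[float, float]:
    """Simplest state estimate: the signal's minimum and maximum."""
    return min(signal), max(signal)


def states_histogram(signal: Sequence[float], bins: int = 10) -> Tuple[float, float]:
    """Estimate each state as the centre of the fullest bin in the lower/upper half."""
    lo, hi = min(signal), max(signal)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for a flat signal
    counts = [0] * bins
    for x in signal:
        counts[min(int((x - lo) / width), bins - 1)] += 1
    half = bins // 2
    low_bin = max(range(half), key=counts.__getitem__)
    high_bin = max(range(half, bins), key=counts.__getitem__)
    return lo + (low_bin + 0.5) * width, lo + (high_bin + 0.5) * width


def points_outside(signal: Sequence[float], levels: Sequence[float]) -> Tuple[int, int]:
    """Count samples at/below the lowest level and at/above the highest level."""
    below = sum(1 for x in signal if x <= min(levels))
    above = sum(1 for x in signal if x >= max(levels))
    return below, above
```

For the step `[0.0]*5 + [1.0]*4 + [1.5]` (one overshoot sample), min/max states with 10/50/90 leave only one sample above the top level, while either 40/50/60 levels or the histogram states leave five. That is the same effect both suggestions above rely on.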

-

We had two main instruments that we used as I2C masters to communicate with the vendors' chips: The NI-8451 and the Total Phase Aardvark.

That's a fantastic name....lol.

We also had a third instrument that we used for I2C signal validation, the Corelis CAS-1000E. So, following what I thought were standard practices, I designed an abstract class, "I2C Master," and created child classes for those instruments. These child classes were intended to be long-term, shared reuse code. (i.e. One installation shared among many applications.) The base class defined some general I2C functions such as Get/Set Slave Address, Get/Set Clock Rate, Find Devices, Open, Read, Write, Close, and a few others. The common functions are what developers would use if dynamic dispatching is needed. Each child class also wrapped the rest of the instrument's api so the developer could access all of the functions while staying within the same device driver object. Obviously those functions were not available to use with dynamic dispatching.

I designed the entire test system following that type of inheritance pattern. I implemented .lvlibs for each vendor's touch api. I created an abstract "Touch Base" class and derived child classes to wrap the lvlibs. This setup actually worked pretty well... right up until I started getting requests that violated the original scope of the application. While I had tried to predict the types of testing I would be asked to do and designed the test system for as much flexibility as was feasible, my crystal ball simply wasn't up to the task. To accommodate the new requirements within a reasonable timeframe I frequently had to remove some modularity (goodbye 8451) and/or rework my driver stack, the distributed framework code that was supposed to be untouchable. As time went on my 'reusable' code became more and more customized for that particular application. On top of that, I couldn't always guarantee backwards compatibility with previous versions of the drivers. Had those drivers actually been released and used by other developers I would have had a maintenance nightmare on my hands.

I have a solution for that....but you won't like it

If you abstract the interface, rather than the device, you end up with a very flexible, totally (he says tentatively) re-usable driver.

I'll speak generically, because there are specific scenarios which make things a bit more hassle, but they are not insurmountable.

Take our ACE, BAM and HP devices. From a functional point of view we only need to read and write to the instrument to make it do anything we need. I'll assume your fictional DVMs are write-read devices (i.e. you write a command and get a response, rather than streaming), and I'll also assume that they are string-based, as most instruments we come across generally are.

Now....

To communicate with these devices we need to know 3 things.

1. The transport (SERIAL/GPIB/TCPIP etc.)

2. The device address.

3. The protocol.

VISA takes care of 75% of No. 1. No. 2 is usually a part of No. 3 (i.e. the first number in a string). So No. 3 is the difficulty.

So I create a Write/Read VI (takes in a string to write and spits out the response...if any), and I will need an Open and a Close to choose the transport layer and shut it down. I now have the building blocks to talk to pretty much 90% of devices on the market. I'll now imbue the Write/Read VI with the capability to get its command strings from a file if I ask it to. So now, not only can it read and write single parameters, I can point it to a file and it will spew out a series of commands and read the responses. This means I can configure any device in any way I choose just by pointing it to the corresponding config file. New device? New config file. No (Labview) code changes, so you can get a technician to do it.
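The Write/Read building block with its optional "commands from a file" mode could look something like this in Python. The transport is any callable that sends one command and returns the reply; the one-command-per-line format with `#` comments is an assumption, not ShaunR's actual file format, and `write_read` is a hypothetical name.

```python
from typing import Callable, List, Optional

# Hypothetical transport: any callable that writes one command and returns the reply.
WriteRead = Callable[[str], str]


def write_read(transport: WriteRead, command: Optional[str] = None,
               config_lines: Optional[List[str]] = None) -> List[str]:
    """Send a single command, or stream every command in a config file."""
    if config_lines is None:
        commands = [command] if command else []
    else:
        # Assumed file format: one command per line; blanks and '#' comments skipped.
        stripped = (line.strip() for line in config_lines)
        commands = [c for c in stripped if c and not c.startswith("#")]
    return [transport(c) for c in commands]
```

In practice the lines would come from something like `open(path).read().splitlines()`, so supporting a new device means editing only that file.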

Now, in your application, you have a lookup table (or another file) which has a name (alias), the transport, the address, the config file and/or the command for the value you want to read (say, DC:VOLTS?). The read-write file VI is now wrapped in a parser, which takes the info from the table and either formats the message and sends it out through the read-write file VI, or loads the config file.

I now have a driver scheme that not only enables me to add new devices just by adding a config file and an entry in the table, but also enables me to send the same config to multiple instruments on different addresses or different configs to the same devices on different addresses and read any values back I choose. And all I need is 1 VI that takes the alias.
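A Python sketch of the alias lookup and parser, assuming a simple "address, space, command" message format and a `send(transport, message)` callable supplied by the caller. The table contents, the `Entry` fields and `read_alias`/`read_many` are hypothetical; the config-file column is left out to keep it short.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable

Send = Callable[[str, str], str]  # hypothetical: (transport, message) -> reply


@dataclass
class Entry:
    transport: str  # e.g. "SERIAL" or "TCPIP"
    address: str    # device address, often the first field of the message
    command: str    # e.g. "DC:VOLTS?"


# Hypothetical lookup table; in practice it would be loaded from a file.
TABLE: Dict[str, Entry] = {
    "supply_v": Entry("SERIAL", "01", "DC:VOLTS?"),
    "load_v": Entry("SERIAL", "02", "DC:VOLTS?"),
}


def format_message(entry: Entry) -> str:
    # Assumed protocol: address prefix followed by the command.
    return f"{entry.address} {entry.command}"


def read_alias(alias: str, send: Send) -> str:
    """The single entry point: look up the alias, format, send on its transport."""
    entry = TABLE[alias]
    return send(entry.transport, format_message(entry))


def read_many(aliases: Iterable[str], send: Send) -> Dict[str, str]:
    """Same command to several instruments on different addresses."""
    return {a: read_alias(a, send) for a in aliases}
```

Adding an instrument is then a new row in `TABLE` rather than new code.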

Told you you wouldn't like it, because OOP programmers start frothing at the mouth as soon as you mention config files...lol. But I'll come back to this in context a little later on.

As an example, at one point in the project one of the vendors implemented a ~Reset line that had to be pulled low for the chip to operate. Hmm... the 8451 and the Aardvark both have gpio lines and I built those functions into the drivers, but gpio doesn't necessarily fall in the realm of "I2C Master." The CAS-1000 doesn't have gpio lines. Should I create an abstract "GPIO Base" class and derive child classes for the gpio modules (such as the USB-6501 and the gpio functions of the 8451 and Aardvark) so I can continue using the CAS-1000? How would I make sure the Touch:Aardvark class and the GPIO:Aardvark class weren't stepping on each other's toes? After all, they would both be referring to the same device. To avoid a huge redesign I ended up implementing gpio functions in the "I2C Master" abstract class so I could continue using the 8451 and Aardvark. I ditched the CAS-1000 and serial port functionality. (The serial port requirement came back later in the project... ugh.) This is when I started wishing for interfaces.

If your system is such that you only have to code for exceptions, then you are winning.

Near the end of the project much of my reuse code was no longer reusable and modularity was almost completely gone. The system required the Aardvark and only worked with a chip from a single vendor. The system I had created worked well when the requirements remained within my original assumptions. Once those assumptions were violated software changes either took weeks to implement (not an option) or required hard coded customizations.

If anything is changing rapidly, then any software "architecture" is bound to be compromised at some point, and the more you try to make things fit...the more they don't...lol. If it's for internal use only, you are better off with a toolkit with which you can throw together bespoke tests quickly, something Labview is extraordinary at doing.

Amen.

I learned a lot on that project. Two of the main lessons I learned were:

- Before I design the software, I need to understand if I'm creating a finished product or an engineering tool. Finished products have requirements that probably won't change (much). Engineering tools will be asked to do things you haven't even thought of yet. The two require very different approaches. I had designed and built a finished product while the design engineers expected it to behave as an engineering tool. Engineering tools require rapid flexibility above all else (except reliable data).

QUOTE (Daklu @ May 11 2009, 02:21 AM)

Regarding reusing exterfaces across projects... depends on what you define as "reuse code." I expect I might do a lot of exterface code copying, pasting, and modifying between projects. If you consider that reuse code then I'm right there with you. Given point 2 above, I'm in no hurry to distribute interface definitions or exterfaces as shared reuse code. Getting it wrong is far too easy and far, far more painful than customizing them for each project.

Lots of copying and pasting means that you haven't encapsulated and refined sufficiently. I think this is a particularly bad case in the class implementation strategy, which forces you to do this, compared with traditional Labview, which would encapsulate without much effort. After all, in other languages, changes to the base class affect all subsequent inherited classes with no changes whatsoever.

QUOTE (Daklu @ May 11 2009, 02:21 AM)

However, if you keep the interface definition and exterfaces on the project level they don't have to be huge monolithic structures. You define the interface based on what that application needs, no more. There's no need to wrap the entire device driver because chances are your application doesn't need every instrument function exposed to it. If you have to make a change to the interface definition there's no worrying about maintaining compatibility with other applications. Small, thin, light... that's the key to their flexibility.

Lots of small changes as opposed to one big change? I'd rather not change anything, but I don't mind adding.

QUOTE (Daklu @ May 11 2009, 02:21 AM)

"Create Instance" creates a new instance of the device driver and links that exterface to it. "Link to Instance" links an exterface to an already created instance. An exterface will use one or the other, not both. Use "Link" when an instrument is going to use more than one of its functions. The vi "Many to One Example" illustrates this. (Look at the disabled block in "Init.") The system has a single Delta multimeter but it is used to measure both current and voltage. If I create two instances I end up with two device driver objects referring to the same device. That leads to all sorts of data synchronization problems and potential race conditions. By having the second exterface link to the same device driver object the first exterface is using, those problems are avoided.

[Edit: Your question made me realize "Create Instance" and "Link to Instance" aren't good names for developers who are using the api and aren't familiar with the underlying implementation. "Link to Instrument" works, but "Create Instrument" doesn't. Any ideas for better names?]

I've no problems with "Create" (that's the same in other languages, or you could use "New" as some do). But I can't help thinking that "Link To" is a clunky way to return a reference to an object. If "Create" operated in a similar way to things like queues and notifiers, where you can create a new one or it returns a reference to an existing one, it would save an extra definition.
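The queue/notifier-style "Create" suggested above can be sketched as a get-or-create registry keyed by a unique name such as the VISA resource name, returning the instance plus a "created new" flag, similar in spirit to the "created new?" output of LabVIEW's Obtain Queue. `InstrumentRegistry` and `obtain` are hypothetical names; keying on the resource name is one possible answer to the "two Delta multimeters" question, since each physical instrument gets its own key.

```python
import threading
from typing import Any, Callable, Dict, Tuple


class InstrumentRegistry:
    """Get-or-create by name, loosely modelled on named queues and notifiers."""

    def __init__(self, factory: Callable[[str], Any]) -> None:
        self._factory = factory  # builds a new driver object for a resource name
        self._instances: Dict[str, Any] = {}
        self._lock = threading.Lock()

    def obtain(self, name: str) -> Tuple[Any, bool]:
        """Return (instance, created_new); reuse the existing one if the name is known."""
        with self._lock:
            if name in self._instances:
                return self._instances[name], False
            instance = self._factory(name)
            self._instances[name] = instance
            return instance, True
```

A "Voltmeter" and an "Ammeter" exterface asking for the same resource name would then share one driver object without a separate "Link" call.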

QUOTE (Daklu @ May 11 2009, 02:21 AM)

Suppose you have more than one Delta multimeter in your test system. On the second "Create Instance" call should it create a new instance for the second device or link to the first device? There's not really any way for the software to know. Seems to me requiring the developer to explicitly create or link instances makes everything less confusing.

Rereading this I realize I didn't make one of the exterface ideas clear. In my model, each instrument has its own instance. Ergo, if I have four Delta multimeters in my system then I'll have four instances of the device driver object. If the device driver is not class based (such as the 34401) obviously there aren't any device driver objects to instantiate. In those cases the "instance" is simply a way to reference that particular instrument. Since all calls to the 34401 device driver use the VISA Resource Name to uniquely identify the instrument, I put that on the queue instead of a device driver object when XAgilent 34401:Create Instance is called. This allows the 34401 exterface to behave in the same way as exterfaces to class-based device drivers.

Indeed. But having created 4 objects already, what happens if you create a 5th?

-

QUOTE (normandinf @ May 13 2009, 03:07 AM)

I've noticed that a lot of newcomers on this forum are already using LabVIEW 2009... (or so they say)

I feel I've been left behind...

Awwww. Don't feel left out. Feel smug in the knowledge that they will find all the bugs first, so that when you upgrade to 2009.1, it actually works.

-

It's better to post in this forum. Then others may learn from the questions and answers.

-

QUOTE

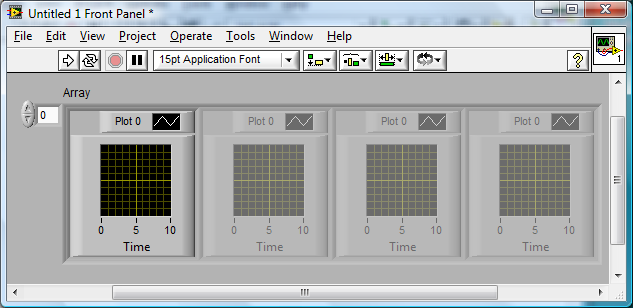

OK. How can I change the size of the control array at run-time? The Array Property Node "NumCols" seems to be read-only.

Right-click on the property node and choose "Change To Write" from the menu.

QUOTE

Also, how do I unpack this on the Block Diagram? If I attach an "Index Array" VI with no index (to indicate I want the first element of the array), the output data type is an array of one element, which is not what I would expect. I would expect a cluster of one WaveGraph.

It should be a cluster containing a 1D array. If you put a waveform graph on your front panel, then right-click its terminal on the diagram and create a constant, you will see it creates a 1D array of double-precision constants. This is the default format. You can see more on the input options in the help for Waveform Graph (a 2D array is a multi-plot, for example).

-

QUOTE (Ton @ May 12 2009, 09:13 PM)

I have a plugin selector, but I want to check beforehand which VIs are valid plugin VIs. Some of these VIs have an enormous footprint and take up to a minute to load, even if the user decides to load another plugin VI. So I want to limit the time needed to check which VIs are valid plugin VIs.

Ton

Can you not do it by the plug-in name? (That way you avoid loading anything.)
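A minimal Python sketch of filtering by name, assuming plugin VIs follow a naming convention such as a `plugin_` prefix (a hypothetical convention, as is the `candidate_plugins` helper). Only the directory listing is read, so no VI is ever loaded.

```python
from pathlib import Path
from typing import List


def candidate_plugins(folder: str, prefix: str = "plugin_") -> List[str]:
    """List plugin VIs by naming convention only, so nothing has to be loaded."""
    return sorted(p.name for p in Path(folder).glob(f"{prefix}*.vi") if p.is_file())
```

The full front-panel check then only needs to run on the VI the user actually picks.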

-

Most PCs have DACs and ADCs built in nowadays.....your sound card, if you're looking for cheap!

-

QUOTE (angel_22 @ May 12 2009, 12:46 AM)

This is not my homework but my university project. The main objective of this project is to discuss the automation of a greenhouse using Supervisory Control and Data Acquisition (SCADA) systems and a LabVIEW program. The automation covers temperature and humidity, so the client can monitor and apply suitable control to the greenhouse from his office.

Thank you all.

A good starting point might be the temperature system demo in the examples directory. It demonstrates alarm (over temp, under temp) detection and would at least provide a frame of reference for you to ask specific questions.

-

QUOTE (Ton @ May 12 2009, 06:47 PM)

Yes, but you use a splash loader....PM me if you are part of the current Beta.

Ton

Sorry, I'm not.

Not quite sure what difference it makes whether the open file ref is in a splash-loader or not, but I also have a "cleaner" which goes through all the VIs, loading them and identifying orphan files. That only takes a couple of minutes to load and check every VI. So I'm still a bit baffled.

-

QUOTE (Neville D @ May 12 2009, 06:22 PM)

.....And no more compatible file-system.....no more 3rd party support. LV-Rt is only a real option if your system is completely NI based.

QUOTE (rolfk @ May 12 2009, 08:10 AM)

Which makes it probably more like XP Embedded anyhow.

Rolf Kalbermatter

Shhhh. Don't tell everyone!

-

QUOTE (Ton @ May 12 2009, 05:58 PM)

I think other people must have covered this as well, so I'll get a little bit more descriptive. I am trying to list all VIs in a given directory that will fit a given framework.

Basically the front panel should have 2 given controls of a certain type and label, together with 3 indicators of a given type and label.

The genuine problem is that loading the VIs with Open VI reference takes up to a few minutes when the VI that is called only takes a few seconds to run. So I try to only open up the front panel and see if the VIs match those criteria.

Ton

This seems a bit bizarre. My whole application (a couple of thousand vi's) only takes a few seconds to load (via an open ref then run from a splash-loader). Why does it take yours a few minutes to load a vi? Does it have to search for sub-vi's?

-

QUOTE (crelf @ May 11 2009, 09:46 PM)

Well, your experience aside I'm not sure I'd have a purely Windows OS controlling anything that could lop off limbs. That said, I don't know your application or system, so I can't speak without ignorance on that.

Indeed....lol.

We don't rely on it; safety-critical aspects are hardware-enforced (like E-STOPs, which cut power and air at the supply). Like I said though, Windows is very robust as long as you don't have all the other crap your IT dept would put on it, disable all the unwanted services, don't use ActiveX or .NET, and only run your software.

-

QUOTE (Michael Malak @ May 11 2009, 10:08 PM)

http://lavag.org/old_files/monthly_05_2009/post-15232-1242078686.png

Put your graph into a cluster. Then put the cluster into an array.

-

QUOTE (crelf @ May 11 2009, 07:25 PM)


If the user ignored an "improper shutdown, hard disk has errors, run scandisk" message and your software faults due to that, is that your fault too?

Yes. The operator's function is solely to load/unload the machine with parts, start the machine in the morning and stop the machine at the end of the shift. No more is expected. If an error is detected in the software, it sends us an SMS with the error and we contact the customer to ensure that preventative action can take place at the next shift changeover.

QUOTE (crelf @ May 11 2009, 07:25 PM)

I'm not saying that you can't plan for these things, but you have to, well, plan for these things. If you know that you need to activate Windows in 30 days or it'll bomb out, then you activate Windows within 30 days so it's okay. You need to gain and apply that same level of product knowledge to all the components you apply to the system - including anything from NI or any other manufacturer. That said, if a user ignored an error for a month (whether that be from an activation issue or a hard fault) and then expected me to fix it immediately once it bombed out, I'd be having a stern talk to them.

Errrrm. I didn't think there was any question of the OP (or me) not having activated LabVIEW in the first place. He was talking about the fact that he had activated it and, after an update, LabVIEW required re-activation.

We have an OEM license for Windows, so activation isn't an issue (if we decide to use Windows, that is; we also use Linux, where activation is irrelevant). Any (and I mean ANY with a capital E) errors must stop the machine and turn it into a paperweight, otherwise people can lose limbs. We have no issues with Windows (it's remarkably stable if you don't load it up with ActiveX or the usual rubbish you find on a desktop PC) and we have had only two Windows failures in four years, both due to malicious intent. Windows has never suddenly popped up a message saying it requires re-activation, or refused to work because of licensing. However, as I pointed out, we have had two instances so far this year of LabVIEW requiring re-activation.
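The "any error stops the machine" rule amounts to a latching fault. A minimal Python sketch of the idea follows; it is not the actual machine software, and `MachineController`, `report_error`, `reset` and the `notify` callback (standing in for the SMS alert) are hypothetical names. Safety functions such as E-STOPs remain in hardware; a software latch like this only guarantees that the application does not carry on after an error.

```python
from enum import Enum


class State(Enum):
    STOPPED = "stopped"
    RUNNING = "running"


class MachineController:
    """Hypothetical software fault latch: any reported error stops the machine,
    and it cannot be restarted until someone deliberately calls reset()."""

    def __init__(self, notify):
        self.state = State.STOPPED
        self.fault = None
        self._notify = notify  # hypothetical callback, e.g. something that sends an SMS

    def start(self):
        if self.fault is not None:
            raise RuntimeError(f"cannot start: latched fault {self.fault!r}")
        self.state = State.RUNNING

    def report_error(self, message):
        # Every error stops the machine; there is no "minor error, carry on" path.
        self.state = State.STOPPED
        if self.fault is None:
            # Only the first fault is latched and reported; later errors don't re-notify.
            self.fault = message
            self._notify(message)

    def reset(self):
        # Clearing the latch does not restart the machine; start() must be called again.
        self.fault = None
```

Keeping `reset()` separate from `start()` means clearing a fault is always a deliberate second action, which matches the "paperweight until someone looks at it" behaviour described above.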

-

A description of your problem would be helpful. Perhaps some pictures of the code you already have?

-

QUOTE (rolfk @ May 10 2009, 10:59 AM)

Well, you do not get that error before you try to read PAST the end of the file. As such it is not really a pain in the ######. Reading up to and including the last Byte does not give this error at least here for me.

Rolf Kalbermatter

It means you can't just "Read X Chars" until >= "file size" without getting an error. The choice is: either you monitor the chunks in relation to the file size and, once you reach "file size - Read X Chars", change "Read X Chars" to the number of bytes remaining; or you ignore the error, but only on the last iteration. If you have another way, I'd be interested. But I liked the old way, where you didn't have to do any of that.
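The two workarounds can be sketched in Python. Python's own `f.read(n)` just returns a short result at end-of-file, so `strict_read` and `EndOfFileError` below are hypothetical stand-ins that mimic a read function which raises an error when asked to go past the end; they are assumed here to still hand back whatever bytes were available.

```python
import io


class EndOfFileError(Exception):
    """Hypothetical: mimics an EOF error raised when a read passes the end of the file."""

    def __init__(self, partial: bytes):
        super().__init__("read past end of file")
        self.partial = partial  # bytes that were available before EOF


def strict_read(f, count: int, file_size: int) -> bytes:
    """Read `count` bytes; raise EndOfFileError if that would pass `file_size`."""
    pos = f.tell()
    if pos + count > file_size:
        raise EndOfFileError(f.read(file_size - pos))
    return f.read(count)


def read_all_clamped(f, file_size: int, chunk: int) -> bytes:
    """Option 1: shrink the last read to the bytes remaining, so no error occurs."""
    parts = []
    while f.tell() < file_size:
        remaining = file_size - f.tell()
        parts.append(strict_read(f, min(chunk, remaining), file_size))
    return b"".join(parts)


def read_all_ignore_last(f, file_size: int, chunk: int) -> bytes:
    """Option 2: always read full chunks and swallow only the EOF error that ends the loop."""
    parts = []
    while True:
        try:
            parts.append(strict_read(f, chunk, file_size))
        except EndOfFileError as eof:
            parts.append(eof.partial)  # keep the short final chunk (may be empty)
            break
    return b"".join(parts)


if __name__ == "__main__":
    data = bytes(range(10))
    print(read_all_clamped(io.BytesIO(data), len(data), 4) == data)
    print(read_all_ignore_last(io.BytesIO(data), len(data), 4) == data)
```

Option 2 catches only the EOF error, so any other file error still propagates rather than being silently ignored.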

-

While you can choose to wrap every function into class methods, that is not usually an abstraction method that works well. What you should do is consider what things you tend to do over and over again and create abstractions for those items.

Agreed. There are certain people I know, however, who would disagree (purists who subscribe to the infinite-decomposition approach). My problem is that complex functions are what I do over and over again (measure pk-pk waveform, rise time, etc.), but the hardware is always changing: not only the devices used to achieve the result, but also the number of different devices (one project might require just a spectrum analyser; another a motor, a spectrum analyser and digital IO).

For example, let's say you want to create a DMM class that supports Agilent and NI DMMs in your application. Both of these DMMs have completely different drivers required for configuring a voltage measurement, but essentially, they both perform the same operations. You can create a DMM.Configure Measurement method with an Agilent (Agilent.Configure Measurement) and NI (NI.Configure Measurement) implementation (and Simulator, and future hardware you haven't yet purchased, etc.). What's nice is that you separate your application logic from your instrument-specific drivers that 'implement' that logic. This is what object-orientation is all about, irrespective of Daklu's 'Extraction Architecture'.
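A minimal Python sketch of the DMM abstraction described above, assuming an abstract base class stands in for the LabVIEW parent class. `DMM`, `AgilentDMM`, `SimulatedDMM` and `measure_dc_volts` are hypothetical names; `session` is any object with `write()`/`query()` methods (a VISA session, for example), and the SCPI strings are illustrative only, so check the instrument manual. An NI implementation would be one more subclass calling the NI driver instead.

```python
from abc import ABC, abstractmethod


class DMM(ABC):
    """Abstract DMM: application code depends only on these methods."""

    @abstractmethod
    def configure_measurement(self, function: str, range_v: float) -> None:
        ...

    @abstractmethod
    def read(self) -> float:
        ...


class AgilentDMM(DMM):
    """Message-based implementation; `session` needs write() and query()."""

    def __init__(self, session):
        self.session = session

    def configure_measurement(self, function, range_v):
        # Illustrative SCPI command; the real syntax comes from the manual.
        self.session.write(f"CONF:{function} {range_v}")

    def read(self):
        return float(self.session.query("READ?"))


class SimulatedDMM(DMM):
    """Stand-in for hardware you don't have (or haven't bought yet)."""

    def __init__(self, value: float):
        self.value = value
        self.config = None

    def configure_measurement(self, function, range_v):
        self.config = (function, range_v)

    def read(self):
        return self.value


def measure_dc_volts(dmm: DMM) -> float:
    """Application logic: identical for every DMM implementation."""
    dmm.configure_measurement("VOLT:DC", 10.0)
    return dmm.read()
```

`measure_dc_volts(SimulatedDMM(1.5))` returns `1.5`; swapping in `AgilentDMM(session)` changes nothing in the calling code, which is the separation of application logic from instrument drivers the paragraph describes.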

This I get. And for something like the "message pump", which has a predetermined superset of immutable functions, it is perfect. But for hardware it seems very hard to justify the overhead of wrapping drivers "to make them fit" when they are supplied by the manufacturer and can be used directly. Yes, the Agilent has a "Voltmeter" function, but it also has maths functions which the others don't have, so those need to be added. Also, just because I have abstracted the "Voltmeter" function doesn't mean I can plug in any old voltmeter without writing a lot of code to support it. If it added (let's say) a frequency measurement, my application would need to be modified anyway.

Daklu's architecture is trying to go one step further, so that if you have other instruments that have overlapping functionality, you can implement an interface (exterface) that both of the instrument classes would implement (feel free to correct me if I'm wrong). I'm not sure how successful his implementation is; I briefly looked at it but did not look long enough to fully understand it.

I get this too, and I don't want to turn this thread into OOP vs. traditional; that's not fair on Daklu.

I added an exterface for the Agilent 34401 to illustrate how I would implement it using that particular IVoltmeter interface definition. Check the readme for notes on it. Remember that interface definitions are determined at the project level; I don't anticipate them being distributed as part of reusable code modules. I designed the IVoltmeter interface based on the needs of my fictional top-level application. Your top-level application may require a different interface definition. Also, I don't think there is any value in implementing the exterface unless you know you'll be swapping out the 34401 with different instruments in this application.

Let me know if you have any more questions.
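One way to picture an exterface around an existing driver, sketched in Python rather than LabVIEW and not taken from Daklu's example code: the manufacturer's driver is used unchanged, and the adapter maps only the methods the project's `IVoltmeter` interface needs. `Agilent34401Driver` and its method names are hypothetical placeholders for the supplied driver VIs, and its fixed reading stands in for real instrument I/O.

```python
from typing import Protocol


class IVoltmeter(Protocol):
    """Project-level interface: only what this application needs."""

    def measure_dc_voltage(self) -> float: ...


class Agilent34401Driver:
    """Stand-in for the manufacturer-supplied driver, used as-is.
    Method names are hypothetical placeholders for the supplied VIs."""

    def __init__(self, resource: str):
        self.resource = resource
        self.function = None

    def configure_dc_voltage(self, range_v: float, resolution: float) -> None:
        self.function = ("DCV", range_v, resolution)

    def read_measurement(self) -> float:
        return 1.234  # a real driver would talk to the instrument here

    def math_average(self, n: int) -> float:
        # Instrument-specific extra that the interface does not expose.
        return self.read_measurement()


class Agilent34401Exterface:
    """Adapter: translates IVoltmeter calls into existing driver calls.
    Only the interface's methods are mapped; everything else stays on the driver."""

    def __init__(self, driver: Agilent34401Driver):
        self.driver = driver

    def measure_dc_voltage(self) -> float:
        self.driver.configure_dc_voltage(range_v=10.0, resolution=0.001)
        return self.driver.read_measurement()


def top_level(voltmeter: IVoltmeter) -> float:
    """Application code sees only IVoltmeter."""
    return voltmeter.measure_dc_voltage()
```

In this arrangement only the methods the interface needs get a wrapper; extras such as `math_average` remain callable on the driver directly.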

The value I see in your interface approach is that it seems to make more of the code reusable across projects, intended or otherwise.

The interface definition needs to be a superset of the project definitions, but that is easy for me since the higher functions are repetitive from project to project (as I explained to Omar). The intermediary "translation" could work well for me, but the prohibitive aspect is still the overhead of writing driver wrappers, which prevents me from taking advantage of it.

I have one question, though. Why do you have to "Link" to a device? Presumably you would have to know beforehand that the device driver is resident. Can it not be transparent? Devices are (generally) denoted by addresses on the same bus (e.g. RS485), by their interface, or both. Can the "Exterface" not detect whether an instance already exists and attach to it, or create it if it doesn't?
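The "attach if it exists, create it if it doesn't" behaviour asked about above is essentially a get-or-create registry keyed by bus and address. A minimal Python sketch, assuming a `(bus, address)` pair uniquely identifies a device; `DeviceRegistry`, `attach` and the `factory` callback are hypothetical names, not part of Daklu's architecture.

```python
import threading
from typing import Callable, Dict, Tuple


class DeviceRegistry:
    """Hypothetical get-or-create registry: one instance per (bus, address)."""

    def __init__(self, factory: Callable[[str, str], object]):
        self._factory = factory  # creates a driver instance for a new device
        self._instances: Dict[Tuple[str, str], object] = {}
        self._lock = threading.Lock()  # parallel callers must not create duplicates

    def attach(self, bus: str, address: str) -> object:
        key = (bus, address)
        with self._lock:
            if key not in self._instances:
                self._instances[key] = self._factory(bus, address)
            return self._instances[key]
```

With this arrangement the caller never has to know whether the driver is already resident: two calls to `attach("RS485", "3")` return the same instance, while `attach("RS485", "4")` creates a new one.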

Also, where do I download the example with the Agilent included? (I tried the original post, but it is the same as before.)


-

QUOTE (Daklu @ May 9 2009, 06:20 PM)

The JKI VIs are part of JKI's VI Tester toolkit. You can download it from here: http://forums.jkisoft.com/index.php?showtopic=985. Use VIPM to install it. Or you could just remove them from the project... you don't need them for the example.

I like the abstraction (I always like the abstraction... lol). And I see where you're going with this and like it (in principle). But the main issue for me (and one big reason I don't use classes) is that we are still not addressing the big issue, which is the drivers.

My applications are hardware-heavy and include motors, measurement devices (not necessarily standardized DVMs; they could be RS485/422, IP, analogue, etc.), air valves and proprietary hardware... well, you get the picture. The hardware I use also tends to change from project to project. And (from what I can tell) to use the class abstraction, I need to wrap every function that is already supplied by the manufacturer just so I can use it for that project. Take the Agilent 34401 example in the examples directory, for instance. If I want to use that in your architecture, do I have to wrap every function in a class, rather than just plonking the pre-supplied VIs into my app, which is pretty much job done? The drivers tend to use a seemingly class-oriented structure (private and public functions, etc.), but there just doesn't seem to be any way of reconciling that with classes (perhaps we are just missing an import or conversion function, or perhaps it exists and I haven't come across it).

I know you have shown some example instruments, which strikes me as fine as long as you are developing your own drivers. But could you show me how to integrate the Agilent example into your architecture so I can see how to incorporate existing drivers? After that hurdle is crossed, I can see great benefits from your architecture.

-

I read your document and on the first pass it all made sense. And I have downloaded the example, but I'm missing a load of JKI VIs (presumably in the OpenG toolkit somewhere). I'm looking for them now.

Just thought I'd say "something" since you're eager for comments.

Just a little patience. Not everyone is in your time-zone and it is the weekend after all.


Convert to square to detect phase shift

in LabVIEW General


QUOTE (Tubi @ May 15 2009, 01:49 PM)

Well the latest example you supplied seems to work fine. I changed the variables on the front panel and it didn't complain. Are you saying that it works in simulation but not on the hardware?

If this is the case, it might be useful to capture the data from the machine to a file and run that through the simulation. Then we can all see and investigate the differences.
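For the thread topic itself, converting to square waves and comparing edges can be sketched in Python so that captured data can be run through it offline. This is a minimal illustration under stated assumptions, not the code from the thread: both signals share one known period in samples, a simple comparator at `threshold` does the squaring (no hysteresis, so noisy data would need filtering first), and only the first rising edge of each signal is used. Resolution is one sample, i.e. 360 / `samples_per_period` degrees.

```python
import math


def to_square(samples, threshold=0.0):
    """Comparator: 1 where the sample is above the threshold, else 0."""
    return [1 if s > threshold else 0 for s in samples]


def rising_edges(square):
    """Indices where the square wave goes from 0 to 1."""
    return [i for i in range(1, len(square)) if square[i - 1] == 0 and square[i] == 1]


def phase_shift_deg(ref, sig, samples_per_period, threshold=0.0):
    """Phase of `sig` relative to `ref` in degrees (0..360); positive means `sig` lags."""
    e_ref = rising_edges(to_square(ref, threshold))
    e_sig = rising_edges(to_square(sig, threshold))
    if not e_ref or not e_sig:
        raise ValueError("no rising edge found in one of the signals")
    lag = (e_sig[0] - e_ref[0]) % samples_per_period
    return 360.0 * lag / samples_per_period


if __name__ == "__main__":
    n = 100  # samples per period
    ref = [math.sin(2 * math.pi * i / n + 0.01) for i in range(3 * n)]
    sig = [math.sin(2 * math.pi * i / n + 0.01 - math.pi / 2) for i in range(3 * n)]
    print(phase_shift_deg(ref, sig, n))
```

With 100 samples per period, a 90° lag shows up as rising edges 25 samples apart. The small 0.01 rad offset in the demo keeps the zero crossings off exact sample points, where a one-sample ambiguity would otherwise appear.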