Everything posted by ShaunR

-

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

Ask them about BridgeVIEW

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

Lava serves this purpose well. In fact, my last interaction with OpenG was here.

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

Of course not. But some of the toolkits haven't been updated for a few years because of the reasons I stated. So it's an example of why the trope about no development activity is untrue. This is why I don't put dates on the public version history. By the way, the idea that development activity is a sign of a "live" project only came about in recent history, once people got used to buggy code and alpha releases purporting to be a release proper. I view continuous updates and lots of activity as a sign of unstable and therefore unusable code. If one is contemplating using a library or piece of software and thinks it is less of a project risk if there is lots of activity then, IMO, one has admitted that one's own software will be unstable and bug ridden. I don't know exactly at what point alpha software was accepted as the norm for a final product, but it seemed to proliferate with the switch to Agile Development.

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

That's a trope initially perpetuated by marketing people. There is no message. If developers discontinue a product they will [almost] always say so in the notes, comments or website unless they were unexpectedly hit by a bus. I have a similar problem with this perception. Many of my toolkits are feature complete at initial release. There is usually a period of bug fixes shortly after release and then no updates because there are no new features and no reported bugs.

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

No library is dead whilst at least one person is using it.

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

In a similar vein, the toolkit gives new starters a lot of examples of how to use prototypes in LabVIEW in small, easily digestible chunks. IMO it has enormous value as a teaching resource and as an exemplar of coding style.

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

Yeah. I can't claim that one. It was originally a criticism of OOP.

The State of OpenG (is OpenG Dead?)

ShaunR replied to Michael Aivaliotis's topic in OpenG General Discussions

I don't think it's just you. For my part, I work with companies that require approved suppliers, so using any third party software is an admin nightmare. Because of that and the length of my wispy white beard, I have accrued my own software which is unencumbered by other licences and can be used by the aforementioned companies because I am approved. Certainly there are some features that have been added to LabVIEW when before only OpenG had those features (getting type def info and auto indexing array spring to mind) and Rolf's zip package is much, much faster than NI's. But a lot of the functionality was just replication of NI's (like most of the string functions). There were a few gems in there, like the inifile being able to write anything (but I think MGI toolkit outpaced that and besides, I moved to SQLite a while ago now). And, as you mentioned, removing array duplicates and its ilk. But, like you say, they are not hard to write, and the incestuous dependencies mean that when you want a banana you get a banana, the gorilla holding the banana, the tree the gorilla sits in and the whole jungle surrounding it. Package that with some BSD 2/3, LGPL, GPL and god knows what else ad nauseam and it makes an otherwise fine package difficult to actually use in anger.

Agreed. But it's the unhandled ones you have to worry about, and the ones that annoy customers the most.

-

A cruel prank by AppBuilder doing exactly what it was told to do...

ShaunR replied to Aristos Queue's topic in LAVA Lounge

It's the nature of the beast. I had something similar today but not quite on the same scale. Every time I tried to build a zip archive with the option "Recurse Directories", it didn't. So I went through the code checking paths and found it was because the boolean wasn't being set for some reason. So having gone down the hierarchy checking the file paths, I then went back up checking the boolean, only to find that the control on the front panel wasn't wired to the unzip sub vi for that option. Now you might say DUH! But in my defence, it wasn't obvious because two boolean controls were vertically compressed and the wire of the boolean control above (which was a function to be worked on next and therefore expected to be unwired) went underneath "Recurse Directories" and came out looking like its wire. So if I had tried the other option (Include Empty Dirs) it would have recursed and I would have known immediately rather than 20 minutes later.

LAVA Server Maintenance This Weekend (11\03\18)

ShaunR replied to Michael Aivaliotis's topic in Site News

Thanks Michael. Things seem to be much improved.

I often wondered about the legality of that. Technically, you need never buy a copy of LabVIEW if you just use a VM and are happy with the trial logo permeating the front panel. M$ have particular rules about VMs (and Apple actively prohibit their OS on them) but NI have never (to my knowledge) explained their stance on them.

-

As per usual, I'm an outlier here. I hardly ever disable Automatic Error Handling and prefer to use Clear Error.vi where necessary (although I have my own Clear Error.vi which is re-entrant, rather than the native one which is not). My reasoning is thus. It demonstrates that the error condition has been considered and a specific decision has been made to ignore it. Automated test harnesses reveal uncaught errors (by never completing) whereas they may pass if AEH is turned off. I'd rather an error dialogue pop up on a customer machine, which at least gives me some information about where to look if an unconsidered error occurred, rather than them saying "I press this button and it doesn't work". Turning it off is a code smell for "cowboy coder".

-

In a pinch, you can download the trial of the latest version and use that to back-save. It's not a permanent solution as the trial period is something like 7 days. Most developers subscribe to the NI SSP (Standard Service Program) and get yearly updates of the latest version, but that doesn't mean there are no "issues", and the only workable solution is for everyone in the entire company to be on the same version and upgrade at the same time.

-

This is one of the reasons I still use 2009 (apart from performance and robustness). The only viable option is to program in the minimum LabVIEW version you intend to support. It's one of the main issues with cooperative, group development and requires a strict regime of everyone synchronizing versions in repositories. It can be done with different versions by back-saving, but it usually ends in tears. Backward compatibility in LabVIEW is exceptional. Forward compatibility requires a crystal ball and unicorn droppings.

-

You're not liable, though. JKI is. That is my point. You gonna sue?

-

Have they? The zlib library isn't GPL or LGPL and the VIs are BSD. I assume that Rolf is intending to make an intermediary library that is LGPL, and that means compliance with, now, 3 licences, the most onerous being LGPL. That's not my vision and I would argue that it would be no better than under vi.lib. I think we both see the value of install, but for uninstall you don't want to touch the target packages; just delete or recreate the links.

-

Practically speaking, if you delete a hard-link, you delete the target. If you delete a soft-link, you only delete the link. There's a little more to it than that, but not much. Windows file APIs are link-aware. The in-built LabVIEW ones are not.
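The soft-link half of that is easy to check outside LabVIEW. What follows is only a rough Python sketch, not anything from the package manager being discussed: the function name and the file names are made up for illustration, and on Windows `os.symlink` may need Developer Mode or elevated rights. It does not attempt to show the hard-link case.

```python
import os
import tempfile


def delete_soft_link_keeps_target():
    """Create a file and a soft link to it, delete the link, report what is left.

    Returns (target_still_exists, link_still_exists).
    File names are hypothetical and exist only inside a temporary directory.
    """
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "package.txt")       # stands in for an installed package file
        link = os.path.join(tmp, "package_link.txt")    # stands in for the link created at install

        with open(target, "w") as f:
            f.write("payload")

        os.symlink(target, link)   # soft (symbolic) link pointing at the target
        os.remove(link)            # removes the link itself, not what it points to

        return os.path.exists(target), os.path.lexists(link)


if __name__ == "__main__":
    print(delete_soft_link_keeps_target())
```

`os.path.lexists` is used for the link so that the check looks at the link entry itself rather than following it.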

-

I was specifically talking about GPL, but some of it probably applies to LGPL, as some people (like CentOS) have re-licenced to older versions due to "Licence compatibility issues". So here we go. No one can decide if dynamic linking is a derivative work or not. There is the burden of making source code available for a few years. No one can decide what derivative licences are compatible, not compatible, or if any code monkey, like me, is in violation. It's unclear, to me, what happens when a customer makes their application and what burdens are imposed on them. As I understand it, they have the same distribution burdens that I would have (which they might not be aware of) and that could open them up to a legal threat. The problem with GPL is that everyone (including lawyers) argues about what does and doesn't constitute a violation. It is the uncertainty that means it is unusable, especially in cases where the original licences (like zlib) are so permissive and are not surrounded by these complications.

-

Programmatically select all boolean (best way to avoid race condition)

ShaunR replied to mthheitor's topic in User Interface

An indicator has two "labels": the label and the caption. Show the caption (it should default to the same as the label) and hide the label. Then rename the labels to 1_Home, 2_Ensaio etc. (the caption will be what the user actually sees, without the numbers). Now you can get the label name, split off the number and put it into a cluster with the value (if you have fewer than 10 controls, you don't need to convert to a numeric). Make sure the number is the first item in the cluster, because LabVIEW will automatically sort on the first element, so you can use the sort array directly. From there you can sort the array of clusters and now you know your clustered booleans are in numerical order.
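For anyone who finds text easier to follow than a block diagram, the same idea can be sketched in Python. This is only an analogy, assuming the labels follow the `<number>_<name>` pattern described above; the function name and the labels `3_Stop` and `10_Config` are made up for the example. A tuple plays the role of the cluster, and sorting a list of tuples compares the first element first, much like sorting an array of clusters.

```python
def order_by_label_prefix(controls):
    """Return control values ordered by the numeric prefix of their labels.

    controls: list of (label, value) pairs, labels shaped like "1_Home".
    """
    keyed = []
    for label, value in controls:
        number, _, _name = label.partition("_")   # split "1_Home" into "1" and "Home"
        keyed.append((int(number), value))        # number first, so it drives the sort
    keyed.sort()                                  # tuples sort on their first element first
    return [value for _, value in keyed]


if __name__ == "__main__":
    # Labels in whatever order the references happened to arrive in.
    controls = [
        ("2_Ensaio", False),
        ("1_Home", True),
        ("10_Config", True),   # hypothetical label
        ("3_Stop", False),     # hypothetical label
    ]
    print(order_by_label_prefix(controls))
```

The conversion to an integer is what keeps `10_Config` after `3_Stop`; sorting the raw text would only be safe with fewer than 10 controls, as noted above.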

-

Well. Having to use TPLAT, I have various tools for, how should I say, un-cocking its cock-ups. No compromises. Either it installs stuff properly with a click or two or it will be relegated to "just another installer that doesn't really do what I need". Sweet. What licence is it? (Please don't say GPL, please don't say GPL, please don't say GPL)

-

OK. So what sort of features would we want in a new package manager? Here are a couple that I've long hoped for: the ability to export a package dependency list (just the names and versions, or more than that?); the ability to export the licences of a package and all its dependencies; automatic detection of 32 and 64 bit binaries; support for installing VIPM, OGPM, LVPM, executables (like setup.exe) and zipped packages; import from public online repositories (like GitHub, Bitbucket etc). What else?
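On the 32/64 bit detection, one possible approach for Windows binaries is to read the machine field from the PE header. The Python below is a minimal sketch of that idea only, not a proposal for how any existing package manager does it; the function name and the returned strings are invented for the example, and it only knows about the x86 and x64 machine codes.

```python
import struct

# Machine codes from the PE/COFF header; only the two common ones are handled here.
MACHINE_TYPES = {0x014C: "32-bit (x86)", 0x8664: "64-bit (x64)"}


def pe_bitness(data: bytes) -> str:
    """Report the bitness of a Windows DLL or EXE given its raw bytes."""
    if len(data) < 0x40 or data[:2] != b"MZ":
        raise ValueError("not a PE file (missing MZ header)")

    # The 4-byte little-endian offset of the PE signature lives at 0x3C.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\0\0":
        raise ValueError("not a PE file (missing PE signature)")

    # The 2-byte machine field follows the signature directly.
    (machine,) = struct.unpack_from("<H", data, pe_offset + 4)
    return MACHINE_TYPES.get(machine, f"unknown (0x{machine:04X})")
```

Typical use would be to read the first kilobyte or so of each binary in a package, e.g. `pe_bitness(open(path, "rb").read(1024))`, and pick the matching one for the installed LabVIEW bitness.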

-

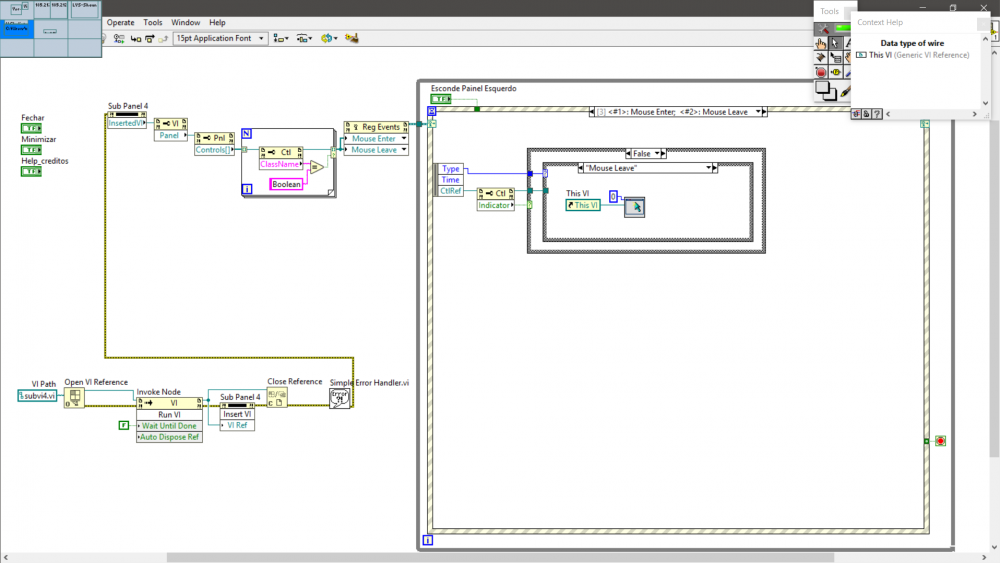

How to make SUBVI change Mouse Icon programmatically

ShaunR replied to mthheitor's topic in User Interface

main.vi I've back-saved to LV 2012 but I don't have 2012 available ATM to check (and can't remember when the "Inserted VI" property was introduced).

trigger on a sequence of two sequential Boolean events

ShaunR replied to NateTheGrate's topic in LabVIEW General

Something like this? ab.vi