-

Posts

4,942 -

Joined

-

Days Won

308

Posts posted by ShaunR

-

-

15 hours ago, X___ said:

We need to be realistic and pragmatic.

We need more Xerox Parc and less Trailer Park.

-

-

-

On 10/30/2023 at 4:08 PM, Thomas_VDB said:

LabVIEW is in great need of big investments/rework. As much as I disliked NXG, it was started for a valid reason. Without such investments, we keep working every day in a development environment whose foundations are (almost) 40 years old.

Well. C++ is just as old and C is 10 years older - so go figure! The whole software discipline hasn't really moved on in the last 60 years (check this out!) and while I do see AI changing that, it's probably not in the way you think. If AI is to be instrumental to programming, it must come up with a new way of programming that obsoletes all current languages, not automate existing languages. Software needs its Einstein vs Newton moment and I've seen no sign of that coming from the industry itself. Instead we get more and more recycled and tweaked ideas, which I like to call Potemkin Programming or Potemkin languages.

On 10/30/2023 at 4:08 PM, Thomas_VDB said:

I don't see such a thing coming for LabVIEW, which will rapidly make LabVIEW fall behind in comparison to other languages.

I disagree. LabVIEW, so far, has been immune to AI but it has also been trapped in the Potemkin Programming paradigm (ooooh. PPP). It needs another "Events" breakpoint (when they introduced the event structure and changed how we program). Of all the languages, LabVIEW has the potential to break out of the quagmire that all the other languages have languished in. What we don't need is imports from other languages making it just as bad as all the others. We need innovators (like the guy that invented malleable VIs for funsies), only in their 20s and 30s, not their 70s.

-

-

-

13 hours ago, X___ said:

No, it did not. It did mention that groupdocs was not free though!

What puzzles me is that it did not mention that this was a standard feature of the python PIL package:

```python
from PIL import Image
import matplotlib.pyplot as plt

filename = '/content/drive/MyDrive/my image.png'  # point to the image
im = Image.open(filename)
im.load()
plt.imshow(im)
plt.show()
print(im.info)
print('Metadata Field: ', im.info['Metadata Field'])
```

which is probably the approach I will use in my migration to Python experiment.

You've really been gulping down that AI Kool-Aid, huh?

I was going to mention PIL but IIRC it only supports standard tags (like the .NET that you criticized) so didn't bother to mention it.

-

Being picky

52 minutes ago, ensegre said:

in my case I don't see appreciable differences between x3 and compound +++

vs

20 minutes ago, ensegre said:

~117ms with compound +++, twice + x3 and 3x, whereas ~127ms with compound arithmetic 3x or x3

Can't be both

(and that's 10ms)

However, you have a timing issue in the way you benchmark in your last post. The middle gettickcount needs to be in its own frame before the for loop.

-

6 minutes ago, ensegre said:

in my case I don't see appreciable differences between x3 and compound +++. Maybe there is something platform dependent, if at all.

With the replace array it makes no difference but in your original it made about 10ms difference (which is why I thought it was a compile optimization)

-

What's interesting about ensegre's solution is the unintuitive use of the compound arithmetic in this way. There must be a compiler optimization that it takes advantage of.

-

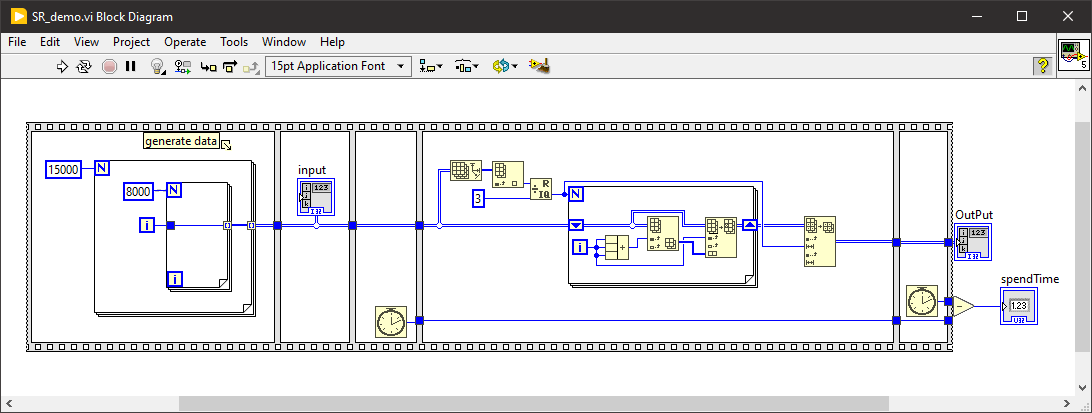

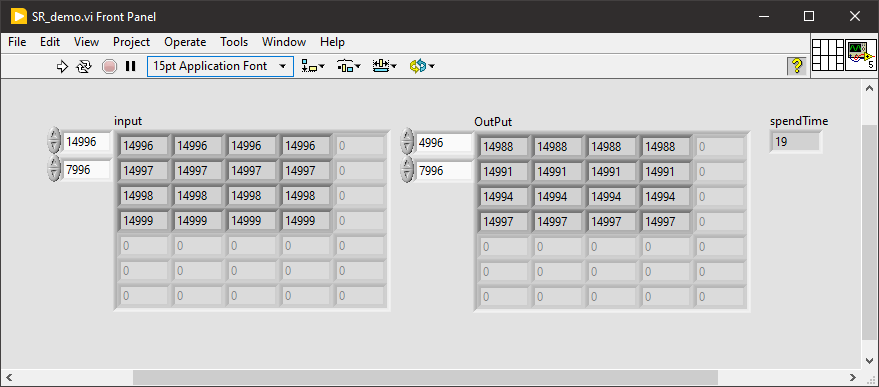

My go (improvement of ensegre's solution).

Original: 492 ms

ensegre original: 95 ms

ensegre with multiply by 3 instead of 3 adds: 85 ms

Conditional indexing: 72 ms

Replace array (this one): ~20ms.

-

-

-

Didn't your AI guru suggest exiv2?

-

-

Quote

Add new class as main test project class and change inheritance make TestLite/Core/Project.lvclass as parent of your project class.

Nope. Not gonna fly, I'm afraid. This is the problem with LV POOP.

I like the bit at the end: a video to show us just how complicated it is.

Is this a Test Stand lite? Or is it actually a test framework for unit testing?

-

18 hours ago, codcoder said:

Interesting take but does it fly with TestStand?

Nope. But if you are interfacing to TS then changing clusters will break the connector anyway. You are much better off using strings (like JSON or XML) to TS.
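A minimal sketch of the string-based approach, in Python purely for illustration. The `Limits` record, its field names, and the `encode`/`decode_limits` helpers are all hypothetical; the point is only that a reader which picks out the keys it knows keeps working when the sender adds fields, whereas a fixed cluster on a connector would not.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Limits:
    """Hypothetical record standing in for a LabVIEW cluster."""
    low: float
    high: float
    units: str = "V"


def encode(record) -> str:
    """Serialize any dataclass instance to a JSON string."""
    return json.dumps(asdict(record))


def decode_limits(text: str) -> Limits:
    """Rebuild Limits from JSON, ignoring keys this version does not know."""
    data = json.loads(text)
    known = {name: data[name] for name in ("low", "high", "units") if name in data}
    return Limits(**known)


if __name__ == "__main__":
    # A newer sender adds "reserved" and "channel"; the older reader still copes.
    newer = '{"low": 1.5, "high": 3.3, "units": "V", "reserved": 0, "channel": 4}'
    print(decode_limits(newer))
    print(encode(Limits(0.0, 5.0)))
```

The same idea applies to XML; what matters is that the interface type stays a plain string while the payload is free to grow.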

-

-

-

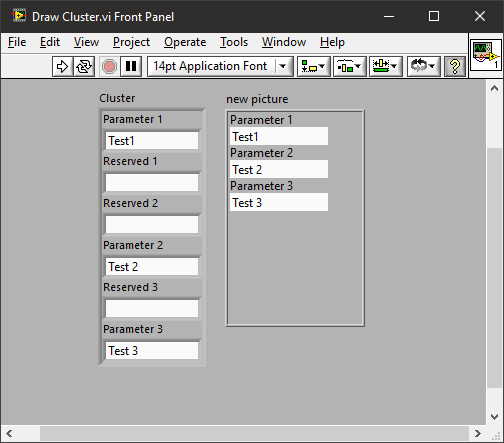

You could just draw the cluster on a picture control. You could then easily filter out the "reserved". You wouldn't need to change the UI every time it changes and I expect the user doesn't care what the underlying cluster is.
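As a rough sketch of the idea (in Python rather than LabVIEW, with a nested dict standing in for the cluster), the display can be generated from whatever fields exist at run time, dropping anything named "reserved". The function names and the sample field names are hypothetical.

```python
from typing import Any, Dict, Iterator, Tuple


def visible_fields(cluster: Dict[str, Any], prefix: str = "") -> Iterator[Tuple[str, Any]]:
    """Yield (label, value) pairs from a nested dict, skipping reserved fields."""
    for name, value in cluster.items():
        if "reserved" in name.lower():
            continue  # never shown to the user
        label = f"{prefix}{name}"
        if isinstance(value, dict):
            # Recurse into sub-clusters, building dotted labels.
            yield from visible_fields(value, prefix=f"{label}.")
        else:
            yield label, value


def render(cluster: Dict[str, Any]) -> str:
    """Lay the fields out as aligned text lines, one per field."""
    rows = list(visible_fields(cluster))
    width = max((len(label) for label, _ in rows), default=0)
    return "\n".join(f"{label.ljust(width)} : {value}" for label, value in rows)


if __name__ == "__main__":
    sample = {"Voltage": 3.3, "Reserved1": 0, "Status": {"Ready": True, "reserved": 255}}
    print(render(sample))
```

Because the layout is derived from the data, adding or removing a field changes the output without any UI edits.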

-

-

-

What are you expecting? DC is in the extended character set.

In the help for the To Lowercase function it states:

Quote

It also translates any other value in the extended ASCII character set that has a lowercase counterpart, such as uppercase alphabetic characters with accents

What it converts to is probably dependent on the code page you are using.
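To illustrate the code page dependence, here is a small Python sketch. It assumes "DC" means the byte value 0xDC, and it uses Python's codecs rather than LabVIEW's To Lowercase, so it only demonstrates the principle: the same byte is a different character (with or without a lowercase counterpart) depending on the code page. The `lowercase_byte` helper is hypothetical.

```python
def lowercase_byte(value: int, code_page: str) -> int:
    """Lowercase a single byte as interpreted under the given code page.

    Returns the byte unchanged when it is undefined in that code page or
    when the lowercase form has no single-byte encoding there.
    """
    try:
        char = bytes([value]).decode(code_page)
        lowered = char.lower().encode(code_page)
    except UnicodeError:
        return value
    return lowered[0] if len(lowered) == 1 else value


if __name__ == "__main__":
    # Same byte, three interpretations: Latin, Cyrillic, and a box-drawing glyph.
    for page in ("cp1252", "cp1251", "cp437"):
        char = bytes([0xDC]).decode(page)
        result = lowercase_byte(0xDC, page)
        print(f"{page}: 0xDC is {char!r}, lowercases to 0x{result:02X}")
```

In the two Windows code pages 0xDC is an uppercase letter and moves to a different byte; in cp437 it is a block-drawing character and stays put.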

-

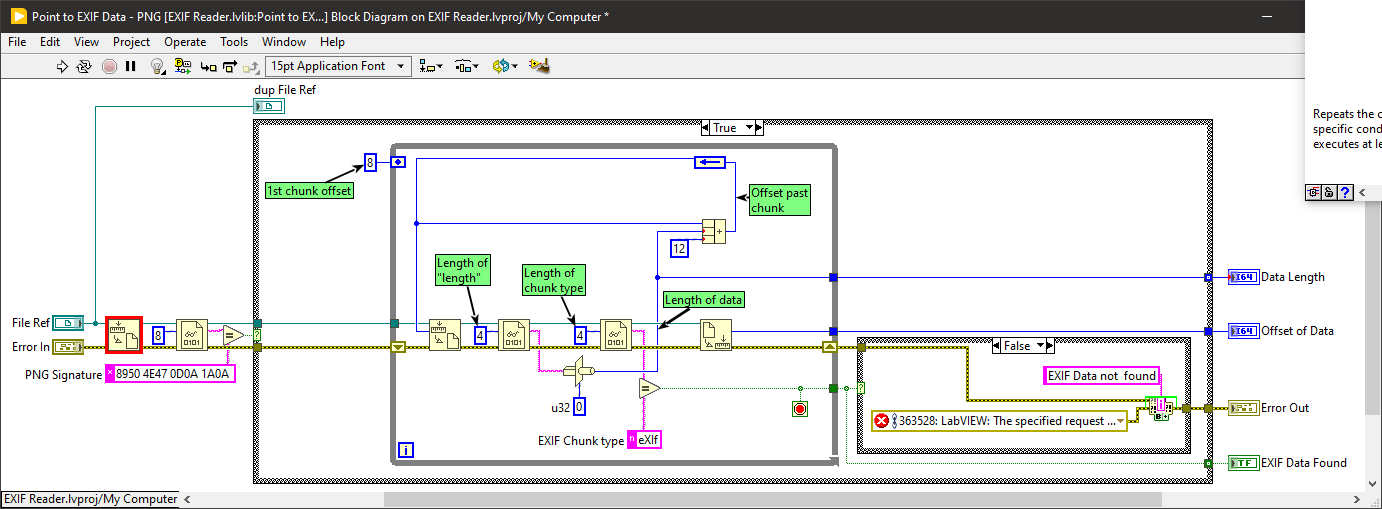

Nicely written but doesn't work for me (LabVIEW 2023 64 bit on Windows 10) using this image:

It hangs and never returns in "Point to EXIF Data - PNG.vi".

The while loop never terminates as "Get File Position.vi" returns Error 4 (end of file) and there is no check on errors inside the loop so it keeps going and incrementing "Offset past chunk". Additionally, there is no check on the value of the "Offset past chunk" in case it has increased beyond the number of bytes in the file (optional defensive programming).
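For reference, a minimal sketch of a chunk walk with both checks in place, written in Python since the VI itself can't be shown as text. It is not the toolkit's implementation; the function name and the choice of the `eXIf` chunk type are assumptions. The layout it relies on is the standard PNG one: an 8-byte signature, then chunks of 4-byte big-endian length, 4-byte type, data, and 4-byte CRC.

```python
import struct
from typing import Optional, Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def find_chunk(data: bytes, wanted: bytes = b"eXIf") -> Optional[Tuple[int, int]]:
    """Return (data_offset, data_length) of the first matching chunk, or None.

    The loop ends on a match, on IEND, or as soon as the next chunk header or
    body would run past the end of the data, so it cannot spin forever.
    """
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    offset = len(PNG_SIGNATURE)
    while offset + 8 <= len(data):          # room for length + type
        length, ctype = struct.unpack_from(">I4s", data, offset)
        body = offset + 8
        if body + length + 4 > len(data):   # data + CRC must fit in the file
            return None                     # truncated or corrupt file
        if ctype == wanted:
            return body, length
        if ctype == b"IEND":
            return None                     # last chunk reached, nothing found
        offset = body + length + 4          # skip data and CRC
    return None
```

A file with no matching chunk, or one that is cut short, returns `None` instead of looping.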

You also have a custom installer which doesn't create all the palette icons correctly. I would recommend you look at the JKI VI Package Manager, which is the de facto standard method for creating and installing add-on packages.

-

18 hours ago, hooovahh said:

I have a guy, that has to hire a guy to get the work done

Or, in other words, "A Manager". That's all managers are! Your value isn't diminished, you've just been given the opportunity to increase your skill-set.

-

15 hours ago, Phillip Brooks said:

I can limp along, but I'm unhappy arriving at work every day and I'm not sure how long I can continue.

That's the point where most of us switch to consultancy. For that switch, though, you need good personal relationships with customers and to be happy with an irregular income.

-

22 hours ago, hooovahh said:

My problem with this, is that for some tasks if I do it in LabVIEW it might take a week. And if I were asked to do that task in any other language I'd ask for a year.

Well. If I were your manager then I'd ask you to find a contractor that can do it in a week and task you with managing them.

21 hours ago, codcoder said:

The point I'm trying to convey here is that people often become closely associated with their roles in their respective fields, not necessarily as a programmer in the language that dominates in that field.

I feel this is a different point.

I am a Systems Engineer and a programming language is a tool I use to engineer a system

That is different from what I was saying, which is that languages are pretty much all the same. The latter is a poke at the industry lacking diversity in its approach to programming and that I (and others) only see a difference in syntax and not really in execution. You might argue that Ladder Programming is a different beast to C/C++ (which it is) but both of those are 50 years old. LabVIEW has more in common with Ladder programming than any of the more modern languages, which is why many people struggle when they move from a text-based language.

There are 32 types of hammers but they are all still hammers. That's how I see programming languages.

-

-

-

1 hour ago, codcoder said:

You're a front-end web developer first and a JavaScript coder second.

Are you? What has front end web development to do with Node.js?

Quote

Node.js® is an open-source, cross-platform JavaScript runtime environment.

I'm guessing you have only used JavaScript for client-side browser scripting. Don't forget, client-side JavaScript is nothing without HTML, itself another language.

-

1 hour ago, codcoder said:

Just because you know JavaScript as a frontend web developer doesn't mean you can transition to C and become an embedded developer.

I don't see why not.

JavaScript syntax and structures are based on Java and C so it's a much easier transition than, say, to embedded Python. The point here is that it's not the language that makes transition difficult. It's the awareness of the limitations of the environment and how to access the hardware.

-

1 hour ago, codcoder said:

The discussion here often assumes that all text-based languages are generic and interchangeable. I don't get that.

They pretty much are, at the end of the day. When you program in Windows, you are programming the OS (win32 API or .NET). It doesn't really matter what language you use but some are better than others for certain things. It's similar with Linux, which has its own ecosystem based on the distributed packages.

Where LabVIEW differs is in the drivers for hardware and that is where the value added comes from. The only other platform with a similar hardware ecosystem is probably something like Arduino.

-

-

-

I always find this kind of question difficult because LabVIEW is just my preferred language. If an employer said we are switching to something else, I'd just shrug my shoulders, load up the new IDE and ask for the project budget number to book to.

I think the issue at present isn't really if there is demand for the next 10 years. It's what Emerson will do at the end of this year. You could find that LabVIEW and TestStand are discontinued this year, let alone in 10.

-

-

-

1 hour ago, Rolf Kalbermatter said:

Icons are the resource sink of many development teams. 😀

Everybody has their own ideas what a good icon should be, their preferred style, color, flavor, emotional ring and what else. And LabVIEW actually has a fairly extensive icon library in the icon editor since many moons.

You can't find your preferred icon in there? Of course not, even if it had a few 10000 you would be bound to not find it, because:

1) Nothing in there suits your current mood

2) You can't find it among the whole forest of trees

3) Used all the good ones in other products.

I have a load of icon sets from Tucows which is now defunct. Icon sets are difficult to find nowadays. You used to get 200 in a pack. Now they are individual downloads on websites.

-

You may also be interested in some of the other functions instead of asking the user to change the input method.

-

18 hours ago, Neil Pate said:

@ShaunR and @dadreamer (and @Rolf Kalbermatter) how do you know so much about low level Windows stuff?

Please never leave our community, you are not replaceable!

Oh, I am easily replaceable.

The other two know how things work in a "white-box", "under-the-hood" manner. I know how stuff works in a "black-box" manner after decades of finding work-arounds and sheer bloody-mindedness.

Three Button Dialog truncates message or adds "Standard" at the end

in LabVIEW Bugs

Posted

I cannot replicate this behaviour. (LV 21.0.1f2)