Posts: 5,001

Days Won: 311


Everything posted by ShaunR

-

Open Source SW and malicious intent

ShaunR replied to Matthew Rowley's topic in OpenG General Discussions

I said it was intuitively obvious that one was a higher risk than the other. Someone trying to kill you poses a higher risk that you will die than someone who isn't, and I don't need to spend three weeks investigating to come to that conclusion unless I want a few decimal places on that binary result. If you actually look at the security assessments, you will see things like "mitigating controls exist that make exploitation of the security vulnerability unlikely or very difficult" and "Likelihood of targeting by an adversary using the exploit" (my emphasis). So even the formal appraisals require intuitive judgement calls.
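To illustrate how much of the formal process still rests on judgement, here is a minimal sketch of a qualitative likelihood × impact risk matrix in Python. The 1 to 5 scales, the labels and the band thresholds are all hypothetical; in a real assessment, picking the label for each input is exactly the intuitive call described above.

```python
# Hypothetical 1-5 ordinal scales. Choosing which label applies is the
# judgement call; the arithmetic only makes the judgement look precise.
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost certain": 5}
IMPACT = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "severe": 5}


def risk_score(likelihood, impact):
    """Classic likelihood x impact product on the ordinal scales above (1..25)."""
    return LIKELIHOOD[likelihood] * IMPACT[impact]


def risk_band(score):
    """Map a score to a band; these thresholds are illustrative, not a standard."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"
```

So a deliberate adversary might be scored "likely" × "severe" = 20 (high) and an honest mistake "unlikely" × "minor" = 4 (low), but someone still had to choose those labels.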

Open Source SW and malicious intent

ShaunR replied to Matthew Rowley's topic in OpenG General Discussions

I've thought long and hard about your question. While it is intuitively obvious, I'm unable to quantify it outside of the formal risk assessment process, which itself can be fairly subjective. (You do risk assessments, right?) When I say "obvious", I mean in the same sense that the prospect of being beaten senseless on a Friday night is intuitively worse and riskier than tripping over and possibly hurting yourself, even though I don't know the probabilities involved. I'm aware of formal processes for mitigation of malicious code, which tend to be fluid and dependent on the particular adversary. However, I'm not sure how you would apply those processes to incompetence.

Open Source SW and malicious intent

ShaunR replied to Matthew Rowley's topic in OpenG General Discussions

Indeed. The "Compatible with LabVIEW" program is multi-tier and intended to standardise the out-of-the-box experience of add-ons and herd the cats. The lowest level basically states that you have followed the style and submission guides, whereas silver and gold simply attest that you have had positive reviews from real customers on levels of support (responses within 48 hrs, etc.). Once a product is on the Tools Network and approved, the same rigor of checks required to gain acceptance is not applied to every release, so I would be very wary of a supplier offering this as proof in isolation.

Open Source SW and malicious intent

ShaunR replied to Matthew Rowley's topic in OpenG General Discussions

Citation?

Open Source SW and malicious intent

ShaunR replied to Matthew Rowley's topic in OpenG General Discussions

Don't use open source with this project (and that is not a glib comment). Their procedures may let you get away with verifying a PGP key against a distribution, but the legal ramifications are not worth it unless you have a dedicated legal team. Do you have a dedicated legal team? If not, then bite the bullet and refactor the open-source components out. (Then apply to become one of their "preferred suppliers".)

-

Yes. I have looked at the RT Images directory now and it seems fairly straightforward. There seem to be a couple of ways to utilise the deployment framework, but the only thing I'm not sure of is the origin of the GUID strings. I haven't looked at the zip package as yet. I get around the elevation by using my own VI that is invoked as the pre-install VI of VI Package Manager. If it fails, it tells the user to run the LabVIEW IDE using "Run As Administrator". Not perfect, but users seem to have no issue with this process and it works on other platforms (like Linux and Mac) too, of course changing the appropriate command (su, kdesu, etc.). Since I have all the tools, I'm also thinking of SFTP from a LabVIEW menu instead of the NI deployment. MAX and I don't really get along, and Silverlight brings me out in hives. It would be great for the Linux platforms and would avoid most, if not all, of the privilege problems since I would be able to control the interaction. I'm just mulling over whether I want that knowledge and can be bothered to see where it goes.
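The pre-install check above is a LabVIEW VI run by VI Package Manager; the same logic is sketched here in Python purely for illustration. `is_elevated` and `preinstall_check` are hypothetical names, and the sketch assumes "elevated" means effective UID 0 on Linux/macOS and `shell32.IsUserAnAdmin` returning non-zero on Windows.

```python
import ctypes
import os


def is_elevated():
    """Return True if this process runs as administrator (Windows) or root (POSIX)."""
    if os.name == "nt":
        # IsUserAnAdmin is a legacy shell32 export, but it is still available.
        return bool(ctypes.windll.shell32.IsUserAnAdmin())
    return os.geteuid() == 0  # effective UID 0 means root


def preinstall_check():
    """Hypothetical pre-install step: return None if OK, otherwise a hint for the user."""
    if is_elevated():
        return None
    if os.name == "nt":
        return 'Please restart the LabVIEW IDE using "Run As Administrator" and try again.'
    # On Linux/macOS the equivalent is re-running via su, kdesu, etc.
    return "Please re-run with root privileges (e.g. via su or kdesu) and try again."
```

Failing early with a message, rather than part-way through an install, is the point of running it as a pre-install step.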

-

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

For completeness, here is how you could have used the flatten function from your first try: python example.zip
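The attachment isn't reproduced here, so as a rough stand-in: a minimal Python sketch of the receiving side, assuming the LabVIEW server sends each message as a 4-byte big-endian I32 byte count followed by the payload. The helper names are hypothetical.

```python
import io
import struct


def read_exact(stream, n):
    """Read exactly n bytes from a binary stream, e.g. sock.makefile("rb")."""
    data = stream.read(n)
    if len(data) != n:
        raise EOFError(f"expected {n} bytes, got {len(data)}")
    return data


def read_lv_message(stream):
    """Read one message framed as a big-endian I32 byte count plus payload."""
    (length,) = struct.unpack(">i", read_exact(stream, 4))
    if length < 0:
        raise ValueError(f"negative length header: {length}")
    return read_exact(stream, length)


def split_messages(buffer):
    """Split a captured byte buffer into its length-prefixed messages."""
    stream = io.BytesIO(buffer)
    messages = []
    while stream.tell() < len(buffer):
        messages.append(read_lv_message(stream))
    return messages
```

On a live connection you would pass `sock.makefile("rb")` from `socket.create_connection(...)`; reading exactly N bytes matters because a single `recv` can return fewer bytes than were sent.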

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

You can have your cake and eat it if you wrap them in a VI Macro.

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

I'm using 2009; I'll check the others later. ...A little later... OK for 2014 too (it must be operator error on your machine).

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

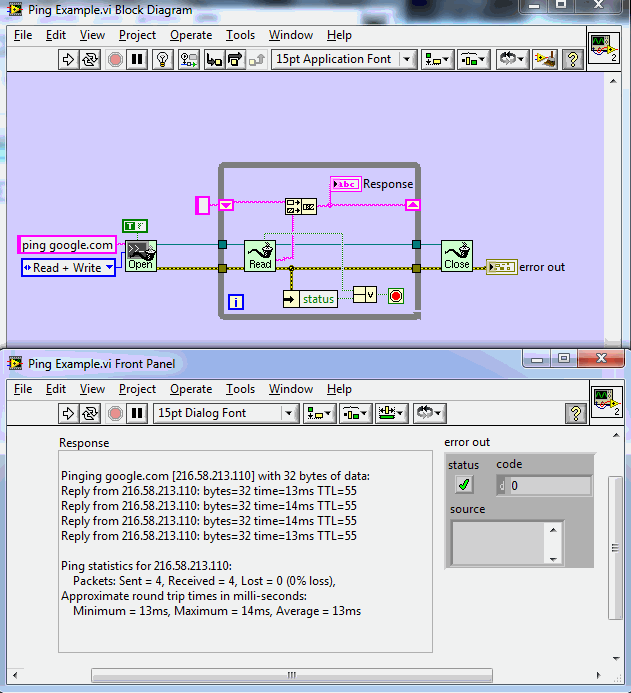

Well. The TCP Write can accept a U8 array, so it's a bit weird that the read cannot output to a U8 indicator; a simple adapt-to-type on the read primitive would make both of us happy bunnies. Ahem. "\r\n" (CRLF), rather than EOF (which means nothing as a string in LabVIEW), is the usual ASCII terminator, and then you can "readlines" from the TCP stream if you want to go this route. You will find there is a CRLF mode on the LabVIEW TCP Read primitive for this too. Watch out for the localised decimal point in your Number To String primitive, which may surprise you on other people's machines (commas vs dots), and be aware that you have fixed the number of decimal places or width (8), so you have lost resolution. You will also find that it's not very useful as a generic approach since binary data cannot be transmitted, so most people revert to the length prefix sooner or later.
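If you do go the CRLF route, the Python side can simply iterate over lines. A minimal sketch, assuming one ASCII number per CRLF-terminated line; the comma-to-dot replacement is a crude, hypothetical guard against a sender using a comma decimal separator and assumes no thousands separators are ever sent.

```python
def read_values(lines):
    """Yield one float per CRLF-terminated ASCII line.

    `lines` can be any iterable of bytes lines, e.g. sock.makefile("rb"),
    which splits on b"\\n" and leaves the b"\\r" for us to strip.
    """
    for raw in lines:
        line = raw.rstrip(b"\r\n").decode("ascii").strip()
        if not line:
            continue  # skip blank lines
        # Crude guard for a localised decimal separator ("2,7" instead of "2.7").
        yield float(line.replace(",", "."))
```

Note that this cannot recover the resolution lost by formatting to 8 decimal places on the LabVIEW side; `3.14159265` is all that arrives.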

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

I don't? (write shared libraries). This issue was solved a long time ago, and there are several methods to determine and correct the endianness of the platform at run-time to present a uniform endianness to the application. I have one in my standard utils library, so I don't see this problem, ever. Don't get me started on VISA... lol. I'm still angling for VISA refnums to wire directly to event structures for event-driven comms. Strings are variants to me, so I see no useful progress in making the TCP Read strictly typed; it would mean I have another type straitjacket to get around, and you would find everyone moaning that they want a variant output anyway.
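For what it's worth, the run-time check is only a few lines in most languages. A Python sketch (the function names are hypothetical) that detects the host byte order and normalises a native-order U32 to big-endian, network order:

```python
import struct


def host_is_little_endian():
    """Run-time check: pack 1 as a native-order U16 and look at the first byte."""
    return struct.pack("=H", 1)[0] == 1


def to_network_order_u32(native_bytes):
    """Convert 4 bytes holding a U32 in host byte order to big-endian order."""
    (value,) = struct.unpack("=I", native_bytes)
    return struct.pack(">I", value)
```

On a big-endian host `to_network_order_u32` returns its input unchanged; on a little-endian host it swaps the four bytes, so the application always sees one byte order.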

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

While I accept your point of order, how LabVIEW represents numerics under the hood is moot. Whenever you interact with numerics in LabVIEW as bytes or bits, they are always big endian (only one or two primitives allow different representations), whether that be writing to a file, flattening/casting, Split/Join Number or TCP/IP/serial et al. As a pure programmer you are correct; as an applied programmer, I don't care.
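To see what that means at the byte level, a small Python sketch: packing with `>` gives the bytes LabVIEW's Flatten To String (default byte order) or Type Cast produce for a scalar, whereas `<` shows how the same value sits in memory on a little-endian x86 host. The helper names are hypothetical.

```python
import struct


def lv_flatten_scalar(fmt, value):
    """Bytes for a scalar as LabVIEW flattens it by default: big-endian, no header.

    fmt is a struct code, e.g. "d" for DBL, "i" for I32, "H" for U16.
    """
    return struct.pack(">" + fmt, value)


def x86_memory_layout(fmt, value):
    """The same value in little-endian order, as it sits in memory on x86."""
    return struct.pack("<" + fmt, value)
```

For example, 1.0 as a DBL is `3F F0 00 00 00 00 00 00` big-endian and `00 00 00 00 00 00 F0 3F` little-endian.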

Python client to unflatten received string from TCP LabVIEW server

ShaunR replied to mhy's topic in LabVIEW General

In the first example you posted, there are size headers already. The flatten function adds a size for arrays and strings unless the primitive's "prepend size" flag is FALSE. The thing that may be confusing is that the server reverses the string, and therefore the byte array, before sending. I'm not sure why they would do that, but if the intent was to turn it into a little-endian array of bytes, then it is a bug. I don't see you catering for that in the Python script, and since the extraction of the msg length (4 bytes) is correct, Python is expecting big endian when it unpacks bytes using struct.unpack, but the numeric bytes are reversed. The second example has reversed the bytes for the length and appended (rather than prepended) it to the message. I think this is why you have said 100 is enough, since it's pretty unusable if you have to receive the entire message of an unknown length in order to know its length. If you go back to the first example, remove the Reverse String, set the "prepend size" flag to FALSE and then sort out the endianness, you will be good to go. The flatten primitive even has options for big-endian (network byte order) and little-endian, so you can match the endianness to Python before you transmit (don't forget to put a note about that in your script and the LabVIEW code for six months' time, when you forget all this). If you need to change the endianness of the length bytes, then you will have to use the Split Number and Join Number functions if you are not going to cater for it in the Python script. All LabVIEW numerics are big endian.
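A Python sketch of decoding the first example once the Reverse String is gone, assuming a flattened 1D DBL array with its size header left on and the default big-endian order: an I32 element count followed by that many DBLs. The function names are hypothetical, and the comments show why reversing the whole string is not the same as switching to little-endian.

```python
import struct


def flatten_dbl_array(values):
    """Build the bytes LabVIEW-style: big-endian I32 count, then big-endian DBLs."""
    return struct.pack(f">i{len(values)}d", len(values), *values)


def unflatten_dbl_array(data):
    """Decode a flattened 1D DBL array with its I32 size header."""
    (count,) = struct.unpack_from(">i", data, 0)
    expected = 4 + 8 * count
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes for {count} elements, got {len(data)}")
    return list(struct.unpack_from(f">{count}d", data, 4))


# Reversing the whole flattened string does make each number little-endian,
# but it also reverses the element order and moves the count to the END:
#   [count][a][b]  ->  [rev b][rev a][rev count]
# so struct.unpack("<ddi", payload[::-1]) yields (b, a, count).
```

That is why the fix is to drop the Reverse String and pick the byte order on the flatten primitive instead.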

-

Moves like that are almost never due to technical capabilities. They are either political, or the sales engineer has worked hard for a long time and negotiated some enormous discounts and concessions to break NI lock-in. Have there been any changes to the decision-making management recently? Say, an ex-Siemens employee?

-

Ahh. That explains LVPOOP.

-

Many people use Rolf's oglib_pipe, but the real solution is to come into the 21st century and stop writing CLIs.

-

Not really a LabVIEW thing. You can manage Windows devices using the SetupDI API, and you would call it using the CLFN (Call Library Function Node). If that didn't do it for you, then you'd be back down in the IOCTLs, where there be monsters.

-

PharLap is a walk in the park; VxWorks was the one that made me age 100 years. I actually have some source with the relevant changes but never had a device.

-

That's nothing to sniff at. At some point you just have to say "this is the wrong way to approach this problem". JSON isn't a high-performance NoSQL database; it's just a text format, and one designed for a non-threaded, interpreted scripting language (so performance was never on the agenda).

-

300 MB/s? If you want bigger JSON streams, then the Bitcoin order books are usually a few MB.

-

I don't use any of them for this sort of thing. They introduced the JSON extension as a build option in SQLite, so it just goes straight in (raw) to an SQLite database column and you can query the entries with SQL just as if it was a table. It's a far superior option (IMO) to anything in LabVIEW for retrieval, including the native one. I did write a quick JSON exporter in my API to create JSON from a query as the corollary (along the lines of the existing export to CSV), but since no-one is investing in the development anymore, I'm pretty "meh" about adding new features, even though I have a truck-load of prototypes. (And yes, I figuratively wanted to kiss Ton when he wrote the pretty print.)
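A minimal sketch of the same idea with Python's built-in `sqlite3` module, assuming your SQLite build has the JSON functions (built in by default since SQLite 3.38.0; older builds need the JSON1 extension compiled in). The table, column and key names are made up for illustration; this is not the API mentioned above.

```python
import json
import sqlite3

# Raw JSON documents go straight into a TEXT column.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (doc TEXT)")
docs = [
    {"channel": "ai0", "value": 1.25, "tags": ["temp", "rack1"]},
    {"channel": "ai1", "value": 3.5, "tags": ["pressure"]},
]
conn.executemany("INSERT INTO readings (doc) VALUES (?)", [(json.dumps(d),) for d in docs])

# Query keys inside the JSON as if they were columns.
rows = conn.execute(
    "SELECT json_extract(doc, '$.channel'), json_extract(doc, '$.value') "
    "FROM readings WHERE json_extract(doc, '$.value') > 2"
).fetchall()

# Expand a nested array into rows with the json_each table-valued function.
tags = [t for (t,) in conn.execute(
    "SELECT j.value FROM readings, json_each(readings.doc, '$.tags') AS j ORDER BY j.value"
)]

# The corollary: build JSON from a query result, much like an export-to-CSV routine.
(exported,) = conn.execute(
    "SELECT json_group_array(json_object("
    "'channel', json_extract(doc, '$.channel'), 'value', json_extract(doc, '$.value'))) "
    "FROM readings"
).fetchone()
```

Here `rows` is `[("ai1", 3.5)]` and `exported` is a JSON array of objects, one per row.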

-

I was initially manipulating the string, but then you demonstrated the recursive approach with objects for encoding, which was more elegant and removed all the dodgy string logic to handle the hierarchy. Once I found that classes just didn't cut it for performance (as per usual), I went back and solved the same problem with queues. The fundamental difference in my initial approach was that the retrieval type was chosen by the polymorphic instance the developer chose (it ignored the implicit type in the JSON data). That was fast, but getting a key/value table was ugly. Since all key/value pairs were strings internally, the objects made it easier to get the key/value pairs into a lookup table. Pushing and popping queues was much faster and more efficient at that, though, and didn't require large amounts of contiguous memory.
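The queue approach maps onto an explicit stack in most languages. A Python sketch under stated assumptions: it walks a parsed JSON document without recursion, using a `deque` as the stack, and builds a flat path → string lookup table, leaving the caller to choose the type on retrieval. The `$.key[index]` path syntax and the function name are hypothetical, not the author's API, and because `json.loads` builds the whole tree first, this illustrates the traversal only, not the memory behaviour.

```python
import json
from collections import deque


def to_lookup_table(text):
    """Flatten a JSON document into {path: value-as-string} without recursion."""
    table = {}
    stack = deque([("$", json.loads(text))])
    while stack:
        path, node = stack.pop()  # LIFO: push children, pop them later
        if isinstance(node, dict):
            for key, child in node.items():
                stack.append((f"{path}.{key}", child))
        elif isinstance(node, list):
            for index, child in enumerate(node):
                stack.append((f"{path}[{index}]", child))
        else:
            # Leaves are stored as strings; the JSON type is deliberately dropped.
            # Empty objects/arrays produce no entry.
            table[path] = node if isinstance(node, str) else json.dumps(node)
    return table
```

The caller then converts on retrieval, e.g. `float(table["$.a.b[1]"])`. The trade-off is the same one described above: the string `"1"` and the number `1` become indistinguishable in the table.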