Everything posted by ShaunR

-

Not sure what you are trying to achieve here. The "List" is an example of a class that can be used with different data types (that's what variants are for), and VIMs give you a simple method to "adapt to type".
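LabVIEW variants and VIMs have no direct Python equivalent, but the idea can be loosely sketched: a container that stores elements of any type, plus a single accessor where the caller names the type it expects. `VariantList` and `get` are hypothetical names, and the type check stands in for what Variant To Data does inside a VIM; this is an illustration, not the "List" class from the thread.

```python
from typing import Any, List, Type, TypeVar

T = TypeVar("T")


class VariantList:
    """A list whose elements can be of any type, like an array of variants."""

    def __init__(self) -> None:
        self._items: List[Any] = []

    def append(self, item: Any) -> None:
        self._items.append(item)

    def get(self, index: int, as_type: Type[T]) -> T:
        """Return an element as the type the caller asks for.

        Loosely analogous to Variant To Data inside a VIM: the caller states
        the type it wants and the check happens in one place.
        """
        item = self._items[index]
        if not isinstance(item, as_type):
            raise TypeError(
                f"item {index} is {type(item).__name__}, not {as_type.__name__}"
            )
        return item


numbers_and_names = VariantList()
numbers_and_names.append(3.5)
numbers_and_names.append("DVM")
print(numbers_and_names.get(0, float), numbers_and_names.get(1, str))
```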

-

Indeed. My comments were in reply to Shoneill. The OP doesn't have a problem with the producer being faster than the consumer.

-

TCP/IP is used because it is acknowledged and ordered. Don't forget this is for when the producer is faster than the consumer, which is an unfortunate edge case.

No. The client is effectively DoSing the server (causing the disconnects). TCP/IP already has a mechanism to acknowledge data, and it even retries if packets are lost. This is just using the designed features to rate limit.

The receiver side can have as much buffer as it likes. There is no need to "match" each endpoint. We just want to rate limit the send/write so as not to overwhelm the receiver. (I'm not going to use client/server terminology here because that is just confusing.)

As I said earlier, they don't have to be matched. If you are really worried about it, you can modify the buffer size on the fly. You are getting bogged down in being able to set a buffer to exactly the message size. It doesn't have to be that exact, only enough that the receiver doesn't get overwhelmed with backlog and has occasional room to breathe. It's simple, fast, reliable and far more bandwidth-efficient than handling it at Layer 7.
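A minimal Python sketch of the rate-limiting behaviour, assuming a loopback connection and a receiver that never reads: once the small socket buffers fill, TCP flow control stops the sender, and the send timeout turns that into an exception instead of an ever-growing backlog. The message size, buffer depth and timeout are arbitrary assumptions, and the OS may round buffer sizes (Linux doubles them), so the exact count varies by platform.

```python
import socket


def fill_until_blocked(msg_size: int = 1024, depth: int = 4, timeout: float = 0.5) -> int:
    """Send fixed-size messages to a peer that never reads.

    Returns how many whole messages were accepted before the write timed out,
    i.e. before TCP flow control stopped the sender.
    """
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Small receive buffer, set before listen() so the accepted socket inherits it.
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, msg_size * depth)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)

    sender = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sender.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, msg_size * depth)
    sender.connect(listener.getsockname())
    receiver, _ = listener.accept()  # accepted, but deliberately never read
    sender.settimeout(timeout)       # plays the role of the timeout on a TCP write

    message = b"x" * msg_size
    sent = 0
    try:
        while True:
            sender.sendall(message)
            sent += 1
    except socket.timeout:
        pass  # buffers are full: the receiver is not keeping up
    finally:
        for s in (sender, receiver, listener):
            s.close()
    return sent


if __name__ == "__main__":
    print(f"{fill_until_blocked()} messages accepted before the write blocked")
```

In a real sender the timeout would be the signal to back off or retry, rather than the end of the loop.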

-

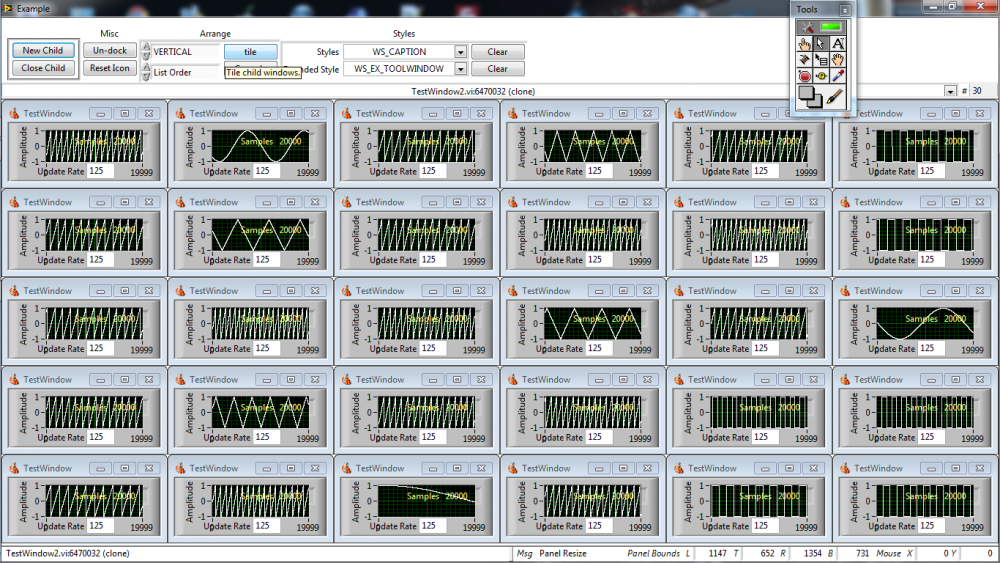

Interesting. Why didn't you use WebSockets, RTSP or WebRTC? Well, B and C are essentially the same thing from a display point of view. I have achieved similar things to A in the past by saving to memory-mapped files at high data rates, which can then be exploited by other VIs or even other programs. But your problem seems to be rendering, not acquisition or exploitation. What I don't understand at present is this: if an image needs operator intervention, then presumably the operator can only work on one image at a time, and 30 line profiles or histograms aren't that intensive (why did NI drop the array of charts?). (Each VI is updating at 125 ms and displaying 20,000 points; CPU utilisation is about 6%.) So how big are these image files?
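The memory-mapped file approach can be sketched in Python with the standard `mmap` module. This is a minimal illustration, not the author's implementation: the fixed frame size, the four-slot ring and the counter header are all assumptions, and a real multi-process setup would need extra care to avoid torn reads while the writer is mid-frame.

```python
import mmap
import os
import struct
import tempfile

FRAME_SIZE = 16                 # hypothetical fixed frame size in bytes
N_FRAMES = 4                    # hypothetical ring depth
HEADER = struct.Struct("<Q")    # total frames written, stored at offset 0


def create_ring(path: str) -> None:
    """Pre-size the file (zero-filled) so both sides can map it at a fixed length."""
    with open(path, "wb") as f:
        f.write(b"\0" * (HEADER.size + FRAME_SIZE * N_FRAMES))


def write_frame(mm: mmap.mmap, data: bytes) -> None:
    """Copy one frame into the next ring slot, then publish it via the counter."""
    if len(data) != FRAME_SIZE:
        raise ValueError("frame must be exactly FRAME_SIZE bytes")
    (count,) = HEADER.unpack_from(mm, 0)
    offset = HEADER.size + (count % N_FRAMES) * FRAME_SIZE
    mm[offset:offset + FRAME_SIZE] = data
    HEADER.pack_into(mm, 0, count + 1)


def read_latest(path: str) -> bytes:
    """Map the file read-only, as a separate program would, and return the newest frame."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        (count,) = HEADER.unpack_from(mm, 0)
        if count == 0:
            return b""
        offset = HEADER.size + ((count - 1) % N_FRAMES) * FRAME_SIZE
        return mm[offset:offset + FRAME_SIZE]


if __name__ == "__main__":
    demo_path = os.path.join(tempfile.mkdtemp(), "frames.bin")
    create_ring(demo_path)
    with open(demo_path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm:
        for i in range(6):
            write_frame(mm, bytes([i]) * FRAME_SIZE)
    print(read_latest(demo_path))
```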

-

You don't need a file to display an inline image in HTML and there is the LV Image to PNG Data VI. The limitation is how fast JavaScript can render images.
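On the browser side, PNG bytes can be embedded directly in the page as a `data:` URI, so nothing needs to be written to disk. A minimal Python sketch, assuming the PNG bytes have already been produced (for example by the LabVIEW VI mentioned above); `png_to_img_tag` is a hypothetical helper name.

```python
import base64
import html


def png_to_img_tag(png_bytes: bytes, alt: str = "") -> str:
    """Embed PNG bytes straight into HTML as a data: URI; no file is written."""
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return f'<img alt="{html.escape(alt)}" src="data:image/png;base64,{encoded}">'
```

The trade-off is size: base64 inflates the payload by about a third, which matters at high frame rates.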

-

I used HTML5 canvas just for displaying an image, but used Raphael to overlay ROI, annulus, annotations and cursors. I could have exported an image to SVG (which Raphael supports), but LabVIEW can't do that. The hard work was done by the back end, so this was purely for display purposes to the user. If you are planning on post-processing outside of LabVIEW, then JavaScript is definitely not the way to go.
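Raphael draws into SVG in the browser; as a rough illustration of how an annulus ROI can be expressed in SVG at all, here is a Python sketch that builds the overlay markup on the server side instead. It is not Raphael's API: the function names, colours and opacity are assumptions. The annulus is two circles in one path, and the even-odd fill rule leaves the inner circle as a hole.

```python
import xml.etree.ElementTree as ET


def circle_path(cx: float, cy: float, r: float) -> str:
    """A full circle as two half-circle arcs (SVG paths have no single circle command)."""
    return (f"M {cx - r} {cy} "
            f"A {r} {r} 0 1 0 {cx + r} {cy} "
            f"A {r} {r} 0 1 0 {cx - r} {cy} Z")


def annulus_overlay(width: int, height: int, cx: float, cy: float,
                    r_outer: float, r_inner: float, colour: str = "yellow") -> str:
    """An SVG document, sized to the image, holding one annulus ROI.

    The even-odd fill rule leaves the inner circle unfilled, so only the ring
    is shaded and the image underneath shows through the hole.
    """
    if not 0 < r_inner < r_outer:
        raise ValueError("need 0 < r_inner < r_outer")
    d = f"{circle_path(cx, cy, r_outer)} {circle_path(cx, cy, r_inner)}"
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
            f'<path d="{d}" fill="{colour}" fill-opacity="0.3" '
            f'fill-rule="evenodd" stroke="{colour}"/></svg>')


if __name__ == "__main__":
    svg = annulus_overlay(640, 480, 320, 240, 100, 60)
    ET.fromstring(svg)  # raises if the markup is not well-formed
    print(svg)
```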

-

It sounds like the cRIO doesn't have the device profile. I'm guessing here, but on Windows we use MAX to create a profile for an unknown device, or tell MAX what the device is using the `*.cfg` files for that device. IIRC there is an NI DAQ Configuration Utility for Linux targets where `*.cfg` files can be imported. Maybe have a hunt around the forums for more information about the utility, because raw USB is a nightmare.

-

As a first stop, have you tried using the "External Window" tools? I'm not sure of the details, but I seem to remember some people switching to it for similar reasons. Outside of LabVIEW, I have used Raphael (JavaScript), but had to write my own ROI and annulus, which isn't that hard really. Another that springs to mind is ImageJ. This is Java (....shiver....) but has excellent manipulation tools and certainly should be on your list of "things to look at".

-

Sure you would. The servants don't need a modern kitchen to cook their gruel.

-

They aren't really architectures. It's like saying a kitchen, bedroom or bathroom is a house. It is the composition of all the different room types that makes a house (analogous to software architecture), and the different rooms (Producer/Consumer, Pub/Sub, Actor, State Machines et al.) fulfil specific requirements within it. The problem is that people choose one room type and try to fit everything in it, ending up with a studio apartment where the mess caused by trying to make everything fit just spreads around, tripping up the residents, making it hard to move around and almost impossible to redecorate.
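To make the "rooms" point concrete, here is a small Python sketch (not LabVIEW, and not any particular framework) that composes two of them: a Pub/Sub bus that fans messages out, and a Producer/Consumer queue that keeps a slow consumer from stalling the publisher. `Bus`, `start_logger` and the "measurement" topic are hypothetical names chosen for illustration.

```python
import queue
import threading
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple


class Bus:
    """Pub/Sub "room": fans each message out to every subscriber of a topic."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[object], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[object], None]) -> None:
        self._subs[topic].append(handler)

    def publish(self, topic: str, msg: object) -> None:
        for handler in self._subs[topic]:
            handler(msg)


def start_logger(bus: Bus, sink: List[object]) -> Tuple[queue.Queue, threading.Thread]:
    """Producer/Consumer "room": the bus only enqueues, a worker thread drains.

    Because the queue is unbounded, a slow consumer never blocks the publisher.
    """
    q: queue.Queue = queue.Queue()
    bus.subscribe("measurement", q.put)

    def consume() -> None:
        while True:
            item = q.get()
            if item is None:  # sentinel: shut the consumer down
                break
            sink.append(item)

    worker = threading.Thread(target=consume, daemon=True)
    worker.start()
    return q, worker
```

Each piece does one job; the "house" is the wiring between them.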

-

I'm exactly the same. You end up trying so many different things to get it to work that you don't really know which parts were crucial. Rabbit hole after rabbit hole. Mine isn't Ubuntu but CentOS, which is supported, but even that wasn't trivial. When they say "supported" they mean you can phone up to get support and not get stonewalled. I had to install LV2012 on CentOS 7.2 (64-bit with GNOME) this time around. Previously I stuck religiously to openSUSE 32-bit with KDE because that just worked out of the box. The main things I remember this time round are that I had to [first find, then] install the relevant 32-bit libraries piece by piece (2012 is only 32-bit on Linux) as the installer complained about them. Then I had to symlink them because it still couldn't find them. Then Mesa (whatever that is) and finally the kernel source to get VISA. This was over a period of about 2 days trying different things, and I'm sure some of the stuff I tried, but can't remember, contributed to the final success.

-

But we have the internet nowadays, so you can do stuff like this; it's not rocket science. As for LabVIEW: since I use polymorphic VIs for APIs and always make the menu visible, I use the instance menu and add "(deprecated)" after the instance (and menu) name. From that release, it will be available for a minimum of 2 years to allow migration, but generally it will not be removed from the library at all; it is only removed from the polymorphic list after that.
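Outside LabVIEW the same policy (label it, keep it working, remove it from the listings later) can be sketched in Python with the standard `warnings` module. This is a loose analogue rather than anything polymorphic-VI specific: `deprecated`, `read_ini` and `read_config` are hypothetical names.

```python
import functools
import warnings
from typing import Any, Callable, TypeVar

F = TypeVar("F", bound=Callable[..., Any])


def deprecated(replacement: str) -> Callable[[F], F]:
    """Hypothetical helper: label a function deprecated but keep it working."""

    def decorate(func: F) -> F:
        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            warnings.warn(
                f"{func.__name__} is deprecated; use {replacement} instead",
                DeprecationWarning,
                stacklevel=2,
            )
            return func(*args, **kwargs)

        # Like appending "(deprecated)" to the instance/menu name: visible in help().
        wrapper.__doc__ = f"(deprecated) {func.__doc__ or ''}"
        return wrapper  # type: ignore[return-value]

    return decorate


@deprecated("read_config")
def read_ini(path: str) -> str:
    """Read a configuration file (hypothetical example function)."""
    return f"read {path}"
```

Existing callers keep working and only see a warning, which is what gives them the migration window.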

-

I think I actually heard an audible sigh at the end of that. Nice. And learning the meaning of "deprecate" wouldn't hurt either.

-

OK. So how does it differentiate between an NI or non-NI client?

-

It's a call to setsockopt, like the Nagle (TCP_NODELAY) option. There are some VIs in Transport.lvlib.
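For reference, here is the same kind of `setsockopt` call made from Python's standard `socket` module, using the Nagle option as the example. This does not reproduce the Transport.lvlib VIs; `open_low_latency` is a hypothetical helper name.

```python
import socket


def open_low_latency(host: str, port: int, timeout: float = 5.0) -> socket.socket:
    """Connect, then disable Nagle's algorithm so small writes are sent immediately."""
    sock = socket.create_connection((host, port), timeout=timeout)
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock
```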

-

What's wrong with flooding the buffer? (They are allocated for each connection.) TCP/IP connections block when the buffer is full (that's why there is a timeout on the write). If you set the buffer to, say, 10 x the message size, then it will fill up with 10 messages and wait until at least one has been received (ACKed) before writing another.
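Sizing the send buffer to roughly ten messages is a single `SO_SNDBUF` call. A minimal Python sketch with a hypothetical helper name: the value is read back because the OS may adjust it (Linux, for example, doubles it for bookkeeping), and data sitting in the receiver's buffer also counts, so "10 messages" is approximate rather than exact.

```python
import socket


def set_send_buffer(sock: socket.socket, msg_size: int, depth: int = 10) -> int:
    """Ask for a send buffer of about `depth` messages; return what the OS granted."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, msg_size * depth)
    # Read it back rather than assuming the request was honoured exactly.
    return sock.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        print(set_send_buffer(s, msg_size=1024))
```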

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

It says on the diagram that the password is "12345", although I'm so familiar with it that I didn't really consider that it wouldn't be obvious to those who haven't seen the "Speed Example". If you want to see it in other packages, then just make a backup (there's a button for it in the toolbar). The backup will be unencrypted. It still has to go through formal testing for inclusion, so thanks for the debug (re: path wire). I'm not sure why you got "not-a-path", since it is supposed to return the filename appended to either the project directory or the directory containing the top-level VI. I expect that will disappear when it is included in the main package, but I will error guess to see if I can smell the reason and make it more robust.

With regards to types: SQLite is [almost] typeless, which is one of the reasons I love SQLite. It uses what are called affinities which, for the most part, affect how data is stored rather than imposing type constraints. I use this to remove the strict typing in LabVIEW (you'll notice everything is a string). If you move to another DB, then you will of course have to consider the types, and your code will become more complex as you convert backwards and forwards for the specific types. You will also notice that each parameter has a "type" field, which is nothing more than a text label so that, when required in LabVIEW, the strict typing can be reintroduced for the things that matter, if desired. (The VI I used to "guess" your types from the INI files needs some more work.) That isn't required for this configuration example (everything's a string...lol), but it is based on a real configuration editor I have written many times over for different people.

You have a number of choices from this point on for "Steps". You could make another table just like the "parameters/Limits", or you could just use labels (groups). The problem with the former is that it is not very generic, and you usually run into problems further down the line with instruments "in use" when they are used for multiple test steps in parallel. The labels approach enables you to just use a filter on the parameters (it works for both limits and configuration), but I can see good arguments for either. You will note that the schema hinges on instrument configuration, and steps don't really factor into that very much, just like the tests. So the schema isn't hierarchical from Test>Steps>Parameters as you show in your second ER diagram.

Calculations are anyone's guess. I don't know what they are for or what they do. Off the top of my head, I would probably consider them just another parameter with a "calc" label or group.
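The "everything's a string plus a type label" idea can be illustrated with Python's built-in `sqlite3` module. This is a hypothetical schema, not the one in the Test Manager example: the table and column names, the `label` filter and the `CASTS` map are assumptions. A column declared with no type has BLOB affinity, so SQLite stores values exactly as given, and the `type` text is only used when the application chooses to restore a strict type.

```python
import sqlite3
from typing import Any, Callable, Dict

SCHEMA = """
CREATE TABLE parameters (
    device TEXT,
    name   TEXT,
    value,          -- no declared type, so no type conversion is applied on insert
    type   TEXT,    -- just a label; application code can use it to restore strict types
    label  TEXT     -- group used as a filter, e.g. a test step, or 'calc'
);
"""

CASTS: Dict[str, Callable[[str], Any]] = {
    "string": str,
    "int": int,
    "float": float,
    "bool": lambda s: s.strip().lower() == "true",
}


def params_for(conn: sqlite3.Connection, label: str) -> Dict[str, Any]:
    """Filter parameters by label and re-apply each one's type label."""
    rows = conn.execute(
        "SELECT name, value, type FROM parameters WHERE label = ? ORDER BY name",
        (label,),
    )
    return {name: CASTS.get(typ, str)(value) for name, value, typ in rows}


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO parameters VALUES (?, ?, ?, ?, ?)",
        [
            ("DVM", "range", "10", "float", "Test1"),
            ("DVM", "samples", "100", "int", "Test1"),
            ("PSU", "enabled", "true", "bool", "Test2"),
        ],
    )
    print(params_for(conn, "Test1"))
```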

-

No requirements specification; no solution. If you don't have one, then write one. It will force you to consider the details of the system, the components and how they will interact. If you are in a position to dictate component/device choices, then choose a multi-drop interface and ensure all devices/components use that interface (TCP/IP, RS485, Profibus, whatever; find the common denominator). Then try to normalise the protocols (SCPI, for example). A judicious choice of device interface options can mean the difference between juggling RS232, TCP/IP, GPIB, RS485 et al. and using a single interface, when the devices may all have had TCP/IP options available at purchase. It will decrease the code complexity by orders of magnitude when you come to write it. Once you have all that defined and documented, then you can start thinking about software.
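A rough Python sketch of why one interface plus one protocol simplifies the code: every SCPI device can share a single driver class, and only the transport sits underneath it. `Transport`, `ScpiInstrument` and `FakeTransport` are hypothetical names; a real transport would wrap a TCP socket, and the fake one exists only so the sketch runs without hardware. `*IDN?` is the standard IEEE 488.2 identification query.

```python
from typing import List, Protocol


class Transport(Protocol):
    """Hypothetical common interface every device is connected through."""

    def write(self, line: str) -> None: ...
    def read(self) -> str: ...


class ScpiInstrument:
    """One driver for any device that speaks SCPI over the common transport."""

    def __init__(self, transport: Transport) -> None:
        self.transport = transport

    def write(self, cmd: str) -> None:
        self.transport.write(cmd + "\n")

    def query(self, cmd: str) -> str:
        self.write(cmd)
        return self.transport.read().strip()

    def identify(self) -> str:
        return self.query("*IDN?")  # IEEE 488.2 common command


class FakeTransport:
    """Stand-in for a TCP connection so the sketch runs without hardware."""

    def __init__(self, reply: str) -> None:
        self.reply = reply
        self.sent: List[str] = []

    def write(self, line: str) -> None:
        self.sent.append(line)

    def read(self) -> str:
        return self.reply


if __name__ == "__main__":
    dvm = ScpiInstrument(FakeTransport("ACME,DVM-1,0001,1.0\n"))
    print(dvm.identify())
```

With mixed interfaces, each device would instead need its own transport and often its own command dialect.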

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

Oops. A bit embarrassing. It seems LabVIEW linking insists that the VIs are in vi.lib instead of user.lib when opening the project, which causes linking hell! Attached is a new version that fixes that. However, if it asks for "picktime.vi", it is part of the LV distribution and located in `<labview version>\resource\dialog`, which isn't on the LabVIEW search path by default, it seems. I'll delete the other file in the previous post to avoid confusion. Test Manager0101.zip

-

Use a sequence structure around the wait and the time functions to ensure they are executed in the same order, each time, on each iteration. A better approach is to use the "Timed Loop" instead of the wait.
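Python has nothing like the Timed Loop, but the two ideas in the post can be sketched: take the timestamp at the same point in every iteration, and schedule against absolute deadlines so the time spent doing work does not accumulate as drift. `run_periodic` is a hypothetical helper, and ordinary OS scheduling gives no determinism guarantees.

```python
import time
from typing import Callable, List


def run_periodic(period_s: float, iterations: int, work: Callable[[], None]) -> List[float]:
    """Call work() once per period, returning the timestamp taken each iteration."""
    start = time.monotonic()
    stamps: List[float] = []
    for i in range(iterations):
        # Read the clock at the same point in every iteration, before the work,
        # so the timestamps are comparable from one iteration to the next.
        stamps.append(time.monotonic() - start)
        work()
        # Sleep until the next absolute deadline rather than for a fixed period,
        # so the time spent in work() does not accumulate as drift.
        delay = start + (i + 1) * period_s - time.monotonic()
        if delay > 0:
            time.sleep(delay)
    return stamps


if __name__ == "__main__":
    print(run_periodic(0.1, 5, lambda: time.sleep(0.02)))
```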

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

Sorry it's a bit later than expected; I was called out of the country for a week or so. Here it is, though. You'll need the SQLite API for LabVIEW, but once you have that you should be good to go. There are still a couple of bits and pieces to do before it's production ready, and I'm still "umming" and "ahing" about some things, but most of it's there.

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

You will design your own schema; it's an example. Email support@lvs-tools.co.uk and we'll get your error looked at. At a glance, it looks like it can't extract the files to the vi.lib directory.

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

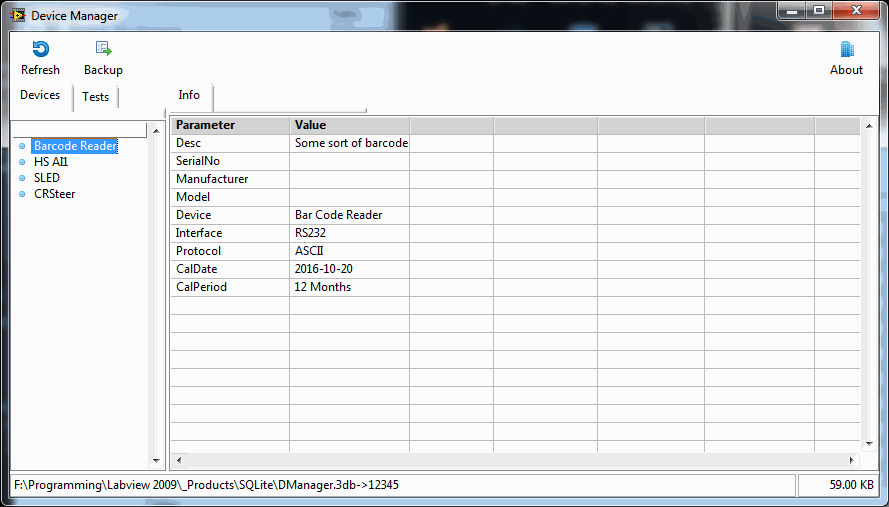

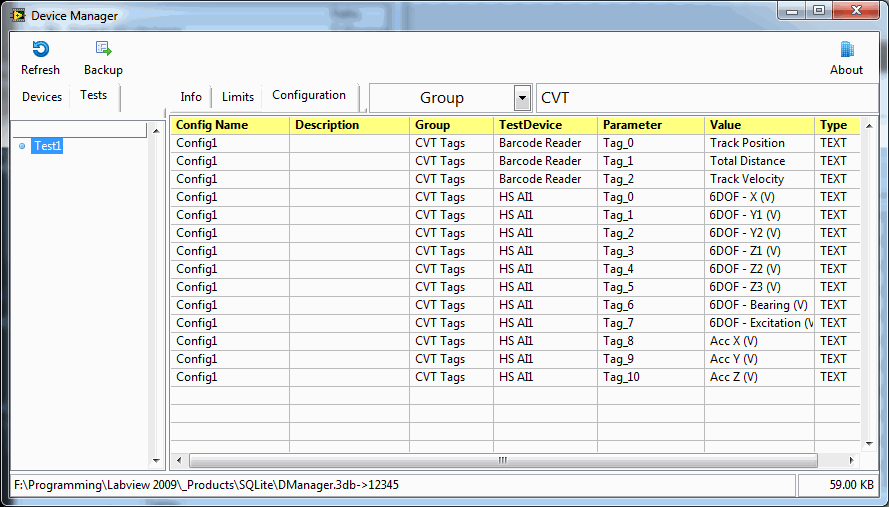

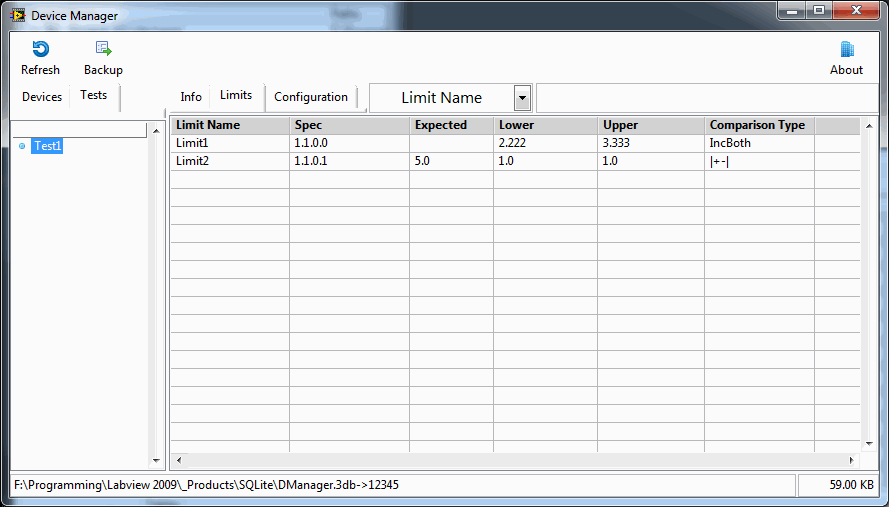

You can download the API here. (You will need to wait for the example) It's not that important to have real files - it's just useful context for you. But to demonstrate the power of using a DB means that you can have multiple configurations of devices and tests so what I have so far is this: This is just showing some device info which is the beginnings oft of asset management. This is showing you a filtered list (CVT Tags showing) of the configuration parameters (your old ini files) for Test1 that you can edit. ....and the Test1 limit list You can go on to add UUTs which is just a variation on the devices from a programmers point if view but I don't think I'll go that far, for now. The hard part is getting the LabVIEW UI to function properly...lol.- 25 replies
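Once limits live in a table, checking a test's results against its limit list is a single query. A minimal Python sketch using the built-in `sqlite3` module; the `limits` table, its columns and `check_limits` are hypothetical and not the Test Manager schema. A limit with no matching measurement is treated as a failure.

```python
import sqlite3
from typing import Dict, Mapping


def check_limits(conn: sqlite3.Connection, test: str,
                 measurements: Mapping[str, float]) -> Dict[str, bool]:
    """Pass/fail every limit stored for one test; a missing measurement fails."""
    rows = conn.execute(
        "SELECT name, low, high FROM limits WHERE test = ? ORDER BY name", (test,)
    )
    results: Dict[str, bool] = {}
    for name, low, high in rows:
        value = measurements.get(name)
        results[name] = value is not None and low <= value <= high
    return results


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE limits (test TEXT, name TEXT, low REAL, high REAL)")
    conn.executemany(
        "INSERT INTO limits VALUES (?, ?, ?, ?)",
        [("Test1", "vout", 4.9, 5.1), ("Test1", "iq", 0.0, 0.01)],
    )
    print(check_limits(conn, "Test1", {"vout": 5.02, "iq": 0.02}))
```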

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

Well, they are all config files, so I'll have to make up some tests and test limits. I think I might add it to the examples in the API, without your data of course. I'll have a play tomorrow.

-

"Propagating Calibration Changes" or "Difference Based Configurations"

ShaunR replied to dterry's topic in LabVIEW General

Depends how much of a match the example will be to your real system. I could just copy and paste the same INI file and pretend that they are different devices, but it wouldn't be much of an example compared to, say, a DVM, a spectrum analyser and a power supply. You'd be letting me off lightly.
