Everything posted by ShaunR

-

Listbox and MulticolumnListbox Border and Horizontal Lines Colors

ShaunR replied to dcoons's topic in User Interface

..and splitters. It's these sorts of issues that are the reason some of us abandoned the LabVIEW UI altogether.

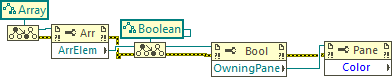

Get Boolean Image with Alpha - Get Array Element Background Color

ShaunR replied to hooovahh's topic in User Interface

There is an "Element" property for arrays. You can get that, then cast it to the element type. Then your other methods should work (recursion).

Boolean Get Image Testing_sr.vi

To be fair, those that VDB suggested aren't really protocols, they are middleware. One is based on OPC, the other is a translation service into an API. SILA is interesting in that it's basically what CoAPs does, so I think your cartoon would definitely apply there. Personally, I think they should just make all devices SCPI compliant and be done with it.

-

[Request for feedback] Python visual scripting IDE

ShaunR replied to Jack Parmer's topic in LabVIEW General

TestStand is a test sequencer so what you have now isn't even in the same paradigm. In terms of LabVIEW, you have some limited block functionality that could be compared to Express VIs (which we don't use). From what I can tell, it seems to be the Python version of Node-RED (JavaScript). It has a place but people are very quickly going to be dropped into text coding for anything more than hobbyist applications. Many people on this forum (not me) are also adept Python developers already and I expect they will weigh in sooner or later.

If you are going to target the LabVIEW community, I would suggest you work on your videos. From what I can tell, they are pretty much:

1. Plug in some wires
2. Magic happens
3. "Trust me bro, the pretty pictures are because of the magic".

-

And that's another big reason why ECL won't be available in Linux. It was ok when it used the NI binaries but the switch to standard OpenSSL binaries means that the CLFN usually loads system wide binaries (even if you define a path) and there is no way for LabVIEW to use "RTLD_DEEPBIND".

-

The problem with TestStand is it tries to be all things to all people. IMO, simplifying is best. I haven't used TS for quite a while but when I did, I offloaded all tests to LabVIEW and just used it basically as a script. I had a VI with command/response capabilities (via TCP/IP) which meant that TS sequences were a list of operations and just sent strings to execute tests and take measurements. One nice side-effect of the TCP/IP link was that you could also execute tests remotely, so an operator didn't even have to be sitting next to the machine the tests were running on (quality engineers loved that they could run tests and calibrate from their desks instead of going out to the machine).
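For anyone wondering what "just sent strings" might look like on the receiving end, here is a minimal sketch of a string command dispatcher in C. It is not the original VI. The command names (`MEAS:VOLT?`, `CAL:ZERO`), the `OK`/`ERR` reply format and every function name are hypothetical, and the TCP transport is left out: `handle_command` would be called once per line read from the socket.

```c
#include <stdio.h>
#include <string.h>

/* A test operation writes its reply text into out and returns its length. */
typedef int (*test_op)(char *out, size_t n);

/* Hypothetical operations; real ones would drive instruments. */
static int measure_voltage(char *out, size_t n) { return snprintf(out, n, "OK 5.00"); }
static int calibrate_zero(char *out, size_t n) { return snprintf(out, n, "OK"); }

struct command {
    const char *name; /* exact string the sequencer sends */
    test_op run;      /* operation to execute for that string */
};

/* Hypothetical command table; the sequencer only needs to know these strings. */
static const struct command commands[] = {
    {"MEAS:VOLT?", measure_voltage},
    {"CAL:ZERO", calibrate_zero},
};

/* Look up one received line and run the matching operation.
 * Unknown commands get an error reply instead of being ignored. */
int handle_command(const char *line, char *out, size_t n) {
    for (size_t i = 0; i < sizeof commands / sizeof commands[0]; i++) {
        if (strcmp(line, commands[i].name) == 0)
            return commands[i].run(out, n);
    }
    return snprintf(out, n, "ERR unknown command");
}
```

Because the sequencer only ever sees strings, it does not matter whether the sender is on the same machine or at a desk across the building.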

-

I had a girlfriend like that. It turned out that her father used to mix metaphors for comic effect but she didn't realise and thought it was the correct metaphor. When she said "everyone says it like that" what she meant was "my family says it like that".

-

Not that so much but he might be right and I've been using an eggcorn for my entire life.

-

A malapropism is similar but more common for comedy because it doesn't need to make sense in context (so it's easier and funnier).

-

I love Rolf's eggcorns. I never point them out because he knows my language better than I do.

-

It was meant tongue in cheek and specifically chosen because you've done it a million times (probably). I wonder what the forum mark-up is for that?

-

Don't have that problem with dynamic typing. Typecasting is the "get out of jail" card for typed systems. This one seem familiar?

    linger Lngr={0,0};
    setsockopt(Socket, SOL_SOCKET, SO_LINGER, (char *)&Lngr, sizeof(Lngr));
    DWORD ReUseSocket=0;
    setsockopt(Socket, SOL_SOCKET, SO_REUSEADDR, (char*)&ReUseSocket, sizeof(ReUseSocket));
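For comparison, a minimal sketch of the same two options on POSIX sockets. POSIX declares the option value as `const void *`, so the `(char *)` casts that Winsock's `const char *` parameter demands drop out. The call is no more type-safe for it: the compiler still cannot check that the struct matches the option name. The helper names are made up for illustration.

```c
#include <sys/socket.h>
#include <netinet/in.h>
#include <unistd.h>

/* Turn SO_LINGER off; returns 0 on success, -1 on error. */
int disable_linger(int fd) {
    struct linger lngr = {0, 0}; /* l_onoff = 0, l_linger = 0 */
    return setsockopt(fd, SOL_SOCKET, SO_LINGER, &lngr, sizeof lngr);
}

/* Enable or disable SO_REUSEADDR; returns 0 on success, -1 on error. */
int set_reuse_addr(int fd, int enabled) {
    int flag = enabled ? 1 : 0;
    return setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &flag, sizeof flag);
}

/* Read SO_REUSEADDR back: 1 if set, 0 if clear, -1 on error. */
int get_reuse_addr(int fd) {
    int flag = 0;
    socklen_t len = sizeof flag;
    if (getsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &flag, &len) != 0)
        return -1;
    return flag != 0;
}
```

Passing the wrong struct for an option still compiles cleanly here, which is rather the point: the `void *` is just the cast moved into the function signature.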

-

Oddly specific. I would want a method of being able to coerce to a type for a specific line of code where I thought it necessary (for things like precision) but generally...bring it on. After all, in C/C++ most of the time we are casting to other types just to get the compiler to shut up. They broke PHP with class scoping. They are now proposing breaking it more with strict typing. TypeScript is another. It's the latest fad.

-

Actually, your particular problem is that the variant type is only a half-arsed variant type. It's easy to get something into a variant, but convoluted and unwieldy to get it out again. I think "Generics" were supposed to resolve this but they never materialised. I shake my head at the recent push in the software industries to strict type everywhere. Most of the programming I do is to get around strict typing.

-

If only there were a way that one could use strings to define the event. We could call them, let me see, "Named Events"?

-

There is a "best practices" document (this too) but I suspect you are looking for a less abstract set of guidelines.

-

We have the Start Asynchronous Call for creating callbacks in LabVIEW. There are a couple of issues with what you are proposing in that callbacks tend not to have the same inputs and outputs. What we definitely don't have, at all, is a way of implementing callbacks for use in external code (in DLLs). While what I'm proposing *may* suffice for your use case, it's the external code use case where LabVIEW is lacking (which is why it makes Rolf nervous).

-

It's only software. One isn't constrained by physics.

-

None of that is a solution though; just excuses for why NI might not do it.

-

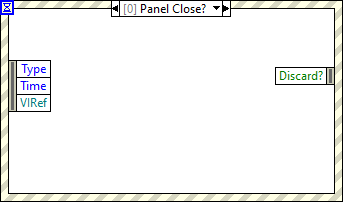

We've had these arguments before. I always argue that it's not for me to define the implementation details, that's NI's problem. We already have blocking events (the Panel Close? greys out the "Lock Front Panel" checkbox because it's synchronous). The precedent is already there. What I think we can agree on is that the inability to interface to callback functions in DLLs is a weakness of the language. I am simply vocalising the syntactic sugar I would like to see to address it. Feel free to proffer other solutions if events are not to your liking but I don't really like the .NET callbacks solution (which doesn't work outside of .NET anyway).

-

Not really, although I suppose it is in the same category as what I would propose. I mean more like the "Panel Close?" event. The callback would be called (and an event generated). You would then do your processing in the event frame and pass the result to the right hand side to pass back to the function (like the "Discard?" in the "Panel Close?" event). Of course, currently we cannot define an equivalent to the "Discard?" output terminal. They'd have to come up with a nice way to enable us to describe the callback return structure.
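To make the external-code side concrete, here is a minimal C sketch of the sort of callback a DLL might expect, with a return value playing the role of "Discard?". Everything in it is hypothetical (the type, the function names, the panel id); it is not any real NI or vendor API. The library calls the registered function synchronously and acts on what comes back, which is exactly the part an event frame would need a way to describe.

```c
#include <stddef.h>

/* Hypothetical callback type: return nonzero to discard (veto) the close. */
typedef int (*close_query_fn)(int panel_id, void *user_data);

static close_query_fn g_handler = NULL;
static void *g_user_data = NULL;

/* Library side: the caller registers one handler plus an opaque context pointer. */
void register_close_query(close_query_fn fn, void *user_data) {
    g_handler = fn;
    g_user_data = user_data;
}

/* Library side: blocks until the handler returns, then acts on its answer.
 * Returns 1 if the panel was closed, 0 if the handler discarded the request. */
int request_close(int panel_id) {
    if (g_handler != NULL && g_handler(panel_id, g_user_data))
        return 0;
    return 1;
}

/* Caller side: example handler that discards the close while a busy flag is set. */
int discard_if_busy(int panel_id, void *user_data) {
    (void)panel_id; /* unused in this example */
    const int *busy = user_data;
    return *busy;
}
```

The input arguments are the easy half. The `int` coming back out of `discard_if_busy` is the "right hand side" value, and it has to be available before `request_close` can return.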

-

Indeed. However, NI *should* have been aggressive in correcting that. I agree. Lazarus is the Open Source equivalent and I use a variation called CodeTyphon. But it highlights that even if LabVIEW is considered "vendor software" it should be on those lists as it is more of a programming language than HTML/CSS (as you point out). There could be no argument against inclusion, IMO.

-

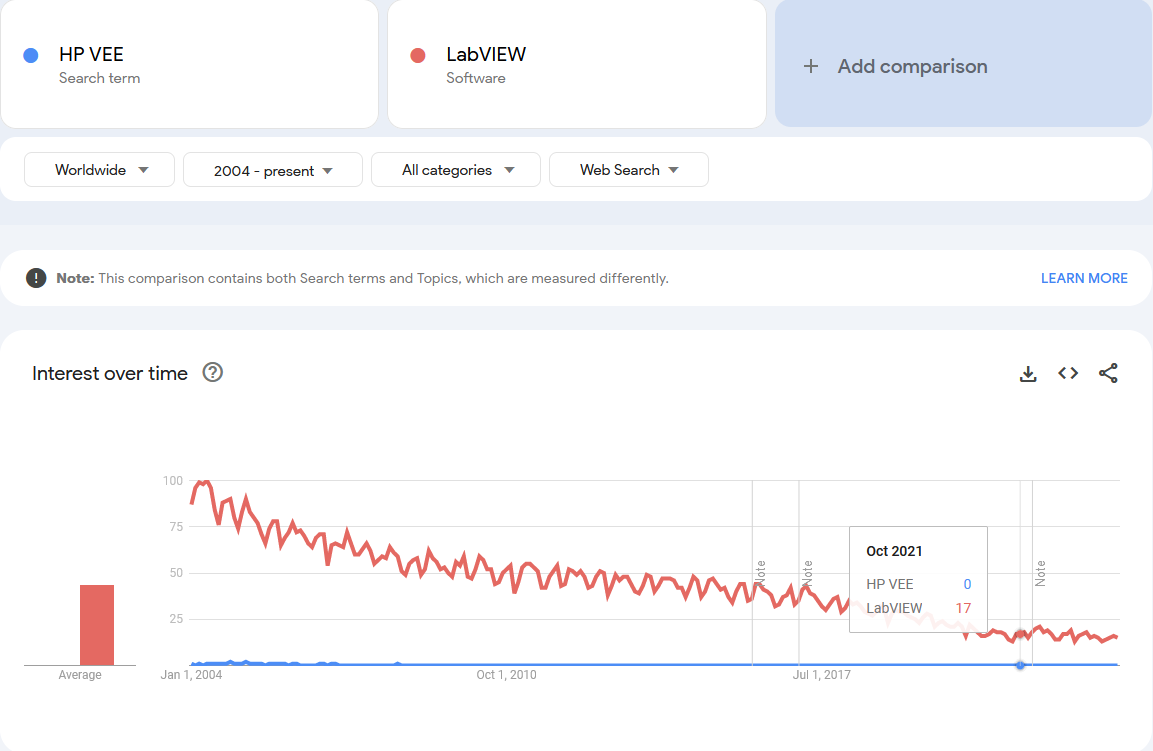

LabVIEW never seems to even be listed on programming statistic websites. I refuse to believe (also) that LabVIEW has fewer programmers than, say, Raku. Hopefully Emerson will aggressively promote LabVIEW on these kinds of sites to raise visibility. NI failed to do so consistently.

-

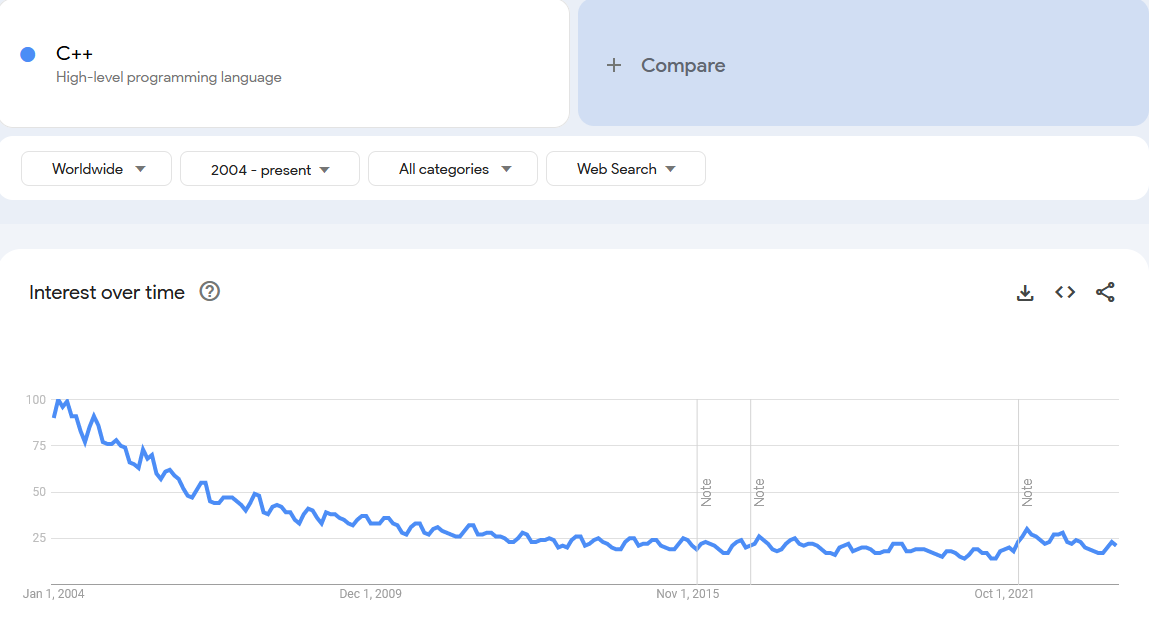

I've always been suspicious of Google Trends because it doesn't give context. The following is C++ but I refuse to believe it's marching to oblivion. But it's fun to play with.