-

Posts

867 -

Joined

-

Last visited

-

Days Won

26

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by shoneill

-

I think how you do it is a bad idea. There are almost certainly more robust und easier to understand methods to do this. You can call the "Call parent method" in any child DD method and in this way pass on UI data to the base class (which will be called last) which then creates and shows the UI. In this way you automatically have a hierarchy of UIs available. If, for some reason, some UIs are not always available, then you simply don't add the data to the chain for that instance of the inheritance hierarchy (abstract classes for example).

- 16 replies

-

What I mean by not supported is that there's no object available to cast it to.

-

Good luck with that. A lot of these things are simply not supported in LabVIEW. I don't think you'll be able to get far on this. But if you do, I'd also be very interested in that.

-

Both of your FIFOs are massive, both to and from the FPGA. They can be MUCH smaller. What does your compile report look like, can you post a screenshot? Yeah, problem seems to be distributed RAM LUT cells, of which you only have 11000 ont he device. Your two FIFOs to and from the FPGA can be way way smaller, like 50 elements. You have set them to use Block RAM but I don't think the compiler is doing that.

-

I missed that too.

-

You can go through the code, find all instances of the typedef and replace them with itself, this will actually bring them into sync. I've done this in the past, just make sure to get the Typedef path and then replace the control with it's own typedef (from path).

-

Yes, everything is done via binding to prepared statements. None of this in my code:

-

I do, but testing was done on a HDD.

-

The answer to my surprisingly fast read times (Given the physical work required to actually perform head seeks and factoring in rotational speed of HDDs) is that Windows does a rather good job of transparently caching files int he background. If you look at the area labelled "Standby" on the memory tab in Task Manager - this is cached file data. Search for RamMap from Sysinternals for a tool to allow you to empty this cache and otherwise view which files are in cache and which portions of the files..... Very interesting stuff. And stuff I knew nothing about until a few days ago.

-

It was this part, although I'm learning a lot about the internals of Win7 the last few days. I have since also found the tool RamMap from Sysinternals which allows a user to delete file caches from Windows so that we can effectively do "worst-case" file access tests without having to restart the PC. This has given me some very useful data )no more influence from file buffering). If you open up the "performance monitor" in Windows it shows "Standby" memory. This is memory which is technically NOT used but in which Win7 caches file reads and writes so that if the act is repeated, it can do so from RAM instead of having to go to the disk. IT's basically a huge write and read cache for the HDD. Mine was 12GB last time I looked! In one sense, this allows 32-bit LV to "access" more than 4GB of memory (just not all at once). Simply write to a file and re-read piece for piece. It's still not as fast as actual memory access, but it's a whole lot faster than actual disk access.

-

No not yet. I wanted to get some idea of the workings of SQLite before going that route. As it turns out, we may not be going SQLite after all. Higher powers and all. Regarding the memory mapped I/O.... We will need concurrent access to the file (Reading while writing) and if I have understood your link properly, this may not work with mmap. Additionally, I'm currently investigating how the Windows 7 own "standby" memory is massively influencing my results. Caching of files accessed (currently 12GB on my machine) is making all of my disk reads WAY faster than they should be. I mean, it's a cool feature, but I'm having trouble creating a "worst case" scenario where the data is not in memory..... Well, I can benchmark ONCE but after that, bam Cached.

-

PRAGMA synchronous=OFF; PRAGMA count_changes=OFF; PRAGMA journal_mode=WAL; PRAGMA temp_store=MEMORY; PRAGMA cache_size=100000; That's my initialise step before creating tables.

-

No, no zeros missing, just very large transactions. Each datapoint actually represents 100k data points x 27 cols in one big transaction. Overall throughput must be 20k per second or more. I should have been more specific about that, sorry. I'm currently running into all kinds of weird benchmarking results due to OS buffering of files. One one hand it's good that Win7 uses as much RAM as possible to buffer, on the other hand, it makes benchmarking really difficult...... 1000 datapoints in 100ms from a magnetic HDD is VARY fast

-

I have a request pending to stop the world so that I can disembark...... Maybe I have something on my physical PC getting int he way of the file access...... I have just saved the newest version of your package to LV 2012 and run the software there. There still seemt o be differences. Either LV 2012 has some significant differences or I have processes running on my physical PC which is interfering. Either way, benchmarking this is turning into a nightmare. On a side note: How on earth does SQLite manage to get 1000 decimated data points (with varying spacing) from a multi-GB file in under 100ms given that I'm accessing a standard HDD, not an SSD. That's very few disk rotations for so much data.......

-

*quack* Just to make it clear I'm a power rubber ducker. I think part of my problem may be down to different versions of the SQLite DLL. I was using the "original" SQLite Library from drjdpowell but with an up-to-date SQLITE DLL from the web. I just downloaded the newest version and let it run in LV 2015 in a VM and all queries seem to be fast. If drjdpowell is watching, any input on that regard?

-

*EXPLETIVES HERE* It seems I'm a victim of OS disk access buffering. I have, in my stupid naivety, been using the same query again and again for speed testing. Problem is that (as Ihave now seen) every time I change my decimation factor (i.e. access different portions of the disk) the read speed is completely destroyed. Opening file X and running Query A 100 times has one slow and 99 fast queries. Changing the Query to B makes things slow again (followed by subsequent fast queries), and changing to C again slows things down and so on and so forth. Differences can be huge, from 30ms (fast) back to 34 seconds if I want to access different disk areas. Am I insane to not have thought of this before? Is the OS buffering or is it SQLite? It seems like SQLite may also have just died for my application. I initialise with: PRAGMA temp_store=MEMORY; (Could this be part of my problem perhaps?) PRAGMA cache_size=50000; (Surely this should only speed things up, right?) And I query using: WITH RECURSIVE CnT(a) AS ( SELECT 0 UNION ALL SELECT a+1 FROM CnT LIMIT 1000 ) SELECT X,Y,Z,Float1 FROM P JOIN CnT ON CnT.a=P.X WHERE Y=0 Just changing the step size in my cte from 1 to something else causes massive slowdown int eh subsequent access. Of course my application will be performing many different queries. It seems like either the OS or SQLite itself was fooling me. I started getting suspicious about a magnetic disk even being able to return 1000 data points in 30 msec, it doesn't add up physically. *MORE EXPLETIVES*

-

A new SQLite question: I have, during testing, created several multi-GB files which I can use for read testing. I notice that upon first access (after loading LabVIEW) the query to get data takes sometimes exactly as long as if the file was not indexed at all. Subsequent reads are fast (drops from 30sec to 100 msec). 1) Has anyone else seen this? 2) Is this normal (Loading Index into memory) 3) How do I make the first file access fast? Shane

-

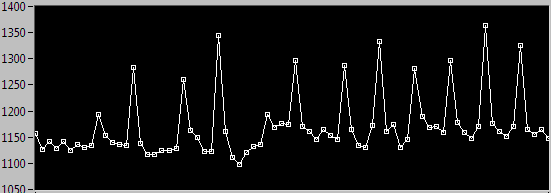

My remarks on degrading performance relate purely to the suggested replacement for the PRIMARY KEY by drjdpowell. I see similar write performance (fast, constant) when using a normal "rowid" primary key. As soon as I introduce my X,Y,Z Primary key as suggested ealier in the thread, I see significantly worse performance to the point that it rules out SQLite for the required function. I really need 20k INSERTS per second. At the moment I'm resigned to writing with standard rowid Index and then adding the X,Y,Z afterwards for future read access. Standard "rowid" Primary KeyIndex Compund (X,Y,Z) Primary Key Index

-

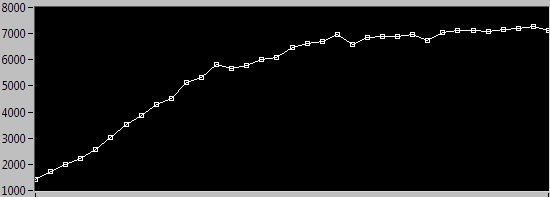

My INSERTS are with 3x Index (X,Y,Z) and 24 REAL values. Initial INSERTS take about 1s, but rises rapidly with more data to 7-8 seconds. Regarding the JOIN vs UNION..... I was simply pondering if it wouldn't somehow be possible to do something like: WITH RECURSIVE CnT(a,Float) AS ( SELECT 400000,0 UNION ALL SELECT a+200 FROM CnT UNION SELECT Float1 FROM P WHERE X=CnT.a AND Y=0; LIMIT 1000 ) SELECT Float1 FROM CnT; Unfortunately, the second UNION is not allowed.....

-

To continue on from this, this method of getting 1D decimated data seems to be relatively efficient. I am unaware of the internal specifics of the database operations but wouldn't a JOIN be more expensice than actually being able to directly SELECT the items I require from within the CTE and just return them? Problem there is that it would normally use a "UNION" but this doesn't work in a CTE. Is there any way to access directly the P.Float1 values I want (Once I have the index, I can directly access the item I require) instead of going the "JOIN" route, or is my understanding of the cost of a JOIN completely incorrect? That's interesting. Are there any INSERT performance consequences of this? Is having a UNIQUE index more efficient for an INSERT than a non-unique one? PS Yeah, having a compund PRIMARY KEY significantly slows down INSERTS by a factor of 7-8 for my testing. This rules it out for me unfortunately, shame really because that would have been cool. INSERTING identical data packets (100k at a time) which represent 5 seconds of data takes 1.2 seconds with default index and starts off OK but ends up at 8 seconds for a compound index of X,Y and Z. For slower data rates it would have been cool.

-

This seems to be working moderatly successfully: WITH RECURSIVE CnT(a) AS ( SELECT 400000 UNION ALL SELECT a+200 FROM CnT LIMIT 1000 ) SELECT X,Y,Z,Float1 FROM P JOIN CnT ON CnT.a=P.X WHERE Y=0; It gives me back 1000 decimated values from a 12.5GB file in just under 400msec (Int his example from X Index 400k to 600k). I might be able to work with that.

-

I have been looking into that and have seen that it has been using a - to put it politely - eccentric choice of index for the query. Of course it may be my mistake that I created an Index on X, an Index on Y an Index on Z AND and Index on X,Y,Z. Whenever I make a query like SELECT Float1 FROM P WHERE (X BETWEEN 0 AND 50) AND (Y BETWEEN 0 and 50) AND Z=0; it always takes the last index (Z here). Using the "+" operator to ignore a given index ( AND +Z=0 will tell SQLITE NOT to use the Z index) I canf orce the "earlier" indices. Only by actually DROPPING the X, Y and Z Indexes does SQLITE perform the query utilising the X,Y,Z Index (and is then really quite fast). Is it a mistake to have a single table column in more than one Index?

-

At the moment, it's a SQLite specific question. I'm amazingly inexperienced (in practical terms) when it comes to Databases so even pointing out something which might be completely obvious to others could help me no end. Doesn't rowid % X not force a MOD calculation on each and every ROW? PS It seems that rowid % 1000 is significantly more efficient that X % 1000 (Defined as Integer). This although both have indexes. Is this because rowid is "UNIQUE"? Also, I need to correct my previous statement. With multiple WHERE conditions, of course the MOD will only be executed on those records which are returned by the first WHERE condition.... so not EVERY row will be calculated.

-

My question relates to retrieving decimated data from the database. Given the case where I have 1M X and 1M Y steps (a total of 1000000M data points per channel) how do I efficiently get a decimated overview of the data? I can produce the correct output data be using Select X,Y,Z,Float1 from P WHERE X=0 GROUP BY Y/1000 This will output only 1x1000 data instead of 1x1000000 datapoints, one dimension quickly decimated Problem is that is iterates over all data (and this takes quite some time). If I do a different query to retrieve only 1000 normal points, it executes in under 100ms. I would like to use a CTE to do this, thinking that I could address directly the 1000 elements I am looking for. WITH RECURSIVE cnt(x) AS ( SELECT 0 UNION ALL SELECT x+1000 FROM cnt LIMIT 1000 )WHAT GOES HERE?; So if I can create a cte with column x containing my Y Indices then how do I get from this to directly accessing Float1 FROM P WHERE X=0 AND Y=cte.x SELECT Float1 from P WHERE X IN (SELECT x FROM cnt) AND Y=0 AND Z=0 Using the "IN" statement is apparently quite inefficient (and seems to return wrong values). Any ideas? In addition, accessing even a small number of data points from within an 8GB SQL File (indexed!) is taking upwards ot 30 seconds to execute at the moment. With 1.5GB files it seemed to be in the region of a few milliseconds. Does SQLite have a problem with files above a certain size?