Everything posted by shoneill

-

How to tell if USB camera has failed/disconnected

shoneill replied to Neil Pate's topic in Machine Vision and Imaging

My post seeks only to explain why the renaming takes place. If there's a rapid power off and on, not supporting a proper Serial Number can lead Windows to think a new camera has been attached. I think the base problem you have is a power supply issue, and there USB is flaky at best. The issues you see with MAX are understandable from an unstable power point of view. Sorry that I didn't portray that information more clearly. Filtering of power over USB is notoriously crappy for most motherboards. For possible fixes, Bobillier's suggestions above sound perfectly reasonable. -

How to tell if USB camera has failed/disconnected

shoneill replied to Neil Pate's topic in Machine Vision and Imaging

If a USB device does not implement a Device Serial Number correctly, Windows cannot distinguish between a second instance of the same camera being attached and the reconnection of the same camera. This leads to numerous "instances" of devices being installed in the system. If done correctly, the OS should be able to assign a driver to an instance of a specific device (taking serial number into account). The serial number of a device is normally defined in the device configuration package which an OS needs to read before it can even assign a device class. A lot of devices simply don't implement this. Also see HERE for info on where this data SHOULD be stored. -
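To make the identity problem concrete, here is a toy model in Python. It is only a sketch and not a real Windows API: `DeviceRegistry` and `attach` are hypothetical names, and the fallback to keying on the physical port when no serial number is reported is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class DeviceRegistry:
    """Toy model of an OS device-instance table (hypothetical, not a Windows API)."""
    instances: Dict[Tuple, int] = field(default_factory=dict)

    def attach(self, vid: int, pid: int, serial: Optional[str], port: str) -> int:
        # With a serial number, identity follows the device itself.
        # Without one (assumption), the only thing left to key on is the port.
        key = (vid, pid, serial) if serial else (vid, pid, "port", port)
        if key not in self.instances:
            # First time this key is seen: a new device instance is "installed".
            self.instances[key] = len(self.instances) + 1
        return self.instances[key]


reg = DeviceRegistry()
# Same camera with a serial number, moved between ports: one instance.
with_serial_1 = reg.attach(0x1234, 0x5678, "SN001", "port1")
with_serial_2 = reg.attach(0x1234, 0x5678, "SN001", "port2")
# Same camera without a serial number, moved between ports: two instances.
no_serial_1 = reg.attach(0x1234, 0x5678, None, "port1")
no_serial_2 = reg.attach(0x1234, 0x5678, None, "port2")
```

In this model the camera with a serial number keeps one instance wherever it is plugged in, while the one without accumulates a new instance for every key the OS has not seen before.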

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

There is no new dynamic refnum behaviour. It behaves just like it was always designed to behave. The left and right sides of the terminal in the event structure have always worked that way. -

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

All of my input has been technical. The information you seek is all in this thread if you care to find it. I also never used the word "misrepresent", so I'm unaware what you're referring to here. Is this supposed to be a joke regarding misinterpreting my misinterpret? I disagree re. the plea to authority. Plus, I'm fully aware of the ridiculousness of the logical extension of what Linus said when context is ignored. Note the smiley. Well, for yourself perhaps but others read these threads also so even if you stoically refuse to listen to any relevant technical details (or god forbid, other opinions), others may be able to incorporate the technical details of the discussion to help form their own opinions. -

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

I never claimed you said that. But it was said. The code I posted illustrates why the proposed operation is incorrect. Jack's code only serves to illustrate why the intuition which led to your original code was flawed. I don't think I've misinterpreted anything. I understand the original code, the changes and the workarounds. The workaround is to better understand the issue and code appropriately. Regarding not wanting code to change and the plea to Linus' authority: Taken at face value, Linus' comment would preclude the closing of any security flaw which has ever been exploited. Yes, obviously Linus is claiming that Spectre and such should be maintained indefinitely as some may be using it. -

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

The code I posted illustrates why a "read only on first call" cannot work. If what you said is not rubbish, then more clarification is needed because by my understanding it is. You can change the prototype refnum for another of the same type no problem. You can bait and switch to your heart's content. Hence my insistence on differentiating between the data contained within the Refnum and the Type of the refnum. One is possible to switch (without invalidating anything), the other isn't. Of course you can't change it for a refnum of a DIFFERENT type, that's a design time decision. The code I posted swaps out the refnum for another, but of the same datatype and therefore there's no invalidation at all. -

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

And this relates to your original code....... how? You are NOT changing the datatype of your registration refnum, you are changing the data on the wire (which is the first of the two cases I mentioned in my previous post) and for which I posted a trivial example. -

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

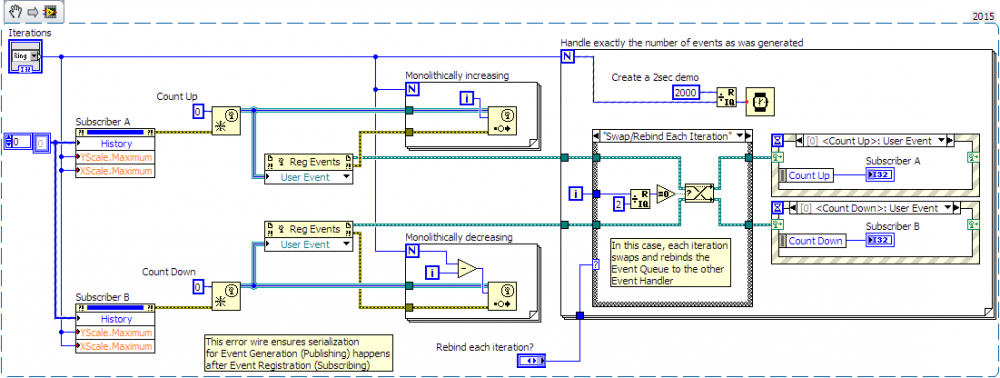

The usage of the word "Prototype" was careless. Use "Datatype" instead. If you mean changing the data carried on the strictly-typed wire attached to the left-hand side of the event structure, then the job queue for the event structure changes to whatever is contained there. As expected really. Jack Dunaway has an example of it in a link previously provided (not by me) in this thread (snippet provided below). If you mean changing the TYPE of the wire, that's a silly question since that can't be done. Doing that requires a recompile of the VI. -

LabVIEW 2017 Dynamic Event Registration Behaviour

shoneill replied to ShaunR's topic in LabVIEW Bugs

The bolded statement is rubbish, I'm sorry. I have a use case. In order to create a branching execution order in my event-driven code, I create entirely new registration refnums for a branch, swap out the event registration wires, execute the branch (which may itself branch....) before returning to the original queued up events. It's a rare use case, but it does exist and is powerful. It also adheres to all of LabVIEW's semantic rules of how things should work. I was reading the thread for a while and thought I was going to side with Shaun, but after looking at the code which he posted, I have to side with the others and say it's clearly (and I mean really clearly) an illegal buffer reuse compiler bug which has been fixed, and that's good. The semantics of the code dictate that the Event Shaun wishes to fire should never be handled in this code. The event registration refnum can be changed in every iteration of the Event structure. That's dataflow. The prototype of the Event Structure must remain constant but the actual event queues handled within can be altered and substituted at will. Swapping out the Event registration refnum and wiring up new references to the existing refnum (as mentioned elsewhere in the thread) are NOT the same thing. Wiring up a new reference to an existing Registration refnum changes the event source for that event queue, but leaves all existing events in there. Swapping out the event registration refnum actually changes the event queue. Both of these should work and both of these adhere perfectly to dataflow. -
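The difference between the two operations can be sketched as a conceptual model in Python. This is not LabVIEW code and not how the runtime is implemented: `Registration`, `reregister` and `handle_next` are hypothetical names, and the registration refnum is simply modelled as an event source plus its own queue.

```python
from collections import deque


class Registration:
    """Stand-in for an event registration refnum: a source plus its queue."""

    def __init__(self, source):
        self.source = source
        self.queue = deque()

    def fire(self, source, event):
        # Only events from the currently registered source are enqueued.
        if source == self.source:
            self.queue.append(event)

    def reregister(self, new_source):
        # New reference wired to the EXISTING refnum:
        # the source changes, events already queued stay queued.
        self.source = new_source


def handle_next(registration):
    """One iteration of the 'event structure': dequeue from whatever is wired in."""
    return registration.queue.popleft() if registration.queue else None


main = Registration("A")
main.fire("A", "a1")
main.fire("A", "a2")
main.reregister("B")       # same queue, new source
main.fire("A", "dropped")  # A is no longer registered, so nothing is enqueued
main.fire("B", "b1")

branch = Registration("C")  # an entirely new registration for a branch
branch.fire("C", "c1")

handled = []
wired = main
handled.append(handle_next(wired))
wired = branch              # swap the wire: a different queue is now serviced
handled.append(handle_next(wired))
wired = main                # swap back: the original queued events are still there
handled.append(handle_next(wired))
```

Re-registering keeps `a1` and `a2` in the same queue, whereas swapping the wire switches the loop to a different queue altogether and leaves the original one untouched until it is wired back in.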

What I found helped in the past was to generate the text texture myself for application. By creating a much larger texture than required, resulting in each letter being many pixels wide, the effects you are seeing were significantly reduced. Shane

-

How do you instantiate the text in the first place? Texture?

-

The only thing I can think of is to make the text more than a single pixel wide..... Otherwise antialiasing is going to do that to you. Of course, you can try turning off the antialiasing for the object in question..... "Specials: Antialiasing"

-

https://www.opengl.org/archives/resources/faq/technical/polygonoffset.htm Available as "PolygonOffset" for a Scene Object in LabVIEW.

-

If you position objects at the same depth from the camera, you're leaving the choice as to which draws "front" up to rounding errors of the SGL datatype. Put a minimal depth difference between them. IIRC, there's a property you can set in order to force this.
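A quick way to see how little room single precision leaves is to round two nearby depths to 32-bit floats. This is only an illustration of SGL rounding in Python, not of how the renderer resolves depth; `to_single` is a hypothetical helper and the depth values are made up.

```python
import struct


def to_single(x: float) -> float:
    """Round a Python float (double) to the nearest 32-bit float and back."""
    return struct.unpack("f", struct.pack("f", x))[0]


# Two objects 100 units from the camera, separated by one millionth of a unit.
near = to_single(100.0)
almost_same = to_single(100.000001)

# The same two objects with a small but deliberate depth difference.
offset = to_single(100.001)

collapsed = near == almost_same  # separation is lost in single precision
distinct = near != offset        # separation survives
```

Around a depth of 100 the spacing between adjacent single-precision values is roughly 7.6e-6, so any separation well below that is lost, and which object ends up in "front" is decided by rounding.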

-

Need help naming an "unmaintainable" VI

shoneill replied to Aristos Queue's topic in LabVIEW General

I personally don't like "chaotic" (In a maths sense) because chaos results from incredibly complex physical systems which are complex by definition whereas the code is typically complex by implementation. IANAM (I am not a Mathematician) -

Need help naming an "unmaintainable" VI

shoneill replied to Aristos Queue's topic in LabVIEW General

I recently watched a few Presentations on "Technical debt". I suppose you could say that bad code is beneficial in itself (assuming it works), but with high interest. If you don't come back to fix it, eventually it will bankrupt you. Or if your audience is male-only, the phrase "High maintenance" might work. I hesitate to add this term because it's often used in a very non-technical way which may offend some. -

Yes, I see this "Running but broken". I also see cases where a VI LV thinks is deployed is a different version from the one actually deployed. Or VIs being broken because of errors in VIs which aren't even used on the target..... I've had RT VIs claim they were broken due to an error in a VI which is only used on our FPGA targets..... Something somewhere is very weird with the whole handling of these things. I've been harping on about it for years and I'm glad (please don't take that the wrong way) that others are starting to see the same problems. Maybe eventually it'll get fixed. Of course, the problem is that in making a small example, the problem tends to go away nicely. NI really should have a mobile customer liaison (a crack LV debugger) who travels around visiting customers with such non-reproducible problems in order to get dirty with debug tools and find out what is going on. There are so many issues which will never be fixed because the act of paring it down for NI "fixes" the problem.

-

Need help naming an "unmaintainable" VI

shoneill replied to Aristos Queue's topic in LabVIEW General

I like the term viscosity. But it's not a generally applied term in this area. Increasing viscosity (resistance to flow) is not a problem until you need to adapt to new surroundings. Then I searched for some synonyms of Viscous and found "malleable". Hmm. Sounds familiar. Or how about a currently totally underused word in Software Development: "Agile" -

Well, the idea meant was that fixing a bug or implementing a new feature changes the environment for other code so both may or may not have an effect on the prevalence of any other given bug to raise its head at any given time. The larger the change to code, the more disruption and the more disconnect between tests before and after the change. Intent is irrelevant. Features and bugs may overlap significantly.

-

BTW. I just spent the entire day yesterday trying to find out why my project crashes when loading. No Conflicts, no missing files, no broken files. Loading the project without a certain file is fine, adding the file back when the project is loaded is fine but trying to load with this file included from the beginning - boom crash with some "uncontinuable exception" argument. I have NO idea what caused it but eventually, at 7pm I managed to get the project loaded in one go. Yay LabVIEW. I don't care how often LV is released as long as bugs in any given version are fixed. To find the really awkward bugs we need a stable baseline. Adding new features constantly (and yes, I realise fixing a bug is theoretically the same thing but typically a much smaller scope, no?) raises the background noise so that long-term observations are nigh impossible. I for one can say that the time I spend dealing with idiosyncrasies of the IDE is rapidly approaching the time I spend actually creating code. Crashes, Lockups, Faulty deploys to RT...... While LV may be getting "fewer new bugs" with time, it's the old bugs which really need dealing with before the whole house of cards implodes. Defining an LTS version every 5 years or so (with active attempts at bug fixes for more time than that to create a truly mature platform) would be a major victory - no new features, only bug fixes. Parallel to this, LV can have a few "feature-rich" releases for the rest. Bug fixes found in the LTS can flow back into the feature-rich version. I've proposed it again and again but to no avail because, hey, programming optional. I'm annoyed because I want to be productive and just seem to be battling with weirdness so much each day that a "permanent level of instability" just sounds like a joke. At least my exposure to this instability is increasing rapidly over the last years, or so it feels. And yes, I'm always sending crash reports in.

-

Any correlation between the segment of the LV user base who adopts non SP1 versions and the ones targeted with the "Programming Optional" marketing?

-

AQ, what if I want (or need) my debugging code to run with optimisations enabled i.e. without the current "enable debugging"? Think of unbundle-modify-bundle and possible memory allocation issues on RT without compiler optimisations...... This would require a separate switch for "enable debugging" and my own debug code, no?

-

AQ, I'm not arguing against the idea of needing debug code per se (I put copious amounts of debug paths into my code), only on the coupling of the activation to the already nebulous "enable debugging". I see a huge usability difference between RUN_TIME_ENGINE and toggling debugging on a VI. It's my 2/100ths of whichever currency you prefer.

-

OK it's been mentioned a few times: "user optimisations". Are we really naive enough to believe that's all this will be used for? How about making non-debuggable VIs? Broken wires as soon as debugging is enabled? How does this tie in with code being marked as changed for source control? I have BAD experiences with conditional disables (Bad meaning that project-wide changes can actually lead to many VIs being marked as changed just because a project-wide value has changed which will be re-evaluated every time the VI is re-loaded anyway.... ) I presume since this will be a per-VI setting it will have at least a smaller scope. But what about VIs called from within the conditional structure? They are then at the mercy of the debugging enable setting of the caller VI...... I mention this because we try quite a lot of code reuse across platforms and this problem rears its ugly head again and again. I have no problem introducing a VI-specific conditional disable structure, but linking it to a completely different setting seems just wrong. Sure, "enable debugging" is a Pandora's box anyway, but at least for a given LV version it's not a moving target. Imagine "enable debugging" doing something different for each and every VI you enable it on..... That sounds like a maintenance nightmare.