ned

Members-

Posts

571 -

Joined

-

Last visited

-

Days Won

14

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by ned

-

Filling a cluster with strings and arrays dynamically

ned replied to jbone's topic in Calling External Code

In those examples, the data returned is always a pointer-sized integer, which is then dereferenced by a mysterious XNode call GetValueByPointer. The example provides what I assume is equivalent LabVIEW code for dereferencing a pointer using MoveBlock. Dereferencing the pointer this way copies the data from space allocated by the DLL into space allocated by LabVIEW. I'm guessing that in your case you are trying to return the structure/array directly by reference, allowing the call library node to handle the dereferencing. As a result, you're handing LabVIEW a block of memory that it didn't allocate, so it doesn't know how to manage it nor free it on exit. I'm not sure what happens to the memory allocated by the DLL in the examples; I'd guess this would be a memory leak if called repeatedly, and that the operating system would clean it up on exit, but that's mostly a guess. -

Filling a cluster with strings and arrays dynamically

ned replied to jbone's topic in Calling External Code

If you're going to allocate memory in your DLL and then hand it back to LabVIEW, you should either use the LabVIEW memory manager functions, or allocate the memory in LabVIEW and then pass pointers to it into your DLL. The LabVIEW memory manager functions are documented in the help, under Code Interface Node functions. Alternately, you can initialize the memory in LabVIEW before calling the DLL by using Initialize Array; for a string, initialize an array of U8 and convert it to a string. -

I really like these tools, especially Diff. Merge is a bit hard to use because it involves a lot of windows (at least 7 - block diagram and front panel for each of the VIs being merged and the result of the merge, plus the list of differences) but it is nice to have. Every once in a while a Perforce command takes an excessively long time to complete - over 30s - but in general it works well. When using Diff, I recommend doing it from within LabVIEW when possible, because it sorts out the search paths for you and you don't get lots of warnings about subVIs not found. This does not happen properly when launching diff from the command line (within P4V for example).

-

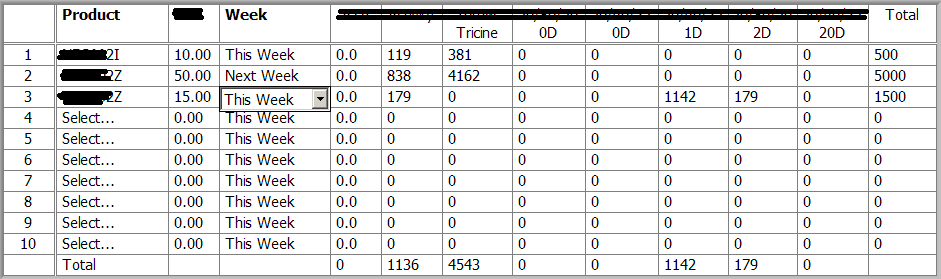

I don't have LabVIEW 2010 installed so I can't open your VI, but I've found that you can only edit cells that already have text in them; you can't add text to new cells. You also have to click in exactly the right place at the right time in order to edit cell contents. I prefer to use a table control if the user needs to be able to edit values. If you want to be fancy about it, create a table indicator instead and add a few individual controls for different data types. When the user clicks on a cell in the table, get the cell location (the table control has a method for this). If the user is allowed to edit that cell, make the control appropriate to the datatype of that column visible and move it to the the cell that was clicked. When the user finishes editing the value, copy the control's value into the appropriate cell in the table and hide the individual control. It takes some work but you can build a very nice-looking interface that lets you mix and match datatypes in the same table, and also have some columns that can be edited and others that cannot. With more work you can have the tab key operate properly, moving from one column to the next. Here's an example of this from one of my applications; the first column is values from a Ring control that is populated at run-time, the second column is a floating-point value, and the third column is an enumeration. The remaining columns are calculated and populated as the user fills them.

-

Check for data on TCP connection

ned replied to mike5's topic in Remote Control, Monitoring and the Internet

There is no LabVIEW function that will do this. You can only determine if data is available on a connection by reading it. What you may want to do is move the STM calls into a separate loop and put the data that you read into a queue, where you'll have more control over it. You may also want to enqueue the connection info along with the data in case you need to send a response to the same connection. I've done this in the past with variants - one loop reads the TCP data, turns into into a variant, adds the connection ID as an attribute, then enqueues it. Another loop dequeues the data, processes it, reads the connection ID attribute and copies that to a new variant containing the response data, and enqueues it so that the send/receive loop can dequeue it and send it along. -

Simple PI (no D needed) control without Real-Time?

ned replied to Gan Uesli Starling's topic in LabVIEW General

I'm not sure what Real-Time has do to with this; do you have access to NI's PID Toolkit? If you do, that's definitely the easiest way to go, and it works on any LabVIEW platform. If not, there used to be PID example distributed with Traditional NI-DAQ. It may have been removed in recent versions - I haven't installed Traditional NI-DAQ in a long time - but you could probably find it in an older install, from version 7.1 or so (which you should be able to load in a more recent version of LabVIEW). -

High Temperature Air Velocity measurement

ned replied to Dan Bookwalter N8DCJ's topic in LabVIEW General

I don't know if it will work for you, but I had good luck using a McCrometer V-Cone flow meter a few years ago. Give them a call and see if they can size one for you. You'll also need a differential pressure transducer and some math to get a good measurement. -

Yes (I've only done it with Y axes, but I assume it's the same for X). Right-click on the X scale and choose Duplicate Scale. It's not particularly pretty, and a bit hard to tell which axis is which (for two, you can move one to the top of the graph to separate them), but it should work. In the graph properties you can set which X and Y scale will be used by each plot, and there must be a way to set that through property nodes as well. Each axis will always take up the full width of the graph, so you won't get exactly the effect you want, but it will be close.

-

I would have said the obvious answer is to use 2 different X axes, then set the ranges so the data appears where you want it.

-

Access tp TCP socket options via .NET

ned replied to Mark Yedinak's topic in Remote Control, Monitoring and the Internet

In that case, the problem is that the function prototype for setsockopt is not the same as for getsockopt. Specifically the last parameter (what you have as size, and the linked documentation gives as optlen) should be passed as a pointer. With that correct, I don't get an error. -

Access tp TCP socket options via .NET

ned replied to Mark Yedinak's topic in Remote Control, Monitoring and the Internet

The comments in your code, and the function prototype, are set up to call setsockopt, but the function you're calling is getsockopt. Which function do you mean to call? Also, it appears that if you're trying to set or get SO_LINGER, you need to pass a pointer to an appropriate LINGER structure (a cluster of two U16 values) as the value argument, or an equivalent array. To be safe I'd make sure that you do wire a value into any pointer input, even if it's actually an output, although LabVIEW might handle that for you. -

For exactly the reason your professor mentioned - the analog output on your USB 6008 (and pretty much any analog output on a similar device) cannot supply enough power to run a motor. The analog output is designed to act only as a control signal. The digital outputs on the 6008 are similarly limited.

-

Same way you do on Windows. The standard file functions work normally on the cRIO using Windows-style paths; the internal flash drive is C:.

-

Inlining a subVI that uses the In Place Memory Structure

ned replied to Onno's topic in LabVIEW General

A properly-written subVI will not make a copy of data passed into it. You can use the "Show Buffer Allocations" tool to see this. In the subVI, all the front panel terminals should be at the top level (outside any loops and structures), and terminals should be marked required. -

I don't know about the majority of LabVIEW developers, but all my jobs have been full-time, salaried positions. Often LabVIEW isn't the only thing I do - it may be 3/4 of my time, but the rest is dedicated to whatever else is needed to get a test or automation system up and running. That usually includes anything from evaluating components to reviewing mechanical designs to plumbing and wiring. EDIT: and I should note as well, a lot of my time is reading documentation, and sometimes coding in scripting languages specific to the chosen hardware, such as motion control systems.

-

There's a limit on how many DMA FIFOs you can use, so I like to save them for streaming large amounts of data. For sending parameters, where I may want to set them in an arbitrary order but speed isn't critical, I use the boolean approach. Also, if I want to test the effect of changing those parameters, using controls lets me set the values directly from the FPGA front panel without any host code. Perhaps I made it sound like a bigger difference than there actually is - I just wanted to be clear that there are two distinct types of FIFOs, so crossrulz's suggestion of using a DMA FIFO to transfer data from the host to FPGA is slightly different than your question as to transferring data between loops on the FPGA. A DMA FIFO uses a reserved area of memory on the host and FPGA to do direct transfers of data. A target-scoped FIFO moves data only on the FPGA, and provides implementation options - Flip-Flop, Lookup Table, or memory. The memory option is slowest but can hold the largest number of items without taking up additional FPGA space.

-

A lot of traditional good LabVIEW style doesn't apply to FPGA. You want to avoid arbitration - multiple loops all trying to access the same resource, such as a functional global. Also, to maximize FPGA space available for your logic, you want to minimize the amount of space used for data storage by using FIFOs or memory blocks instead of global variables or functional globals. A memory block acts a lot like a global variable in practice, but it is an efficient way to store and share data on the FPGA, and you can avoid arbitration if you have only one location where you read and one location where you write. Similarly, you can choose to have your FIFOs implemented in memory. Note that there's a difference between a DMA FIFO, which passes data between the FPGA and host, and a FIFO that only passes data around the FPGA. I try to break up my FPGA code into tasks that are easily separable, because the FPGA is really good at doing work in parallel. For sending large numbers of parameters from the host to the FPGA without using a lot of front panel controls, I'd put three controls on the front panel: a data value, an index, and a boolean indicating that the value has been read (you could also use an interrupt). I'd then have a loop dedicated to monitoring the boolean; whenever it's true, write the data value to the specified index, then set the boolean to false. The host sets the value and index, then the boolean, and waits until the boolean has cleared before sending the next parameter. Another loop in the FPGA code reads from the same memory block as necessary to do calculations.

-

In that case, I was misinformed by a coworker; thanks for the correction.

-

If by ID you are referring to the arbitration ID (I don't know if ID means something else in CANopen terms), I don't believe the CAN protocol allows the arbitration ID to be 0, so you should never see that value.

-

It sounds like you're already done, but you could save yourself a lot of reverse-engineering work by simply using "Variant to Flattened String," which I believe does exactly what you want - provides a flattened representation of the data without a variant wrapper.

-

Yes, you can build your VI into an application if you have the Application Builder. Your sibling will need to install the Run-Time Engine (free from NI) that matches the version of LabVIEW that you use. The NI License Manager will tell you whether you have the application builder available, and the LabVIEW help explains how to build an application.

-

Your question isn't very clear. Most computers, Windows-based or otherwise, don't have any programming environment installed on them. Are you asking if there's some way you can run your VI on another computer, or if you can call your VI from another programming language? If you have the Application Builder (included in more-expensive LabVIEW editions, and available as an add-on), then you can build your VI into an application that you can run on any computer as long as it has the (free) LabVIEW Run-Time Engine installed. Likewise, you can build your VI into a DLL that can be called from another Windows program.

-

Calling LabVIEW.exe from another exe

ned replied to Black Pearl's topic in Development Environment (IDE)

Are you aware that as of LV2009, LabVIEW (Professional and above) includes lvmerge.exe, which probably does what you want already? -

In general, Ethernet and USB will be much faster than a serial protocol. At the same baud rate, 232, 422, and 485 are all comparable (probably identical, in fact, since the major difference between them is voltage levels and the number of devices that can be connected). The protocol and implementation running on top of the communication is equally important. For example, the Parker 6k motor controllers support both serial and ethernet communication. Over ethernet, they support two different protocols. One is text-based and works just like the serial connection. The other is a binary packet containing variable values. The manual for the 6k COM server notes, "Although variables can be sent as a command using the Write method, the time taken to parse the command and check data validity is longer than the time to send an entire variable packet."

-

Unfortunately, no, probably not. The version of LabVIEW provided to FRC teams does not allow access to the cRIO's FPGA. Maybe it's possible in this year's beta (I have no idea), but most likely you can't modify the code running on the FPGA.