Posts: 763

Days Won: 42

Everything posted by smithd

-

https://www.ni.com/pdf/manuals/371780r.pdf Yay! 🎉 Too bad NXG breaks the code.

-

Creating a type def with more then one item

smithd replied to wannabecontroller's topic in LabVIEW General

The term you are looking for is a cluster, which is more or less equivalent to a C struct. You create a type definition like this: https://zone.ni.com/reference/en-XX/help/371361R-01/lvhowto/creating_type_defs/

Once you are in the control editor for the type definition, drop down a cluster control. Search (Ctrl+F) for "clusters" on this page for a video: https://www.ni.com/getting-started/labview-basics/data-structures or, in tutorial form: https://www.ni.com/tutorial/7571/en/

Depending on where you're at, you may have access to NI online training, or to other resources found on this page: https://labviewwiki.org/wiki/Training

-

To go further, the timed loop is not the right choice, and the RT FIFO has a negative performance impact on this application. The data source (DAQmx) is nondeterministic and the data client (TDMS) is nondeterministic, so, as I understand it, using an RT FIFO will result in a memory copy on every iteration, from the (newly allocated and thus nondeterministic) DAQmx buffer into the preallocated RT FIFO buffer. In contrast, a normal queue (again, as I understand it) will simply shuffle around a pointer to the buffer provided by DAQmx*. There is no allocation beyond the one performed by DAQmx and a minor one for the queue structure.

By using a timed loop you're forcing everything to run in a single thread, so if you later add any code to the DAQmx loop, it would have to run in series with the DAQmx API reads. You're also telling the RT OS that the HIGHEST priority loop/thread in your entire codebase is something which immediately hits an asynchronous call and waits for that call to return.

*I think technically it's passing around a struct with an inline timestamp and dt, plus a variant pointer and an array pointer, but you get the idea.

2.2: You can make it fixed by wiring a value to the number of samples per channel input on the DAQmx Read VI. The default is -1, which tells the driver to return whatever is available without waiting for new data.

4: By definition the two loops have to run at the same rate... on average. As shown (a kind of producer-consumer implementation), you really want the DAQmx loop to be driving the logger loop, which is easy to do with a FIFO or queue: you pass in -1 for the timeout, and as data arrives the bottom loop will run. As you have it, the TDMS loop simply runs every 5 ms.

Instead of a queue or shared variable, you can also use channel wires, which are intended to make data communication a bit more user friendly. They even have an RT FIFO option if you ever do need that: https://www.ni.com/product-documentation/53423/en/
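The blocking producer-consumer arrangement from point 4 can be sketched outside LabVIEW. This is a Python analogy under assumptions, not DAQmx or TDMS code: `queue.Queue` stands in for the LabVIEW queue, two threads stand in for the two loops, and the block contents, sizes and the `None` stop sentinel are hypothetical. The logging side calls `get()` with no timeout (the equivalent of wiring -1), so it only runs when data arrives, and the identity check shows the queue hands over references to the producer's buffers rather than copies.

```python
import queue
import threading

def acquisition_loop(q, n_blocks, block_size, sent):
    """Stand-in for the DAQmx loop: each iteration gets a freshly allocated
    block and enqueues a reference to it (no element-by-element copy)."""
    for i in range(n_blocks):
        block = [float(i)] * block_size   # hypothetical "acquired" samples
        sent.append(block)
        q.put(block)
    q.put(None)                           # sentinel: acquisition finished

def logging_loop(q, logged):
    """Stand-in for the TDMS loop: runs only when data arrives, instead of
    waking up every 5 ms to check."""
    while True:
        block = q.get()                   # no timeout, like wiring -1
        if block is None:
            break
        logged.append(block)              # a real logger would write to disk here

def run(n_blocks=5, block_size=4):
    q = queue.Queue()
    sent, logged = [], []
    consumer = threading.Thread(target=logging_loop, args=(q, logged))
    producer = threading.Thread(target=acquisition_loop,
                                args=(q, n_blocks, block_size, sent))
    consumer.start()
    producer.start()
    producer.join()
    consumer.join()
    return sent, logged

if __name__ == "__main__":
    sent, logged = run()
    # Same objects on both sides: the queue moved references, not copies.
    print(len(logged), all(a is b for a, b in zip(sent, logged)))
```

Note that the consumer is started first and simply sits blocked until the producer enqueues something, which is the "DAQmx loop drives the logger loop" behaviour described above.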

-

Timing output every elements of a array for loop

smithd replied to SteveSun's topic in LabVIEW General

What are you trying to output to? A graph? A DAQ card? If it's in one of the pictures, only two came through on my end; the rest show up as blank squares.

-

Generally RT code doesn't have a UI, and as mentioned you have to use a special add-on to use events with shared variables. The usual equivalent is to use a messaging tool to handle communication and generate events in your RT code. I personally use HTTP and/or WebSockets, since they're free and there are many clients out there. I believe there is a TCP handler for drjdp's messenger lib (https://sine.ni.com/nips/cds/view/p/lang/en/nid/213091), actor framework has a networking add-on, and for quick and dirty work, AMC (https://www.ni.com/example/31091/en/) works. To put it another way, where you might use a UI event (queue) structure on a Windows machine, you would typically use asynchronous I/O (e.g. TCP, UDP, serial) to feed a queue on the RT system.
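The pattern in the last sentence, asynchronous I/O feeding a queue that a message handler then services the way an event structure would, can be sketched in Python. This is a generic illustration under stated assumptions, not the API of AMC, the Messenger Library or the actor framework networking add-on: the newline-delimited framing, the hypothetical "start"/"stop" message names, and the in-process `socket.socketpair()` used in place of a real TCP link are all made up for the example.

```python
import queue
import socket
import threading

def reader(sock, q):
    """I/O side: read newline-delimited messages and queue each one."""
    buf = b""
    while True:
        chunk = sock.recv(1024)
        if not chunk:              # peer closed the connection
            break
        buf += chunk
        while b"\n" in buf:
            line, buf = buf.split(b"\n", 1)
            q.put(line.decode())
    q.put(None)                    # sentinel: no more messages

def handle_messages(q):
    """Handler side: plays the role an event structure plays on a UI machine."""
    state = {"running": False, "log": []}
    while True:
        msg = q.get()              # blocks until the reader queues something
        if msg is None:
            break
        if msg == "start":         # hypothetical message names
            state["running"] = True
        elif msg == "stop":
            state["running"] = False
        state["log"].append(msg)
    return state

def demo():
    client, server = socket.socketpair()   # stands in for a TCP connection
    q = queue.Queue()
    t = threading.Thread(target=reader, args=(server, q))
    t.start()
    client.sendall(b"start\nstop\nstart\n")
    client.close()
    state = handle_messages(q)
    t.join()
    server.close()
    return state

if __name__ == "__main__":
    print(demo())
```

Keeping the socket reading in its own thread means the handler never blocks on the network, only on the queue, which is the same separation a message framework gives you on the RT side.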

-

"there is hardly support in labview itself"

In reality this is a pretty easy fix to make on your own branch of the code. Look inside here: https://bitbucket.org/drjdpowell/jsontext/src/default/Variant to JSON Text.vi and here: https://bitbucket.org/drjdpowell/jsontext/src/default/JSON text to Variant.vi For every case structure that switches on the type enum, find the case for "extended precision" and add "Fixed Point" to the same case. Numeric variants coerce automatically, so it should be as simple as that. (I suggest extended precision because there are different variations of fixed point, potentially using 64 bits more efficiently than, say, a 64-bit double. You may still lose some precision.)
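To see why a double can drop information here, treat a fixed-point value as an integer word scaled by a power of two. The Python sketch below assumes a hypothetical configuration (a 64-bit word with 32 fractional bits) and compares the exact value, held as a `Fraction`, with the same value converted to an IEEE 754 double, which has a 53-bit significand. An x87-style extended float has a 64-bit significand and could hold such a word exactly, but whether LabVIEW's extended type has that width depends on the platform, so treat that part as an assumption.

```python
from fractions import Fraction

def fxp_exact(raw: int, frac_bits: int) -> Fraction:
    """Exact value of a fixed-point word: raw * 2**-frac_bits."""
    return Fraction(raw, 2 ** frac_bits)

def fxp_as_double(raw: int, frac_bits: int) -> float:
    """The same value converted to a double. Dividing by a power of two is
    exact, so any rounding happens when the 64-bit integer becomes a float."""
    return float(raw) / 2 ** frac_bits

def loses_precision(raw: int, frac_bits: int) -> bool:
    """True if the double no longer equals the exact fixed-point value."""
    return Fraction(fxp_as_double(raw, frac_bits)) != fxp_exact(raw, frac_bits)

if __name__ == "__main__":
    frac_bits = 32                     # hypothetical: 64-bit word, 32 fractional bits
    for raw in (2 ** 40, 2 ** 60 + 1):
        print(hex(raw), loses_precision(raw, frac_bits))
```

Words that fit in 53 bits survive the trip; a word like 2**60 + 1 needs 61 significant bits, so its lowest bit is rounded away.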

-

Event structure causing other code not to run

smithd replied to rscott9399's topic in LabVIEW General

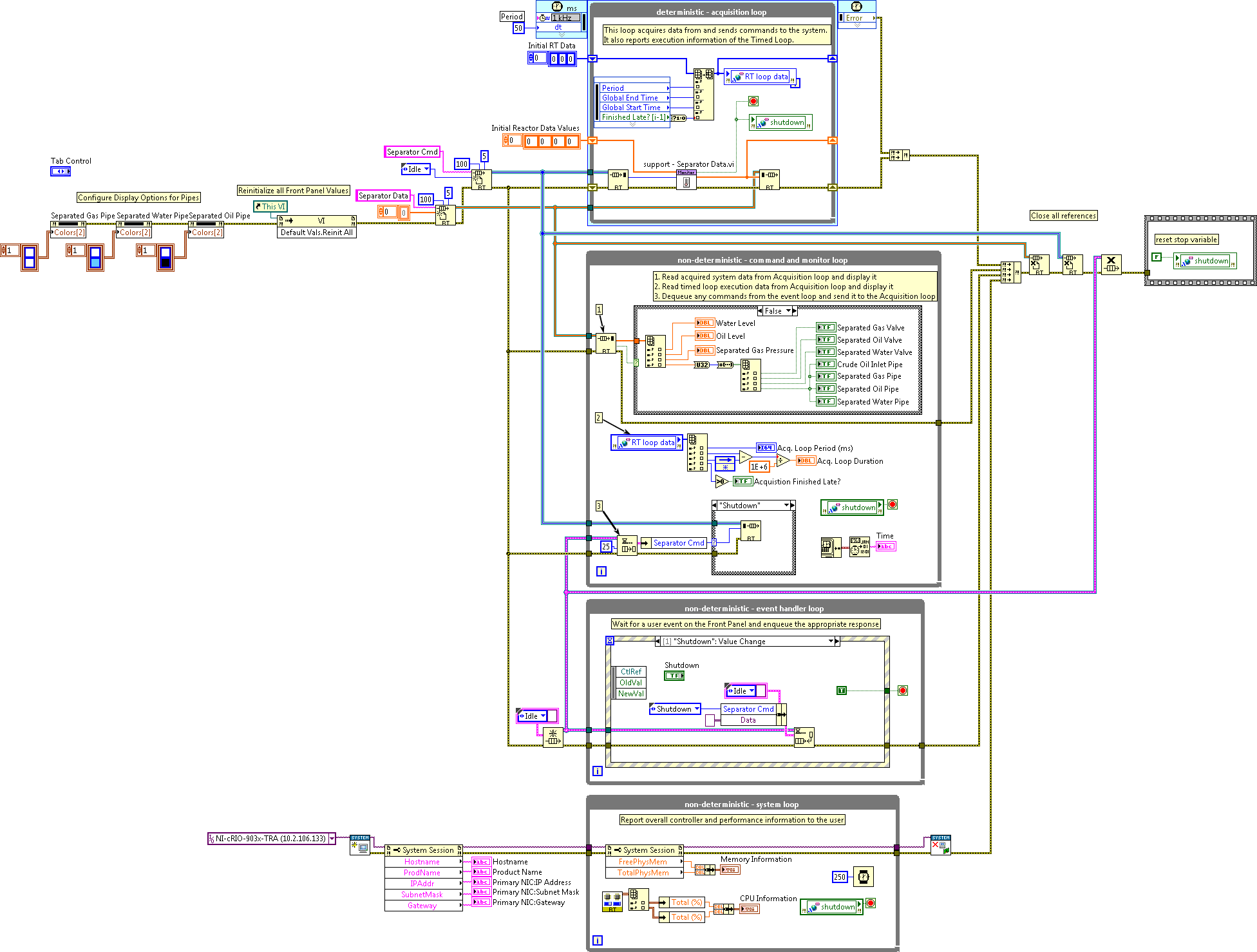

I re-read your (rscott's) post, and it occurred to me you might be missing something more fundamental with respect to LabVIEW applications. I don't claim that this code is good or bad, but it can serve as a reasonable example for this conversation: https://forums.ni.com/t5/Example-Program-Drafts/LabVIEW-Real-Time-Sample-Template-Embedded-UI/ta-p/3511679?profile.language=en Note that there is some UI code, but there's also a deterministic control loop and various monitoring loops. This is typical, and in fact kind of on the low side. This is why message-oriented frameworks like actor framework, drjdp's messenger library or Delacor's DQMH are fairly popular: they provide some of the plumbing and structure common to many applications.

-

Event structure causing other code not to run

smithd replied to rscott9399's topic in LabVIEW General

To convert this into C style: while (1) { pathwire = pathin; waitforinput(timeout = forever); pathout = pathwire; } You didn't 'hose the rest of the system', but your loop will stop because you told it to wait for input. You generally have many, many, many loops in a LabVIEW program. You can change this loop to a polling style by setting the timeout to something other than forever. Also note that the order of operations in the loop is not specified; I wrote one possibility.

-

DAQmx is a driver that supports all of NI's standalone DAQ cards as well as cDAQ devices and now the newest generation of cRIO.

-

This is a good guide to look through: https://www.ni.com/en-us/shop/compactrio/compactrio-developers-guide.html

Mark mostly covered it. The way I'd think about it:

Default -> run on the host.

Does my code protect human beings? -> Generally don't use NI products*, and don't even touch the application unless you know what you're doing. (*The exception is maybe the SIL module, but even then I'd want an experienced safety person consulting.)

To move to the CompactRIO, you want one or both of these to be true:
- Does my code need to run 24/7 headlessly?
- Does my code need to operate in a moderately precise (~100 µs) time frame?

To push the code from RT down to the FPGA, you want one of these to be true:
- Does my code protect a mechanical system (e.g. look for a voltage going past a limit, then open a relay)?
- Does my code need to close a loop in a precise (>25 ns variation) time frame (e.g. motor control)?
- Does my code analyze a large amount of data that my RT CPU is unable to keep up with, AND one of:
  - Does my code analyze data in such a way that otherwise wasted time (e.g. the time between samples of an ADC chip) can be effectively utilized (e.g. performing a filtering operation on each sample)?
  - Does my code analyze data in such a way that a large amount of data can be compressed to a small amount of data (e.g. integrate a curve after a trigger, or find the peak and valley of a waveform)?

The reason that last one is an AND is that if you can't pretty easily pick up the slack on the FPGA, by seriously reducing the data rate or by doing a lot of work in otherwise 'idle' time, it's probably cheaper to just buy better hardware. There's a limit to this, of course, but...
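The last FPGA criterion, compressing a large amount of data into a small amount, can be illustrated with a streaming reduction. The Python sketch below is only an analogy for per-sample FPGA logic, not LabVIEW FPGA code: it touches each sample once, arms on a rising edge through a trigger level, and reduces the following window of samples to a sum (a rectangle-rule integral with dt = 1 sample), a peak and a valley. The trigger scheme, window length and sample values are hypothetical.

```python
def reduce_waveform(samples, trigger_level, window):
    """Streaming reduction: after the signal rises through trigger_level,
    accumulate `window` samples and track their peak and valley.
    Each sample is processed once, as per-sample hardware logic would."""
    prev = None
    remaining = 0
    acc = peak = valley = 0
    results = []
    for x in samples:
        # Arm on a rising edge, but only when no window is already in progress.
        if remaining == 0 and prev is not None and prev < trigger_level <= x:
            remaining, acc, peak, valley = window, 0, x, x
        if remaining > 0:
            acc += x                      # rectangle-rule integral, dt = 1 sample
            peak = max(peak, x)
            valley = min(valley, x)
            remaining -= 1
            if remaining == 0:
                results.append((acc, peak, valley))
        prev = x
    return results

if __name__ == "__main__":
    data = [0, 0, 5, 7, 3, 0, 0, 6, 2, 1]   # hypothetical ADC samples
    print(reduce_waveform(data, trigger_level=4, window=3))
```

Ten samples go in and two small tuples come out; with real acquisition rates the ratio is far larger, which is the kind of data reduction that makes pushing the work down to the FPGA worthwhile.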

-

ethercat master Inizializing EtherCAT Master on cRIO-9030

smithd replied to Anmol Sidhu's topic in Hardware

I don't know what an ENI is, but you can add third-party slaves with the standard XML files: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000P9CqSAK&l=en-US

-

OpenG "Variant Data" palette not supported in NXG3.0 ?

smithd replied to LOIS LE BRAS's topic in OpenG General Discussions

The issue drjd/wiebe identified will interfere with any application where you want to generically accept a data element and inspect the names of its contained elements. I think that is mostly the serialization libs. I'm actually very surprised this doesn't bother you; I'd have assumed you would use these features.

-

OpenG "Variant Data" palette not supported in NXG3.0 ?

smithd replied to LOIS LE BRAS's topic in OpenG General Discussions

If I had to guess, it's internally translated to UTF-16 when it's displayed, because I think that's the .NET default in-memory encoding. In any case, it should likely have the same multilingual support as WPF.

-

OpenG "Variant Data" palette not supported in NXG3.0 ?

smithd replied to LOIS LE BRAS's topic in OpenG General Discussions

Network functions have always accepted byte arrays and strings, and I don't think this has changed: https://www.ni.com/documentation/en/labview/latest/node-ref/tcp-read/ The string/byte array conversions are in the original link under "working with different encodings"; the node still exists, you just have to know whether the string is "extended ASCII" or Unicode: https://www.ni.com/documentation/en/labview/latest/node-ref/byte-array-to-string/ https://www.ni.com/documentation/en/labview/latest/node-ref/string-to-byte-array/

However, I don't think this is the problem. I think Lois is conflating item (A), which is that NXG has changed the 'default' encoding for the string data type to UTF-8, with item (B), which is that NXG has removed a lot of metaprogramming facilities, including (I assume) a dependency of the OpenG data type parsing functionality and (I know) a dependency of every variant to X (XML, JSON, INI) toolset ever made for this language. It looks like at least some of the necessary components for the OpenG library do exist, though. To me (A) is a positive change, while (B) is a breaking change that makes the language unusable for me until it's resolved, and it sounds like at present NI has 0% interest in doing so.

-

OpenG "Variant Data" palette not supported in NXG3.0 ?

smithd replied to LOIS LE BRAS's topic in OpenG General Discussions

Similar issue in NXG: https://forums.ni.com/t5/LabVIEW-Idea-Exchange/Malleable-control-labels/idi-p/3782013/page/6#comments In this case it's not the OpenG functions but the built-in variant parsing functionality that has changed, but it's all in the same general "metaprogramming" category.

-

That's quite the hidden gem 🎅 🦄

-

I'm not bothered by the size of that guy; I'm bothered that everything executes serially.

-

I think those variant functions should be a tiny, meaningless fraction of the overall execution time. Have you profiled it?

-

How can I improve while loop speed on windows?

smithd replied to LAVA Good's topic in LabVIEW General

In the compiler doc here (https://www.ni.com/tutorial/11472/en/) there's mention of a yieldIfNeeded block, which is inserted into loops to allow coordination with the rest of the runtime. Even if you just have the one while loop, it still has to check with the runtime engine whether it should keep running or pause and let other code run; it's not just a simple jump. tl;dr: I don't think there is any way to do this with a regular loop. A timed loop may work. The overhead should be minimal compared to any actual code you wish to run in the loop, and if that isn't true, LabVIEW is probably not the tool for the job.

-

You're also what?! Make sure your lavag email is not @ni.com and then PM Michael. He can fix it.

-

Before or after you tell those darn kids to get off your lawn?

-

2019 is almost certainly done already. Since it's integrating an add-on, it could come in SP1, but I'd bet on 2020 if it happens.

-

Isn't that... an XControl? Anywho, voted up.

-

My vague understanding is that LabVIEW EXEs are basically special zip files with an executable header tacked on, containing the instruction to load the LabVIEW runtime. The runtime then takes over and loads the code. This obviously changes with the fast file format, but the general concept is probably about the same. Point being, the EXE is still a separate OS process; it just happens to immediately load a copy of the lvruntime DLL, which can be shared.
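The "zip file with an executable header tacked on" idea can be demonstrated with Python's standard `zipfile` module. This only illustrates the general container trick, not the actual LabVIEW EXE layout, which the post itself describes as a vague understanding; the header bytes and member names below are made up. It works because zip readers locate the archive's central directory from the end of the file, so bytes prepended to the front do not prevent the archive from being read. The `b"MZ"` prefix mimics the signature Windows executables begin with, but the rest of the stub is just padding.

```python
import io
import zipfile

def build_fake_exe(header: bytes, files: dict) -> bytes:
    """Build a zip archive in memory and prepend a stand-in 'executable header'."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        for name, data in files.items():
            zf.writestr(name, data)
    return header + buf.getvalue()

def read_payload(blob: bytes) -> dict:
    """Open the combined file as a zip. Readers locate the central directory
    from the end of the file, so the prepended header is skipped over."""
    with zipfile.ZipFile(io.BytesIO(blob)) as zf:
        return {name: zf.read(name) for name in zf.namelist()}

if __name__ == "__main__":
    header = b"MZ" + b"\x00" * 62          # hypothetical stub, not a real PE header
    files = {"main.vi": b"top-level VI", "sub/helper.vi": b"subVI"}
    blob = build_fake_exe(header, files)
    print(blob[:2], sorted(read_payload(blob)))
```

The same single file is both "an executable" (from the front) and "an archive" (from the back), which is the general shape the post describes.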