Mellroth

Members · Posts: 602 · Days Won: 16


Everything posted by Mellroth

-

Jonathon, I had trouble installing this package using VIPM 2010, probably because the icon.bmp file included in the .ogp file was read-only. The main problem is within VIPM (AFAIK), but so that other users can use this Quick Drop plugin, I opened your original zip and the included .ogp file and removed all read-only flags. rename_lvoop_fp_object_labels-1.0-1_LV2009.zip /J
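If the same VIPM problem shows up with another package, the read-only flags can also be cleared with a script instead of by hand. The following is a minimal Python sketch, assuming the .ogp package is an ordinary zip archive. It copies the archive, clears the MS-DOS read-only bit (the low bit of each member's external attributes) and, where Unix permissions are stored, adds owner write permission. The function name `clear_readonly_flags` is hypothetical; this is not a VIPM feature. For the nested case in this post, run it on the .ogp first and then re-zip the outer archive.

```python
import stat
import zipfile

DOS_READONLY = 0x01  # MS-DOS read-only attribute bit in ZipInfo.external_attr


def clear_readonly_flags(src_path: str, dst_path: str) -> int:
    """Copy a zip archive to dst_path with read-only flags cleared.

    Returns the number of members whose attributes were changed.
    """
    changed = 0
    with zipfile.ZipFile(src_path) as src, zipfile.ZipFile(dst_path, "w") as dst:
        for info in src.infolist():
            data = src.read(info)
            attr = info.external_attr
            new_attr = attr & ~DOS_READONLY  # drop the DOS read-only bit
            if attr >> 16:
                # Unix permission bits live in the high 16 bits: add owner write.
                new_attr |= stat.S_IWUSR << 16
            if new_attr != attr:
                changed += 1
            # Build a fresh ZipInfo so offsets and sizes from the source are not reused.
            out = zipfile.ZipInfo(info.filename, date_time=info.date_time)
            out.compress_type = info.compress_type
            out.create_system = info.create_system
            out.external_attr = new_attr
            dst.writestr(out, data)
    return changed
```

The file contents are copied unchanged; only the attribute field of each entry is rewritten.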

-

Thanks Mikael /J

-

I downloaded GDS_4.0rc_LV2009.zip today, and it unzipped without problems. My problem is that I'm still using LV2009, and the setup VI in the zip file requires LV2010, even though the file name indicates that it is for LV2009. /J

-

Yes, it does. You can even add custom FPGA code to the Scan Engine and access it through the advanced API as well. /J

-

I'm not sure you can meet the 1 kHz requirement using I/O variables; I would rather use the Scan Engine Advanced I/O Access API, especially if the I/O count increases. With this API you can read or write more than one channel at a time. It also lets you make the application much more dynamic, since added modules can be handled automatically without recompiling the application. http://zone.ni.com/d...a/tut/p/id/8071 /J

-

Can you tell us more about what you want to do with the references? I ask because there are other ways of getting the BD or FP reference while editing. For example, if you just want to handle the topmost window, you can do this with event filtering: monitor the private event type "VI activation", which gives you the VI reference as well as a numeric telling you whether the BD or FP was activated. See the attached VI, MonitorActiveVI.vi. /J

-

[CR] Improved LV 2009 icon editor

Mellroth replied to PJM_labview's topic in Code Repository (Certified)

Hi PJM, I just noticed that text entry in the icon editor (1.7) does not work reliably if you type too fast (LabVIEW 2009.1, Windows XP). This shows up both in the actual icon when editing text and in the filtering of glyphs. It seems like one or more key events are lost (filtering in the event structure, maybe?). To reproduce: start text editing with the text tool and then press a-s-d in one fast sequence. The only thing I get in my icon is 'a'; both 's' and 'd' are lost. /J

-

Sub VI Hangs at "Wait for event" invoke node - ActiveX code

Mellroth replied to Nesara's topic in Calling External Code

When you speak of a "yellow color block", I assume you mean the built-in functions in LabVIEW, and as a user you cannot really create built-in functions. The option for a user is to create a subVI, but this will still not let you abort the VI with the abort button. Regarding the performance issue when you specify a smaller timeout, I think you see performance degrade over time because you are not closing references, and because the stop node won't execute if the timeout error is propagated to its inputs. To get even better speed, initialize the event watcher once and only call WaitForNotification in your loops (don't forget to close the references afterwards). Please have a look at the attached example, where this Init-Wait-Clear pattern is used to increase performance. WMI_EVENT_Example Folder.zip /J
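LabVIEW code is graphical, so what follows is only a Python analogy of the Init-Wait-Clear pattern described above. `EventWatcher` is a hypothetical stand-in backed by `queue.Queue`, not the WMI or ActiveX API. The watcher is created once outside the loop, only the wait runs inside it, a timeout is treated as a normal result rather than an error, and the close step sits in a `finally` clause so it runs even if the loop exits with an error (the counterpart of making sure the stop/close node still executes).

```python
import queue
from typing import List, Optional


class EventWatcher:
    """Hypothetical stand-in for an event watcher reference (e.g. a WMI watcher)."""

    def __init__(self) -> None:
        self._events: "queue.Queue[str]" = queue.Queue()
        self.closed = False

    def post(self, event: str) -> None:
        self._events.put(event)

    def wait_for_event(self, timeout_s: float) -> Optional[str]:
        """Return the next event, or None on timeout instead of raising an error."""
        try:
            return self._events.get(timeout=timeout_s)
        except queue.Empty:
            return None

    def close(self) -> None:
        self.closed = True


def monitor(watcher: EventWatcher, max_iterations: int, timeout_s: float = 0.01) -> List[str]:
    """Wait step inside the loop; Clear step in 'finally' so it always executes."""
    received: List[str] = []
    try:
        for _ in range(max_iterations):
            event = watcher.wait_for_event(timeout_s)
            if event is None:
                continue  # a timeout is not an error; just poll again
            received.append(event)
    finally:
        watcher.close()
    return received


watcher = EventWatcher()  # Init: once, outside the loop
watcher.post("process started")
print(monitor(watcher, max_iterations=5))
```

The design choice that matters is that the expensive setup is not repeated per iteration and that cleanup does not depend on the wait succeeding.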

Possible workaround: when I create VIs that I know will be used as dialogs, pop-ups, etc., I usually go into VI Properties and deselect "Allow user to close window". This seems to force LabVIEW to treat the front panel as one that should not be removed during builds. I have suggested to R&D an option in the VI settings that would let me explicitly state that the FP should be kept during builds, instead of having to specify this in every build or use workarounds like this one. It seems silly that the option to keep a front panel is only available in the build specifications, when a VI could be used in several builds. /J

-

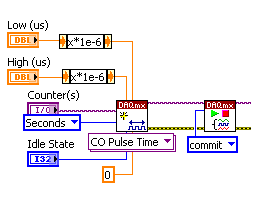

As I said, I might be missing some part of your problem, and I did miss the fact that you had to generate a pulse with a 15 µs width. If you want the pulse generation to restart more efficiently, you could try committing the pulse settings to the board before starting the task. I don't know if this will give you the loop rates you need, but it should be more efficient than the current solution (if your hardware supports it). /J

-

Maybe I misunderstand what you are trying to do, but why are you using a counter output to do the switching? Why not use a digital output instead? /J

-

WaveformChart property node Y.Scale.Maximum

Mellroth replied to Gan Uesli Starling's topic in LabVIEW General

Do you have autoscale ON for the Y-axis? If so, turn it off and see if that helps. /J

-

Hi bluesky96, welcome to LAVA. It would be much easier for us to help if you could attach the code. Right now I can only guess that some of the functions you use actually reference the semaphore VIs. Are you using DMA to transfer data back and forth to the FPGA? If so, are you sure that all data is read by the other side before continuing? /J

-

I don't think that is entirely true: the PXI-6229 has 32 clocked DIO lines (but all in port 0). The sample rate of the DIO lines cannot be defined using an internal clock; instead you'll have to use an external timing source (unless you use the default sample clock, which I believe is 1 MHz for all 622x boards). This is usually not much of a problem, since you can use one of the CLK outputs to generate the sample clock you need; just remember to specify the CLK terminal as the external timing reference in the DIO task. /J

-

Multiple channels at 64 kS/s do not seem all that useful for real-time display. We used this technique to run a high-channel-count, high-speed data acquisition on a machine with a limited amount of memory (but then, the customer wasn't interested in looking at the raw data, only in the calculation results). I think each FIFO element contained 1000 samples for each channel, and that we had a secondary queue for the result data (running average, etc.). /J
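A minimal Python sketch of that two-queue arrangement, assuming each data element is a list of per-channel sample blocks and that the result is a running average of block means over a hypothetical window of 10 blocks. `queue.Queue` stands in for the RT-FIFO and the secondary result queue, and a `None` sentinel ends processing. In a real system the producer would be the acquisition loop running in parallel; here everything runs in one thread for simplicity.

```python
import queue
from collections import deque
from typing import Deque, List, Optional

SAMPLES_PER_BLOCK = 1000  # samples per channel in each FIFO element


def process_blocks(data_fifo: queue.Queue, result_fifo: queue.Queue, window: int = 10) -> None:
    """Consume blocks until a None sentinel; push one running average per channel per block."""
    history: Optional[List[Deque[float]]] = None  # recent block means, one deque per channel
    while True:
        block = data_fifo.get()
        if block is None:
            break
        if history is None:
            history = [deque(maxlen=window) for _ in block]
        averages = []
        for channel, samples in enumerate(block):
            history[channel].append(sum(samples) / len(samples))  # mean of this block
            averages.append(sum(history[channel]) / len(history[channel]))
        result_fifo.put(averages)  # only results go on to display or logging
```

Only the small result vectors leave the processing loop, which is what keeps memory use bounded when nobody needs the raw data.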

-

I don't know anything about your application, but if the amount of contiguous memory is the bottleneck, I would start looking into using queues or RT-FIFOs to store data in smaller chunks, perhaps by reading data from the DAQ boards more frequently (if you are using DAQ). The benefit is that you can hold the same amount of data in the FIFO, but the allocated memory does not necessarily have to be contiguous. I also believe that using FIFOs minimizes the number of data buffers LabVIEW holds on to; once they are allocated, no more buffers should have to be added. Just my 2c /J
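The idea of holding the same amount of data as many small, preallocated chunks instead of one large contiguous buffer can be sketched in Python as a fixed pool of chunks recycled between producer and consumer. Python does not give LabVIEW-style control over memory layout, so this only illustrates the pattern; `ChunkPool` and its methods are hypothetical names. All chunks are allocated in the constructor and reused afterwards. In this sketch `write` blocks when every chunk is in use, which throttles the producer; an actual RT-FIFO may behave differently depending on how it is configured.

```python
import queue
from array import array
from typing import List, Sequence


class ChunkPool:
    """Hypothetical fixed pool of preallocated, equally sized chunks that are
    recycled between a producer and a consumer."""

    def __init__(self, n_chunks: int, chunk_len: int) -> None:
        self.chunk_len = chunk_len
        self.free: "queue.Queue[array]" = queue.Queue()    # empty chunks
        self.filled: "queue.Queue[array]" = queue.Queue()  # chunks holding data
        for _ in range(n_chunks):
            # All buffer allocation happens here, once.
            self.free.put(array("d", [0.0]) * chunk_len)

    def write(self, samples: Sequence[float]) -> None:
        """Copy one chunk of samples into a free buffer (blocks if none is free)."""
        if len(samples) != self.chunk_len:
            raise ValueError("expected %d samples" % self.chunk_len)
        chunk = self.free.get()
        for i, value in enumerate(samples):
            chunk[i] = value  # overwrite in place; the buffer is not resized
        self.filled.put(chunk)

    def read(self) -> List[float]:
        """Copy out the oldest filled chunk and return its buffer to the pool."""
        chunk = self.filled.get()
        data = chunk.tolist()
        self.free.put(chunk)
        return data
```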

-

Hi, I think (I don't have LabVIEW available at the moment) that you are giving the start and end points instead of the start and duration. Subtract the start value from the max value and use this instead of the current end point (max value). Edit: I just realized that you are scaling the y-axis with the first and last points in the data; try using y-axis autoscaling instead. /J PS: You are shifting back and forth between waveform and DBL array; I would try to stay with one of them. When using waveforms, you don't have to define the dt and start of the graph. If you want to change the start point (or dt), change the waveform instead.

-

Are you loading the same LLB in both the EXE and the RTEXE? If so, the code you are trying to load is in the wrong format for the RTEXE. To get around this, add a source distribution build script for the top-level VI on the same target type as your RTEXE (in this case a cRIO-9012). Let this build script create an LLB for you, be careful to include any specific hardware drivers in the build, and check the Additional Exclusions page to include or exclude other items. Once built, you will have an LLB (and possibly a data folder with support files) with code specifically for the cRIO-9012 target. Try putting this LLB and its data folder on the 9012 target instead. Good luck /J

-

viServer - problem with LV instances

Mellroth replied to DaveX's topic in Application Design & Architecture

Right-click "My Computer" in the project and enable VI Server TCP/IP access (and set a port other than 3363, since that default port is probably already in use). /J

-

Are you saying your book is as deadly as a gun... /J

-

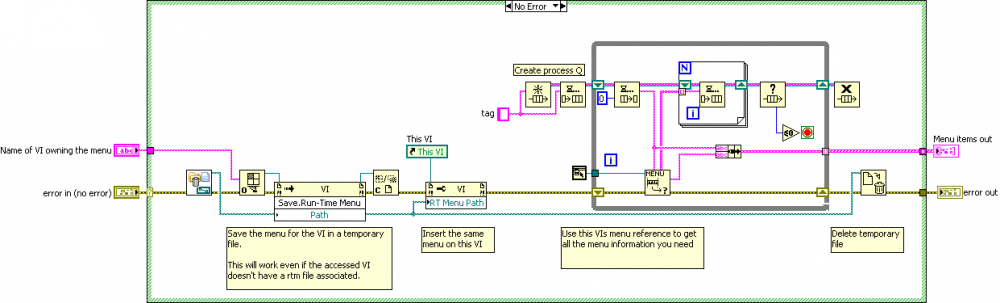

I don't have a solution for getting the menu reference, but to get the menu items you could do something like this (and I guess you have already done something similar). GetMenuItems.vi /J

-

If you decide to go with the new XNET boards, you get down to a more reasonable number of boards. We have been using both the Series 2 and XNET boards, and the biggest problem with the Series 2 boards is the small amount of on-board memory, which limits the number of frames you can handle autonomously. With XNET this is solved: each port has separate buffers for output and input (the older boards shared one buffer for all ports and directions). XNET also supports bus termination that can be set from software. In our case we do a lot of fault insertion (skipping X frames, inserting erroneous parameters in the frames, etc.) and handle J1939 TP.BAM/TP.DT transfers. Because of this, we have to create our own engine above the NI-CAN or XNET layers. In this scenario XNET really outperforms NI-CAN, both in the actual time it takes to get information to and from the driver and in jitter (which is much lower for XNET). One thing XNET lacks compared to NI-CAN is the ability to run simulated interfaces. /J
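For readers unfamiliar with J1939 BAM transfers, here is a sketch of how a multi-packet message is split into one TP.CM (BAM) frame and a series of TP.DT frames, following SAE J1939-21 as it is commonly described: the TP.CM frame (PGN 0xEC00) carries control byte 32, the total size, the packet count, a reserved 0xFF byte and the PGN of the transported message; each TP.DT frame (PGN 0xEB00) carries a sequence number and 7 data bytes, with unused bytes set to 0xFF. Check the details against the standard before relying on them. The `skip_sequences` parameter is a hypothetical fault-insertion hook in the spirit of "skipping X frames"; building the 29-bit CAN identifier (priority, source address) and the inter-packet timing are left out.

```python
from typing import AbstractSet, List, Tuple

TP_CM_PGN = 0xEC00  # Transport Protocol, Connection Management
TP_DT_PGN = 0xEB00  # Transport Protocol, Data Transfer
BAM_CONTROL = 0x20  # Broadcast Announce Message control byte (32)


def build_bam(pgn: int, payload: bytes,
              skip_sequences: AbstractSet[int] = frozenset()) -> List[Tuple[int, bytes]]:
    """Return (pgn, 8-byte data) frames for a BAM transfer of payload.

    skip_sequences (hypothetical fault insertion): TP.DT packets with these
    sequence numbers are left out of the result.
    """
    size = len(payload)
    if not 9 <= size <= 1785:
        raise ValueError("BAM payload must be 9..1785 bytes")
    n_packets = (size + 6) // 7  # 7 payload bytes per TP.DT frame, rounded up
    cm = bytes([BAM_CONTROL, size & 0xFF, size >> 8, n_packets, 0xFF,
                pgn & 0xFF, (pgn >> 8) & 0xFF, (pgn >> 16) & 0xFF])
    frames = [(TP_CM_PGN, cm)]
    for seq in range(1, n_packets + 1):
        chunk = payload[(seq - 1) * 7: seq * 7]
        data = bytes([seq]) + chunk + b"\xFF" * (7 - len(chunk))  # pad last frame
        if seq not in skip_sequences:
            frames.append((TP_DT_PGN, data))
    return frames
```

Because the frames are plain data before they reach the driver, faults such as dropped packets or corrupted bytes can be injected at this level, which is one reason to keep such an engine above the NI-CAN or XNET layer.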

-

Problem running under operating systems of different languages

Mellroth replied to bimbone's topic in LabVIEW General

I just have to ask: you don't have a space between : and \, do you? In both your posts there is a space between these characters, and I don't think Windows likes a folder or file name that starts or ends with a space character (I cannot check right now). /J
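A quick way to rule this out is to check the path string before using it. The Python helper below (a hypothetical name, not a LabVIEW or Windows API) splits a path on both separator styles and reports any component that begins or ends with a space, such as a `C: ` drive part.

```python
import re
from typing import List


def space_padded_components(path: str) -> List[str]:
    """Return the path components that start or end with a space character."""
    parts = re.split(r"[\\/]", path)  # split on both \ and /
    return [part for part in parts if part and part != part.strip(" ")]


print(space_padded_components("C: \\Data\\log.txt"))
```

Spaces inside a component (e.g. `My Data`) are not flagged, since only leading and trailing spaces are suspicious here.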

It has been a while since I played with Veristand personally, but we do use Veristand on some of the systems we are involved in. Veristand is a great environment for getting started with HIL systems. But when the complexity (channel count, CAN communication, etc.) increases, we feel that Veristand is less user-friendly than it could be. This will probably change, since NI is pushing heavily for the Veristand platform, and it has great potential. Some comments on Veristand at the moment:

* Great tool for HIL systems with low to medium channel counts
* Lacks some of the scaling types we (our customer) need, e.g. lookup tables
* Some bugs in the CAN support
* Front panel items connected to the same parameter (or a derived parameter) are not updated synchronously. This can look very strange; e.g. if one item displays speed in mph and another in km/h, it looks as if the scaling is wrong.

/J