CharlesB


Everything posted by CharlesB

-

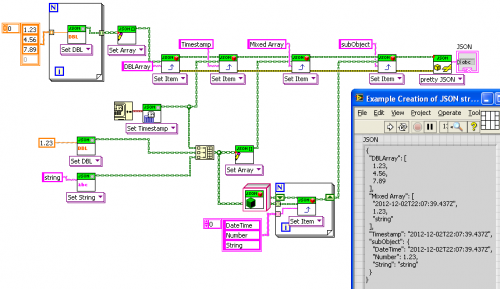

What I do in my implementation is serialize the class type and, if it is present when reading the data back, instantiate the real class using LabVIEW's "Get LV Class Default Value By Name". It works neatly in my case. Serializing the class type is an option given to the "Data to JSON" VI, so classes that don't have children don't get their type serialized.

A serialized class looks like this:

{ "Timings": { "@type": "ChildTimings", "@value": { "Camera delay": 0, "Exp time": 0.001, "Another field": "3.14159" } }, "Name": "Charles" }

Attached is my version of the package implementing this (don't pay attention to the version number; it was forked from a 1.3.x version). The code is in JSON Object.lvclass:Set Class Instance.vi and JSON Object.lvclass:Get as class instance.vi. Hope you won't find it ugly :-)

lava_lib_json_api-1.4.0.26.vip
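For readers without LabVIEW at hand, the read-back step can be sketched in Python. This is only an illustration under assumptions: the `REGISTRY` dictionary stands in for "Get LV Class Default Value By Name", and the class names, field mapping, and `read_object` helper are hypothetical, not part of the attached package.

```python
import json
from dataclasses import dataclass


@dataclass
class Timings:
    camera_delay: float = 0.0
    exp_time: float = 0.0


@dataclass
class ChildTimings(Timings):
    another_field: str = ""


# Hypothetical lookup table playing the role of a "class by name" lookup.
REGISTRY = {cls.__name__: cls for cls in (Timings, ChildTimings)}

# Hypothetical mapping from JSON field names to Python attribute names.
FIELD_NAMES = {
    "Camera delay": "camera_delay",
    "Exp time": "exp_time",
    "Another field": "another_field",
}


def read_object(node, declared_cls):
    """Build the class named by "@type" if present, else the declared class."""
    if isinstance(node, dict) and "@type" in node:
        cls = REGISTRY[node["@type"]]
        if not issubclass(cls, declared_cls):
            raise TypeError(f"{cls.__name__} is not a {declared_cls.__name__}")
        fields = node["@value"]
    else:
        # No type tag was serialized: fall back to the declared class.
        cls, fields = declared_cls, node
    return cls(**{FIELD_NAMES[key]: value for key, value in fields.items()})


text = """{"Timings": {"@type": "ChildTimings",
                       "@value": {"Camera delay": 0, "Exp time": 0.001,
                                  "Another field": "3.14159"}},
           "Name": "Charles"}"""
doc = json.loads(text)
timings = read_object(doc["Timings"], Timings)
print(type(timings).__name__)
```

When the type tag is absent, the same helper simply builds the declared class, which mirrors the option of not serializing the type for classes without children.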

-

Here's what I've done for this in my implementation. I had the same need: big configurations with nested objects that needed to be serializable.

I created a "JSON serializable" class that has "Data to JSON" and "JSON to data" methods that take JSON values as arguments. My classes inherit from it and de/serialize their private data by overriding these methods, with the option of outputting the class name along with the data. JSON.lvlib is modified to handle these classes on get/set JSON. I used the @ notation to output the class name, resulting in this kind of output:

"Timings": { "@type": "Timings", "@value": { "Exp time": 0.003, "Piezo": { "Modulation shape": "Square", "Nb steps": 4 } } }

This is the code for deserializing and serializing: http://imgur.com/a/afaKj

The drawback is that I need to modify JSON.lvlib, and I didn't take the time to update my fork to stay in line with the development of the JSON library. It also needs LabVIEW 2013, because it relies on "Get LV Class Default Value By Name".

The advantage is that the class name is serialized, and loaded back at deserialization. This allows saving and loading child classes of a serializable class, giving more flexibility to the configuration.

If someone is interested I can work on updating the fork with the latest version of JSON.lvlib and publish it.
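The writing side of this pattern, a base class whose children override two methods, might look as follows in Python. It is a rough sketch, not a translation of the LabVIEW code: the method names `data_to_json`, `json_to_data`, and `to_json`, and the `with_type` flag, are assumptions chosen to echo the VI names in the post.

```python
import json


class JSONSerializable:
    """Hypothetical base class; children override the two methods below."""

    def data_to_json(self):
        raise NotImplementedError

    def json_to_data(self, value):
        raise NotImplementedError

    def to_json(self, with_type=True):
        # Optionally wrap the private data with the class name.
        value = self.data_to_json()
        if with_type:
            return {"@type": type(self).__name__, "@value": value}
        return value


class Piezo(JSONSerializable):
    def __init__(self, shape="Square", nb_steps=4):
        self.shape = shape
        self.nb_steps = nb_steps

    def data_to_json(self):
        return {"Modulation shape": self.shape, "Nb steps": self.nb_steps}

    def json_to_data(self, value):
        self.shape = value["Modulation shape"]
        self.nb_steps = value["Nb steps"]


class Timings(JSONSerializable):
    def __init__(self, exp_time=0.003, piezo=None):
        self.exp_time = exp_time
        self.piezo = piezo if piezo is not None else Piezo()

    def data_to_json(self):
        # The nested object is written without a type tag, as in the sample.
        return {"Exp time": self.exp_time,
                "Piezo": self.piezo.to_json(with_type=False)}

    def json_to_data(self, value):
        self.exp_time = value["Exp time"]
        self.piezo.json_to_data(value["Piezo"])


config = {"Timings": Timings().to_json()}
print(json.dumps(config))

# Round trip: load the serialized private data into a fresh object.
restored = Timings(exp_time=0.0, piezo=Piezo("Sine", 1))
restored.json_to_data(config["Timings"]["@value"])
```

Each class only knows how to write and read its own private data; the wrapper with "@type" and "@value" is added in one place, in the base class.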

-

Preserving run-time class with cluster of objects

CharlesB replied to CharlesB's topic in Object-Oriented Programming

Generics may be a solution to drjdpowell's request, but for mine the compiler just needs to be a little smarter to guess what type of object is in my cluster. In this case it's just compile-time type determination. The fact that this type of request arises may be a good sign that LabVIEW users are now more mature and at ease with object-oriented programming, and thus more demanding.

-

Preserving run-time class with cluster of objects

CharlesB replied to CharlesB's topic in Object-Oriented Programming

I was expecting this type of answer... Keeping the type for objects in arrays and clusters wouldn't be too hard, I guess! Thanks

-

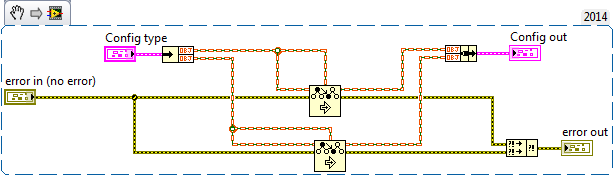

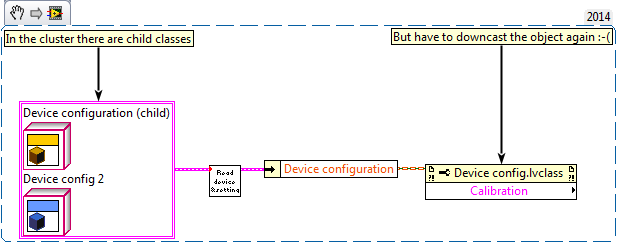

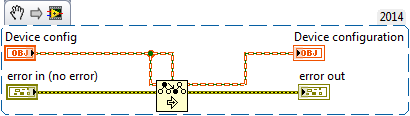

I love how useful the "Preserve runtime class" node is when I wire an object to a more generic subVI, so that I don't have to downcast it afterwards (the first snippet is the subVI, and the second is how I use it). But how can I make it work with a cluster of objects? I tried this in the subVI (third snippet), but with no success: unbundling after my subVI call gives me the base class, and I have to downcast it. Any thoughts on this problem?

-

Sounds correct, I don't really understand the case in which buffer size is computed, but it may be there for a reason. You don't have to worry about leakage in LabVIEW code, since memory is managed by the runtime.

-

Name: Triple buffer
Submitter: CharlesB
Submitted: 21 Oct 2014
Category: *Uncertified*
LabVIEW Version: 2011
License Type: BSD (Most common)

(initial discussion, with other implementations here)

Needing to display large images at high performance, I wanted to use triple buffering in my program. This type of acquisition lets you acquire large data into buffers and use it without copying images back and forth between producer and consumer. This way the consumer thread doesn't wait if a buffer is ready, and the producer works at max speed because it never waits or copies any data.

If the consumer makes the request when a buffer is ready, that buffer is atomically turned into a "locked" state. If no buffer is ready, the consumer waits for one and atomically locks it when it is ready.

How to use

This class allows a producer loop to run at its own rate, independently from the consumer. It is useful when the producer is faster than the consumer and the consumer doesn't need to process all the data (like a display).

Buffers are provided at initialization, through refnums. They can be DVRs, or IMAQ refnums, or any pointer to some memory area.

Once initialized, the consumer gets the refnums with "get latest or wait". The refnum given is locked and guaranteed to stay uncorrupted by the producer loop. If new data has been produced between two consumer calls, the call doesn't wait for new data, and returns the latest one. If not, it waits for the next data.

At each iteration, the producer starts with a "reserve data", which returns the refnum of the buffer to fill. Once the data is ready, it calls "reserved data is ready". These two calls never wait, so the producer always runs at its fastest pace.

Implementation details

A condition variable is shared between producer and consumer. This variable is a cluster holding the indexes "locked", "grabbing", and "ready".

The condition variable has a mechanism that allows acquiring mutex access to the cluster, and atomically releasing it and waiting. When the variable is signaled by the producer, the mutex is re-acquired by the consumer. This guarantees to the consumer that the variable isn't accessed by the producer between the end of the consumer's wait and the consumer's lock.

Reference for CV implementation: "Implementing Condition Variables with Semaphores", Andrew D. Birrell, Microsoft Research
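The index bookkeeping described above can be sketched in Python. This is a minimal illustration under assumptions, not the submitted LabVIEW class: it uses Python's built-in `threading.Condition` instead of a condition variable built from semaphores, and the class and method names are only modeled on the VI names in the description.

```python
import threading


class TripleBuffer:
    """Sketch of a triple buffer guarded by a condition variable."""

    def __init__(self, buffers):
        if len(buffers) != 3:
            raise ValueError("exactly three buffers are expected")
        self._buffers = list(buffers)
        self._cond = threading.Condition()
        self._locked = None    # index held by the consumer
        self._grabbing = None  # index being filled by the producer
        self._ready = None     # index of the latest complete buffer

    def reserve_data(self):
        """Producer: pick the buffer that is neither locked nor ready."""
        with self._cond:  # short critical section, never waits for data
            busy = (self._locked, self._ready)
            self._grabbing = next(i for i in range(3) if i not in busy)
            return self._buffers[self._grabbing]

    def reserved_data_is_ready(self):
        """Producer: publish the filled buffer and wake the consumer."""
        with self._cond:
            self._ready = self._grabbing
            self._grabbing = None
            self._cond.notify()

    def get_latest_or_wait(self, timeout=None):
        """Consumer: lock the latest ready buffer, waiting if there is none."""
        with self._cond:
            if not self._cond.wait_for(lambda: self._ready is not None,
                                       timeout):
                raise TimeoutError("no buffer became ready")
            # Still under the mutex: the producer cannot choose a buffer
            # between the end of the wait and the lock.
            self._locked = self._ready
            self._ready = None
            return self._buffers[self._locked]


tb = TripleBuffer(["B1", "B2", "B3"])
first = tb.reserve_data()           # producer fills B1
tb.reserved_data_is_ready()
second = tb.reserve_data()          # B1 is ready, so the producer fills B2
tb.reserved_data_is_ready()         # B2 replaces B1 as the latest frame
shown = tb.get_latest_or_wait(1.0)  # consumer locks B2 and skips B1
```

If the producer publishes twice before the consumer asks, the older ready buffer is simply dropped, which is the frame-skipping behavior wanted for a display.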

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

No, I pass the pixel pointer to the DLL function, which can then read or write the raw pixels. This is what gives the best performance. To make sure of this, I converted my program to LV2014, replacing my DLL calls with native G array operations and the new ImageToEDVR along with an IPE: performance goes down by 50%!

This apparent "global lock" turned out to be caused by some DLL functions that were still being called in the UI thread.

I have no performance issue with manipulating IMAQ data outside IMAQ functions, so I would say instead that, when done correctly, it can solve performance issues without incurring Vision runtime license costs. I concede that it has the drawback of more complexity, as it adds DLL calls and needs maintainers who know both LabVIEW and C++.

I haven't tested the IMAQ functions for manipulating image pixels, because at design time a few years ago I didn't want to pay for the Vision runtime license on each deployment. But they probably have similar or better performance. As for your benchmark, I'm certainly doing more than just a buffer copy, so it's hard to compare.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

Yes, it also works, and the benchmarking gives the same results as the two other solutions.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

Ooh thanks! I had some processing CLFNs that were specified to run in the UI thread! Now the producer loop frequency is more independent of the display loop.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

I'm not sure I understand how it would help here.

Yes, perfectly sure. Buffers are allocated with different names everywhere, and filled in DLL functions, using IMAQ GetImagePixelPtr.

I have made some benchmarks, measuring both consumer and producer frequency, with the 3 solutions. Display is now faster, now that I have dumped the XControl I used to embed the IMAQ control, which I believe was causing the corruption.

1. Trivial solution: 1-element queue, enqueued by the producer. The consumer previews the queue, displays, and empties the queue, blocking the producer during display
2. 2 queues solution (by bbean)
3. Condition variable (mine)

All three solutions have similar performance in all my scenarios, except when I limit the consumer loop to 25 Hz: in this case the producer in 1. is also limited to 25 Hz. The trivial solution shows image corruption in some cases.

Apart from this case, I never see the producer loop being faster than the consumer: they both stay at roughly 80 Hz, although the producer has some margin. When I hide the display window, the producer goes up to its max speed (200 Hz in this benchmark). When the CPU is doing other things, the rates go down to the same values at the same time, as if both loops were synchronized.

This is quite strange, because in both 2. and 3. the producer loop rate should be independent from the consumer. The consumer really does only display, so there's no reason it would slow down the producer like this... Everything looks as if there were a global lock on IMAQ functions?

Everything is shared reentrant. The producer is part of an actor, with its execution system set to "data acquisition", and the consumer is in the main VI.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

It may be a bit overkill, but the DVR and semaphore are here to protect against race conditions. I actually just translated the code shown in the paper from MS Research. It's important that the operation "unlock, then wait, then re-lock" is atomic, so that the producer doesn't read data in between; otherwise you get inconsistent operation...

Yes, the 2-queue approach is simpler, and it also works, but it's also interesting to have a G implementation of the condition variable, as this pattern may be helpful in some cases. I agree it's not aligned with the usual paradigm in LabVIEW, but overall it was a good exercise. Also, I have a small performance gain with the CV version of the triple buffer.

Maybe the condition variable can be implemented using fewer synchronization mechanisms; I'd have to think about it.

Thanks, I didn't know about the synchronous display stuff.

The producer is actually doing other processing tasks with the frames, and needs to spend as little time as possible on the display, which is secondary compared to the overall acquisition rate, so I need display-related stuff to be wait-free in the producer.

-

Version 1.0

217 downloads

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

UPDATE

Victory!! The corruption problem wasn't related to the triple buffering, but to my display, which was using an XControl. I don't know why, but it looks like my XControl was doing display work after setting the value. Anyway, the problem is gone.

Note that the solution with two queues posted by bbean works perfectly and has similar performance. Kudos! However, I'm keeping my solution, which is more complex but has a fully independent producer.

Triple buffering.zip

How to use

This class allows a producer loop to run at its own rate, independently from the consumer. It is useful when the producer is faster than the consumer and the consumer doesn't need to process all the data (like a display).

Buffers are provided at initialization, through refnums. They can be DVRs, or IMAQ refnums, or any pointer to some memory area.

Once initialized, the consumer gets the refnums with "get latest or wait". The refnum given is locked and guaranteed to stay uncorrupted by the producer loop. If new data has been produced between two consumer calls, the call doesn't wait for new data, and returns the latest one. If not, it waits for the next data.

At each iteration, the producer starts with a "start grab", which returns the refnum of the buffer to fill. Once the data is ready, it calls "ready". These two calls never wait, so the producer always runs at its fastest pace.

Implementation details

A condition variable is shared between producer and consumer. This variable is a cluster holding the indexes "locked", "grabbing", and "ready".

The condition variable has a mechanism that allows acquiring mutex access to the cluster, and atomically releasing it and waiting. When the variable is signaled by the producer, the mutex is re-acquired by the consumer. This guarantees to the consumer that the variable isn't accessed by the producer between the end of the consumer's wait and the consumer's lock.

Reference for CV implementation: "Implementing Condition Variables with Semaphores", Andrew D. Birrell, Microsoft Research

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

Once again, thanks everyone for your suggestions!

But if the consumer is too slow, the producer will have an empty Q2 when starting to fill, and will have to wait.

I have sketched a simple condition variable class (only one waiter allowed) that protects access to a variant and provides two main methods, signal and wait. It is used in a triple-buffer class with 3 main methods: start grab, grab ready, get latest. "get latest" doesn't wait if a buffer has become ready since the latest call, and waits otherwise. Both "start grab" and "grab ready" never wait. I will post it as soon as it's ready.

-
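A single-waiter condition variable of the kind described in the post above can be built from a mutex and a counting semaphore. The Python sketch below is an assumption-laden illustration, not the LabVIEW class: the name `SingleWaiterCondition` is hypothetical, it protects a plain dictionary rather than a variant, and it deliberately supports only one waiting thread.

```python
import threading


class SingleWaiterCondition:
    """Condition variable for at most one waiter, built on a semaphore."""

    def __init__(self):
        self.mutex = threading.Lock()
        self._wakeup = threading.Semaphore(0)
        self._waiting = False

    def wait(self):
        """Call with self.mutex held; returns with it held again."""
        self._waiting = True
        self.mutex.release()
        # A signal sent after the release is not lost: the semaphore
        # remembers it until this acquire runs.
        self._wakeup.acquire()
        self.mutex.acquire()

    def signal(self):
        """Call with self.mutex held; wakes the waiter if there is one."""
        if self._waiting:
            self._waiting = False
            self._wakeup.release()


cv = SingleWaiterCondition()
shared = {"value": None}
result = []


def consumer():
    with cv.mutex:
        while shared["value"] is None:
            cv.wait()
        result.append(shared["value"])


thread = threading.Thread(target=consumer)
thread.start()
with cv.mutex:
    shared["value"] = 42
    cv.signal()
thread.join(timeout=5)
```

The waiter re-checks its predicate in a loop, so the code behaves the same whether the signal arrives before or after the consumer starts waiting.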

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

Forgot to answer on this: if doing this, and the consumer is really slow, you have to adjust the number of buffers depending on the "slowness" of the consumer in order to prevent corruption. So for me it has the flaw of "too bad, it worked on my setup". Moreover, there is still no way for the producer to know which buffer is locked without the race condition problem.

Sorry to say, but a lossy queue on a shared buffer doesn't solve data corruption, as I said before.

I think I fully understand the advantages of dataflow programming. My program has more than 1500 VIs, uses the actor model for communication, and doesn't have any semaphore or complex synchronization. But here we have large buffers, in a pointer-style programming, so dataflow isn't really applicable. I mean, dataflow with pointers isn't really dataflow, since they don't carry the data. These buffers are shared between two threads, and LabVIEW doesn't protect you if you don't have proper synchronization.

Sorry to insist, but triple buffering is just a simple sync pattern that fits my problem if I want the best performance; it is widely used in display-related programming.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

Unfortunately I can't use any async framegrabber option, because I'm doing processing on sequences of images, which rules out a "get latest frame". I really need to implement triple buffering myself, or I'll have to slow down the producer by copying the image to the display at each iteration. It seems that LabVIEW doesn't have a proper synchronization function to do this, but in C++11 it is really straightforward (condition variables are native), so I'll use a CLFN...

It runs at full speed if I disable the display. And I already tested a simple notifier: the display gets corrupted by the acquisition.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

It's a really large app, so I can't share the code, but the producer actually does acquire and process, and the consumer does only display. The camera is awfully fast (2 Mpixels at 400 FPS), and I'm doing very basic processing, so in the end image display is slower than acquisition, which is why I want to drop frames without slowing acquisition down.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

But in my case a slower consumer can drop frames (maybe I should have pointed that out before), as it's just for display. So if the producer is faster, it must not be slowed down waiting for the consumer to process every frame. Does my problem make more sense now?

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

I wish I was overthinking, but with a simple 3-element queue, the producer has to wait when the queue is full. This means lower performance when the consumer task is longer than the producer task. In triple buffering the producer never waits; that's its strength. Take a look at http://en.wikipedia.org/wiki/Multiple_buffering#Triple_buffering if you're not convinced of the advantages.

I don't use IMAQ for acquisition, only for display (I have a Matrox framegrabber, and make calls to their DLLs to fill IMAQ references), so I don't have access to these VIs.

Same remark: the producer is filling a queue, and will have to wait if it's full.

-
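The objection to a bounded queue in the post above can be seen in a few lines of Python. This is only an analogy using the standard `queue` module, not LabVIEW queue behavior in detail: once three frames are pending, the next enqueue either blocks or fails.

```python
import queue

# A bounded queue with room for three frames, as in the 3-element proposal.
frames = queue.Queue(maxsize=3)
for frame_number in range(3):
    frames.put_nowait(frame_number)

try:
    # A blocking put() would stall the producer here until the consumer
    # dequeues something; put_nowait() exposes that as an exception.
    frames.put_nowait(3)
    producer_would_block = False
except queue.Full:
    producer_would_block = True

print(producer_would_block)
```

A lossy variant that discards the oldest element avoids the stall, but when the elements are references to shared buffers it does not tell the producer which buffer the consumer is currently reading.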

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

Complex locking is here to be sure that P won't overwrite a buffer locked by C in a race condition, because P won't be aware of it. Both events "C wait ends" and "C locking buffer" must happen atomically, or you have this race condition.

With your solution, naming the queues PQ and CQ: if C is longer than P, PQ might be empty when P is ready to fill a buffer, and P has to know which buffer is held by C in order not to corrupt it. So P has to wait for C to complete its operation and fill PQ, which is against the purpose of triple buffering, where P acquires at full frequency.

-

How to implement triple buffering

CharlesB replied to CharlesB's topic in Machine Vision and Imaging

In my case buffers are IMAQ references, so I don't think I need DVRs, since IMAQ refs are already pointers to data.

If I give it a try with queues, I need a P->C queue for the "ready" event. At grab start, P determines which buffer to fill: the one that is neither locked nor ready. At grab end, P pushes to the "ready" queue. If C is waiting, the just-filled buffer is marked as locked; if not, it is marked as ready (and the previous lock remains).

But P also needs to know whether a buffer has been locked by C during a fill, like in the third iteration on my original diagram. So we must have another queue, C->P, that P will check at grab start. This brings the following race condition (P and C are in different threads) if a C request happens at the same time as a grab ends:

1. B1 is ready, P is filling B2, and B3 is locked
2. C queries the "ready" queue, which already has an element (no wait), B1
3. C thread is put on hold
4. P thread wakes with a grab end, and P pushes B2 to the "ready" queue
5. P sees no change in the "locked" queue, and chooses to fill B1, since B3 is still locked
6. C thread finally wakes, and pushes B1 to the "locked" queue

But P has already chosen to fill the B1 buffer, so B1 will be corrupted when C reads the data.

So we need a semaphore around this, so that P doesn't interrupt the sequence "ready dequeue, lock queue". The semaphore will be acquired by C before waiting on the "ready" queue and released after pushing to the "locked" queue, and P will acquire it at grab start. I don't think there's a deadlock risk, but I'm not sure...

That's why condition variables are great: you have an atomic wait and lock, which prevents such race conditions. I'm pretty sure condition variables can be implemented with queues and semaphores, but I don't know how.

But how does the original FIFO know it shouldn't write to the memory location it gave to you? If the producer acquires data at a higher rate than the consumer, there must be a mechanism to prevent data corruption if buffers aren't copied between producer and consumer.

So if you put a large array in a queue, and dequeue it in another loop, no data copy is ever made?
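The interleaving with buffers B1, B2, and B3 described in this post can be replayed deterministically. The Python sketch below is a single-threaded simulation written for illustration only: the function names and the `atomic_lock` flag are hypothetical, and the "queues" are reduced to plain variables holding the latest value.

```python
def pick_fill_buffer(locked, ready):
    """Producer's rule: fill the buffer that is neither locked nor ready."""
    return next(b for b in ("B1", "B2", "B3") if b not in (locked, ready))


def replay(atomic_lock):
    """Replay the scenario; return (buffer P fills, buffer C reads)."""
    # Initial state: B1 is ready, P is filling B2, B3 is locked by C.
    locked_seen_by_p, ready = "B3", "B1"

    # C takes B1 from the "ready" queue.
    c_buffer = ready
    if atomic_lock:
        # With the semaphore, C publishes its lock before P can run.
        locked_seen_by_p = c_buffer
    # Otherwise the C thread is put on hold right here.

    # P finishes B2 and pushes it to the "ready" queue.
    ready = "B2"
    # P starts the next grab using the lock information it can see.
    p_buffer = pick_fill_buffer(locked_seen_by_p, ready)

    # Without the semaphore, C only now publishes its lock on B1.
    locked_seen_by_p = c_buffer
    return p_buffer, c_buffer


racy = replay(atomic_lock=False)
safe = replay(atomic_lock=True)
print(racy, safe)
```

In the racy replay both threads end up on the same buffer, while making "dequeue ready, publish lock" one indivisible step sends the producer to the remaining free buffer.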