Posts: 4,943

Days Won: 308

Everything posted by ShaunR

-

Not that so much but he might be right and I've been using an eggcorn for my entire life.

-

A malapropism is similar but more common for comedy because it doesn't need to make sense in context (so it's easier and funnier).

-

I love Rolf's eggcorns. I never point them out because he knows my language better than I do.

-

It was meant tongue in cheek and specifically chosen because you've done it a million times (probably). I wonder what the forum mark-up is for that?

-

Don't have that problem with dynamic typing. Typecasting is the "get out of jail" card for typed systems. This one seem familiar?

    linger Lngr={0,0};
    setsockopt(Socket, SOL_SOCKET, SO_LINGER, (char *)&Lngr, sizeof(Lngr));
    DWORD ReUseSocket=0;
    setsockopt(Socket, SOL_SOCKET, SO_REUSEADDR, (char*)&ReUseSocket, sizeof(ReUseSocket));
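For comparison, here is a minimal sketch of the same two calls against the POSIX sockets API, where `setsockopt` takes its option value as `const void *` rather than Winsock's `const char *`, so the casts disappear. The function names `configure_socket` and `reuse_enabled` are made up for illustration, and the sketch assumes a POSIX system (for example Linux) rather than Windows.

```c
#include <assert.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: apply the two options from the post to a socket.
   Returns 0 on success, -1 if either setsockopt call fails. */
int configure_socket(int sock)
{
    struct linger lngr = {0, 0};  /* l_onoff = 0: lingering disabled */
    int reuse = 0;                /* 0: SO_REUSEADDR off, as in the post */

    /* POSIX declares the option value as const void *, so no cast is needed.
       Winsock declares it as const char *, hence the (char *) casts there. */
    if (setsockopt(sock, SOL_SOCKET, SO_LINGER, &lngr, sizeof(lngr)) != 0)
        return -1;
    if (setsockopt(sock, SOL_SOCKET, SO_REUSEADDR, &reuse, sizeof(reuse)) != 0)
        return -1;
    return 0;
}

/* Hypothetical helper: read SO_REUSEADDR back.
   Returns 1 if set, 0 if clear, -1 on error. */
int reuse_enabled(int sock)
{
    int value = 0;
    socklen_t len = sizeof(value);

    if (getsockopt(sock, SOL_SOCKET, SO_REUSEADDR, &value, &len) != 0)
        return -1;
    assert(len == sizeof(value));  /* the option is reported as an int */
    return value != 0;
}
```

The cast in the Winsock version is not converting anything; it only satisfies the declared parameter type, which is the point being made about typed systems.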

-

Oddly specific. I would want a method of being able to coerce to a type for a specific line of code where I thought it necessary (for things like precision) but generally...bring it on. After all, in C/C++ most of the time we are casting to other types just to get the compiler to shut up. They broke PHP with class scoping. They are now proposing breaking it more with strict typing. TypeScript is another. It's the latest fad.

-

Actually, your particular problem is that the variant type is only a half-arsed variant type. It's easy to get something into a variant, but convoluted and unwieldy to get it out again. I think "Generics" were supposed to resolve this but they never materialised. I shake my head at the recent push in the software industries to strict type everywhere. Most of the programming I do is to get around strict typing.

-

If only there were a way that one could use strings to define the event. We could call them, let me see, "Named Events"?
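As a rough illustration of the idea (not LabVIEW's event mechanism, and not anything NI has proposed), a string-keyed event table can be sketched in C as below. Every name here (`event_register`, `event_fire`, `count_hits`, the table sizes) is hypothetical, and the sketch only works inside one process, whereas the appeal of named events would be reaching across applications.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_EVENTS 16
#define MAX_NAME   32

/* Hypothetical handler signature: receives the event name and user data. */
typedef void (*event_handler_fn)(const char *name, void *data);

typedef struct {
    char name[MAX_NAME];
    event_handler_fn handler;
} named_event;

static named_event registry[MAX_EVENTS];
static size_t registry_count = 0;

/* Register a handler under a string name.
   Returns 0 on success, -1 if the table is full or the name is too long. */
int event_register(const char *name, event_handler_fn handler)
{
    assert(name != NULL && handler != NULL);
    if (registry_count >= MAX_EVENTS || strlen(name) >= MAX_NAME)
        return -1;
    strcpy(registry[registry_count].name, name);
    registry[registry_count].handler = handler;
    registry_count++;
    return 0;
}

/* Call every handler registered under `name`; returns how many ran. */
int event_fire(const char *name, void *data)
{
    int fired = 0;
    for (size_t i = 0; i < registry_count; i++) {
        if (strcmp(registry[i].name, name) == 0) {
            registry[i].handler(name, data);
            fired++;
        }
    }
    return fired;
}

/* Example handler: increments the int that `data` points to. */
void count_hits(const char *name, void *data)
{
    (void)name;
    int *hits = data;
    (*hits)++;
}
```

Because lookup is by string, the sender and receiver only need to agree on a name such as `"data.ready"`, not share a typed reference.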

-

There is a "best practices" document (this too) but I suspect you are looking for a less abstract set of guidelines.

-

We have the Start Asynchronous Call for creating callbacks in LabVIEW. There are a couple of issues with what you are proposing in that callbacks tend not to have the same inputs and outputs. What we definitely don't have, at all, is a way of implementing callbacks for use in external code (in DLLs). While what I'm proposing *may* suffice for your use case, it's the external code use case where LabVIEW is lacking (which is why it makes Rolf nervous).

-

It's only software. One isn't constrained by physics.

-

None of that is a solution though; just excuses of why NI might not do it.

-

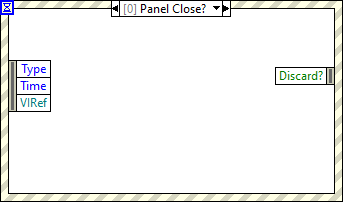

We've had these arguments before. I always argue that it's not for me to define the implementation details, that's NI's problem. We already have blocking events (the Panel Close? greys out the "Lock Front Panel" checkbox because it's synchronous). The precedent is already there. What I think we can agree on is that the inability to interface to callback functions in DLLs is a weakness of the language. I am simply vocalising the syntactic sugar I would like to see to address it. Feel free to proffer other solutions if events are not to your liking but I don't really like the .NET callbacks solution (which doesn't work outside of .NET anyway).

-

Not really, although I suppose it is in the same category as what I would propose. I mean more like the "Panel Close?" event. The callback would be called (and an event generated). You would then do your processing in the event frame and pass the result to the right hand side to pass back to the function (like the "Discard?" in the "Panel Close?" event). Of course, currently we cannot define an equivalent to the "Discard?" output terminal. They'd have to come up with a nice way to enable us to describe the callback return structure.
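On the external-code side, the pattern being described looks roughly like the C sketch below: a library routine calls back into the caller and uses the callback's return value, much as "Discard?" feeds back into "Panel Close?". All names (`close_query_fn`, `library_request_close`, `veto_while_busy`) are hypothetical and stand in for whatever a real DLL would export.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical callback type: the handler returns true to discard (veto)
   the close request, mirroring the "Discard?" terminal. */
typedef bool (*close_query_fn)(int panel_id, void *user_data);

/* Hypothetical library routine standing in for code inside a DLL.
   Returns true if the panel was closed, false if the callback vetoed it. */
bool library_request_close(int panel_id, close_query_fn on_close_query,
                           void *user_data)
{
    bool discard = false;

    /* The library blocks here until the callback returns its answer. */
    if (on_close_query != NULL)
        discard = on_close_query(panel_id, user_data);

    return !discard;
}

/* Example handler: veto the close while the caller's busy flag is set. */
bool veto_while_busy(int panel_id, void *user_data)
{
    (void)panel_id;
    assert(user_data != NULL);
    const bool *busy = user_data;
    return *busy;
}
```

The return type of `close_query_fn` is the "callback return structure" that would need describing: the library cannot continue until the handler has produced it, which is why the call is synchronous.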

-

Indeed. However, NI *should* have been aggressive in correcting that. I agree. Lazarus is the Open Source equivalent and I use a variation called CodeTyphon. But it highlights that even if LabVIEW is considered "vendor software" it should be on those lists as it is more of a programming language than HTML/CSS (as you point out). There could be no argument against inclusion, IMO.

-

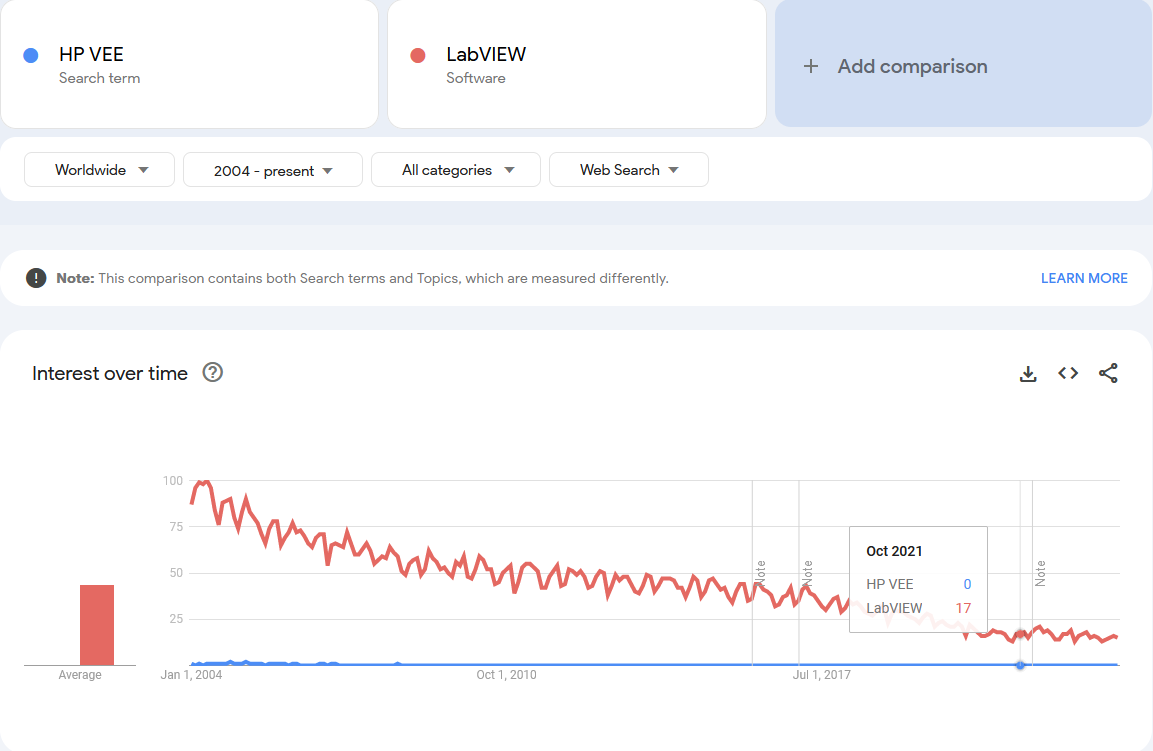

LabVIEW never seems to even be listed on programming statistic websites. I refuse to believe (also) that LabVIEW has fewer programmers than, say, Raku. Hopefully Emerson will aggressively promote LabVIEW on these kinds of sites to raise visibility. NI failed to do so consistently.

-

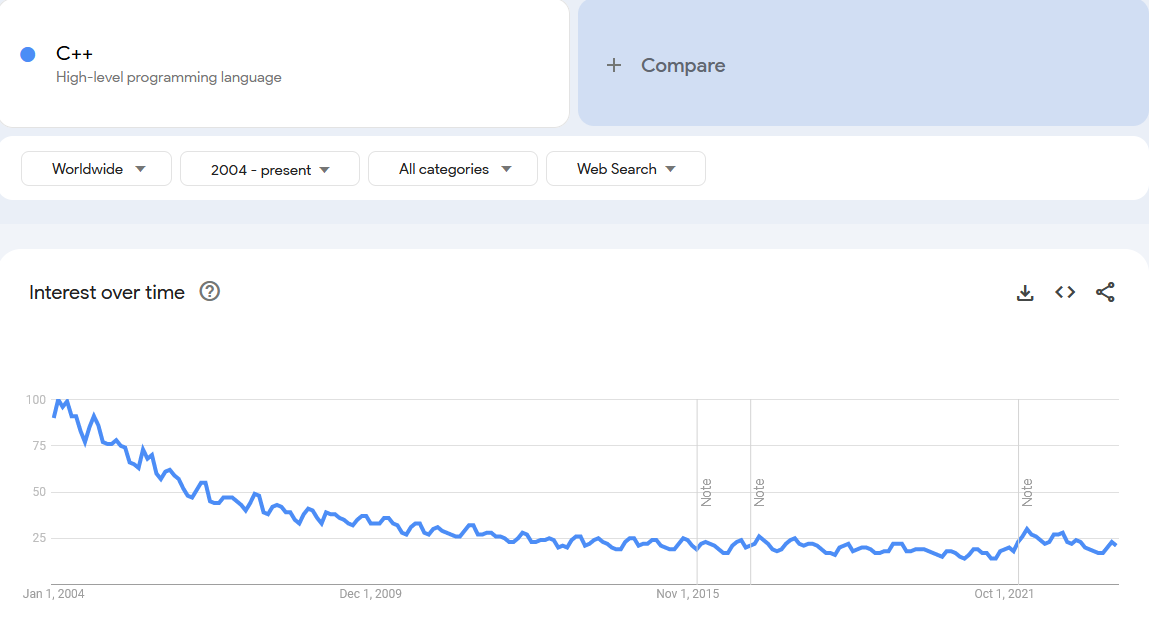

I've always been suspicious of Google Trends because it doesn't give context. The following is C++ but I refuse to believe it's marching to oblivion. But it's fun to play with.

-

Happy? Or do you mean grateful for small mercies? You wouldn't have been happier with a documented and example-laden product that you can ask questions about and actually works? No. There is too much of this thinking around nowadays and it annoys the hell out of me. We (programmers generally) used to be better than this. The rigor and community that made OpenG tools is definitely gone now. I'm not quite sure who is to blame for this mindset creeping in but it's just not good enough. BTW, I also get just as annoyed with "Beta" releases or, as I call it, "Still Not Working". Oh well. Back in the private toolkit it goes. Maybe one day I'll get around to it but it's clear that even if I did release it to the community as-is, it'd just be the spawning point for variants which, quality wise, would be just as bad, if not worse.

-

Different philosophies that we probably will never agree on. But in this particular case, I have working code with no known bugs and the price for using it is for someone to take responsibility and ownership of it.

-

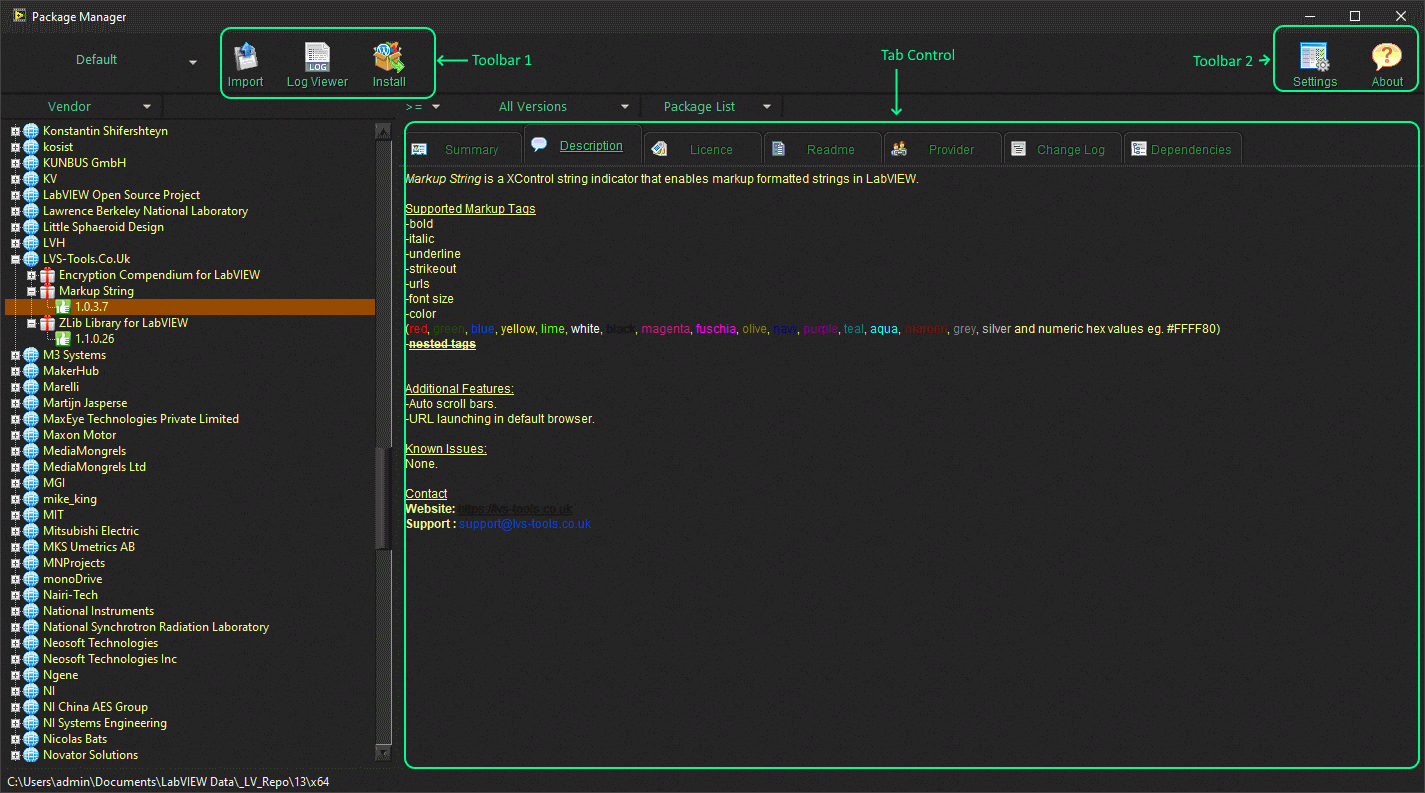

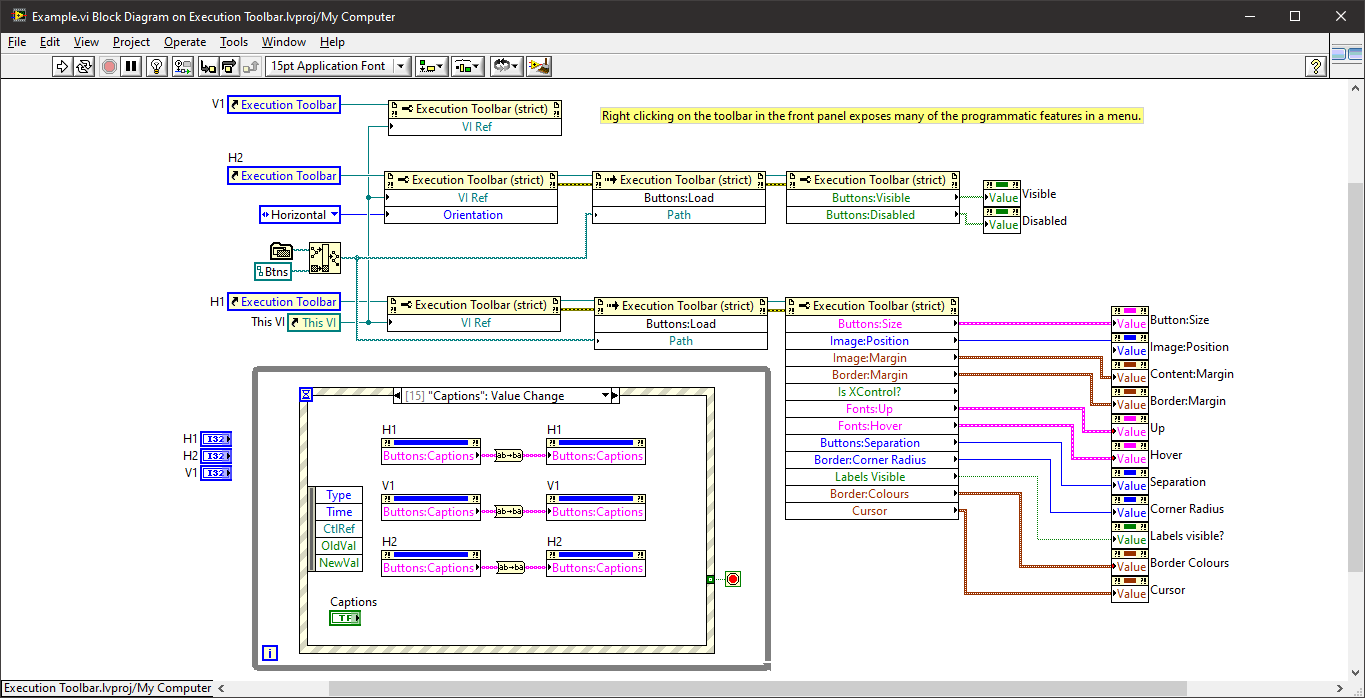

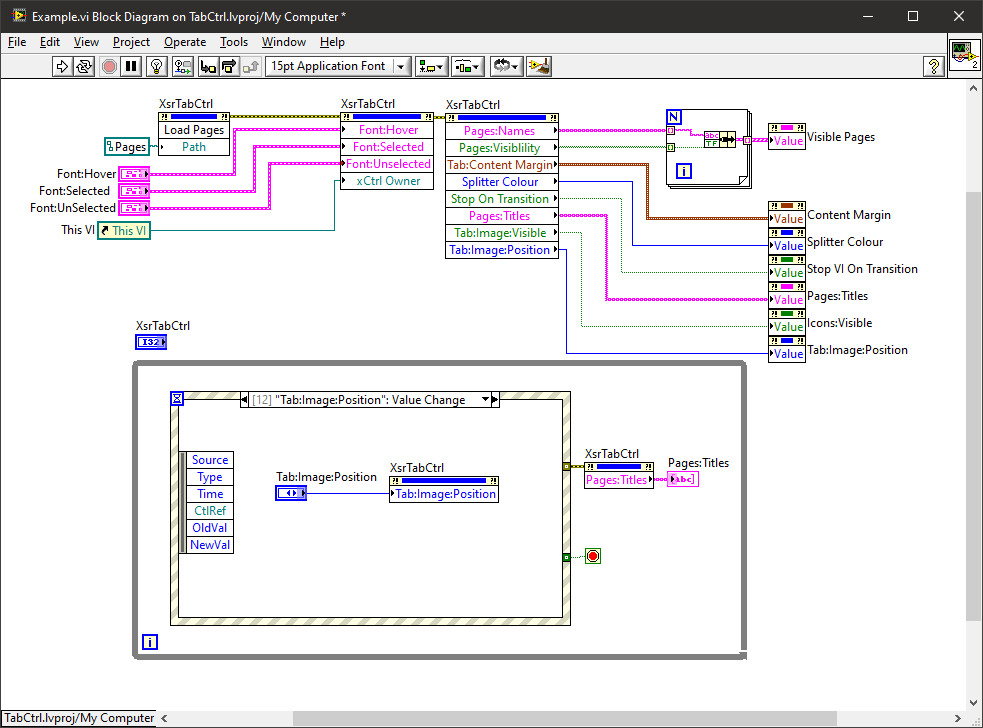

Support is always necessary. That never happens. It's why there are so many half-finished and buggy projects on github. If I'm not prepared to release and support it, I'll either find someone that can or not release at all. What you've never had, you don't miss, right? One day I might get the energy and time-window, but don't hold your breath.

Both xControls use the 2d picture control for the button/tab bar. You can add buttons/pages either at design or run-time. Where things might be different from how one envisages these things working is that the buttons are, in essence, callbacks. Pressing a button invokes a VI (which is why it's called the Execution toolbar) but most of the features are around managing the buttons/panels. Both the toolbar and tab bar work very similarly that way (tab bar obviously shows the VI in a sub panel). They are pretty feature complete. I suppose the tab bar could add an optional close icon but I never needed it. Hmmm. Maybe I'll add that now .... perhaps not.

-

I Con not install dsc by vipm for labview2016

ShaunR replied to Danial Nasser's topic in LabVIEW General

I like your optimism. Maybe you are right.

Emerson Reports Fourth Quarter and Full Year 2023 Results; Provides Initial 2024 Outlook

Interestingly, Europe seems to be their biggest growth.

-

Device drivers are on a separate installation DVD (it'll be tens of GB). While they are probably on the FTP site somewhere, you can download it from the main NI website.

-

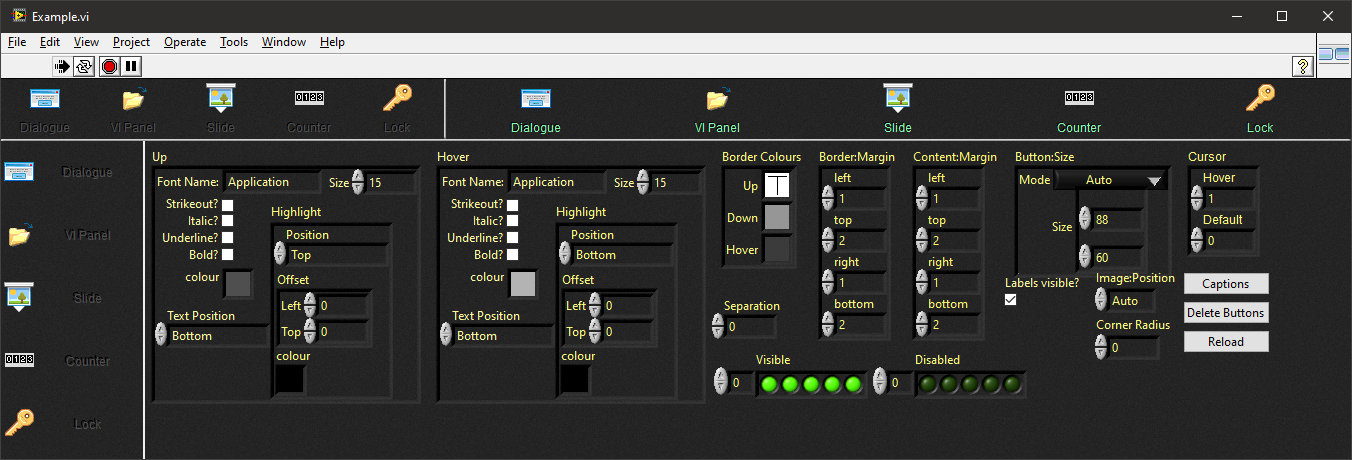

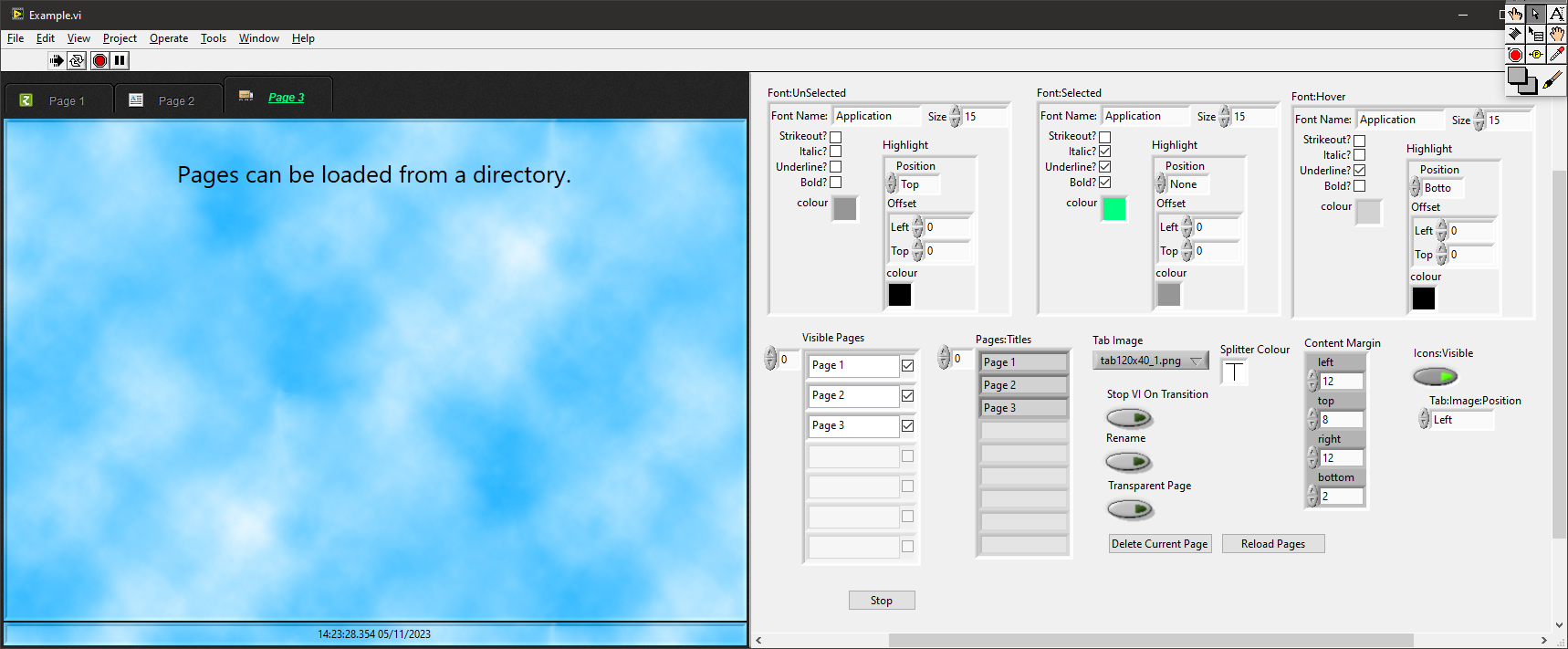

A long, long time ago in a galaxy not so far away I developed two xControls in order to create a VI installer. The VI Installer hasn't really gone anywhere but the xControls that it used were for a Toolbar and a Tab Control. (The string indicator which supports colours on the tab page below was another but that is already available - Markup String).

The two xControls are pretty well defined and feature complete but for them to be introduced into the wild, they need "productionising" (documenting and tidying up FP/diagrams and creating VIPM installers). I've sat on them for years so it's not going to be me that does the last few percent and I don't want to be the one that people go to for support (I have enough out there already that I have to support). So if someone wants to take up the mantle, give me a shout and I'll figure out how to release them.

The xControls are self-contained with their own examples:

The "Execution Toolbar" xControl example.

The Tab Control xControl example.

If no-one is interested then I'll keep them in my private box and sit on them for another decade.

-

Not a huge leap but an improvement on events (IMO) would be Named events (à la named queues). Events that can be named but (perhaps more importantly) can work across applications in a similar way that Windows messages can be hooked from an application, all driven from the Event structure. I initially experimented with a similar technology when VIMs were first discovered (although it didn't work across applications). Unfortunately, they broke the downstream polymorphism and made it all very manual with the Type Specialization Structure - so I dropped it.

Another is callbacks in the Event Structure. Similar to the Panel Close event, they would have an out that can be described.

But getting on to the LabVIEW GUI. That needs to go completely in its current form. It's inferior to all other WYSIWYG languages because we cannot (reliably) create new controls or modify existing ones' behaviour. They gave us the 1/2 way house of XControls but that was awful, buggy and slow. What we need is to be able to describe controls in some form of mark-up so we can import to make native controls and indicators. Bonus points if we can start with an existing control and add or override functionality. All other WYSIWYG languages allow the creation of controls/indicators. This would open up a whole new industry segment of control packs for LabVIEW like there is for the other languages and we wouldn't have to wait until NI decide to release a new widget set. At the very least allow us to leverage other GUI libraries (e.g. imgui or wxWidgets).

-

He should have. All the stuff he needed was on the NI site that I gave him a link to.
