-

Posts

5,001 -

Joined

-

Days Won

311

Everything posted by ShaunR

-

They used to be called VI Macros (*.vim). There is a thread on here somewhere (can't find it ATM) that talks about them extensively with examples and such.

-

Well. There is also Windows 10 IoT, but the intent seems to be one OS to rule them all and they (M$) have stated so. We shall see whether it is just a rebranding of different OSs to Windows 10 or a more micro-kernel approach with .NET at the centre. Regardless, M$ have proven their disregard for privacy so, from my perspective, it makes no difference.

Well, I've been using browsers for a long time now and there are caveats here. A browser JS engine is single threaded and usually buckles with more than a few hundred KB/s. They also tend to only run the JS in the tab that is front-most. From a cursory glance at some of the demo videos, it appeared to be just an HTML and JavaScript editor (maybe an embedded engine too) in the UI and probably the usual NI Webserver somewhere in the background, as they were using HTTP requests. I didn't see any use of HTTPS, which really should be the de facto standard nowadays. If that's all it is then I'm no better off than now, except that currently I can also use Websockets and WebRTP et al. Perhaps I'm jumping the gun here, though. But I will be looking very closely at this in the next couple of weeks to see exactly what's there.

-

NI already have a Windows RT platform that they distribute with their PXI racks. I don't think it would be a great leap from that, especially when M$ are pushing previous Windows Embedded systems to Windows 10.

-

I don't think that is the strategy. I think the intention is to only have to deal with one OS on all NI platforms (Windows everywhere).

-

Don't pick one and force everything into it. Partition your software (messaging, state machines, sequential operations, UI, configuration etc.) and decide on a scheme for each part. Some will fit with a QSM; others will not (broadcasting changes, for example), so use messaging to bridge the gap. You may end up with multiple QSMs for a number of subsystems. There is no "One Architecture To Rule Them All", even within a single program. If it is a small application then you might find "most" of it will fit with a QSM but, until you break it down, you will not know.
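As a rough text-language illustration of that partitioning (LabVIEW itself is graphical, so this is only an analogy), here is a minimal Python sketch with two queued state machines bridged by a small publish/subscribe hub. All names (`Broadcaster`, `Acquisition`, `Display`, the `new_data` topic) are hypothetical and not taken from any real project.

```python
from collections import deque


class Broadcaster:
    """Tiny publish/subscribe hub used to bridge subsystems (hypothetical)."""

    def __init__(self):
        self._subscribers = {}

    def subscribe(self, topic, callback):
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic, payload):
        # Deliver the payload to every subscriber of this topic.
        for callback in self._subscribers.get(topic, []):
            callback(payload)


class QueuedStateMachine:
    """One QSM per subsystem: states are dequeued and executed in order."""

    def __init__(self, bus):
        self.bus = bus
        self.queue = deque()
        self.log = []

    def enqueue(self, state, data=None):
        self.queue.append((state, data))

    def run(self):
        # Run until the state queue is empty.
        while self.queue:
            state, data = self.queue.popleft()
            getattr(self, "state_" + state)(data)


class Acquisition(QueuedStateMachine):
    def state_init(self, _):
        self.log.append("init")
        self.enqueue("acquire", 3)

    def state_acquire(self, count):
        self.log.append("acquire")
        # Broadcasting does not fit the QSM pattern, so it goes via the bus.
        self.bus.publish("new_data", list(range(count)))
        self.enqueue("stop")

    def state_stop(self, _):
        self.log.append("stop")


class Display(QueuedStateMachine):
    def __init__(self, bus):
        super().__init__(bus)
        # Incoming broadcasts are turned into states on this QSM's own queue.
        bus.subscribe("new_data", lambda samples: self.enqueue("update", samples))

    def state_update(self, samples):
        self.log.append(("update", samples))


bus = Broadcaster()
acq = Acquisition(bus)
ui = Display(bus)
acq.enqueue("init")
acq.run()
ui.run()
```

Each subsystem keeps its own queue and state handlers, and only the broadcast goes through the shared bus, so either side can be swapped for a different scheme without touching the other.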

-

Load lvlbp from different locations on disk

ShaunR replied to pawhan11's topic in Development Environment (IDE)

I have had situations like this and the only option was to look at the XML. In there you may find absolute paths (c:\myapp\myprogram) mixed with relative paths (..\myprogram). Change all the absolute paths to relative ones and it may resolve this.
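If there are a lot of entries to fix, the absolute-to-relative clean-up can be scripted. The following is a minimal Python sketch, not a tested tool for LabVIEW files: it assumes the paths live in XML attributes (the `URL` attribute and the element names in the usage example are assumptions), and it only rewrites attribute values that look like absolute Windows paths with a drive letter. Work on a copy of the file.

```python
import ntpath
import xml.etree.ElementTree as ET


def relativise_paths(xml_text, project_dir):
    """Rewrite absolute Windows paths in attributes relative to project_dir.

    Returns the new XML text and the number of attributes changed.
    """
    root = ET.fromstring(xml_text)
    changed = 0
    for elem in root.iter():
        for name, value in list(elem.attrib.items()):
            drive, _ = ntpath.splitdrive(value)
            if not drive or not ntpath.isabs(value):
                continue  # already relative, or not a path at all
            try:
                relative = ntpath.relpath(value, project_dir)
            except ValueError:
                continue  # different drive: no relative form exists
            elem.set(name, relative)
            changed += 1
    return ET.tostring(root, encoding="unicode"), changed


# Hypothetical input: one absolute and one relative entry.
sample = (
    r'<Project><Item URL="c:\myapp\myprogram\lib.lvlibp"/>'
    r'<Item URL="..\other\a.vi"/></Project>'
)
fixed, count = relativise_paths(sample, r"c:\myapp\project")
print(count)
print(fixed)
```

`ntpath` is used instead of `os.path` so the Windows path rules apply even if the script is run on another OS.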

-

I've no idea what this means.

Well. It's a bit off topic but... I used them for visual inspection. The RPi3 has a CSI-2 interface (many of these boards do). I think it was 4 lane (4Gbps) but I only needed two. You get wireless and HDMI for free. If you need a bit more processing power then you can add something like a Saturn FPGA for image processing (LX45, the same as in some of the cRIOs). The customer has tasked one of their engineers to investigate creating a GigE to CSI-2 converter because they eventually want to use and reuse Basler cameras on other projects, but we were unable to find such a device (links please if anyone has them). They already had a LabVIEW program that they used a bit like NXG and I connected that to the subsystem with wss Websockets.

For the ultra low end devices, I use them to add data logging, HMI and watchdog capabilities to existing hardware (like the Wago that was linked earlier and cRIO). You only need an Ethernet port and HDMI for that and, as a bonus, you get more USB ports. There is a whole multitude of choices here: with or without Wifi, LoRa, more or fewer USB ports, GPIO etc. The only technology lacking at the moment is Gigabit Ethernet, but boards with it are starting to come through now. I no longer think of these types of devices as "hobby" devices.

-

Yeah, no. I've never seen a patronising waveform before. That's not happening this time and probably hasn't happened for many years. We've seen LabVIEW stagnate with placebo releases and there are so many maker boards for less than $100, hell, less than $10, it's no longer funny (and you don't need $4000 worth of software to program them). A while ago I put a couple of real-time Raspberry Pis in a system instead of NI products. You love Arduino, right? There is a shift change in the availability of devices and the tools to program them, and it has been hard to justify LabVIEW for a while now except if a company was already heavily locked in. If it's not working on Linux then that's a serious hobbling of the software since I, like many others, am now looking to expunge Windows due to privacy concerns for myself and my clients.

-

The biggest problem for me was that they have changed all the wires. I spent about 10 minutes trying to figure out why a for loop wasn't self-indexing because a normal scalar value looks like a 1D array wire. At one point I was sure that it should have and was cursing that they had removed auto-indexing. Then I made the scalar into an array (which was a lot harder than it should have been) and it looked like a 2D array wire. I was probing around with the wiring tool trying to figure out what the wires actually were because my mind's auto-parser just said everything wouldn't work. Add to that that all the primitive icons now look the same to me, only distinguished by some sort of braille dot encoding, and I thought "I'm glad I won't ever have to use this". I think NI have recreated HP VEE to replace LabVIEW.

-

If you think of it more as a replacement for MAX with a VI editor built in, then it may make a lot more sense than a new LabVIEW. There is a very obvious emphasis on distribution, system configuration and deployment, many of the things that MQTT is doing for maker boards and IoT devices. I don't think it is a coincidence that wireless devices are among the first products to be supported rather than cRIO type devices.

Interestingly, I also think it is a case of be careful what you wish for. I have asked for many years for source code control applicable to LabVIEW because other SCC systems treat VIs as binary blobs (good luck with that merge). So really we could only use them for backup and restore. Well. Now LabVIEW VIs are text (XML, it seems) so it is absolutely possible, in theory, to do diff merges. I have also complained about creating custom controls. Well. We will have that now too, via C#. So I can write IDE controls and project plugins in C#. I can bind to the NI assemblies (in C#) for DAQ and Instrument Control. I can use SVN with C#, so what do I need LabVIEW for?

If you *want* to use LabVIEW then there is an IDE, although rehashing all the icons will only alienate existing LabVIEW programmers. We will be inferior to C# ones because we can only do a subset of their capabilities on our own platform. Over time, NI customers will not renew their LabVIEW SSP packages, which gives NI the excuse to not support it. All the experienced LabVIEW programmers will either move to something else because no jobs are available (see my previous comment about Python in T&M) or retire. Large corporations will be hiring C# programmers to rewrite the LabVIEW code that failed conversion. Then... it gets worse. The best we can ask for at this point is that NI open source LabVIEW 2017 and give it to the community as a going away present.

-

I don't blame the language. C# is just a front end for .NET (like VB.NET et al.). It's .NET I blame and have always blamed, and I don't care what language you put in front of it, including LabVIEW. It will be worse. But there is more to this than a language choice. It is quite clear that NI have jumped in with both feet onto the Windows 10 platform which, don't forget, is attempting to corner all hardware platforms with one OS. There is some sense in that since Win 10 has JIT compilation of .NET (perfect for VI piecemeal compilation), a background torrent system (push deployment and updates whether you like it or not) and plenty of ways to get data for mining and DRM enforcement (Customer Improvement Programs). This is the start of Software as a Service for NI so you won't need LabVIEW in its old form.

-

Well. LabVIEW was the best cross development platform. That is why I used it. I could develop on Windows and, if I managed to get it installed, it would just work on Linux (those flavours supported). Over time I had to write other cross platform code that LabVIEW couldn't do or, rather, wrote cross platform code so that LabVIEW could use it on other platforms. I used the Codeblocks IDE (GCC and MinGW compilers) for C and C++ DLLs or the Lazarus IDE for Free Pascal (in the form of Codetyphon). The latter can use several GUI libraries such as Qt and GTK through to Windows or its own fpGUI.

However, those things aren't the problem with Linux. It is the distribution, and the Linux community is in denial (still) that there is a problem. So recently I have had a pet project which was a "LabVIEW Linux" distribution. I created my own distro where I could ensure that no other idiot could break the OS just because they decided to be "cool" or wanted to use "this favourite tool". I basically froze a tested long term support distro, added all the tools I needed, removed all the "choice" of what you could install, then made it into a custom distro that I could micro-manage updates with (like OpenSSL). It had LabVIEW, VISA and DAQ installed, along with a LAMP stack, and all my favourite LabVIEW tool-kits were pre-installed. It could be run as a live CD and could be deployed to bare bones PCs, certain embedded platforms and even VPS. I solved (or added to) the distribution problem by making my own.

Here's a funny anecdote. I once knew of a very, very large defence company that decided to rewrite all their code in C#. They tried to transition from C++ on multiple platforms to C# but, after 6 years of re-architecting and re-writing, they canned the project because "it wasn't performant" and went back to their old codebase.

-

Silverlight was definitely the stake through the heart of Web UI Builder, just as (I think) C# will be the same for LabVIEW. I've been actively moving over to Linux for a while now and .NET has been banned from my projects for donkeys' years. So doubling down with a Windows only .NET IDE is a bit perplexing. Especially since, at this point, I consider Windows pretty much a legacy platform for T&M, IoT, DAQ and pretty much everything else LabVIEW is great for. When Windows 7 is finally grandfathered, Windows will no longer be on my radar at all.

-

Node Red FTW

-

What are these long-term plans? I did manage to get it working on another PC and, whilst there were some nice IDE features (tabbed panes with split), I couldn't use it for proper work. It doesn't address any of the long standing features we have asked for and it is quite slow in responsiveness. Graphs are very basic (no antialiasing? no cursors?) and, I believe, it is Windows only (was that C# I saw in its guts?). If they had added the MDI style of UI to LabVIEW 2017 I would have been over the moon!

As regards your comment about relevancy: is it similar to Test and Measurement where, in the last year, Python has overtaken LabVIEW as the language of choice (at least in the UK, that is)?

-

Lots of examples on the net.

-

Maybe. But is there now a proper Source Code Control? Can we make our own native controls? Or are we looking at just another BridgeVIEW-like environment?

-

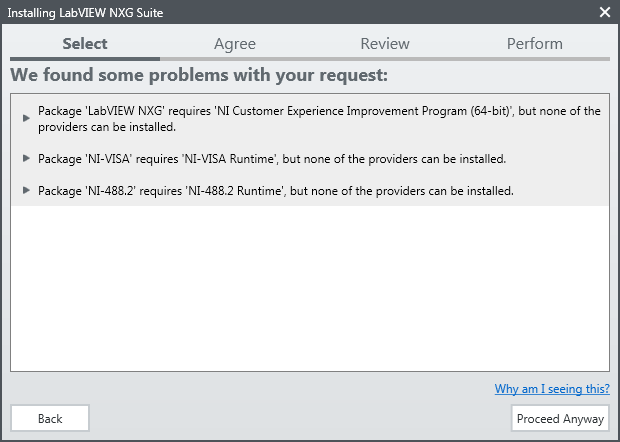

Well. I was pleased to see that they finally created an NI package manager. I wasn't impressed when they opted for a 3rd party company to provide one (and, of course, said so). That's about as far as I got, though. LabVIEW 2017 installed fine!

-

This is why I prefer the "TARGET_TYPE" which doesn't have this limitation.

-

I highly recommend you use the native property nodes which are guaranteed to work on all supported platforms. The "Execute" is a last resort and I don't see anything in your code which cannot be obtained with property nodes.

-

AQ gets quite irate when people talk about LabVIEW's "garbage collector". I will defer to his expertise and definition. Just to get in before someone pipes up about THAT function... "Request Deallocation" is not a garbage collector in any sense.

-

View Executable on Web browser

ShaunR replied to Cat's topic in Remote Control, Monitoring and the Internet

Viewing is easy. Just save an FP image to a file and reference it in a web server's HTML page (NI Webserver, Apache, NGINX, whatever). Control is much harder, which is why many of us use Websockets. The NI webserver is based around calling individual functions (VIs) in a command/response manner from the browser, which is why they have Remote Panels, as Smithd has mentioned. There are indirect methods like VNC or surrogate "SendKeys" software, but direct control of a LabVIEW application requires you to embed a server in your application which can respond to browser clicks.
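To make the view-only case concrete, here is a minimal Python sketch that uses the standard library's `http.server` as a stand-in for whichever web server you prefer. It assumes the LabVIEW application periodically overwrites an image file in a folder; the file name `panel.png`, the refresh interval and the port are all assumptions.

```python
import functools
import http.server
from pathlib import Path


def write_viewer_page(directory, image_name="panel.png", refresh_s=2):
    """Write an index.html that shows the saved image and reloads itself."""
    page = (
        "<!DOCTYPE html>\n"
        "<html><head>"
        f'<meta http-equiv="refresh" content="{refresh_s}">'
        "<title>Front panel</title></head>\n"
        f'<body><img src="{image_name}" alt="Front panel"></body></html>\n'
    )
    path = Path(directory) / "index.html"
    path.write_text(page, encoding="utf-8")
    return path


def serve(directory, port=8000):
    """Serve the folder over plain HTTP until interrupted (blocks)."""
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=str(directory)
    )
    with http.server.ThreadingHTTPServer(("", port), handler) as httpd:
        httpd.serve_forever()
```

Calling `write_viewer_page("C:/panel_web")` once and then `serve("C:/panel_web")` (the folder name is hypothetical) gives a page that redraws the latest saved image every couple of seconds. It is view-only and unencrypted, so it does nothing for the control side.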

-

LabVIEW doesn't have a garbage collector. I've seen these sorts of behaviours with race conditions when creating and freeing resources.

-

Changing available inputs based on user selection

ShaunR replied to ocmyface's topic in User Interface

I posted an example a little while back of using a SQLite DB for this sort of thing. It was based around testing rather than pure DAQ, so it is a superset of what you require (at the moment). Code is here.
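For readers without the linked code, the general idea can be sketched in a few lines of Python with the built-in `sqlite3` module. This is not the schema from the linked example. The table names, columns and sample rows are invented purely to show how a user selection can drive which inputs are offered.

```python
import sqlite3


def build_db():
    """Create an in-memory DB mapping each selection to its input fields."""
    db = sqlite3.connect(":memory:")
    db.executescript(
        """
        CREATE TABLE test_type (
            id   INTEGER PRIMARY KEY,
            name TEXT UNIQUE NOT NULL
        );
        CREATE TABLE input_field (
            id           INTEGER PRIMARY KEY,
            test_type_id INTEGER NOT NULL REFERENCES test_type(id),
            label        TEXT NOT NULL,
            unit         TEXT
        );
        """
    )
    db.executemany(
        "INSERT INTO test_type (id, name) VALUES (?, ?)",
        [(1, "Voltage"), (2, "Temperature")],
    )
    db.executemany(
        "INSERT INTO input_field (test_type_id, label, unit) VALUES (?, ?, ?)",
        [
            (1, "Range", "V"),
            (1, "Sample rate", "Hz"),
            (2, "Thermocouple type", None),
        ],
    )
    db.commit()
    return db


def inputs_for(db, selection):
    """Return (label, unit) rows for the inputs valid for this selection."""
    rows = db.execute(
        "SELECT f.label, f.unit FROM input_field AS f "
        "JOIN test_type AS t ON t.id = f.test_type_id "
        "WHERE t.name = ? ORDER BY f.id",
        (selection,),
    )
    return rows.fetchall()


db = build_db()
print(inputs_for(db, "Voltage"))
```

The UI then only has to re-run the query whenever the selection changes and rebuild its visible inputs from the rows, so adding a new selection is a data change rather than a code change.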