-

Posts

5,001 -

Joined

-

Days Won

311

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by ShaunR

-

I think your framework has come far enough now that you need to drop all this "actor" terminology. You now have specific modes of messaging and operations such as services, listeners, controllers and processes which are all merged under the banner "actor" - the same way everything is a "VI", even LVPOOP "methods". This is a similar scenario to the electrician/decorator problem and a switch of view will help understanding, adoption and epiphanies. I tend to think of actors as the micro and services et. al. as the macro. Your framework is superior to the Actor Framework so you should no longer ride on its terminology coat-tails and the OOP definition is just a universal catch-all for chunks of code (turtles all the way down). Calling all your use case solutions "actors" is just confusing and hiding the underlying application realisation and therein lies the power of your framework.

-

You are right. I've just noticed the semi-colons. I thought it was a JSON export format of STIL that was posted.

-

If manipulating, rather than creating; I would take a look at the new SQLite features.to see if that would be a solution. You could import a STIL files' JSON representation directly. You can then query (or update) it's parameters as if they were part of the database. This would link in extremely well with the rest of a test system so you could pull out test results and the STIL parameters for a particular configuration or date/time with transparent SQL queries.

-

Privacy policy?

-

Build Number

ShaunR replied to Neil Pate's topic in Application Builder, Installers and code distribution

IC. It was this I was referring to -

Build Number

ShaunR replied to Neil Pate's topic in Application Builder, Installers and code distribution

The command line is never a solution on windows. That's a Linux fetish. Look for the DLLs. Most cross platform dynamic libraries have a libversion function call and there is bound to be one for Git. -

I'm not sure what bit you read that said memory mapped IO removes concurrency. That doesn't seem right. Turn PRAGMA synchronous=FULL and turn off "Write Cache" in device manager for the worst case but the behaviour you describe sounds more like LabVIEW memory allocation than OS disk caching. I haven't seen this specific behaviour but it was a few years ago that I used SQLite with anything other than a SSD or USB memory stick. Anyway. It's all academic if the meeting-monkeys deign another course.

-

You have all the easy bases covered (you are aware that not all of those are sticky and have to be used when opening, not just when tables are created?). At this point I usually look for a more suitable alternative for the use case. TDMS would be far superior for streaming the data but I expect you have hit a brick wall there too with the decimation, making the idea of doing it in a query attractive by its sheer simplicity. If SQLite is the "closest" of all the considered options then you would have to really dive in and get your hands dirty. I'm pretty sure you are already close enough that you could probably get there eventually but it's a whole domains worth of knowledge in and of itself. If we ignore physical constraints like disks, then there is a whole raft of low level configurations of how SQLite operates so it would be a case of investigating forcing manual optimisation of query plans memory-mapped IO or even writing your own "decimation" function or extension to name just a couple. Have you tried the SQLite mailing lists?

-

If you are running in SYNC=FULL (the default) then SQLite is using Write-through and Windows buffering is bypassed since it breaks ACID. This makes a big difference on mechanical drives-not so much on SSDs.You can tweak more performance by not writing a journal (JOURNAL=OFF) and setting SYNC=OFF at the expense of catastrophic failure integrity.

-

That's interesting but not surprising. I might add some more benchmarks around this to those for rows and bulk inserts. It would be a useful metric to see what the performance overhead is for varying Indexes. 20K/sec bulk INSERT is easily achievable. I'm not sure if you missed a zero off of that but 20K x 27 cols is about 100ms for me.

-

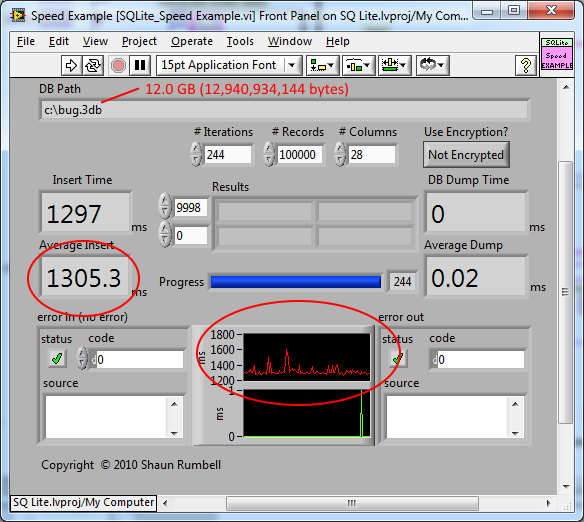

There is something not quite right there. The file size should make no difference to the INSERT performance. This is inserting 100K records with 28 columns. Inserting 244 times increases the file size from 0 to 12GB.(I just disabled the drop, create and select in the Speed Example). There is jitter due to other things happening but it is not increasing as the file grows.

-

UNION and JOIN are two different things (JOIN is an alias for "LEFT JOIN" - you can have other types). A JOIN maps columns from one table to another for indirection. A UNION just appends data. The union is used in the WITH RECURSIVE so as to create an ordered queue which bestows the tree walking behaviour- it's a fortuitous slight of hand. How many columns? Benchmarking 100K x 3 columns (xyz) runs at about 250ms using my benchmark. Are you saving to a huge single table as if it were a spreadsheet? I get that INSERT rate (1.2 secs) at about 25 columns.

-

Most eval boards or programmers use a virtual (serial) com port. If the software they supplied lets you choose com1,2 etc then you can use the LabVIEW serial VIs to talk to it (if you know the commands)

-

Now you're talking.

-

Ah. Yes. But you can read it out in any order you like by just by using the ORDER BY clause. That's the beauty of DBs. The "View" isn't defined by the data structure,

-

It's an unusual use case and I wouldn't recommend a DB for this since there is a lot of overhead for realising a relational DB that you just don't need. However. I would suggest you UPDATE rather than DELETE. You wouldn't clear a memory location before writing a new value to it in a ring buffer. You'd just overwrite because it is more efficient. DELETE is an extremely expensive operation compared to UPDATE as well as more esoteric things like fragmentation (Vacuum resolves this but can take a very long time) Thinking about what you are doing a bit more. You are not using a ring buffer, are you? You have a fixed length FIFO. What you probably want is INSERT OR UPDATE that isn't directly supported by SQLite, but can be emulated. The easy one would be INSERT with the REPLACE conflict condition but I think that just does a delete then insert so performance wise, you are no better off. The implementation is easier than messing with triggers, though.

-

Ahh. I get it. Yes that would be a useful optimisation for this scenario. The Time,WN might not be unique but if that's not an issue I can see it simplifies things greatly. It's taking advantage of the hash table lookup under the hood.. I can think of a few more uses for that too. I wonder what the performance difference is between that and a value table lookup like, say, LevelDB.

-

Right. Files size, space saving, I get (Uin64 per row). But you don't have to add an index and the bit I'm really not getting is how WITHOUT ROWID enables you to store "in order of Time and WN". Insertion order is the same with or without a rowID, is it not?

-

Standard Indexes on individual columns isn't very useful. In its simplest form you can consider an index to be an alias to a group so creating an index for each column is a little pointless. An index on XY and/or XZ makes more sense. Creating a partial index on single columns is very useful, though, since you are pre-mapping the alias to a subset of the rows of the table. Making an index on Z=0 would probably be useful in your case. Can you explain that? I thought "WITHOUT ROWID" was purely an optimisation.

-

Run Explain. (SQLite_Explain Example.vi) on both queries. It will "explain" how it processes the query and what optimisations are performed (like if an index is used or not) and you can see what the differences are.

-

Is this SQLite only? Or a more general SQL question since performance varies markedly from platform to platform and some responses may not be valid for MSSQL, for example. Group by is probably the wrong approach for decimation. You'd have to execute the Explain query to find out what the internal lookups are. I expect you are forcing one or more full table scans. In the Data Logging Example I use the following expression to return decimated data. Decimating 1M datapoints is a few hundred ms on an SSD but I don't know what that file size is, off-hand. The "rowid" decimation is quite obvious and means that SQLite can skip entire sections without querying and testing the data. WHERE (x between ? AND ?) AND (rowid % 500 == 0) What does this approach yield with your dataset? If X,Y,Z are coordinates, I would highly recommend using the R-TRee features of SQLite if you haven't tried it already. If your SQL queries are to find intersections and/or contained/overlapped polygons then the R-Tree module is far more performant that straight SQL.

-

Don;t wire anything to the "options" terminal of the open primitive. You don't really want a shared pool, you want pool of 3 individual instances (pre-allocated).

-

Doh! It wasn't a call by asynch node that was used. Opticians appointment confirmed.

-

I think it is one pool because of the strict type ref. Called in any order because of the Asych call and as if parallel iterations was enabled of the for loop.. For those who haven't seen this before. It is a demonstration of this behaviour. Foot Shooting factor 9000! What do we get if we win? Can I go home early?