LAVA 1.0 Content

Posts: 2,739

Days Won: 1


Posts posted by LAVA 1.0 Content

-


QUOTE(Matheus @ Oct 15 2007, 09:56 AM)

How is the performance without the SV read?

Then how does it perform without the TC reads?

Ben

-

QUOTE(gottfried @ Oct 11 2007, 08:01 AM)

Hi, can I (and how can I) get two different data rates on the same board (a 6251)? I need one 80 kS/s channel and four 10 S/s (every 100 ms) channels.

Thanks

Gottfried

There are several ways to do this; I have attached an example of one way. Import the .nce file into MAX, then run the example. The important thing to remember is that there is only one A/D in a multifunction DAQ card. When the documentation lists X channels, it is really describing the card's multiplexing capability. This means there is really no such thing as simultaneous sampling with a single multifunction DAQ card, so if you truly need simultaneous sampling you need more than one multifunction DAQ card, a multichannel digitizer card, or a sample-and-hold card. If you protect your resource (the A/D) with a semaphore and configure your tasks in MAX, you can have multiple sample rates with one A/D. The samples are not simultaneous, but that is not possible with a multifunction DAQ card anyway.
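LabVIEW is graphical, so here is a rough Python analogue of the semaphore idea rather than G code. It is a sketch under stated assumptions, not NI-DAQmx code: `SharedADC`, `acquire` and `run` are hypothetical names, `threading.Lock` stands in for the LabVIEW semaphore, and the loops are software-timed. The slow loop uses the 100 ms period from the question; the fast loop is scaled down to a nominal 5 ms period, because a Python loop cannot pace 80 kS/s. On real hardware the card's sample clock does the timing; the sketch only shows two rates taking turns on one converter.

```python
import threading
import time


class SharedADC:
    """Hypothetical stand-in for the single A/D on a multifunction DAQ card."""

    def __init__(self):
        self._lock = threading.Lock()  # plays the role of the LabVIEW semaphore
        self.active = 0                # callers currently inside read()
        self.max_active = 0            # highest concurrency ever observed
        self.log = []                  # (timestamp, channel) for every conversion

    def read(self, channel):
        with self._lock:               # only one task may own the A/D at a time
            self.active += 1
            self.max_active = max(self.max_active, self.active)
            self.log.append((time.monotonic(), channel))
            time.sleep(0.001)          # pretend conversion/readout time
            self.active -= 1
        return 0.0                     # dummy sample value


def acquire(adc, channel, period_s, stop):
    """Software-timed loop that reads one channel roughly every period_s seconds."""
    while not stop.is_set():
        adc.read(channel)
        stop.wait(period_s)            # sleeps, but wakes at once when stopped


def run(duration_s=0.5):
    adc = SharedADC()
    stop = threading.Event()
    # Nominal periods: 5 ms for the scaled-down "fast" loop, 100 ms for "slow".
    loops = [
        threading.Thread(target=acquire, args=(adc, "fast", 0.005, stop)),
        threading.Thread(target=acquire, args=(adc, "slow", 0.1, stop)),
    ]
    for t in loops:
        t.start()
    time.sleep(duration_s)
    stop.set()
    for t in loops:
        t.join()
    return adc


if __name__ == "__main__":
    adc = run()
    counts = {ch: sum(1 for _, c in adc.log if c == ch) for ch in ("fast", "slow")}
    print(counts)
    print(adc.max_active)  # always 1: the loops never use the A/D at the same time
```

Because `active` is only changed while the lock is held, `max_active` can never exceed 1. That is the point made above: two rates can share one A/D, but their samples interleave rather than being taken simultaneously.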

-

After installing LabVIEW, I immediately set the grid size to 8x8 for both diagram types. It makes aligning objects much easier. This works fine for new VIs that are not created from a template. But the new OOP features of LabVIEW introduced an annoying issue: if you create a new VI from a static or dynamic dispatch template, the grid size is not set to the environment value. Instead the default sizes are used, and you have to change the VI's grid settings manually.

Creating new VIs from templates should also respect the environment grid settings. This is CAR #49MA4FU1.

Henrik

-

QUOTE(Gavrilo @ Oct 15 2007, 08:14 AM)

... will memory deallocation requests improve the performance of my interface, or will they slow it down by adding unnecessary memory allocations? Thanks in advance for any help!!

If the buffers used for all of the wires in the sub-Vi are large enough, the same buffers should be re-used.

The last time I poked around with the de-allocate, it only came into play when the VI in question was marked for removal from memory. If the sub-VI is part of the app (not dynamic), it will not be marked for removal while the top-level VI is running.

On the other hand, if the VI was loaded dynamically, then de-allocation is possible once all of its references are closed.

Stepping back:

I think of that switch as being useful if you have a set of functions that are called as a response to a user action, are used, and then are unloaded.

All of the above is just my opinion based on personal observations.

If anyone actually KNOWS what is happening, please step up and correct me, PLEASE!

Re: more memory

If your VI is using more virtual memory than you have physical memory, then adding physical memory will help.

If you are using less virtual than physical memory, then use the profile tool to find who's being the memory pig, then review that/those critters for excessive buffer copies.

Trying to help,

Ben

-

These are two related issues filed under different CAR numbers:

1. With LV8.5 the project explorer also shows the hierarchy of files in the new 'Files' tab. If you try to drag&drop an existing VI from that list onto the front panel or block diagram of another open VI, you are not allowed to insert it there. The behavior should be the same as in the 'Items' tab: listed VIs should be draggable into a target VI. This is CAR #4DJFJ7G3.

2. If you drag&drop a VI from the 'Items' tab into an open VI, the focus stays on the project explorer. To offer a better workflow (necessary for us keyboard users), the focus should move to the front panel or block diagram of the target VI. Then you can immediately use keyboard shortcuts without having to use the mouse to set the focus to the target VI. This is CAR #4E89HJAR.

Henrik

-

QUOTE(NormKirchner @ Oct 13 2007, 03:53 PM)

If that's your interest, keep an eye out for an incoming post from me in the code repository. It's a concept called LVx (x is for export).

It's a design pattern that allows you to add exported method functionality to a LV program both in dev mode and exe form.

I'll keep an eye out for your comments and own additions to LAVA.

Welcome. ~,~

Oh!

All of you LVOOPish types are way ahead of me.

I have scheduled some time to study in December just so I can understand what y'all are talking about. Give me time, I'll catch up.

Ben

-

Hi everyone.

I've been reading the forum for a while but never created an account. Now that's finally done.

I've been a LabVIEW developer since around 2000. I mainly focus on creating measurement applications for laboratory use. I work at a research centre where I also provide local technical support for NI-related problems. We also host a LabVIEW user group with monthly meetings where we present ongoing work or new features.

Due to my work I regularly find bugs, which I report to NI. I'll try to file some of them (the ones related to LV8.5) in the bug section. That's also the main reason I created the account.

Thanks for the great platform.

Regards,

Henrik

-

Hi,

I studied Electronic and Electrical Engineering at university, and have been in the test and measurement field ever since (1985). Currently sitting within throwing distance of Danny, and appreciating his help.

Kevin

-

QUOTE(sahara agrasen @ Oct 13 2007, 01:17 PM)

I need material based on the LabVIEW Intermediate I or II course... if anybody has a PDF of it, please send it to me.

It's urgent for me.

Be careful. That is proprietary material, and you might as well post a registered key to a LV toolkit or the code on here. From NI's standpoint, it's just as illegal.

If you want some more specifics or specific examples or table of contents, I think we can manage.

-

If that's your interest, keep an eye out for an incoming post from me in the code repository.

It's a concept called LVx (x is for export).

It's a design pattern that allows you to add exported method functionality to a LV program both in dev mode and exe form.

I'll keep an eye out for your comments and own additions to LAVA.

Welcome. ~,~

-

QUOTE(Justin Goeres @ Oct 12 2007, 01:38 PM)

I was hoping maybe there was a super-secret INI setting for this, but I couldn't find any information about one... I would like LabVIEW to stop automatically putting those little numbers in the lower-right corners of the icons for the VIs I create:

Is there a way to turn that behavior off?

I'm trying to get the hang of the new icon templating features in LV85, but the little numbers get in the way. I'm removing them by hand, which mostly ruins the benefit of using the icon templates in the first place.

I have a workaround...

You can just drag and drop a picture "into" the icon of the front panel window; that takes only a few seconds...

=> Create as many of your own templates (pictures) as you like

Or you can use a customized icon editor:

LAVA Code Repository...

Vi, Vit, Ctl Icon and Description Editor /Browser

Mark Balla's Icon Editor Ver 2.2

-

I've posted the VI I mentioned on the NI forums here as it uses undocumented features.

I put very little time into this; if someone has the time to deal with the recursion and sees value in calculating the VI Modularity Index, go for it!

Download File: post-949-1192187068.vi (LabVIEW 8.5)

Download File: post-949-1192189545.vi (uses OpenG toolkit)

-

That begs the question "Should image links be included in RSS feeds?"

I like the fact that they can be included, or I wouldn't be able to read xkcd.com comics at work via Google Reader.

I'm going to listen to the new "The 5th dimension" post now.

PS. A big thanks to Michael for continuing to experiment and add new features like this to LAVA. :thumbup:

-

There are a couple of NI forum topics that discuss setting up LabVIEW to work with multicore/multiprocessor systems. They commonly refer to the LabVIEW VI

vi.lib\Utility\sysinfo.llb\threadconfig.vi

Fully utilizing all CPU's for a LabVIEW application

Core 2 Duo, Core 2 Duo Quad, and Labview 8.2

From the second link, it seems that threadconfig.vi queries the OS capabilities. I recall that some versions of Windows only support a single CPU socket; a quick Google search returned this. LabVIEW may be limiting your CPU load distribution because of what your OS reports.

I just read on another link in the same Google search that Vista includes something called the Next Generation TCP/IP stack. I was interested in this:

New support for scaling on multi-processor computers

The architecture of NDIS 5.1 and earlier versions limits receive protocol processing to a single processor. This limitation can inhibit scaling to large volumes of network traffic on a multi-processor computer. Receive-side Scaling resolves this issue by allowing the network load from a network adapter to be balanced across multiple processors. For more information, see.

It sounds like this might be a useful option for me. Can you tell me a bit about the computer and OS you are using?

-

Maybe some of the more active LabVIEW related blogs like Thinking in G, ExpressionFlow and crelf's Technology Articles? could use this tool to make audio versions of their blogs available. Maybe even the RSS feeds from LAVA could be "podcasted".

Imagine listening to "the 5th dimension" on the way to work (long commutes only, and no carpooling; you don't know who might be offended :laugh: )

-

QUOTE(Michael_Aivaliotis @ Oct 4 2007, 10:03 AM)

The solution for me is to upgrade to the latest driver and restart the build. So now it asks for a CD I have. The way this feature works is pretty ridiculous and needs to be fixed. There MUST be a way to tell the builder which MSI files we want.

Michael,

I encountered this problem back in 8.0; it's a mess. One thing I might point out is that in 8.2 and later there is a check box in the dialog that asks for the source CD. I can't remember exactly what it says, but if you select it, the source from the CD will be copied to your hard drive, and the next time you do a build it will get the source from this location without a prompt. If you check this, at least you won't have to dig for the CDs the next time you do the build. The downside is the hard drive space you consume over time.

-

QUOTE(Popatlal @ Oct 7 2007, 05:55 AM)

Hi to all, can anybody suggest some links for downloading LabVIEW audio lessons?

Plz send it to me if it is possible

Popatlal

I don't know about all those sites, but I have about 1 hour a day while I drive home. Give me your number and I'll talk you through it.

hehehe j/k..... well... maybe not.

-

QUOTE(yen @ Oct 8 2007, 03:26 PM)

As Chris said, indeed it is. I must say I always liked the Fatboy Slim videos.

For example, there was one which was simply stuff blowing up in slow motion, including stuffed cats (how I miss Monty Python). Another had a bunch of people doing a weird dance.

We need another dose of the "Kitty Cat" video in this thread.

-

QUOTE(NDaugherty @ Oct 8 2007, 03:36 PM)

Stumbled across this myself a few weeks ago.

....IT ROCKS!

You have no idea how nice it is to have a free 411 at your fingertips until you use it.

Once you do, you'll use it ALL THE TIME.

I mean really, how many times have you been driving to a place and forgot exactly which street it's on, only to drive around for a while?

Shit man, just goog 411 it and they connect you with the place and just ask the chucklehead that answers the phone!!!

Glad you posted it here.

I mentioned it to Mike A. and he was like... "dude you're so yesterday, I found out about that like way long ago, before it was even released man! I've been checking that number every day since Google did its IPO. Jeeze....catch up w/ the times captain" j/k

-


I hope the Amazon patent trolls don't read LAVA; you might be infringing on their One-click technology

-

Welcome,

I am always interested in what the new members find particularly interesting.

I happen to be a User Interface Junkie. If you can't figure out how to use it by looking at the interface, it's crap!

Give me a well developed interface and it's like I'm on a nice warm sandy beach.

</junkie rant>

-

I was wondering how "Ctrl + Shift + Run" works.

I have a llb with a large number of VIs, if I open the main VI and hit "ctrl + shift + Run" what is going to be recompiled ?

All the VIs in the llb ?

or

Only the main VI's hierarchy ?

The difference, of course, is the VIs in the llb that are called dynamically.

-

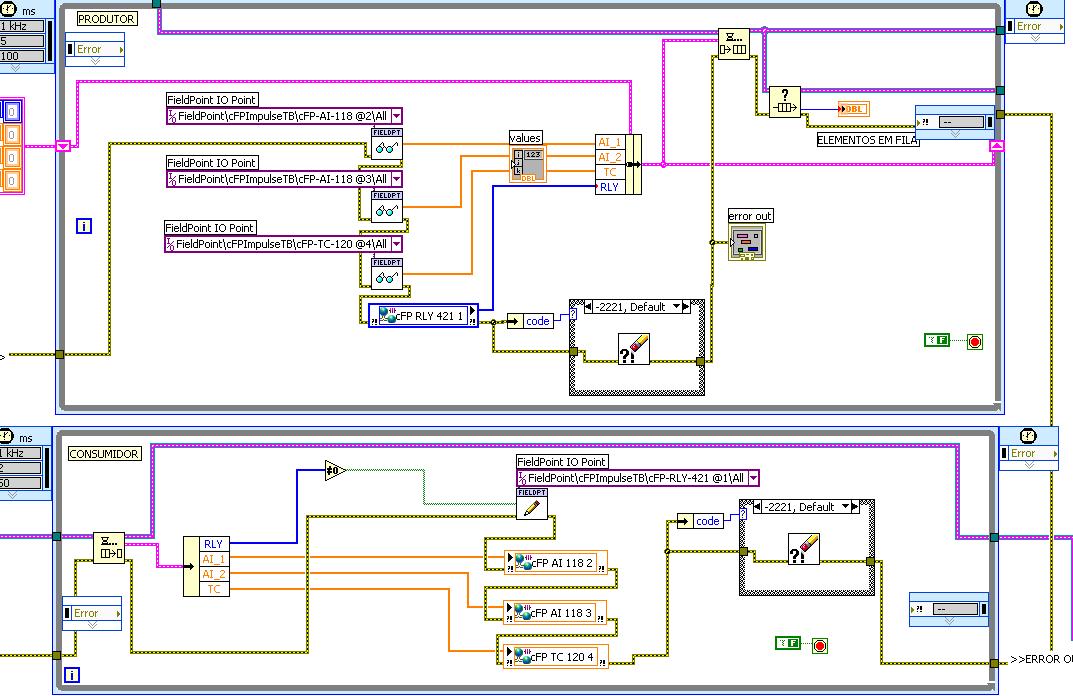

cFP rate (in Hardware)

QUOTE(Matheus @ Oct 15 2007, 11:17 AM)

I can't say I have ever seen a cFP run that fast.

I remember reading a post by an NI AE that spoke of a setting in MAX affecting the acq rate.

And yes TC = Thermocouples

Ben