-

Posts

129 -

Joined

-

Last visited

-

Days Won

1

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by Wouter

-

Thanks! Totally missed that. So who is currently the creator of OpenG? And is this maybe something I can do myself?

-

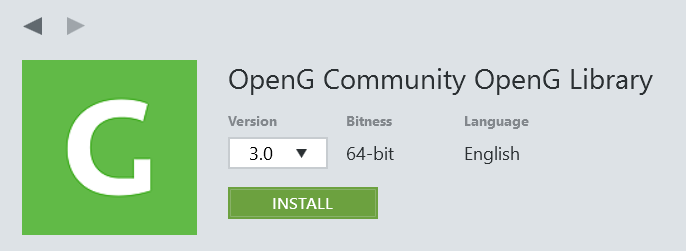

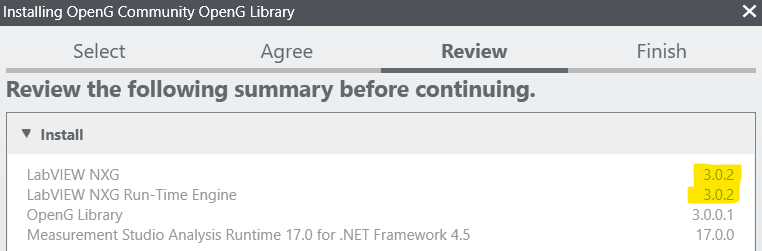

First question, Is OpenG not available for LabVIEW NXG 5.0? When I try to install it via the NI Package Manager I see version 3. Then when I want to install it, I see that the NI Package Manager wants to install LabVIEW NXG 3.0... so I understand that OpenG is only available for LabVIEW NXG 5.0? Second question, (cross post from https://forums.ni.com/t5/LabVIEW/LabVIEW-NXG-Polymorphic-amp-Malleable-VI-s/m-p/4047507#M1160978) How can I create a polymorphic or malleable .giv in LabVIEW NXG? In NXG they are called "overloads" The only information I can find is: https://forums.ni.com/t5/NI-Blog/Designing-LabVIEW-NXG-Configurable-Functions/ba-p/3855200?profile.l... But it does not tell or share how to do this in LabVIEW NXG? Where can I find this information? Or am I just missing something? Images (w.r.t. first question)

-

If you want to know if your data is within population I think it would be best to simply calculate the mean, mean + 3*std and mean - 3std of all datasets and plot those along with your new dataset.

-

Offtopic: You should use randomized data for a fair representation. Maybe the algorithm performs a lot better or a lot worse for certain values. Maybe the functionality posted in the OP functions a lot better for very large values. I would benchmark with random data which represents the full input range that could be expected. Furthermore I would also do the for-loop around 1 instance of the function. Then store the timings in array. Compute the mean, median, variance, standard deviation and show maybe a nice histogram :-) What is also nice to do, is by changing the input size for each iteration, 2^0, 2^1, 2^2, 2^3,... elements and plot it to determine how the computation execution scales.

-

@Steen Schmidt you know that if that is your benchmark setup, that the benchmark is not fair right?

-

Small note: there is a machine learning toolkit available, https://decibel.ni.com/content/docs/DOC-19328

- 4 replies

-

- discriminant analysis

- plsda

-

(and 1 more)

Tagged with:

-

Wikipage about this: https://en.wikipedia.org/wiki/Publish%E2%80%93subscribe_pattern Further I was wondering, your current implementation does not support subscription to multiple different publishers (who have a different name) right? That would be a nice feature, another extension would be that you can also publish across a network.

- 16 replies

-

- many to many messaging

- queues

-

(and 2 more)

Tagged with:

-

Working with arrays of potentially thousands of objects

Wouter replied to Mike Le's topic in Object-Oriented Programming

If it is a 2D array ánd it contains a lot of zero's you should consider using a sparse matrix datastructure. -

What do you mean with LU solver? Do you mean that this solves Ax = b using LU decomposition? Or does it do LU decomposition?

-

Lucky you can't patent software in Europ.

-

Good resources for Best OOP Practices?

Wouter replied to Mike Le's topic in Object-Oriented Programming

http://www.planetpdf.com/codecuts/pdfs/ooc.pdf I think this might be a good book. It goes about OOP in ANSI-C. -

Problem with data value reference creation

Wouter replied to DAOL's topic in Object-Oriented Programming

Hmmm... Using 2012 here and today I got the same error as described by the OP. I came to this topic by google. -

Don't the VI's give you the option to create X number of zip files? Each Y Bytes big? Like you see often? filename.rar0, filename.rar1, etc...

-

Need a true source of random numbers for your application?

Wouter replied to Aristos Queue's topic in LabVIEW General

http://www.random.org/ already provides the same functionality for several years. I wrote some VI's to get the random numbers/strings, https://decibel.ni.com/content/docs/DOC-13121 -

Maybe we should create a library for parsing JSON, YAML and XML, with a abstract layer on top of it. All 3 are basically the same, they are human readable data serializations, with each of them their own benefits. More at http://en.wikipedia....eadable_formats Question; In what LabVIEW version is it written, 2009?

-

Maybe this may interest the topic starter, http://halide-lang.org/. Paper: http://people.csail.mit.edu/jrk/halide12/halide12.pdf

- 5 replies

-

- imaq

- image processing

-

(and 1 more)

Tagged with:

-

For computer science I would like to recommend the book. T.H. Cormen, C.E. Leiserson, R.L. Rivest and C. Stein. Introduction to Algorithms (3rd edition) MIT Press, 2009. ISBN 0-262-53305-7 (paperback) It is made by a few proffessors from MIT, you can also see colleges ocw.mit.edu/courses/electrical-engineering-and-computer-science/6-046j-introduction-to-algorithms-sma-5503-fall-2005/ ocw.mit.edu/courses/electrical-engineering-and-computer-science/6-006-introduction-to-algorithms-spring-2008/ You can also download the video colleges as torrent, torrentz.eu/search?f=introduction+to+algorithms Further the following college notes may help you to get familiar. The first notes is about automata theory, it contains a few errors because it is not the most recent one because the author has decided to make a book from it. http://www.win.tue.n.../apbook2010.pdf. The second notes is about system validation and is a follow-up for automata theory http://www.win.tue.n...ted-version.pdf.

-

No I disagree. First your code size will increase which is totally unnecessary. Second to make the functions work for more complex datatypes you will also need to create a VI for that. When you then want to change something to your algorithm you have to update all VI's, this can indeed be done with a polymorphism wizard or some kind, but again what about the more complex datatypes I think it will make your code base unmanageable. Further I want to have a solution to the problem not a workaround which will for sure cost more time.

-

Rather prefer C++ templates for creating VI's like this ;-) but that has been suggested already a numerous times in the idea exchange.

-

http://en.wikipedia....lar_Expressions ^ Matches the starting position within the string. In line-based tools, it matches the starting position of any line.

-

I'm not suprised by it. I actually also build a version which used the string subset but then i thought of the case that the string "test" may not be on the front in each case, then I just choose to create a more general solution.

-

Yes you are totally correct. In the second run the memory is already allocated and hence does not need to be done anymore.

-

1. This is true but I think differences may be very small I think. We can design 2 different algorithms, 1 algorithm which swaps elements in a array inplace, 1 algorithm which copies contents to another array. Case 1) when we swap elements in a array its just changing pointers, so constant time. Case 2) when we retrieve a element from a certain datatype of array A and copy it to array B it may indeed be the case that a algorithm implementation of case 1 is faster because copying and moving that element for that certain datatype in the memory consumes more then constant time, it would have a cost function which depends on the amount of memory the datatype consumes. (I'm not very sure if case 2, also works like this internally as I explained but I think it does?) 2. This will always be the case. 3. True but that is not our failure. I think its fair to assume that the algorithm runs the fastest on the newest version of LabVIEW and the slowest on first version of LabVIEW. The goal, imo, is to achieve a algorithm that minimizes the amount of operations and the memory space.

-

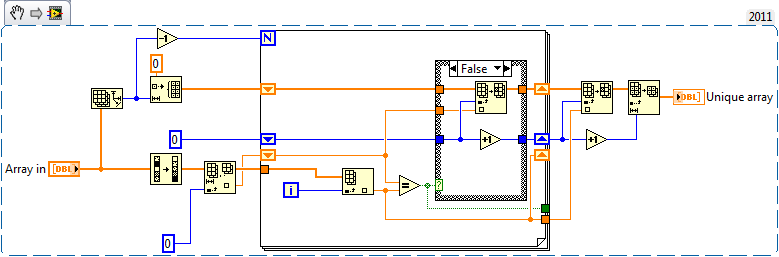

I would rather first sort the array and then iterate over the array, I think its faster because of how LabVIEW works. It still has the same theoratical speed, yours would be O(n*log(n) + n*log(n)) = O(2*n*log(n)) = O(n*log(n)) (because you do a binary search, log(n), in a loop thus multiply by n, becomes n*log(n). Mine is O(n*log(n) + n) = O(n*log(n)). But sure it would be faster then the current implementation which is O(n^2). -edit- Funny a simple implementation of the sort and then linear approach is still slower then yours.