Posts posted by drjdpowell

-

-

Shaun, I think Daklu is referring to the need to register on your site in order to download the example.

I just tried attaching a VIPM package to this post as a test and got “This upload failed”, so perhaps the issue is with LAVA.

BTW, here are the presentation slides Daklu is referring to (they’re on the CLA group at NI.com, but that group is restricted):

In hindsight, I think it was too technical; I should have focused more on the “what” than the “how”.

-

For example, I assume Send.Send method is equivalent to MsgQueue.Enqueue?

It would be Address.Send if I were doing it today. “Send” is anything to which I can send a message. It’s a bit confusing because it is more general than a communication method like a queue (such methods are under the subclass “Messenger”).

Of course, if you do that then the RemoteTCP actor becomes 100% transparent, blindly forwarding all messages to the network, which you probably don't want. So to enable sending messages intended for the RemoteTCP actor itself, you added a SendButDontForward message to the transport. Now I not only have to make sure I'm sending the correct messages, but I have to make sure each message is using the correct 'Send' method depending on whether the message is stopping at the TCP actor or going across the network. There are more details the developer needs to keep track of, the api for using the TCP actor is more complicated, and there are more opportunities to make mistakes. What's the upside?

No, I’m actually going for 100% transparent TCP communication. Having message-sending code be able to work with any transport method was the original motivation of my messaging system. Whether that is really achievable with the intricacies of TCP I’m not sure, as I have not had a TCP project to work on in years. But there is no “SendButDontForward” message.

If you'll forgive me for saying so, in my opinion the necessity of a SendButDontForward method is a code smell that points directly at the root problem. Creating RemoteTCPMessenger and overriding the Send method to wrap the messages in a SendViaTCP message is a mistake. I think it is more clear to either fully acknowledge the existence of the TCP actor by explicitly wrapping messages destined for the network client in a TCP.Send message on the same block diagram that is sending the message, or completely ignoring the TCP actor's existence by creating a proxy of the network client with an identical interface. (The proxy would handle all the TCP details internally.) Your mix of sort-of-acknowledging it and sort-of-ignoring it is confusing for me and my little brain.

Well, part of my philosophy is to have simple subcomponents whose messaging interaction is configured by higher-level components. So the subcomponent still needs to be provided a simple “address” to “send” to, even if the higher-level component knows that “address” as a more complex object with extra methods.

Edit: Forgot to add: RemoteTCPMessenger is intended to either BE a network proxy, or be the Messenger inside a network proxy.

-

My comment was directed at systems using stepwise routing, not onion routing. You are correct the PrefixQueue overrides the Send method in the same way you are suggesting, but the use case is different. I use the PrefixQueue to send messages from subactors up to managing actors so the managing actor can identify the sender. The managing actor sets the prefix for the branch of its own input queue prior to instantiating the subactor and giving it the queue. If you apply what you are describing to Alex's diagram, you are wrapping messages sent from the managing actor to the subactor--the reverse of what I do with the PrefixQueue.

The technique of overriding a “Send” method can be used in multiple different ways. PrefixQueue might not be the most closely-related example.
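For readers who think in text languages, here is a minimal Python sketch of the prefix idea described in the quote above. It is not LapDog's actual API: the class names, the `send`/`receive` methods and the `"Prefix:Name"` format are all assumptions made for illustration. The point is only that the subactor calls the same `send` it always would, and the override adds the prefix without the sender knowing.

```python
import queue


class MessageQueue:
    """Plain queue wrapper: send() enqueues (name, data) unchanged."""

    def __init__(self, q=None):
        self._q = q if q is not None else queue.Queue()

    def send(self, name, data=None):
        self._q.put((name, data))

    def receive(self):
        return self._q.get_nowait()


class PrefixQueue(MessageQueue):
    """Hypothetical stand-in: a branch of the manager's queue that prefixes names."""

    def __init__(self, parent, prefix):
        super().__init__(parent._q)  # share the manager's underlying queue
        self._prefix = prefix

    def send(self, name, data=None):
        # The override is the whole trick: the caller never sees the prefix.
        super().send(f"{self._prefix}:{name}", data)


def subactor(report_to):
    # The subactor only knows it was handed something with a send() method.
    report_to.send("Done", 42)


manager_inbox = MessageQueue()
subactor(PrefixQueue(manager_inbox, "MotorA"))
subactor(PrefixQueue(manager_inbox, "MotorB"))
first = manager_inbox.receive()
second = manager_inbox.receive()
```

The manager can now tell the two senders apart even though both ran identical code.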

Overriding Send to wrap all messages in a SendViaTCP message is functionally the same as not having a message handler in the TCP actor and automatically sending all the messages over the network. The wrapping serves no purpose. Furthermore, if you automatically forward all messages over the network you give up the ability to send messages to the TCP actor itself. When you do want to send a message to the TCP actor, you have to put conditionals in the Send method to only wrap those messages that should be sent over the network. Now your TCP actor has a MessageQueue subclass customized only for itself.

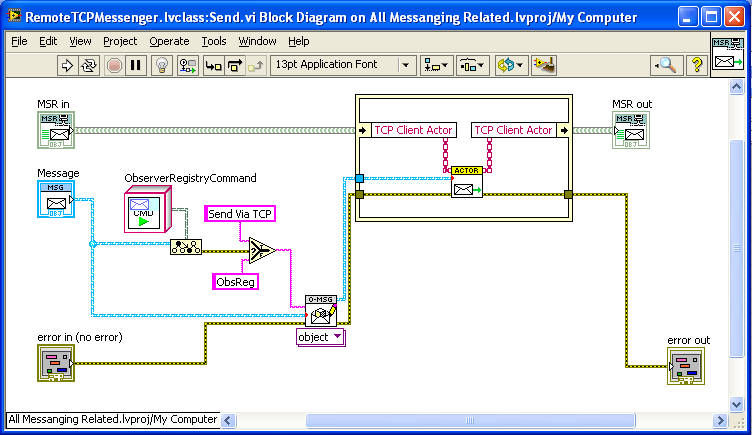

Well, as an example, here is the “Send” override method of my “RemoteTCPMessenger” class:

As you can see, RemoteTCPMessenger wraps and sends messages to a TCP Client Actor. Other methods of RemoteTCPMessenger can send alternate messages to TCP Client to control it. Could I send the TCP messages directly and skip the actor? Well, in this case I have to route message replies and published event messages back through the TCP connection and thus need some process listening on that. Once I have to have the client actor anyway, it is cleaner and simpler to let it handle all aspects of the TCP connection. A TCP connection is too complex a thing to be left to a passive object.
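Since the block diagram cannot be shown in text, here is a rough Python analogue of the same arrangement. It is a sketch under assumptions, not the real class: `Address`, `QueueMessenger`, the `Message` dataclass and the `CloseConnection` control message are hypothetical names, and the TCP Client Actor is reduced to an inbox. Only the wrapping in a “SendViaTCP” message comes from the description above.

```python
import queue
from dataclasses import dataclass
from typing import Any


@dataclass
class Message:
    name: str
    data: Any = None


class Address:
    """Anything a message can be sent to."""

    def send(self, msg: Message) -> None:
        raise NotImplementedError


class QueueMessenger(Address):
    """A local transport: messages go straight onto a queue."""

    def __init__(self):
        self.inbox = queue.Queue()

    def send(self, msg: Message) -> None:
        self.inbox.put(msg)


class RemoteTCPMessenger(Address):
    """Wraps every message and hands it to a TCP client actor."""

    def __init__(self, client_actor: Address):
        self._client = client_actor

    def send(self, msg: Message) -> None:
        # The sender's message becomes the payload of an outer envelope.
        self._client.send(Message("SendViaTCP", msg))

    def close_connection(self) -> None:
        # Hypothetical control message addressed to the client actor itself.
        self._client.send(Message("CloseConnection"))


def enable_motor(target: Address) -> None:
    # Subcomponent code: identical for local and remote targets.
    target.send(Message("EnableMotor", True))


local = QueueMessenger()
tcp_client_actor = QueueMessenger()  # stands in for the real client actor
remote = RemoteTCPMessenger(tcp_client_actor)

enable_motor(local)
enable_motor(remote)
remote.close_connection()
```

The higher-level code that created `remote` knows about `close_connection`; the subcomponent only ever sees an `Address`.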

-

I think you'll find I didn't comment on your suggestion before at all. I just shut up because I was told to stop reading because it was POOP.

It WAS POOPy! I was protecting you.

-

(In fact my diagram above shows the TCP layer receiving a Send message instead of an EnableMotor message.)

Oops. Sorry, I missed that.

When a TCP actor exposes a Send message, other parts of the application have explicit knowledge of the TCP actor by virtue of having to use the Send message. When a TCP actor exposes receiver-specific messages it has a more transparent proxy-like interface. The message sender can send the message without knowing or caring whether the receiver is local or across the network.

Not if you have a dynamic-dispatched “Send” method, in which case the wrapping of the original message in the SendViaTCP message can be inside the Send method. It’s the same technique you’re using in your LapDog PrefixQueue, where the sending process is unaware that the messages it is sending are having a prefix added. Similarly, a process doesn’t have to know it is sending messages via TCP.

My understanding of the intent of Outer Envelopes is:...

This isn’t really what I’m thinking of. “Outer Envelope” suggests a message holding another message. I don’t see why the inner message need be empty (an unfilled “inner envelope”).

— James

-

In fact, your topology seems to mix onion routing with stepwise routing. Specifically, the WinMsgRouting element adds a wrapper message around the data (SendViaTcp) instead of just peeling off a layer and processing what's left. If I were a new developer on the project, this inconsistency would make it harder for me to figure out how I should implement a new feature. How much onion routing should I use? Where should I use stepwise routing instead? I'd encourage you to use one or the other, but avoid arbitrarily mixing them.

He’s actually using what I call “Outer Envelopes”. You didn’t like them when I suggested them before.

In fact, I use a “Send Via TCP” outer envelope in my TCP message system.

-

But would it not be easier to refactor in TCP communication if the extra added processes of TCP send/receive don’t have to be custom created to handle specific messages? And if my end processes are “X” and “UI for X”, how useful is it to have three intermediate message-handling stages? Surely at least one of that long message chain doesn’t need to know what an “Enable Motor” message means?

My development process is very organic and iterative, so I place a high value on practices that let me refactor easily.

-

How do you distinguish language from meta-language if both are text?

-

I really need to resolve this question of whether you can use LV's internal templates or not? I assumed I couldn't for this one and built the QMH from the ground up. Would have got this done a lot faster if I could have avoided that.

If it’s in a standard LabVIEW install then you can use it. I used the QMH template, if I recall.

-

JamesMc86 is suggesting the possibility of writing a by-val class and allowing the application level to decide on DVR use (or not). The other options are writing a class API that takes DVRs as you’re doing, or use DVRs internal to the class (as todd mentions). If one is doing a class API that takes DVRs, then I agree you probably want to restrict use of the IPE to within that API.

-

Some random thoughts:

1) I tend not to have an actual “UI actor”; instead I have each actor have its own UI on its front panel, and have the main actor present the subactors in one or more subpanels (or just open their windows). This involves fewer messages to pass around.

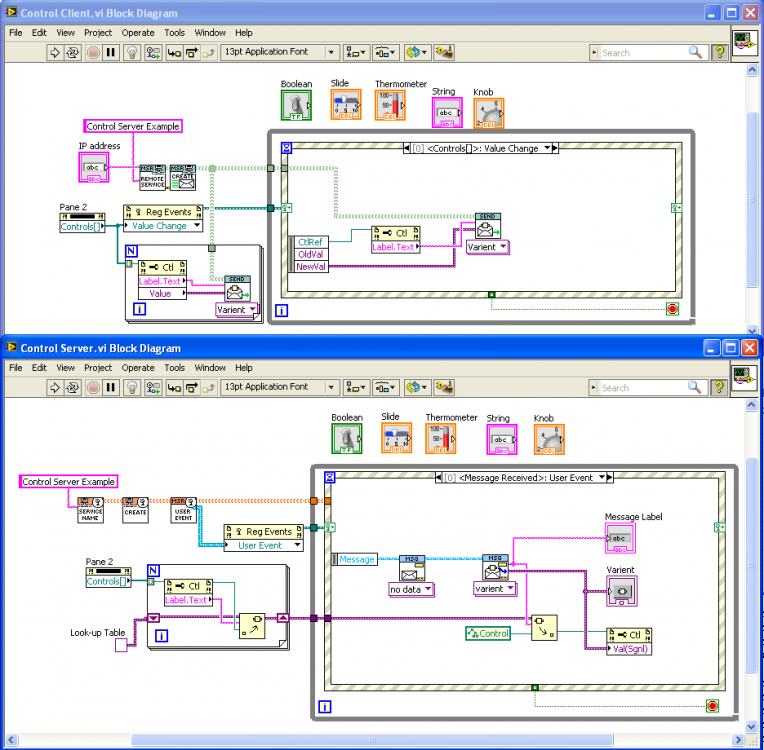

2) One can also deal with needing lots of messages for lots of UI controls by treating the controls generically using techniques illustrated below. This is a “Control Server” example that I don’t think is in the version of “Messenging” in the code repository, but will be in the next version.

Changing any control in Pane 2 of the “Control Client” sends a Variant Message that then fires a Value Change event in the Control Server.

You can do the same thing with “Notify”, and you can also write generic code for setting cluster items using OpenG functions. Would this kind of stuff help reduce the programming burden?

3) The above technique in (2) is event driven (User pushes button —> sends message —> fires Value Changed event). In contrast, using a DVR requires the receiver to poll for changes to the UI settings it cares about. It also works remotely (the example above works over TCP), though that is often not needed.
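To make points (2) and (3) concrete for non-LabVIEW readers, here is a small Python sketch of treating controls generically. Everything in it is an assumption for illustration: the `ControlServer` and `ControlClient` classes, the `(name, value)` tuple standing in for a Variant Message, and the `on_value_change` hook standing in for the Value Change event. It is not the code from the example above.

```python
import queue


class ControlServer:
    """Owns the real settings; reacts to generic (name, value) messages."""

    def __init__(self, controls):
        self.controls = dict(controls)
        self.inbox = queue.Queue()
        self.changes = []  # record of handled "Value Change" events

    def on_value_change(self, name, value):
        # Stand-in for the event case that would act on the new value.
        self.changes.append((name, value))

    def handle_pending(self):
        # One generic handler covers every control; no per-control message type.
        while not self.inbox.empty():
            name, value = self.inbox.get_nowait()
            if name in self.controls:
                self.controls[name] = value
                self.on_value_change(name, value)


class ControlClient:
    """The UI side: any control change is forwarded as (name, value)."""

    def __init__(self, server_inbox):
        self._out = server_inbox

    def user_changed(self, name, value):
        self._out.put((name, value))


server = ControlServer({"Gain": 1.0, "Enabled": False})
client = ControlClient(server.inbox)
client.user_changed("Gain", 2.5)
client.user_changed("Enabled", True)
server.handle_pending()
```

Adding a new control means adding one entry to the server's dictionary; the server is told about changes rather than polling shared data for them.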

-

Any Thoughts on this?

You mention “actors”, but one of the main points of the Actor Model is to use message passing instead of sharing by-reference data. So why not just send update messages when the User changes something?

-

Is there a document or perhaps a more involved XControl example that could clarify proper coding guidelines/style for XControls, as well as when to use different Facade events?

Unfortunately it’s on the restricted CLA group, but here is a very interesting presentation on XControls: Round Table Discussion - XControls

-

Had an issue with JSON to Variant where types mismatch because of no enum support so variant to data threw an error.

It’s supposed to work with enums, and my test case seems to work. "Variant to JSON" delegates enums to OpenG’s “Scan from String” which calls OpenG “Set Enum String Value”, so it should work. What error did you get and what enum string did it have?

-

Have you tried recreating the parent “Execute” message from scratch?

-

Is the expected behavior in this case that the reference would persist for the lifetime of the launched actor?

Yes. It shouldn’t matter how deep in the call chain the reference is created.

BTW, what is the semaphore for?

-

-

-

I’m sort of A, but the large amount of C involved makes me consider B. Thus: D.

-

-

-

XControls?

A) Yes

B) No

C) <Head banging against wall> Thump…Thump…Thump...

D) All of the above

-

I want to create a plugin class hierarchy and have a generic config GUI. If a class overrides a specific method I will enable a button that allows to open a class specific config GUI.

Would it be better to have a “ConfigGUI” object that was recursive (contained an optional subConfigGUI)? Have a “Get ConfigGUI” method that has a “subConfigGUI” input. The parent implementation would initialize the generic GUI and add the inputted subConfig GUI. Child implementations could override the method to initialize a specific GUI and pass this in to the parent method. The “display” (or whatever) method of the ConfigGUI object would enable the button if a non-default subConfigGUI was present. That avoids any class introspection. It would also work at any depth (so your more specific GUIs could themselves have even more specific sub-GUIs).
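A rough Python sketch of that recursive arrangement follows. The plugin class names and GUI titles are invented for illustration, and `get_config_gui` is only a text-language rendering of the “Get ConfigGUI” method described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConfigGUI:
    title: str
    sub_gui: Optional["ConfigGUI"] = None

    def button_enabled(self) -> bool:
        # Enable the "more settings" button only if a sub-GUI was supplied.
        return self.sub_gui is not None

    def depth(self) -> int:
        return 1 + (self.sub_gui.depth() if self.sub_gui else 0)


class Plugin:
    def get_config_gui(self, sub_gui: Optional[ConfigGUI] = None) -> ConfigGUI:
        # Parent implementation: the generic GUI, holding whatever was passed in.
        return ConfigGUI("Generic settings", sub_gui)


class ScopePlugin(Plugin):
    def get_config_gui(self, sub_gui: Optional[ConfigGUI] = None) -> ConfigGUI:
        # Child override: build the specific GUI and pass it up to the parent.
        specific = ConfigGUI("Scope settings", sub_gui)
        return super().get_config_gui(specific)


class FastScopePlugin(ScopePlugin):
    def get_config_gui(self, sub_gui: Optional[ConfigGUI] = None) -> ConfigGUI:
        specific = ConfigGUI("Fast scope settings", sub_gui)
        return super().get_config_gui(specific)


plain = Plugin().get_config_gui()
scope = ScopePlugin().get_config_gui()
fast = FastScopePlugin().get_config_gui()
```

No code ever asks “does this class override the method?”; the presence or absence of a sub-GUI carries that information.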

-

In any “command pattern”-style process the messages are part of the process, so if one can write or load new messages then one is modifying the process.

-

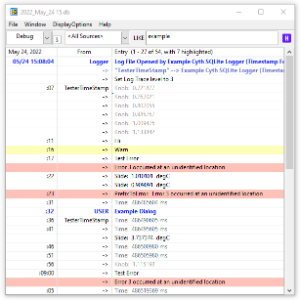

Cyth SQLite Logger

A logger and log viewer using an SQLite database.

The logger is a background process that logs at about once per second. A simple API allows log entries to be added from anywhere in a program.
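The general shape of such a logger can be sketched in Python with the standard `sqlite3`, `queue` and `threading` modules. This is not the package's API or schema: the `SQLiteLogger` class, its `log` and `stop` methods and the three-column table are assumptions chosen to show the pattern of a cheap enqueue from anywhere plus a background writer that flushes at a fixed interval.

```python
import os
import queue
import sqlite3
import tempfile
import threading
import time


class SQLiteLogger:
    def __init__(self, path, interval=1.0):
        self._path = path
        self._interval = interval
        self._pending = queue.Queue()
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def log(self, source, text):
        # Callable from any thread; only enqueues, never touches the database.
        self._pending.put((time.time(), source, text))

    def stop(self):
        self._stop.set()
        self._thread.join()

    def _run(self):
        # The connection is created and used only inside the writer thread.
        db = sqlite3.connect(self._path)
        db.execute(
            "CREATE TABLE IF NOT EXISTS log (t REAL, source TEXT, text TEXT)"
        )
        while True:
            stopping = self._stop.wait(self._interval)
            rows = []
            while True:
                try:
                    rows.append(self._pending.get_nowait())
                except queue.Empty:
                    break
            with db:  # one transaction per flush
                db.executemany("INSERT INTO log VALUES (?, ?, ?)", rows)
            if stopping:
                break
        db.close()


path = os.path.join(tempfile.mkdtemp(), "log.db")
logger = SQLiteLogger(path, interval=0.05)
logger.log("main", "started")
logger.log("worker", "did something")
logger.stop()

reader = sqlite3.connect(path)
rows = reader.execute("SELECT source, text FROM log ORDER BY rowid").fetchall()
reader.close()
```

Batching the inserts into one transaction per interval keeps the cost of each `log` call low, which is the usual reason for logging in the background rather than inline.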

A Log Viewer is available under the Tools menu (Tools>>Cyth Log Viewer); this can alternately be built into a stand-alone executable.

Requires SQLite Library (Tools Network).

Notes:

Version 1.4.0 is the last available for LabVIEW 2011. New development in LabVIEW 2013.

Latest versions available directly through VIPM.io servers.

-

Submitter

-

Submitted: 03/08/2013

-

Category

-

License Type: BSD (Most common)

-

-

Name: Shortcut Menu from Cluster

Submitter: drjdpowell

Submitted: 06 Mar 2013

Category: User Interface

LabVIEW Version: 2011

License Type: BSD (Most common)

A pair of subVIs for connecting a cluster of enums and booleans to a set of options in a menu (either the right-click shortcut menu on control or the VI menu bar). Adding new menu options requires only dropping a new boolean or enum in the cluster.

See original conversation here.

I use this heavily in User Interfaces, with display options being accessed via the shortcut menus of graphs, tables, and listboxes, rather than being independent controls on the Front Panel.

Relies on the OpenG LabVIEW Data Library.
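The idea translates to text languages roughly as below. This Python sketch is an analogy only: a frozen dataclass plays the part of the cluster, and `build_menu`, `apply_selection` and the `"field:MEMBER"` tag format are hypothetical names, not the subVIs in the package.

```python
from dataclasses import dataclass, fields, replace
from enum import Enum


class Scale(Enum):
    LINEAR = "Linear"
    LOG = "Log"


@dataclass(frozen=True)
class DisplayOptions:
    # Adding a menu option means adding one field here and nothing else.
    show_grid: bool = True
    show_legend: bool = False
    scale: Scale = Scale.LINEAR


def build_menu(options):
    """Return (tag, checked) pairs: one per boolean, one per enum member."""
    items = []
    for f in fields(options):
        value = getattr(options, f.name)
        if isinstance(value, bool):
            items.append((f.name, value))
        elif isinstance(value, Enum):
            for member in type(value):
                items.append((f"{f.name}:{member.name}", member is value))
    return items


def apply_selection(options, tag):
    """Return new options after the user picks the menu item with this tag."""
    name, _, member = tag.partition(":")
    current = getattr(options, name)
    if isinstance(current, bool):
        return replace(options, **{name: not current})
    return replace(options, **{name: type(current)[member]})


opts = DisplayOptions()
menu = build_menu(opts)
opts = apply_selection(opts, "show_legend")
opts = apply_selection(opts, "scale:LOG")
```

Both functions are driven entirely by the fields of the options object, so neither needs editing when a field is added.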

-

-

-

The trick to making this work is that the DUT parent class must have an abstract dynamic-dispatch method for every child class of the Method class, and the corresponding DUT dynamic-dispatch method must be embedded into the "Execute Message" override method of each Message child class.

No, the DD methods are not per child class; they are just methods to do stuff (which the children can override). Child DUTs can also provide new methods, and messages can be written that call them by casting the DUT input to the correct child class.

— James

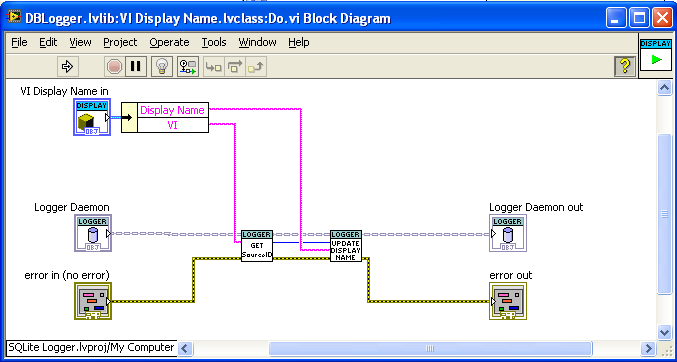

Added later: here’s an example “execute” method (though called “Do.vi”):

“VI Display name” is the message; it calls two methods on “Logger Daemon” to complete its task.

-

Your Message class should have an “execute message” dynamic-dispatch method that has a DUT input. Inside the execute method you call dynamic-dispatch methods of DUT. So you can make child message classes that call different DUT methods, and child DUT classes that override those methods.
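In a text language the same structure might look like the following Python sketch. The DUT methods and message names are made up for illustration; `isinstance` plays the role of casting the DUT input to a child class, and ordinary method overriding plays the role of dynamic dispatch.

```python
class DUT:
    def __init__(self):
        self.log = []

    # Overridable methods that just "do stuff"; they are not tied to any message.
    def reset(self):
        self.log.append("DUT reset")

    def measure(self):
        self.log.append("DUT measure")


class FancyDUT(DUT):
    def measure(self):  # overrides a parent method
        self.log.append("FancyDUT measure")

    def self_calibrate(self):  # a new method only this child provides
        self.log.append("FancyDUT self-calibrate")


class Message:
    def execute(self, dut: DUT) -> None:
        raise NotImplementedError


class ResetAndMeasure(Message):
    def execute(self, dut: DUT) -> None:
        # One message may call several DUT methods.
        dut.reset()
        dut.measure()


class Calibrate(Message):
    def execute(self, dut: DUT) -> None:
        # Equivalent of casting the DUT input to the child class.
        if not isinstance(dut, FancyDUT):
            raise TypeError("Calibrate needs a FancyDUT")
        dut.self_calibrate()


dut = FancyDUT()
for msg in (ResetAndMeasure(), Calibrate()):
    msg.execute(dut)
```

Note that there are two messages and three DUT methods here; nothing forces a one-to-one match between message classes and DUT methods.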

What so bad about 'thread safe' singletons anyway?

in Application Design & Architecture


If I recall the quote right, it’s the singletons you think you made thread safe that particularly inspire God’s wrath. Presumably because you actually didn’t.