Posts: 3,943
Days Won: 273

Everything posted by Rolf Kalbermatter

-

I'm not quite sure whether you mean to advocate a class hierarchy for a "has" relation. Part of your sentence sounds like it, but the other part seems to indicate that you don't. One of the first principles in OOP anyone needs to understand is that two objects can have either an "is" relationship or a "has" relationship. For an "is" relationship, inheritance is usually the right implementation; for "has", you should make the "owned" object a value/property of the owner, for example by composition.
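To make the distinction concrete, here is a minimal sketch in C. The thread is about LabVIEW classes, so this is only an analogy, and the `Shape`/`Circle`/`Car`/`Engine` names are hypothetical: the "is" relationship is modeled by embedding the base struct as the first member, the "has" relationship by simply holding the owned object as a member.

```c
/* "is" relationship: a Circle IS a Shape, so it embeds Shape as its first
   member and can be used wherever a Shape pointer is expected. */
typedef struct {
    const char *name;
} Shape;

typedef struct {
    Shape base;      /* must be the first member so (Shape *)&circle is valid */
    double radius;
} Circle;

/* "has" relationship: a Car HAS an Engine, so the engine is an owned
   member value (composition), not a base. */
typedef struct {
    int horsepower;
} Engine;

typedef struct {
    const char *model;
    Engine engine;   /* owned value, not inherited */
} Car;

/* Works on any "is a Shape" object. */
const char *shape_name(const Shape *s)
{
    return s->name;
}

/* A Car is not an Engine; we reach the engine through the member. */
int car_horsepower(const Car *car)
{
    return car->engine.horsepower;
}
```

A `Circle` can be handed to anything that expects a `Shape`; a `Car` cannot be handed to something expecting an `Engine`, which is exactly the difference the inheritance-versus-composition choice expresses.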


-

I believe you should read the Drawable property and downcast it to the SceneMesh class. At least that is what the class hierarchy suggests, but I'm not sure whether Drawable is really a multiple-inheritance object interface that simply implements all the SceneBox, SceneMesh and similar interfaces, or whether there are different drawable types that a scene object can have. If it is the latter, I think the LabVIEW interface simply doesn't provide a way to do what you want.

-

CINTOOLS for ARM exists? Resize String in c++

Rolf Kalbermatter replied to x y z's topic in Calling External Code

I never worked with that toolkit, and I'm not sure it is even possible to use the PDA Toolkit for development of Windows Embedded Compact 7.0 targets. There might be some serious trouble, since Windows CE 5.0 is really quite a different platform from Windows Embedded Compact 7.0, almost as different as Android is from iOS. Windows CE 5.0 was mainly for MIPS-based platforms, which Microsoft thought would be the future for embedded, although it also supported x86 and ARM platforms; I'm not sure whether the PDA Toolkit comes with support for all of these platforms. But I have a feeling that, if it is possible, there are some object libraries that need to be linked with the object files compiled from the code the PDA Toolkit generates. These object libraries provide all the LabVIEW manager functions in one way or another. So it might be enough to simply create a C file that calls these functions and add it to the list of files to compile and link into the final executable. AFAIK the PDA Toolkit works such that LabVIEW basically creates C++ files from the VI files, which then get compiled and linked with Toolkit-provided support libraries into the final executable with the help of the Microsoft CE development system and compiler. So that generated code must somehow call the manager functions too, to manipulate the memory blocks it uses. I remember that it was kind of tricky to get at the corresponding C++ files, as the build process creates them only temporarily and deletes them immediately after the C compiler has compiled them into object files. But I remember that when I played around with one of the toolkits back in the very old days, I actually managed to get at those files somehow.

Files end up as directories in zip archive

Rolf Kalbermatter replied to Mads's topic in OpenG General Discussions

I haven't forgotten this, but some more work needs to be done, as I also updated the whole source code of the shared library a while ago and need to do some more testing. For now, though, I'm taking off for some well-deserved vacation.

Call Library Node Crashes LV in Edit Mode

Rolf Kalbermatter replied to viSci's topic in LabVIEW General

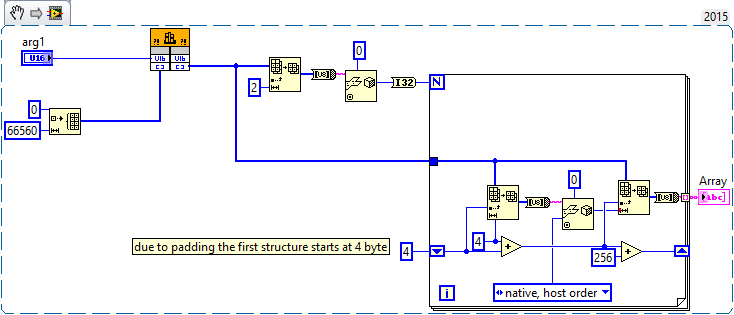

That won't work for sure!! This strange offset of 2 you added inside the loop would indicate that the corresponding C code was not compiled with standard padding. That is a completely valid possibility, although pretty unusual for DLLs that were not specifically compiled for LabVIEW. Instead, you most likely should have changed the constant with the value 4 in front of the loop to 2 to get this working properly!
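A sketch of why, under packing, it is the header constant rather than the per-element offset that changes. The actual C declaration from the DLL isn't shown in the thread; the layout below (an int16 count followed by 256 records of an int32 plus a 256-byte name) is an assumption based on the 2 + 2 + 256 * (4 + 256) byte size mentioned in my other reply in this thread, and all names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record: a 32-bit value followed by a fixed 256-byte name. */
typedef struct {
    int32_t value;
    char name[256];
} Record;

typedef struct {
    int16_t count;        /* 2 bytes; default alignment adds 2 padding bytes */
    Record records[256];  /* so the first record starts at offset 4 */
} DefaultLayout;

/* The same data compiled with 1-byte packing (unusual for most DLLs). */
#pragma pack(push, 1)
typedef struct {
    int32_t value;
    char name[256];
} PackedRecord;

typedef struct {
    int16_t count;
    PackedRecord records[256];  /* no padding: first record at offset 2 */
} PackedLayout;
#pragma pack(pop)

/* Offset of the first record: the constant in front of the loop. */
size_t default_header_size(void) { return offsetof(DefaultLayout, records); }
size_t packed_header_size(void)  { return offsetof(PackedLayout, records); }

/* Distance from one record to the next: the increment inside the loop. */
size_t default_record_size(void) { return sizeof(Record); }
size_t packed_record_size(void)  { return sizeof(PackedRecord); }
```

With either packing, the record stride in this assumed layout stays 4 + 256 = 260 bytes; only the offset of the first record moves (4 with default alignment, 2 with 1-byte packing), which matches changing the constant in front of the loop rather than adjusting the offset inside it.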

Creating a password protected Zip file to store data files

Rolf Kalbermatter replied to usmanf's topic in LabVIEW General

The (none) in parentheses simply means that if you leave this input unconnected, no password will be applied. Theoretically you could also apply an empty password, which would result in an encrypted file but with a very weak password key. In the OpenG library there is no difference between wiring nothing to the input and wiring an empty string to it; with the normally available possibilities, LabVIEW doesn't provide a way to distinguish between the two for subVIs. Still, I feel it is useful to indicate in the control label what the default value of a control means, especially since there is this possible ambiguity between using an empty password and none. Simply wire your password to that input, and the specific file should be encrypted with this password and stored in the archive. Theoretically you can even use different passwords for each file, but that will be problematic with most other ZIP archive viewers, as they typically don't let you enter different passwords for different files inside an archive. Testing this would not have cost you more time than writing your post here: simply connect a password to that input string and then try to open the ZIP file in some other ZIP utility (Windows Explorer extension, WinZip, or my favorite, 7-Zip).


Files end up as directories in zip archive

Rolf Kalbermatter replied to Mads's topic in OpenG General Discussions

Thanks, I'll have a look at this over the weekend. EDIT: I did have a look at this and got the newer code to work a bit better, but I need to test it on a real x64 cRIO system. I hope to get my hands on one this week.

Direct access to camera memory

Rolf Kalbermatter replied to RayR's topic in Machine Vision and Imaging

Without documentation of the .Net interface for this component, there is no way to say whether that would work. The refnum that is returned could be just a .Net object wrapping an IntPtr memory pointer, as you hope, but it could also be a real object that is not just a memory pointer. Without seeing the actual underlying object class name, it's impossible to say anything about it. It is not clear to me whether that Memory object is just the description of the image buffer in the camera, with methods to transfer the data to the computer, or whether it only manages the memory buffer on the local computer AFTER the driver has moved everything over the wire. If it is the first, your IntPtr conversion has absolutely no chance to work, since the "address" that refnum contains is not a locally mapped virtual memory address on your computer but rather a description of the remote buffer in the camera, and the CopyToArray method does a lot more than just shuffling data between two memory locations on your local computer.

If it is indeed just a local memory pointer as you hope, I would not even try to copy anything into an intermediate array buffer, but rather use the Advanced IMAQ function "IMAQ Map Memory Pointer.vi" to retrieve the internal memory buffer of the IMAQ image and then copy the data directly into it. However, you usually cannot do that with a single memcpy() call for the entire image, since IMAQ images have extra border pixels, which make each image line in memory several bytes longer than a line of your source image. So without being able to see the documentation for your .Net component, we really can't say anything more about this.
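A minimal sketch of the line-by-line copy, assuming you already have a pointer to the first visible pixel of the destination image and know the distance in bytes between the starts of two consecutive image lines. How exactly you obtain those from IMAQ Map Memory Pointer.vi is not covered here, and the function and parameter names are hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Copy a tightly packed source image into a destination buffer whose lines
   are longer than the image width (e.g. because of border pixels).
   dst            : first visible pixel of the destination image
   dst_line_bytes : bytes from the start of one destination line to the next
   src            : source pixels, lines stored back to back
   width_bytes    : bytes of actual pixel data per line
   height         : number of lines */
void copy_image_lines(unsigned char *dst, size_t dst_line_bytes,
                      const unsigned char *src, size_t width_bytes,
                      size_t height)
{
    for (size_t y = 0; y < height; y++) {
        /* One memcpy per line; a single memcpy for the whole image would
           shift every line after the first into the wrong position. */
        memcpy(dst + y * dst_line_bytes, src + y * width_bytes, width_bytes);
    }
}
```

The bytes between the end of one copied line and the start of the next are left untouched, which is what you want for the border area of the destination image.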

Files end up as directories in zip archive

Rolf Kalbermatter replied to Mads's topic in OpenG General Discussions

How do you look at those ZIP files? Through the OpenG library again or a Unix ZIP file command line utility?

CINTOOLS for ARM exists? Resize String in c++

Rolf Kalbermatter replied to x y z's topic in Calling External Code

As you may know, the LabVIEW PDA Toolkit is no longer supported, and it is the only way to target the Windows CE platform. That said, labview.lib basically does something like the following, which you can pretty easily program yourself for a limited number of LabVIEW C functions:

```c
#include <windows.h>
#include "extcode.h" /* LabVIEW types: MgErr, CStr, ProcPtr, noErr, rFNotFound */

/* Look up a LabVIEW manager function by name, first in the executable that
   created the process (LabVIEW itself or a built application), then in the
   LabVIEW runtime DLL. */
MgErr GetLVFunctionPtr(CStr lvFuncName, ProcPtr *procPtr)
{
    HMODULE libHandle = GetModuleHandle(NULL);

    *procPtr = NULL;
    if (libHandle)
    {
        *procPtr = (ProcPtr)GetProcAddress(libHandle, (LPCSTR)lvFuncName);
    }
    if (!*procPtr)
    {
        /* TEXT() keeps this compiling in both ANSI and UNICODE builds */
        libHandle = GetModuleHandle(TEXT("lvrt.dll"));
        if (libHandle)
        {
            *procPtr = (ProcPtr)GetProcAddress(libHandle, (LPCSTR)lvFuncName);
        }
    }
    if (!*procPtr)
    {
        return rFNotFound;
    }
    return noErr;
}
```

The runtime DLL lvrt.dll may have a different name on the Windows CE platform, or maybe it isn't even a DLL but gets linked entirely into the LabVIEW executable. I never worked with the PDA Toolkit, so I don't know about that.

Call Library Node Crashes LV in Edit Mode

Rolf Kalbermatter replied to viSci's topic in LabVIEW General

You should probably simply pass a byte array (or string) of 2 + 2 (padding) + 256 * (4 + 256) bytes as an array data pointer and then extract the data from that byte array through indexing and Unflatten From String.
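In C terms, extracting the data from such a flat buffer amounts to computing byte offsets and copying the fields out, which is what indexing plus Unflatten From String does on the diagram. A sketch, assuming standard 4-byte alignment and a hypothetical record layout of an int32 followed by a 256-byte name (the real field meanings aren't given in the thread):

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Assumed layout: int16 count, 2 padding bytes, then 256 records of
   int32 value + 256-byte name. */
enum {
    HEADER_BYTES = 2 + 2,
    RECORD_BYTES = 4 + 256,
    RECORD_COUNT = 256,
    BUFFER_BYTES = HEADER_BYTES + RECORD_COUNT * RECORD_BYTES
};

int16_t read_count(const unsigned char *buf)
{
    int16_t count;
    memcpy(&count, buf, sizeof count);   /* memcpy avoids unaligned access */
    return count;
}

int32_t read_value(const unsigned char *buf, size_t index)
{
    int32_t value;
    memcpy(&value, buf + HEADER_BYTES + index * RECORD_BYTES, sizeof value);
    return value;
}

/* Pointer to the fixed-size name field of record `index`. It is only
   NUL-terminated if the name is shorter than 256 bytes. */
const char *read_name(const unsigned char *buf, size_t index)
{
    return (const char *)(buf + HEADER_BYTES + index * RECORD_BYTES + 4);
}
```

This reads the buffer in the machine's native byte order. On the LabVIEW side, Unflatten From String defaults to big-endian, so its byte order input would need to be set to little-endian when the DLL runs on x86/x64.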

Create objects in different LabVIEW versions

Rolf Kalbermatter replied to Leif's topic in Object-Oriented Programming

If we're talking about patterns, then this follows the factory pattern. The parent class here is the interface, the child classes are the specific implementations, and yes, you of course cannot really invoke methods that are only present in one of the child classes, as you only ever call the parent (interface) class. Theoretically you might try to cast the parent class to a specific class and then invoke a class-specific property or method on it, but this doesn't work in this specific case, since that would load the specific class explicitly and break the code when you try to execute it in a LabVIEW version other than the one that specific class was made for.
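The same idea sketched in C, purely as an analogy (the `Driver` type and the "legacy"/"modern" names are hypothetical): callers get an interface from a factory and never see the concrete implementations, so they simply cannot call anything that only one implementation provides.

```c
#include <string.h>

/* The "interface": callers only ever see this table of functions. */
typedef struct {
    const char *(*describe)(void);
} Driver;

/* Two hypothetical implementations, standing in for the child classes. */
static const char *describe_legacy(void) { return "legacy implementation"; }
static const char *describe_modern(void) { return "modern implementation"; }

static const Driver legacy_driver = { describe_legacy };
static const Driver modern_driver = { describe_modern };

/* Factory: selects an implementation at runtime by name and hands back
   only the interface. Returns NULL for an unknown name. */
const Driver *create_driver(const char *kind)
{
    if (strcmp(kind, "legacy") == 0) return &legacy_driver;
    if (strcmp(kind, "modern") == 0) return &modern_driver;
    return NULL;
}
```

Because `legacy_driver` and `modern_driver` are `static`, code outside this file can't name them at all, which is the C counterpart of only ever calling the parent (interface) class.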

Error accessing site when not logged in.

Rolf Kalbermatter replied to ShaunR's topic in Site Feedback & Support

I'm also seeing it in Chrome on Windows when not logged in.

TortoiseSVN (plus command-line tools for a few simple LabVIEW tools), both at work and on a private Synology NAS at home.

-

Just Downloaded and Installed LabVIEW NXG.....

Rolf Kalbermatter replied to smarlow's topic in LAVA Lounge

Well, Windows IoT must be based on Windows RT or its successor, as typical IoT devices do not use an Intel x86 CPU but usually an ARM or some similar CPU. And the Windows IoT page says: "Windows 10 IoT Core supports hundreds of devices running on ARM or x86/x64 architectures." Now, I don't think they can limit that one to MS Store app installs only, so they must not apply that restriction on IoT, but technically it would seem to be based on the same .Net-centric kernel as Windows RT. And in order to provide the touted "write once, run on all of them", they will push the creation of .Net IL assemblies rather than any native binary code. Maybe it's not even possible to use native binary code for this platform. At some point they did promise an x86 emulator for the Windows RT platform (supposedly slated for a Windows RT 8.1 update) to lessen the pain of a very limited offering in the App Store, but I don't think that ever really materialized. CPU architecture emulation has been tried many times, and while it can work, it never was a real success, except for the 68k emulator in the PPC Macs, which worked amazingly well for most applications that didn't use dirty tricks.

Just Downloaded and Installed LabVIEW NXG.....

Rolf Kalbermatter replied to smarlow's topic in LAVA Lounge

Windows RT and Windows Embedded are two very different animals. Windows Embedded is a more modular, configurable version of desktop Windows for x86, while Windows RT is a .Net-based system that contains a kernel specifically designed for a .Net system, without any of the normal Win32 API interface. Windows Embedded only runs on x86 hardware, while Windows RT can run on ARM and potentially other embedded CPU hardware. On the other hand, Windows Embedded can run .Net CLR applications and x86 native applications, while Windows RT only runs .Net CLR applications. RT here doesn't stand for RealTime but is assumed to refer to the WinRT system, which basically uses the .Net intermediate code representation for all user space applications to isolate application code from the CPU-specific components. Windows RT was officially only released for Windows 8 and 8.1, but the new Windows S system they plan to release seems to be built on the same principle, strictly limiting application installation to the Microsoft Store and only to apps that are fully .Net CLR compatible, meaning they can't really include CPU-native binary components. These limitations make it hard for anyone to release hardware devices based on this architecture, as only Microsoft Store apps are supported. But I think NI might be big enough to negotiate a special deal with Microsoft for a customized version that can install applications from an NI App Store. Perfect monetization! However, with the track record Microsoft has with Windows CE, Phone, Mobile, RT and now S, all of which except S (which has yet to be released) were basically discontinued after some time, I would like to think that NI is very wary of betting on such an approach.
For the current real-time platforms NI sells, I would guess that ARM support is still pretty important for the lower-cost hardware units, so using Windows Embedded alone is not really feasible. If NI could use the Windows RT based approach for those units, they might get away with implementing an LLVM to .Net IL code backend for them, but since Windows RT is already kind of dead again, that is not going to happen. Therefore I guess NI Linux RT is not going away anytime soon. Yes, the new WebVI technology based on HTML5 is likely the horse NI is betting on for future cross-platform UI applications that will also run on Android, iOS and other platforms with a working web browser. Development for that, however, is likely going to be Windows-only for a long time.

Just Downloaded and Installed LabVIEW NXG.....

Rolf Kalbermatter replied to smarlow's topic in LAVA Lounge

No, .Net Core is open source, but .Net is quite a different story. And the difference is akin to saying that MacOS X is open source, because the underlying Mach kernel is BSD licensed!

Just Downloaded and Installed LabVIEW NXG.....

Rolf Kalbermatter replied to smarlow's topic in LAVA Lounge

That would mean trashing NI Linux RT and going with a special variant of Windows RT for RT (sic). I'm not yet sure that NI is prepared to trash NI Linux RT and introduce yet another RT platform. But stranger things have happened.

Just Downloaded and Installed LabVIEW NXG.....

Rolf Kalbermatter replied to smarlow's topic in LAVA Lounge

There was some assurance in the past that classic LabVIEW would remain a fully supported product for 10 years after the first release of NXG. With 1.0 being released at this NI Week, that would end LabVIEW classic support with LabVIEW 2026. And yes, .Net is a pretty heavy part of the new UI of LabVIEW NXG (the whole backend with code compiler and execution support is for the most part the same as in current LabVIEW classic). Supposedly this makes integrating .Net controls a lot easier, but it makes my hopes for a non-Windows version of LabVIEW NXG go down the drain. Sure, they will have to support RT development in LabVIEW NXG, which means porting the whole host part of LabVIEW RT to 64-bit too, but I doubt it will support more than a very basic UI like nowadays on the cRIOs with onboard video capability. Full .Net support on platforms like Linux, Mac, iOS and Android is most likely going to be a wet dream forever, despite the open source .Net Core initiative of Microsoft (another example of "if you can't beat them, embrace and isolate them").

Accessing Microsoft Sharepoint with LabVIEW

Rolf Kalbermatter replied to Rixa79's topic in Database and File IO

Definitely! Accessing the SharePoint SQL Server database directly is a deadly sin that immediately puts your entire SharePoint solution into fully unsupported mode as far as Microsoft is concerned, even if you only do queries.

Load lvlbp from different locations on disk

Rolf Kalbermatter replied to pawhan11's topic in Development Environment (IDE)

You usually need to scroll down in the list until you find issues that have at least one valid resolution option. Higher-level conflicts that depend on lower-level conflicts can't be resolved before those lower-level conflicts are resolved.

Load lvlbp from different locations on disk

Rolf Kalbermatter replied to pawhan11's topic in Development Environment (IDE)

Doesn't need to. The LabVIEW project is only one of several places that store the location of the PPL. Each VI using a function from a PPL stores its entire path too, and will then see a conflict when it is loaded inside a project that has a PPL with the same name present at another location. There is no trivial way to fix that other than going through the resolve conflict dialog and confirming, for each conflict, which location should be used from now on. Old LabVIEW versions (way before PPLs even existed) did not do such path-restrictive loading: if a VI with the wanted name was already loaded, they happily relinked to that VI, which could easily get you into very nasty cross-linking issues with little or no indication that this had happened. The result was often a completely messed up application if you accidentally confirmed the save dialog when you closed the VI. The solution was to only link to a subVI if it was found at the same location it was at when the calling VI was saved. With PPLs this got more complicated, and they chose the most restrictive mode for relinking in order to prevent inadvertently cross-linking your VI libraries. The alternative would be that, if you have two libraries with the same name in different locations, you could end up loading some VIs from one of them and others from the other library, potentially creating a total mess.
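A toy model of the path-restrictive relinking rule described above, in C. It only illustrates the decision and is not how LabVIEW implements it; the types, names, and paths are hypothetical.

```c
#include <string.h>

typedef enum { LINK_OK, LINK_CONFLICT, LINK_NOT_LOADED } LinkResult;

/* A library already loaded in the project, identified by name and path. */
typedef struct {
    const char *name;   /* e.g. "Utils.lvlibp" */
    const char *path;   /* full path it was loaded from */
} LoadedLib;

/* Path-restrictive linking: the caller stored the full path of its
   dependency when it was saved. A loaded library with the same name but a
   different path is reported as a conflict instead of being silently used. */
LinkResult link_dependency(const LoadedLib *loaded, size_t n,
                           const char *name, const char *stored_path)
{
    for (size_t i = 0; i < n; i++) {
        if (strcmp(loaded[i].name, name) == 0) {
            return strcmp(loaded[i].path, stored_path) == 0 ? LINK_OK
                                                            : LINK_CONFLICT;
        }
    }
    return LINK_NOT_LOADED;
}
```

The old name-only behavior would correspond to accepting the first name match regardless of its path, which is exactly how a VI could silently end up linked to the wrong copy.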

How do you debug your RT code running on Linux targets ?

Rolf Kalbermatter replied to Zyl's topic in Real-Time

Unfortunately, 27 kudos is very little! Many of the ideas that got implemented had at least 400, and even that doesn't guarantee at all that something gets implemented.

-

How do you debug your RT code running on Linux targets ?

Rolf Kalbermatter replied to Zyl's topic in Real-Time

That's of course another possibility, but the NI Syslog Library works well enough for us. It doesn't plug directly into the Linux syslog, but that is not a big problem in our case.

It depends. In a production environment it can be pretty handy to have a live view of all the log messages, especially if you end up having multiple cRIOs all over the place that interact with each other. But it is always a tricky decision between logging as much as possible and then not seeing the needle in the haystack, or limiting logging and possibly missing the most important event that shows where things go wrong. With a live viewer you get a quick overview, but if you log a lot it will usually not be very useful, and you need to look at the saved log file afterwards anyhow to analyse the whole operation. Generally, once debugging is done and debug message generation has been disabled, a live viewer is very handy to get an overall overview of the system, where only very important system messages and errors are still logged.
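If the other possibility is logging straight into the target's standard Linux syslog, a minimal C sketch could look like the following. It uses only the POSIX `openlog`/`setlogmask`/`syslog`/`closelog` calls; the identity string and the debugging switch are assumptions for illustration, and this is not how the NI Syslog Library works.

```c
#include <syslog.h>

/* Open the system log; "myapp" is a hypothetical identity string. */
void logging_init(int debugging)
{
    openlog("myapp", LOG_PID, LOG_USER);
    /* While debugging, let everything through; afterwards keep only
       warnings and worse, so a live viewer shows just the important events. */
    setlogmask(debugging ? LOG_UPTO(LOG_DEBUG) : LOG_UPTO(LOG_WARNING));
}

void log_event(int priority, const char *message)
{
    /* Pass the message as an argument, never as the format string. */
    syslog(priority, "%s", message);
}

void logging_shutdown(void)
{
    closelog();
}
```

Messages below the mask are discarded inside `syslog()` itself, so debug calls can stay in the code and be switched off centrally once debugging is done.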