Tim_S

Members

Posts: 875

Days Won: 17

Everything posted by Tim_S

-

how to change the blinking background color of string

Tim_S replied to willsan's topic in LabVIEW General

The settings you changed in LabVIEW get saved in the LabVIEW INI file. A built executable reads its own INI file, named the same as the executable, so you have to copy the settings into that one. You can also create a custom INI file and include it in your build so the build generates everything as you want.
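
If you'd rather script the copy than do it by hand, here's a minimal Python sketch. It assumes the settings sit under the `[LabVIEW]` section of LabVIEW.ini and that the built application's INI uses a section named after the executable (shown here as the hypothetical `[MyApp]`). The key name `myBlinkColorKey` is a made-up placeholder; check your own LabVIEW.ini for the key(s) your change actually wrote.

```python
import configparser
import io


def _new_parser() -> configparser.ConfigParser:
    # Only '=' is a delimiter (LabVIEW values can contain ':'), no '%' interpolation,
    # and duplicate keys are tolerated instead of raising.
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",), strict=False)
    # configparser lowercases keys by default; keep LabVIEW's camelCase keys intact.
    parser.optionxform = str
    return parser


def copy_ini_keys(src_text: str, dst_text: str, keys: list[str],
                  src_section: str = "LabVIEW", dst_section: str = "MyApp") -> str:
    """Copy `keys` from src_section of src_text into dst_section of dst_text.

    Keys missing from the source are skipped. Returns the new destination text.
    """
    src = _new_parser()
    src.read_string(src_text)
    dst = _new_parser()
    dst.read_string(dst_text)
    if not dst.has_section(dst_section):
        dst.add_section(dst_section)
    for key in keys:
        if src.has_option(src_section, key):
            dst.set(dst_section, key, src.get(src_section, key))
    out = io.StringIO()
    # LabVIEW writes key=value with no spaces around '='.
    dst.write(out, space_around_delimiters=False)
    return out.getvalue()
```

To use it, read both files (for example with `pathlib.Path(...).read_text()`), call `copy_ini_keys`, and write the result back next to the executable. Including a custom INI in the build, as suggested above, avoids repeating this after every rebuild.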

The musical alarms bring back (traumatic) memories of MIDI files playing Mary Had a Little Lamb and the opening to The A-Team at auto plants in Mexico.

-

EtherNet IP toolkit to use for point I/O Allen Bradley

Tim_S replied to CarlosLuevano's topic in LabVIEW General

Every time I've called NI tech support about Ethernet/IP, the person has had to go ask the specific group within NI that develops the product. The NI forums are monitored by NI personnel, so you might have better luck there on this one. https://forums.ni.com/t5/Industrial-Communications/bd-p/260

EtherNet IP toolkit to use for point I/O Allen Bradley

Tim_S replied to CarlosLuevano's topic in LabVIEW General

I've only tried to get the Ethernet/IP toolkit working with a Rockwell S7-300 PLC. We set up the PC/PLC communication as an assembly (remote I/O in the PLC) so we could transfer a block of data. We did this rather than reading/writing tags because there was 7 ms of overhead per tag and we had hundreds of tags. There was the option of creating a complex tag (a tag consisting of tags); however, that required a lot of encoding/decoding work using Rockwell documentation that was not easily located. I expect you'll find the Ethernet/IP toolkit cannot act as a master in the system (not certain of the right term for Ethernet/IP; the NI and Rockwell terminology doesn't quite match), and the Point I/O will require one to communicate.
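
To put rough numbers on the tag-versus-assembly trade-off, and to show what "transfer a block of data" means, here's a Python sketch. It is not the NI toolkit's API. The assembly layout (two DINTs followed by two REALs) is hypothetical, since the real layout is whatever you configure in the PLC. The block is packed little-endian, which is CIP's byte order.

```python
import struct

PER_TAG_OVERHEAD_S = 0.007  # ~7 ms per individual tag read/write, as measured above

# Hypothetical assembly layout: 2 x DINT (int32), then 2 x REAL (float32), little-endian.
ASSEMBLY_FORMAT = "<2i2f"


def tag_scan_time_s(num_tags: int, overhead_s: float = PER_TAG_OVERHEAD_S) -> float:
    """Time spent on per-tag overhead alone when each tag is accessed individually."""
    return num_tags * overhead_s


def pack_assembly(counts: tuple[int, int], values: tuple[float, float]) -> bytes:
    """Encode all fields into one contiguous block, the way an assembly moves them."""
    return struct.pack(ASSEMBLY_FORMAT, *counts, *values)


def unpack_assembly(block: bytes) -> tuple[tuple[int, int], tuple[float, float]]:
    """Decode a received block back into its fields using the same layout."""
    a, b, x, y = struct.unpack(ASSEMBLY_FORMAT, block)
    return (a, b), (x, y)
```

With 300 tags, `tag_scan_time_s(300)` comes to about 2.1 s of overhead per pass. The assembly moves the same data as one 16-byte (or larger) block per exchange. The cost is that both sides must agree on the layout exactly, which is the same encode/decode burden the complex-tag route had, just under your own control.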

Your request is like asking for a car with cruise control. There's a lot that goes into picking a camera, lens and (most importantly) a lighting system. Your best bet is to contact the local supplier and work with the salesman on your requirements. The lighting can make or break an entire system, so a supplier who can loan you different lights to try out is a godsend.

-

If you go to the National Instruments website and search for "Unicode", you will get a listing that includes this document. That should give you an idea of how LabVIEW handles wide and Unicode character sets.

-

NI started recommending virtual machines at the last seminar I was at. With the different versions of LabVIEW and drivers, it's been the only sane way to manage. My IT department is balking at the idea because Microsoft sees each VM as a different PC, so one physical (non-server) PC may get hit for many Windows licenses.

-

I have to second an LTS version. It is challenging to develop a product with LabVIEW given the version updates and which drivers support which versions. My development cycle has been about every 3 years, so I'm never calling tech support on the most recent versions of LabVIEW.

-

Your best resource to start with is the examples that ship with LabVIEW. You can find the examples under Help->Find Examples.

-

Lawyers can only be as good as the information provided to them; IT may have provided incorrect information. There can be a world of difference between using an open source application and using open source code in an application.

-

Hrm... Some Google-fu indicates that USB is limited to 127 devices per host controller, but you can run into bandwidth issues. Powering devices off the USB port could cause problems as well if you exceed the current capability of the port.
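
Here's a rough budget check along those lines as a Python sketch, limited to USB 2.0. The limits are specification figures: 127 addresses per host controller (external hubs use addresses too), 500 mA from a standard USB 2.0 port, and a 480 Mbit/s nominal high-speed rate shared by everything on the bus. Usable throughput is well below the nominal rate, so the `usable_fraction` default of 0.6 is an assumption rather than a spec value. The device names and figures in the usage are hypothetical.

```python
from dataclasses import dataclass

MAX_DEVICES_PER_CONTROLLER = 127   # 7-bit address space, address 0 reserved; hubs count too
USB2_PORT_CURRENT_MA = 500         # standard USB 2.0 downstream port
USB2_HIGH_SPEED_BPS = 480_000_000  # nominal signalling rate, shared by the whole bus


@dataclass
class Device:
    name: str
    current_ma: int     # current drawn from the port (assumes one device per port)
    data_rate_bps: int  # sustained data rate the device needs


def check_usb2_controller(devices: list[Device], usable_fraction: float = 0.6) -> list[str]:
    """Return a list of likely problems; an empty list means nothing obvious."""
    problems = []
    if len(devices) > MAX_DEVICES_PER_CONTROLLER:
        problems.append(f"{len(devices)} devices exceeds the {MAX_DEVICES_PER_CONTROLLER}-device limit")
    for d in devices:
        # Current is a per-port limit, so each device is checked on its own.
        if d.current_ma > USB2_PORT_CURRENT_MA:
            problems.append(f"{d.name} draws {d.current_ma} mA, over the {USB2_PORT_CURRENT_MA} mA port limit")
    # Bandwidth is shared, so all devices' rates add up against one budget.
    total_bps = sum(d.data_rate_bps for d in devices)
    budget_bps = USB2_HIGH_SPEED_BPS * usable_fraction
    if total_bps > budget_bps:
        problems.append(f"total {total_bps} bit/s exceeds the ~{budget_bps:.0f} bit/s budget")
    return problems
```

For example, a hypothetical camera needing 200 Mbit/s plus a DAQ device needing 100 Mbit/s passes the current check but trips the bandwidth budget, long before the 127-device limit matters.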

-

Hadn't thought of a VM... that's a good thought. It's a good technical solution, though I'm not sure it's a good one for non-technical reasons (e.g., can the customer work with it when we're not given remote access to the machine?). I'll have to give that serious consideration.

-

Perfectly understandable... NI nearly got me to jump to 2016 with a couple of features, but I had too much invested in 2015 compared with the benefits. Still waiting for the long-term-support version of LabVIEW. Hrm... I like the time improvements. The improvements won't affect how I'm using JSON, though, so no worries.

-

I'm back on LabVIEW 2015, so can't test it... what kind of performance does this have versus the JSON API?

-

I'm using Windows, so I wonder if there is an issue with the build in the Windows installer rather than the Linux packages. I did an uninstall/reinstall/setup-from-scratch and had the same issue.

-

Thanks. I'm assuming someone will want to mine data outside of what I provide, so ODBC is part of my requirements. I tested with PostgreSQL 9.6 64-bit. The 64-bit ODBC driver would set up in the Windows ODBC Data Source Administrator and test as working. As soon as I tried using it, I got a version incompatibility error (64-bit ODBC with a 32-bit application). I tried the 32-bit ODBC driver and that worked OK. There was strange behavior with SELECT and INSERT statements: any time I tried specifying a column in a table, the error indicated the column was not part of the table (this happens even in pgAdmin 4). Could be corruption, a bug in something I did, corporate IT settings, a butterfly flapped its wings... Not sure what is going on. MariaDB 10.2 has worked out of the box. There are features I prefer in PostgreSQL (better documentation, built-in backup and recovery strategies, its method of accessing information in JSON...), but it's (at least initially) more complicated to set up. HeidiSQL has a more straightforward interface, but it looks like installing plugins to get the features I'm looking for is going to be interesting (there's documentation on how, but what I've found is written for UNIX/Linux rather than Windows, though that seems to be true of much of PostgreSQL as well).
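
That version-incompatibility error comes down to bitness. The ODBC driver has to match the application that loads it, not the OS. On 64-bit Windows the 32-bit and 64-bit ODBC Data Source Administrators are separate programs, and each one only lists drivers of its own bitness. Here's a small Python sketch that reports which administrator goes with a given application bitness. It assumes Windows is installed in the default `C:\Windows`. Pass the bitness of the application that will use the DSN (e.g. a 32-bit LabVIEW), not Python's own.

```python
import struct

# Default locations on 64-bit Windows. The names are counter-intuitive:
# System32 holds the 64-bit administrator, SysWOW64 holds the 32-bit one.
ODBC_ADMIN = {
    64: r"C:\Windows\System32\odbcad32.exe",
    32: r"C:\Windows\SysWOW64\odbcad32.exe",
}


def process_bits() -> int:
    """Bitness of the running process: pointer size in bytes times 8."""
    return struct.calcsize("P") * 8


def odbc_admin_for(app_bits: int) -> str:
    """ODBC Data Source Administrator that configures drivers an app of this bitness can load."""
    if app_bits not in ODBC_ADMIN:
        raise ValueError(f"unexpected bitness: {app_bits}")
    return ODBC_ADMIN[app_bits]


if __name__ == "__main__":
    bits = process_bits()
    print(f"This Python process is {bits}-bit; its DSNs belong in {odbc_admin_for(bits)}")
    print(f"A 32-bit application needs a driver set up in {odbc_admin_for(32)}")
```

A DSN can test as working in the 64-bit administrator and still fail from a 32-bit application, which matches the behavior described above.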

-

Did you try to get ODBC working with PostgreSQL? I'm having some out-of-the-box issues with that, and I see you posted a communication package you were working on in the other thread.

-

Appreciating people's responses. Thanks for the link!

-

I'm reviewing how we're storing data and looking at databases. As I'm looking through the hundreds of database options out there, I began wondering what other people are using for databases and what they are using them for. To start off: we're currently using files for local test record storage, but also use SQL Server to aggregate data.

-

My customers in China require the translated manuals before we do training so they can read them in their entirety. Maintenance tends to keep a copy for their tasks (some related to software). Otherwise we do a lot of 'well, let's look at the manual... ah, here on page...' only to discover the dead-tree manuals were long ago lost or recycled.

-

Network-published shared variables work fine in the runtime environment. Do you have the Shared Variable Engine installed on the runtime-only PC? If you're going across PCs, then you want to look at the shared variable primitives rather than the global/local-looking variable on the client side. You'll need to specify an IP address; the primitives are a good route to do that.

-

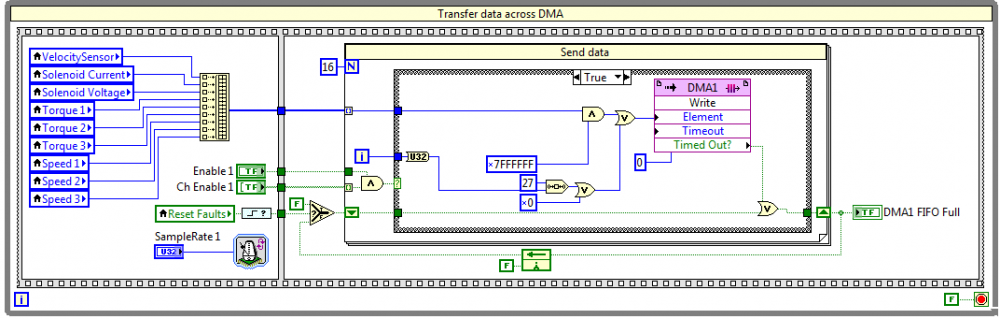

Data transfer strategies for FPGA-RT-Host

Tim_S replied to Max Joseph's topic in Application Design & Architecture

The ideal approach really depends on your application. Your description sounds like you're streaming large amounts of data from FPGA->RT->PC. I have an application that is similar in that the cRIO is a glorified DAQ card (at least initially). The design was able to handle 9 channels at 1 MS/s and 9 channels at 1 kS/s simultaneously. I ran an overnight test checking that all 18 channels arrived at the PC without loss, and the design has been in use (at much lower, less intensive transfer rates) for over a year, so it should be solid, though I expect there is a lot of room for improvement.

FPGA side... The FPGA folds, spindles and mutilates the signals until each is a U32 value. The top byte contains a 'channel number' and the bottom 3 bytes contain the data. This gives 256 possible channels and up to 24 bits of resolution. This gets transferred across a DMA FIFO to the RT. The commands to the FPGA (e.g., sample rate) are done by reading/writing the controls from the RT side. This is doubled so I can have the two sample rates on the same channels.

RT side... The RT side grabs whatever data is in the DMAs (which are as big as I could make them) and puts it in a circular buffer. The circular buffer gets sent to the PC over a network stream at regular intervals.

PC side... The PC side connects to the network stream using the primitives. The channel identifier (top byte) is used to sort the data into an array of waveforms. If I recall right, CPU usage blips up to about 5% on my test machine (2.6 GHz i7).
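
The U32 layout and the RT/PC steps can be sketched in Python to make the bit manipulation concrete. This is an illustration of the scheme described, not the LabVIEW code. Two things are assumptions: that the 24-bit data may be signed (optional sign extension), and that the circular buffer overwrites the oldest words when full (the post doesn't say what happens on overflow). `SampleRing` and the other names are hypothetical.

```python
from collections import deque

CHANNEL_SHIFT = 24
DATA_MASK = 0x00FF_FFFF  # bottom 3 bytes


def pack_sample(channel: int, value: int) -> int:
    """FPGA side: top byte = channel number, bottom 3 bytes = data, as one U32."""
    if not 0 <= channel <= 0xFF:
        raise ValueError("channel must fit in one byte (0-255)")
    return (channel << CHANNEL_SHIFT) | (value & DATA_MASK)


def unpack_sample(word: int, signed: bool = False) -> tuple[int, int]:
    """PC side: split a U32 back into (channel, data)."""
    channel = (word >> CHANNEL_SHIFT) & 0xFF
    data = word & DATA_MASK
    if signed and data & 0x80_0000:  # sign-extend 24-bit two's complement
        data -= 1 << 24
    return channel, data


class SampleRing:
    """RT side: fixed-capacity circular buffer; oldest words are dropped when full."""

    def __init__(self, capacity: int):
        self._buf: deque[int] = deque(maxlen=capacity)

    def push(self, words) -> None:
        """Append whatever was read from the DMA FIFO."""
        self._buf.extend(words)

    def drain(self) -> list[int]:
        """Take everything buffered so far (e.g. to write to the network stream)."""
        out = list(self._buf)
        self._buf.clear()
        return out


def demux(words, signed: bool = False) -> dict[int, list[int]]:
    """PC side: sort interleaved words into per-channel lists keyed by channel number."""
    channels: dict[int, list[int]] = {}
    for w in words:
        ch, data = unpack_sample(w, signed)
        channels.setdefault(ch, []).append(data)
    return channels


def bytes_per_second(num_channels: int, rate_hz: int, bytes_per_sample: int = 4) -> int:
    """Raw throughput for U32 samples; the link has to sustain at least this."""
    return num_channels * rate_hz * bytes_per_sample
```

For the rates above, `bytes_per_second(9, 1_000_000) + bytes_per_second(9, 1_000)` gives 36,036,000 bytes/s, roughly 36 MB/s that the DMA, circular buffer and network stream all have to keep up with. One advantage of tagging each word with its channel is that the PC can demux without relying on strict interleaving order, so mixing the two sample rates in one stream costs nothing extra.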

Ah, good to know. Thanks!

-

May be easier said than done. VIPM 2017 installed with LabVIEW 2017 for me. My coworker tried downloading from JKI's website and got 2016 instead. That was a week or so ago, so it might be fixed now.