Posts: 3,463

Days Won: 298

Everything posted by hooovahh

-

Thanks for the tips, AQ. What about Automatic Error Handling and Debugging? Shouldn't those be turned off too? In either case, I just re-ran the timing test from above, and it isn't a surprise that the XNode is still much, much faster. With the changes you suggested and 100,000 loops, the XNode registered 0 ms and my version with OpenG took 8724 ms. I inlined the OpenG VIs out of curiosity and it went down to 8413 ms. I'm torn because I like this XNode, but I won't add it to my reuse tools because it is an XNode, which is why I tried implementing a solution that is just as easy to use but built with OpenG. Attached is my benchmark, saved in 2012: Selector Speed Test.zip

-

Using timestamps to integrate data at specific values

hooovahh replied to kap519071680's topic in LabVIEW General

Very true. I was thinking this would be used in a finite-sample type of situation, but yes, if you are doing this continuously you'll need some extra work. It was just meant as an example anyway.

- 9 replies

Tagged with: labvew, integrating (and 3 more)

-

Using timestamps to integrate data at specific values

hooovahh replied to kap519071680's topic in LabVIEW General

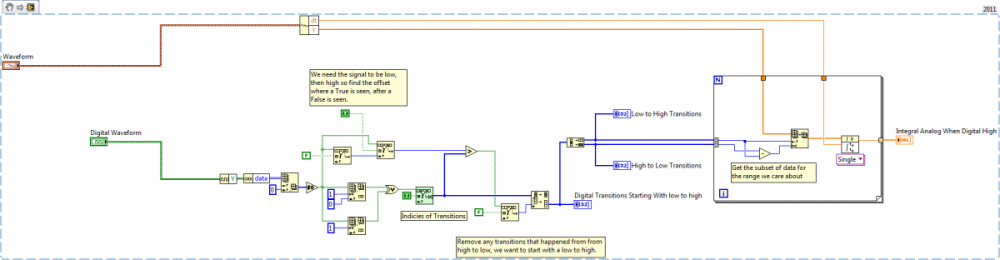

Using the transitions output would probably be easier, but for my test I created the analog and digital data. I think it works, but you'll want to check my work of course. I don't have 8.0 (which your profile says you use), so I attached an image as well as the VI saved in 2011: Integrate Analog Digital Values.vi

- 9 replies

Tagged with: labvew, integrating (and 3 more)

-

Create pictures with different opacity levels

hooovahh replied to GregPayne's topic in Machine Vision and Imaging

Saved in 2010: Get Image Data From PictureBox 2010.vi

-

Create pictures with different opacity levels

hooovahh replied to GregPayne's topic in Machine Vision and Imaging

If you are interested in getting your image back into a LabVIEW data type, you can use the VI I attached. Provide the Image from the PictureBox and you'll get the Image Data back out in PNG format. From here you can perform a save, and I believe transparency will be kept. If you try to display this image in a 2D Picture, the transparency will be lost. Using this PNG to Stream or Stream to PNG dance, you can go from the PictureBox and system drawing tools to the LabVIEW tools and back. Get Image Data From PictureBox.vi

-

Using timestamps to integrate data at specific values

hooovahh replied to kap519071680's topic in LabVIEW General

Are the Analog and Digital samples driven from the same clock? Are they sampled at the same rate? Are they started at the same time? If you answer yes to any of these, your life can get a little easier. Saying no to all of these questions means lots of manual code searching for times that match up. That will likely take much more time, but it is still doable.

- 9 replies

Tagged with: labvew, integrating (and 3 more)
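For the easy case in the reply above, where the analog and digital samples share one clock and start together, sample i of one lines up with sample i of the other, so the integration reduces to a loop over indices. LabVIEW is graphical, so this is only a rough Python sketch of the idea and not the attached VI. The function name, the sample data, and the choice of the trapezoid rule are all assumptions made for illustration.

```python
def integrate_while_high(analog, digital, dt):
    """Trapezoid-rule integral of `analog` over the intervals where the
    digital line is high at both ends of the interval.

    Assumes both lists come from the same sample clock, so index i in
    `analog` and index i in `digital` were taken at the same instant,
    `dt` seconds after index i - 1.
    """
    if len(analog) != len(digital):
        raise ValueError("analog and digital must have the same length")
    total = 0.0
    for i in range(1, len(analog)):
        # Only count an interval if the digital line stayed high across it.
        if digital[i - 1] and digital[i]:
            total += 0.5 * (analog[i - 1] + analog[i]) * dt
    return total


# Made-up example data: a ramp on the analog channel, digital high in the middle.
analog = [0.0, 1.0, 2.0, 3.0, 4.0]
digital = [False, True, True, True, False]
area = integrate_while_high(analog, digital, dt=0.5)
```

If the two channels are not on the same clock, the index-matching assumption no longer holds and the timestamps have to be searched and matched first, which is the harder case described in the post.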

-

Create pictures with different opacity levels

hooovahh replied to GregPayne's topic in Machine Vision and Imaging

There are other members on the forums with more experience than I have, but what I do know is that LabVIEW doesn't really support transparency. It does support the data, but not displaying the alpha layers. http://digital.ni.com/public.nsf/allkb/00736861C29ADFB786256D120079D119 Because of this, people have come up with many tricks to get around it, like reading the color of the background and then merging it with the alpha layer to come up with a pretend color that looks semi-transparent. Other tricks involve using the Windows .NET PictureBox control, which does support alpha layer information. I've had success with this in the past, but only to display already-made images that had alpha characteristics.

-
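The background-merging trick mentioned in the transparency reply above comes down to a per-channel weighted average of the foreground and background colors. Here is a rough Python sketch, assuming 8-bit color channels and an 8-bit alpha value. `blend_over_background` is a made-up name for illustration and not a LabVIEW or .NET function.

```python
def blend_over_background(fg, alpha, bg):
    """Return the opaque (r, g, b) color that a pixel with color `fg` and
    opacity `alpha` appears to have when drawn over background color `bg`.

    fg, bg: (r, g, b) tuples with channels in 0..255.
    alpha:  0 (fully transparent) .. 255 (fully opaque).
    """
    if not 0 <= alpha <= 255:
        raise ValueError("alpha must be in 0..255")
    # Integer math with rounding: (alpha*f + (255 - alpha)*b) / 255
    return tuple(
        (alpha * f + (255 - alpha) * b + 127) // 255
        for f, b in zip(fg, bg)
    )


# Made-up example: half-transparent red over a blue background.
pretend_color = blend_over_background((255, 0, 0), 128, (0, 0, 255))
```

The result is an ordinary opaque color, so it only looks right as long as the background behind the picture does not change.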

Personally my activity on LAVA (and NI) has increased a lot in the last "several years". Recently I've crossed over the 1000 post count on both forums. I never plan on attaining any status like Crelf here, or Altenbach on NI but I wanted to be more active. My current employer even made it a goal of mine last year to be more active on LAVA. Bonuses and pay raises are based on how well you work toward your goals so you could say I have added incentive.

-

Labview is packed with wonderful pieces at the cutting edge of fashion

hooovahh replied to Jim Kring's topic in LAVA Lounge

Now NI will sue the pants off of them. See what I did there? Because they're a clothing store? /crickets

-

First, the original question. It sounds like you don't have DAQmx installed. This is a driver add-on that also adds functions to your palette. It is a rather large download, and the latest version has no support for 2009, so you'll want to check the release notes for the right version. As for integrating a zip: what is in this zip? How was it made? I'm guessing someone just zipped the user.lib, but without knowing what's in it, it is impossible to say how it should be used.

-

Changing colors in a specific part of image

hooovahh replied to wewtalaga's topic in Machine Vision and Imaging

The Draw Flattened Pixmap doesn't accept an array or output an array. If you want more information about a function, open the context help by pressing CTRL + H, or by clicking the help button and then mousing over the function. From here you can click Detailed Help for even more information.

-

Sorry to hear this. I'm sending Michael a message to see if he has a fix. In the meantime, what you can do is go to the user who posted the spam and report them instead of reporting the single post.

-

This is not typical of a LabVIEW-built EXE, and I have only ever seen it in programs that have issues finding some external component of the EXE, like .NET assemblies, DLL calls, or dynamic VI calls. I'd suggest building an EXE with debugging turned on. Then when it loads it might give a reason, such as the actual loading location of a component being different from the expected location.

- 9 replies

Tagged with: labview, deployment (and 1 more)

-

Synchronizing digital and analog waveforms

hooovahh replied to kap519071680's topic in LabVIEW General

If you didn't pick up on it before, a useful technique is to simulate the hardware in MAX, and then your code will function as if the hardware was connected. This is obviously not the same as real hardware, but it can help catch problems like this. Here is a white paper on simulating hardware: http://www.ni.com/white-paper/3698/en/

- 6 replies

Tagged with: waveform synchronization, digital i/o (and 2 more)

-

Synchronizing digital and analog waveforms

hooovahh replied to kap519071680's topic in LabVIEW General

All I can do is simulate a myDAQ in MAX, but the testing I did kept giving errors stating that the route for the timing source to the digital read wasn't allowed. The easiest way to do this is to provide the DI with a sample clock from the built-in 100 kHz timer. I also tried using the single counter the myDAQ provides, and the FREQ OUT, which can be configured to run at different rates with 6250 Hz as the slowest, and it also reported an error with the route being attempted. I haven't used the hardware enough, but it appears it doesn't support what you are trying. You should get the opinion of someone with more experience with this hardware before giving up.

- 6 replies

Tagged with: waveform synchronization, digital i/o (and 2 more)

-

Synchronizing digital and analog waveforms

hooovahh replied to kap519071680's topic in LabVIEW General

Welcome to the forums, and congratulations on using a myDAQ with the DAQmx API instead of the DAQ Assistant or some other myDAQ Express VI. What you need to do is as you suggested: create a common clock that is shared between both tasks. So you would make an analog task and, for the timing source, provide an internal clock channel. Depending on your hardware there are several, and some are more flexible than others. You then make a digital reading task that uses the same clock source, and then you start your analog and digital tasks. Neither one will take samples until the clock source you created has started, but when it does, samples will be taken from both at the same time. So the third task you need to start is the one associated with the common clock source.

Another method, which I just found by searching and have never tried, looks like it creates the analog task and then uses the clock from that task to read from the digital task. It can be found here: https://decibel.ni.com/content/docs/DOC-12185

Also notice in that example how they only create the tasks once and close them once. This is a better way of programming because there is overhead from opening and closing the task over and over. Also, you are doing finite reads, so your data won't be continuous: it will have breaks between loop iterations and data will be lost, whereas the example I linked to will continue reading where the last read left off.

EDIT: For any of this to work, your hardware needs to support providing the internal clock to both tasks. I think the myDAQ should be able to do this, but I have never done this with that hardware.

- 6 replies

-

- 1

-

Tagged with: waveform synchronization, digital i/o (and 2 more)

-

How do you make your application window frontmost?

hooovahh replied to Michael Aivaliotis's topic in User Interface

Thanks for the tip. I understand the edge case, and so far the applications I have built haven't seen any strangeness, but I will make that change for future applications. I think the reason I stuck with U32 was that the WIN API I linked to earlier used U32 for all the HWND values, but that was developed before 64-bit Windows. @ThomasGutzler the VI you posted ran and worked as expected without error or crash. The EXE ran without crash or error, but it didn't actually bring the window to the front. EDIT: A quick search turned up someone over on Stack Overflow saying that even in 64-bit Windows, only the lower 32 bits of a HWND are used. The internet has been wrong before.

-
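To illustrate the U32 edge case from the HWND reply above: a window handle is pointer-sized, so on 64-bit Windows it is a 64-bit value, and storing it in a U32 keeps only the lower 32 bits. Below is a small Python sketch with made-up handle values. Whether real handles ever use the upper bits is exactly the open question in the post, and the helper names are hypothetical.

```python
U32_MASK = 0xFFFFFFFF


def truncate_to_u32(handle):
    """Keep only the lower 32 bits, as wiring a handle into a U32 would."""
    return handle & U32_MASK


def survives_u32(handle):
    """True if the handle comes back unchanged after a trip through a U32."""
    return truncate_to_u32(handle) == handle


# Made-up values for illustration, not real window handles.
small_handle = 0x000A0B2C      # fits in 32 bits
large_handle = 0x1000A0B2C     # same low bits, plus one bit above bit 31

small_ok = survives_u32(small_handle)
large_ok = survives_u32(large_handle)
```

If the Stack Overflow claim holds, every real HWND behaves like `small_handle` and the U32 version keeps working. Using a pointer-sized integer for the handle avoids having to rely on that.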

How do you make your application window frontmost?

hooovahh replied to Michael Aivaliotis's topic in User Interface

For me, I use code similar to this. It gets the HWND using a VI reference instead of the window title. This is more robust for things that may be in subpanels or hidden, but in most cases the get HWND from window title you posted should work. Where we differ more is on the make window top. The VI I have for set Z order comes from code posted here. I've used this technique in built EXEs without a problem. EDIT: OpenG Error and Application Control are required. Make Window Top.zip

-

The one I hate is something like "An attribute of this VI has changed". Well, thanks, I guess. One thing I've seen is that when going from an x64 to an x86 machine, the path to "Program Files (x86)" would sometimes cause VIs to need to be re-saved, but this is with the source, not the toolkit.

-

Relevant XKCD.

-

Should an application have File >> Exit?

hooovahh replied to Aristos Queue's topic in LabVIEW General

What I was referring to is developers who use a big red stop button to stop a while loop from running, leaving their programs in limbo where the program isn't running but the run-time engine is still open. Stopping an operation or function means something to an operator. Stopping the program might not be what they are thinking about.

-

Topic moved. Next time feel free to use the report button.

-

Should an application have File >> Exit?

hooovahh replied to Aristos Queue's topic in LabVIEW General

You mean a giant red boolean labeled "STOP". Because to a user using an application, stop obviously means exit or close, right? Stopping the execution of the program isn't what I think of when I am trying to close File Explorer or Chrome.

-

Should an application have File >> Exit?

hooovahh replied to Aristos Queue's topic in LabVIEW General

I realize that you shouldn't really give a user too many ways of doing the same operation. Otherwise you get a fragmented experience between users. But I think this case is common enough that maybe some percentage of users prefer File >> Exit (for whatever reason) over another method of exit. Do I use File >> Exit? Nope, but it's in my programs. If I was using a program and found File >> Exit was missing I wouldn't care, but in the back of my mind I would think the developer forgot to put it in, rather than deciding to leave it out. Oh, and I just checked Word 2010 and File >> Exit is still there. Has it been removed in newer versions? Now that I'm looking at it, I notice they moved the help to be under File, which I also don't like.

-

How to programmatically get the formula node content

hooovahh replied to maxprs's topic in LabVIEW General

I'm not saying you can't do what you are suggesting, but if you are choosing to effectively store the data when running in the development environment and load it when in the run-time engine, it would be much easier to just deal with the string value, which can be pulled out and saved as a constant. What I am describing is the code that I posted earlier, which deals with the string value and not the image.