Posts: 1,824
Days Won: 83

Everything posted by Daklu

-

I've been working on a cRIO-9074 project recently primarily via remote desktop into the customer site. This morning I discovered my connection was down and there was nobody around to restart it. I couldn't really do nothing all day, so I cloned my project, ported it to an sbRIO I had on hand, and continued working. I discovered I really like being able to easily shift between RT/FPGA targets. It makes development so much easier and faster compared to remoting in to a client's site. My question is what are some good ways to manage code so it can run on multiple target types? Currently I have two targets in my project, one sbRIO and one cRIO. All the source code is listed under both targets. It seems to work, but there's a lot of bookkeeping to track because many resources aren't shared between targets. (FIFOs, registers, memory blocks, build specs, etc.) It requires me to keep all the resource names synched and make sure the properties are identical. I'm constantly worrying about screwing it up. Is there a better way to do this? I noticed the sbRIO-9636 uses the Spartan 6 LX45 and the 9074 uses the Spartan 3 with 2M gates. I assume I cannot use the bitfile from one for the other? I've been using conditional disable structures a bit and noticed there is a TARGET_CLASS symbol available when using it on an FPGA vi. The help files don't give any information about that symbol. Anyone know what it does?

-

Application Task Kill on Exit?

Daklu replied to hooovahh's topic in Application Design & Architecture

I have to ask... how did you do this? -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

My apologies. That's what I get for not rereading the whole thread. -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

Additional follow up question: Do you envision a use case for using these two features independently, or does using one of them automatically entail using the other? I can kind of see how one would want to use them together as a single feature, but as independent features...? ----------- I believe Jack is on his way to Paris, so may not be able to respond for a couple days. Here's my response to Shane and MJE's comments based on my understanding. (Still haven't gone back to the LapDog example you posted and I'm not trying to put words in your mouth, Jack. Please slap me down if I'm wrong.) Jack's goal is to help users create the child class correctly in the first place. He wants to help users avoid mistakes in the initial implementation, not necessarily make it easier to maintain an existing code base. If that's true, there may be untapped value in the idea, but I'm not convinced that is how the technology is best applied. One of my fundamental beliefs regarding API development is trying to help end users too much via restrictions or conveniences usually does more harm than good. The best APIs are conceptually simple. If the concept is simple to understand then there's no reason to take extra precautions to prevent them from making a mistake. Assuming MI and MCP are always used together and are restricted to a link between one parent method and one child method, there is no situation where Create Message.vi cannot define a conpane. If Create Message.vi doesn't exist, then there's no way to flag it as MI, just as there's no way to flag a non-existent method with MO. The use case is for object injection scenarios where one developer (i.e. Jack) is writing an execution framework, and another developer (i.e. me) is writing subclasses for my app because I want to use that framework. The framework requires all objects to go through some sort of initialization routine during creation. 
If they do not go through this process then they will not work correctly within the framework. The calling code doesn't need to know about Create StringMessage because the framework never calls that method. It's up to the user to call the method and give the StringMessage object to the framework for processing. The framework itself calls the other methods defined by the Message class. Supposing the Message class had three methods, A, B, and C, and the framework design dictated they were always called in that order, then an easy way to get that guarantee is to create a protected initializing method, I, flag it MCP, and call that first. (I-A-B-C) If the framework design allows A, B, and C to be called in any order, then there are significant design tradeoffs the framework designer is faced with. I can...
1. Insert I in the parent methods, flag them as MCP, and take the run time hit for checking to see if the object is initialized every single time, or
2. Not do anything and hope the end user is smart enough to figure out why his objects aren't working in the framework.
Jack doesn't want to do #1 and wants to provide a better user experience than #2. MI is a reminder for the child class developer that the child class 'must implement' the same kind of behavior defined in the parent method carrying the MI flag. It can't guarantee end users will do the right thing, but he hopes it will guide the users toward the correct implementation. Overall I agree with Todd's assessment of the situation (though not of his abilities.) Low level users will still screw it up and high level users don't need it. -
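
To make option #1 concrete, here is a rough sketch of what "check on every call" looks like. LabVIEW code is graphical, so Python stands in for it here; the class and method names (`Message`, `StringMessage`, `ForgetfulMessage`, `_initialize`, `a`/`b`/`c`) are made up for illustration and are not taken from Jack's framework or from LapDog.

```python
class Message:
    """Hypothetical framework-owned parent class (names are illustrative)."""

    _initialized = False  # default state: the object has not been through "I"

    def _initialize(self):
        # Stand-in for the protected initializing method "I".
        self._initialized = True
        return self

    def _check_initialized(self):
        # The run time hit from option #1: this executes on every call.
        if not self._initialized:
            raise RuntimeError(
                f"{type(self).__name__} was not initialized before use"
            )

    def a(self):
        self._check_initialized()
        return "A"

    def b(self):
        self._check_initialized()
        return "B"

    def c(self):
        self._check_initialized()
        return "C"


class StringMessage(Message):
    """Child class whose creator does the right thing."""

    @classmethod
    def create(cls, text):
        msg = cls()
        msg.text = text
        return msg._initialize()


class ForgetfulMessage(Message):
    """Child class whose creator forgets to run the initializer."""

    @classmethod
    def create(cls, text):
        msg = cls()
        msg.text = text
        return msg  # _initialize() was never called
```

With this arrangement the framework can call `a`, `b`, and `c` in any order, and a child created incorrectly fails loudly on first use instead of misbehaving quietly, which is roughly the failure mode option #2 leaves the end user to puzzle out.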

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

There's still some fundamentals I'm missing here, 'cause I'm slow like that. Once I understand them I'll go back and look at how they apply to the LapDog example you gave. My apologies if these questions have been discussed already. I'm trying to organize the ideas so I can process them. With dynamic dispatching a 'Must Override' method imposes the requirement that child classes must have a method of the same name. The static dispatch equivalent, a 'Must Implement' method, imposes the requirement that child classes must have a method not of the same name (since they are sd and can't have the same name) that is somehow marked as fulfilling that requirement. The intent, I assume, is to force the child class to implement a static dispatch behavioral equivalent to the parent class method with the 'Must Implement' requirement. (i.e. Parent ctor is static dispatch and flagged MI, child class has to implement a ctor and tag that method as filling the MI requirement.) On track so far? With dd a 'Must Call Parent' flag on the parent method requires the overriding method to invoke the parent method somewhere on the block diagram. The sd equivalent on the parent method will require the child method that fulfills the MI requirement also invoke the parent method that imposes the MI requirement on the child method's block diagram. Am I getting it? Is the primary use case for this functionality ctors? -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

Quick question: When you say Must Implement, are you using it to mean Must Call or Must Invoke? In some languages, implements is a keyword associated with Interfaces to indicate the class contains the source code to execute the methods defined by the Interface. In other words, putting a must implement requirement on a vi implies (to me) that the developer of the target vi has to write the source code that does some bit of arbitrary functionality. Based on your post above, it seems like you really want to make sure the target vi developer calls some other arbitrary vi on the block diagram of the target vi. (Must Invoke.) Is that accurate? -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

Very briefly... (yeah, as if) That comment was actually intended for Greg. I'd long since forgotten what you were looking for and didn't have the energy to go reread everything. I think my comment is applicable to his situation, even though it is not applicable to yours. In later posts I assumed your situations were similar based on AQ's drawing a parallel between the two. (Like how I shifted the blame there?) I don't care that you responded to a comment I intended to Greg's situation--you referenced LapDog. You're aces, Jack. <Spur of the moment non sequitur> I just invented a new game named SighJack based on that last sentence. The object of the game is to fit as many references to a deck of cards as possible into a single sentence in the course of a normal conversation with you. Referencing the numbers 1-10 and the colors red and black doesn't count. Too easy. The words jack, queen, king, ace, spade, heart, diamond, and club are eligible. Why SighJack? Because you'll get so tired of the game you'll sigh in resignation every time someone does it. And it sounds very similar to hijack, which is what's going to happen to all your conversations now. Entries are awarded one point for each eligible word with multipliers given for style. Jackpacking--unnaturally cramming eligible words into the sentence out of the context of the conversation--is penalized by disqualification. My entry has two eligible words for 2 points, and a 2x multiplier for smoothness. Oh, and a 1 gajillion multiplier for being the first entry in existence. (All future multipliers must be approved by me.) Total score: 4 gajillion. (Yep, it took me all of 1.7 seconds to create the game. Thinking of the name and typing everything out took another 10 minutes.) </resequitur> It's waaaayyyyyy past the stupid hour for me, there's a lot of stuff in your post to cognitize, and I think it will be several days before I can spend sufficient time figuring it out. 
But I promise I will, because you referenced LapDog. That was a kingly thing to do, Jack. (Booo... DQ for Jackpacking.) -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

Okay... then to answer Jack's original question: Or is this desire for "Must Implement" indicative of poor OO design choices? Yes, insofar as the design choices have led you to need some capability LVOOP, as a single-dispatch OO system, cannot provide for you. If LVOOP supported multiple dispatch it might not be a poor design choice. And to address the question in the topic title... Must Override exists; could Must Implement? I don't know. I see a lot of problems with actually implementing the concept. Regardless, I think there are better ways to address your particular concerns. Must Implement feels a lot like a hack. I've never liked the phrasing of the SRP, but I confess I can't think of a better way to say it. In Greg's case, you could define the DAQ Output class' responsibility as "write data to the DAQ output," and that sounds a lot like just "one thing." But you could also say its responsibility is to "check the data type to be written to the DAQ and the type of DAQ card being used, then invoke the appropriate dynamic dispatch vi to handle those data types." That doesn't sound anything like "one thing." When you get right down to it, the SRP (as I understand it) simply means to make sure you are assigning responsibilities to your classes such that the behavioral change implemented in all your subclasses occur along a single axis. Greg's DAQ Output class wants to change behavior along two axes: the DAQ card and the data type to be sent on the DAQ output channel. Clearer? Greg and Jack both want "Must Implement" so they can define some behavior in a child class they currently don't have the ability to define. Why can't they use "Must Override?" Because overriding methods require identical conpanes. Why is that a problem? Because they want the overridden method to dispatch on multiple inputs. Remove the expectation of multiple dispatching (or add multiple dispatching to LVOOP) and the request for "Must Implement" goes away. 
There may be a valid use case for a "Must Implement" requirement, but I don't think this is it. -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

I don't know if I am one of those you are referring to as arguing "everything should be public," but I don't think I made that claim at all. I assume you're referring to Shaun. I have claimed that putting restrictions on a function reduces its utility, which I loosely define as the number of places the function can be used successfully, but that's not the same as claiming everything should be public. There are definitely some elements in your argument that contradict my interpretation of the straitjacket's purpose, and given my interpretation the conclusion doesn't follow from the arguments you've presented. By and large I agree with a lot of the stuff you say in the post, but I disagree with your conclusion. Trying to suss out the specific areas of disagreement... This is the most obvious place where our understanding of straitjackets differs. First, whether the requirements are embedded in source code or applied via an external file isn't the important question in this discussion. The important question is, "who is responsible for declaring and applying the restrictions, the code author or the code user?" The mechanism for declaring restrictions can't be decided until after we figure out who is able to declare them. Second, the nature of the restriction isn't important. A restriction is a restriction. Labview allows us to declare certain kinds of restrictions (input types) and doesn't allow us to declare certain other kinds (execution time.) For this discussion let's assume all restrictions are declarable and verifiable. In the statement above you're setting up a straw man. No useful code can have *no* restrictions, else it doesn't do anything. As soon as you define something the code does, you're also implicitly defining things the code doesn't do, and that is a type of restriction. You go on to give several examples showing where the code author implemented the correct amount of code checking. 
In all of these cases he had knowledge of the environment where the code is going to be called and could set the restrictions accordingly. This is also where your argument starts to go astray, imo. In the math function example, the author chose to include array checking inside the function. That is a valid design decision, especially if he has complete knowledge of the calling context. As it turns out, he didn't have complete knowledge of all future calling contexts where someone would want to use the function, and, because he included array checking as part of the function, it couldn't be used in those other contexts. The built-in array checking prevented someone from using the exact behavior that function provided simply because it didn't meet an arbitrary requirement. He could have let the caller be responsible for array checking prior to calling the function. That trades away a bit of convenience in exchange for increased utility. He also could have written and exposed two functions, one implementing the basic operation without any checking, and another that checks the array and then calls the basic operation. That slightly increases overall complexity to get the utility gains. Was he "wrong" to include array checking in the function? No, not at all. Did it reduce the function's overall utility? Yep, absolutely. Where he was "wrong" was in his assumption that he knew all the contexts where the function would ever be called. Would prior knowledge of the other context have been sufficient justification to change the design? I don't know... that's a subjective judgment call. But it did have real consequences for someone and I don't think anyone would argue against him being better prepared to make a good decision if he is aware of other contexts that people might want to use the function than he is by assuming those contexts don't exist. What you're saying here is a little different than what I remember you saying before. 
Before you claimed the parent class should have the ability to declare arbitrary restrictions that all child classes must adhere to, because the calling vi might wish to establish some guarantees on the code it calls. Here, you don't explicitly say who should declare the restrictions. Are you still maintaining that all the contractual requirements between a calling vi and a callee parent class should be declared in the parent class? -
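
The "two functions" alternative mentioned above can be sketched in a few lines. This is only an illustration in Python: the thread does not say what the original math function computed or what the array check tested for, so the mean calculation and the empty/NaN checks below are assumptions chosen to keep the example small.

```python
import math


def mean_unchecked(values):
    """Basic operation with no validation; the caller owns input checking."""
    return sum(values) / len(values)


def mean_checked(values):
    """Convenience wrapper: validate the array, then delegate to the core."""
    if len(values) == 0:
        raise ValueError("values must not be empty")
    if any(math.isnan(v) for v in values):
        raise ValueError("values must not contain NaN")
    return mean_unchecked(values)
```

Callers who want the guard rails use `mean_checked`; callers in a context the author never anticipated (for example, one where NaN is a meaningful result) can still reach the exact same behavior through `mean_unchecked`. The cost is one extra public function to document.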

Ah, yes, another OO architecture question

Daklu replied to GregFreeman's topic in Object-Oriented Programming

You're the only one who can decide if a design decision is kosher or not. We can only tell you how we prefer to do it and what some potential consequences might be of the design decision. I don't think there's anything inherently wrong with giving the DAQmx Task to the visitor, if that division of responsibility provides you with the flexibility you need. It's been a while since I've done stuff with DAQmx and I don't remember the details of how it works, but with any shared resource it's easier to understand if only one "thing" has the ability to use it at any given point in time. Moving the Task reference between the acquisition object and the visitor object sounds like a bug hatchery. -

[Ask LAVA] Must Override exists; could Must Implement?

Daklu replied to JackDunaway's topic in Object-Oriented Programming

I agree with AQ on the practical difficulties of enforcing a "must implement" requirement, or even trying to figure out what it means. I'm not sure I fully understand your objectives, but I don't think the problem is the lack of a "must implement" flag. There is already a way to force child classes to implement a "Write" method--create it in the parent class and flag it must override. Your objection is that you need the connector pane to be different based on different data types and we can't do that with dynamic dispatching. If you need different connector panes on the input terminals, what you're usually asking for is double dispatching, or the ability to dynamically choose at runtime which vi is called based on two input types instead of just one. In your case, you would like that choice to be made based on both the type of the DAQ Output object and the type of the data to be written. Labview doesn't directly support double dispatching, and the need to do it is indicative of needing to revisit how you've assigned responsibilities to the classes and/or methods. What you are trying to do is a violation of the Single Responsibility Principle. The SRP is usually presented as "a class should only have one reason to change." Unfortunately that's very abstract and it's hard to understand exactly what it means in practice. In your particular case, it means "in an inheritance hierarchy, the runtime behavior (i.e. the block diagram defining the chunk of code that executes) should be completely defined based on a single input terminal." Or in more direct terms, "don't design your methods so they require double dispatching." (Yeah, I know... it's circular. It's one of those things you need to experience to really understand.) There are relatively easy ways to get the effect of double dispatching with languages that only support single dispatching, but it requires splitting your DAQ Output object into multiple classes. 
There are also ways you can kind of simulate multiple dispatching by using polymorphic vis and carefully managing the separation between how child classes inherit behavior from the parent and the users invoke the behavior. For an example of the latter, check how I implemented the EnqueueMessage behavior in LapDog.Messaging. (Drop an ObtainPriorityQueue method to get the libraries into your dependencies folder.) I wanted the EnqueueMessage method most commonly used to have different connector panes depending on the data type on the message terminal, so I created a set of polymorphic vis to do the necessary type conversions. I also wanted child classes to be able to override the behavior that is invoked when EnqueueMessage is called, so the polymorphic vis delegate that responsibility to a protected _EnqueueMessage vi. Child classes, like PriorityQueue, override the protected method to implement the custom functionality being added by the class. [Edited to fix minor grammatical error] -
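
For readers without LapDog installed, the shape of that arrangement can be approximated in text form. The Python below is a loose sketch, not LapDog's actual API: `enqueue_text` and `enqueue_number` stand in for the polymorphic vi instances, `_enqueue_message` stands in for the protected _EnqueueMessage vi, and the "Exit goes to the front" rule in the child class is an invented policy used only to show an override taking effect.

```python
class MessageQueue:
    """Hypothetical parent queue; names are illustrative, not LapDog's."""

    def __init__(self):
        self._items = []

    # Public entry points with different "connector panes". Each one only
    # converts its input, then delegates to the single overridable method.
    def enqueue_text(self, name, text):
        self._enqueue_message((name, str(text)))

    def enqueue_number(self, name, number):
        self._enqueue_message((name, float(number)))

    def _enqueue_message(self, message):
        # Protected hook: the one place child classes change behavior.
        self._items.append(message)

    def dequeue(self):
        return self._items.pop(0)


class PriorityQueue(MessageQueue):
    """Child class overriding only the protected hook."""

    def _enqueue_message(self, message):
        # Invented policy for the sketch: "Exit" messages jump the line.
        if message[0] == "Exit":
            self._items.insert(0, message)
        else:
            super()._enqueue_message(message)
```

The type-specific wrappers are never overridden, so dispatch still happens on a single input (the queue object), while users get a different signature per data type.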

Predictably there was lots of pranking on otherwise serious corporate websites yesterday, but Netflix had a take on it that caught me off guard. If you went to their website and scrolled through the movie categories they presented, you might have come across some odd ones. Here are two I saw: (My wife had to explain to me that "nephrotic" refers to a "nephron," which is the basic functional unit of the kidney.)

-

*sigh of relief* With your original post not getting any questions I thought I wasn't understanding something that was perfectly clear to everyone else. There's nothing quite as confidence-shattering as feeling like the dumbest person in the room.

-

Clarification question: The place to put shared QD plugins is [Labview Data]VI Analyzer Tests, not [Labview Data]Quick Drop Plugins, correct? And since LV apparently does not create that folder by default we should create it ourselves?

-

I'm glad this post got bumped. I missed it the first time around, but I've done my duty and kudoed Darin's idea. I agree with that, with the minor clarification of that being the progression of public perception of the idea exchange. I'm not sure people inside NI ever thought it was going to revolutionize Labview. I suspect they always considered it to be exactly what it is: one of many tools for obtaining feedback from the user community. Same with me. The ideas that generate the most kudos tend to be on the fluffy side. (i.e. Changing the boolean constant.) Don't get me wrong, I hugely prefer the new constant over the old one, but it doesn't fundamentally change what we can do with Labview. I'd prefer to see more meat and less sugar in the top kudo list. Only one? I consider it a victory when one of my ideas reaches double digits. Lovely, thank you. I prefer them to be behind everything else but rarely take the time to do it manually. I did make minor changes that turn it into a Project menu item if you drop it in the <Labview>Project folder. (I still get that annoying front panel pop-up-and-immediately-disappear when I use it, but whatever... it works.) Did you collect the data manually or do you have code to scrape it off the web? Move Error Wires To Back.zip

-

Regex Challenge: How to exclude variable-width parts of a match?

Daklu replied to JackDunaway's topic in LabVIEW General

If you have to do regular expressions very often and want to learn them, RegexBuddy is your friend. -

Actor-Queue Relationship in the Actor Framework

Daklu replied to mje's topic in Object-Oriented Programming

I must have missed out on (or forgotten) that conversation. It seems obvious to me the AF's messaging paradigm *is* the command pattern. Important point of clarification: When I talk about command messages vs request messages, I am specifically not referring to command pattern messages. Command pattern messaging is one way of implementing the decision making process for invoking the message handling code. It is contrasted with a message ID system. Command pattern messaging uses dynamic dispatching; message ID systems use a case structure that switches out on the ID of the message received. Command messages vs request messages is all about deciding who is responsible for making sure the message receiver doesn't do something it is not supposed to do. If the receiver depends on the sender to only send the subset of messages that are appropriate for its state, the messages are in effect commands. (That's what I mean by "sender-side filtering.") The sender is "commanding" the receiver to execute the message regardless of whether or not the receiver is in a state where it should execute the message. When the receiver implements its own state checks prior to executing a message it receives ("receiver-side filtering") the messages are in effect requests. The receiver always has the option to refuse the message. Correct, the AF doesn't force the developer towards either end of the spectrum (and I can't think of any way a framework could enforce that if it wanted to.) My assertion is that well-designed actors, regardless of the framework used, always use requests. And it doesn't only apply to actors; it applies to any concurrent programming model. It's the test-and-act problem on a different scale. With concurrent programming, as long as test value and set value are unique operations separated by a finite amount of time, there's no way to guarantee a concurrent process hasn't changed the value after it was tested and before it is set.* It is a race condition. 
In those situations, instead of testing the value first to see if the action can be taken, programmers typically attempt to do the action and see if it generated an error. The principle is the same for actors. Assuming the actor has more than one behavioral state (i.e. it responds to the same message differently depending on its internal state) any message filtering *has* to be done in the receiving actor's logical thread. If message filtering is only done by the sending actor then you have a test-and-act race condition. (*You can't make that guarantee in the general case. Sometimes you can verify the guarantee via code inspection if your application tightly controls who is able to send messages to the actor.) Building a robust plugin framework that prevents rogue plugins from taking down the app or other plugins doesn't strike me as a particularly trivial task, regardless of the framework/messaging system used. The system has to be designed so each plugin runs in its own logical thread; otherwise a rogue plugin can enter an infinite loop and starve everything else. That means spinning up an independent actor for each one. Each plugin also needs a unique queue to send messages to the app. That makes it so the rogue plugin cannot, a) kill the queue and prevent other plugins from sending messages to the app, and b) flood the queue so the other plugin's messages are drowned out. If robustness against rogue plugins is a requirement, you're going to need to implement another abstraction layer. I don't think the AF has any disadvantage here. -
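
Receiver-side filtering is easier to see in code than in prose. The sketch below is a Python stand-in, assuming a made-up `MotorActor` with two behavioral states and plain string message IDs (so it is the message ID style, not the command pattern the AF uses). It is not Actor Framework or LapDog code.

```python
import queue
import threading


class MotorActor:
    """Hypothetical actor with two behavioral states: 'idle' and 'running'."""

    def __init__(self):
        self.inbox = queue.Queue()
        self.state = "idle"
        self.log = []
        self._thread = threading.Thread(target=self._run)

    def start(self):
        self._thread.start()

    def join(self):
        self._thread.join()

    def _run(self):
        # The actor's own logical thread: the only place state is touched.
        while True:
            msg = self.inbox.get()
            if msg == "Exit":
                break
            self._handle(msg)

    def _handle(self, msg):
        # Test and act happen back to back in the receiver's thread, so no
        # sender can change the state between the check and the action.
        if msg == "Start" and self.state == "idle":
            self.state = "running"
            self.log.append("started")
        elif msg == "Stop" and self.state == "running":
            self.state = "idle"
            self.log.append("stopped")
        else:
            # The message was a request, and the receiver refuses it.
            self.log.append(f"refused {msg} while {self.state}")
```

Senders never inspect `state` before posting to `inbox`; they just send and let the actor decide. If a sender did check the state first, another sender's message could be processed between that check and the send, which is the test-and-act race described above.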

"With over 900 years of motorbike building prowess behind them Triumph have created two wheeled motorbike classics." "Argument juice is added from the argument lobe of the female brain allowing the finished bike to change direction in an instant."

-

Cross-posted on NI.

-

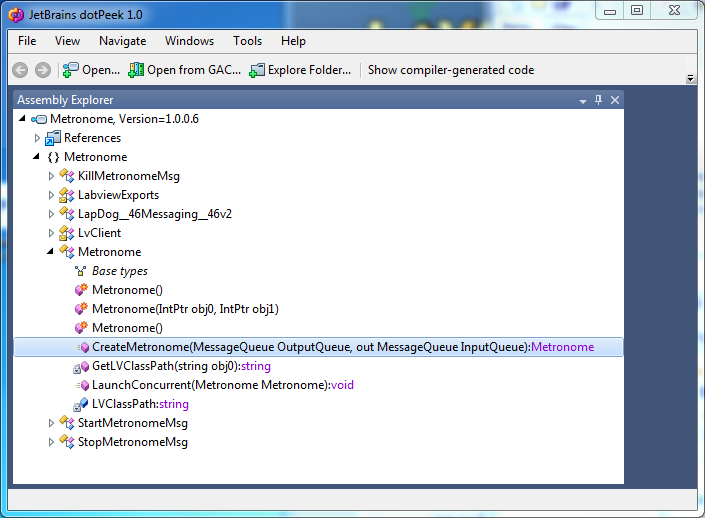

I have been doing that (see attached zip); I was just hoping somebody else had been down this road and could provide some hints and tips. For instance, I discovered the .Net methods generated by Labview's interop assembly builder are all static. I also discovered it mangles my namespaces in ways I don't particularly like. (Replacing invalid characters with _charcode.) I ended up adding an interop layer in my LV code for the sole purpose of simplifying the assembly's API. Another tip I learned: If you're using LVOOP don't use the new keyword to construct objects exposed by the interop assembly. You get a search dialog asking for the location of the .lvclass file, which obviously an end user wouldn't have. Even if I did link to the .lvclass file stuff crashed later on so I'm thinking it's better to avoid doing it completely. My totally awesome and uber-cool sister (who wrote documentation for .Net and WPF for years and might be reading this thread) clued me in. Instead of...
Metronome met = new Metronome(); <--- Triggers a LV find dialog
...the code should read.
Metronome met;
In her words: ...when you use an out parameter, the C# specification says that the caller doesn't have to initialize the variable before making the call, but the method guarantees that the variable is assigned when it returns. Hopefully LV assemblies honor those rules. -------------------------------------- Unfortunately I'm still getting errors with any calls into the assembly that have both in and out parameters. For example, this code works fine:
MessageQueue myQ;
MessageQueue.Init(out myQ);
...but this code does not:
MessageQueue metQ;
MessageQueue myQ;
MessageQueue.Init(out myQ);
Metronome met;
Metronome.Init(myQ, out met, out metQ); <--- Raises error
The error is generic and unhelpful. "MyProj.vshost.exe has encountered a problem and needs to close." I have no idea why it is occurring or how to fix it. Any insight is appreciated. 
(The attached zip file includes Labview source, built assembly, and a C# project that uses the assembly. It should work out of the box as long as you have the LV2012 runtime installed.) .Net Interop.zip

-

Actor-Queue Relationship in the Actor Framework

Daklu replied to mje's topic in Object-Oriented Programming

I spent a couple hours this morning trying to implement this and it looks like AQ effectively locked out our ability to implement message filtering that way. Apparently there is a document somewhere explaining how to implement message filters in AF, but I didn't find it in my quick search through the AF community. -

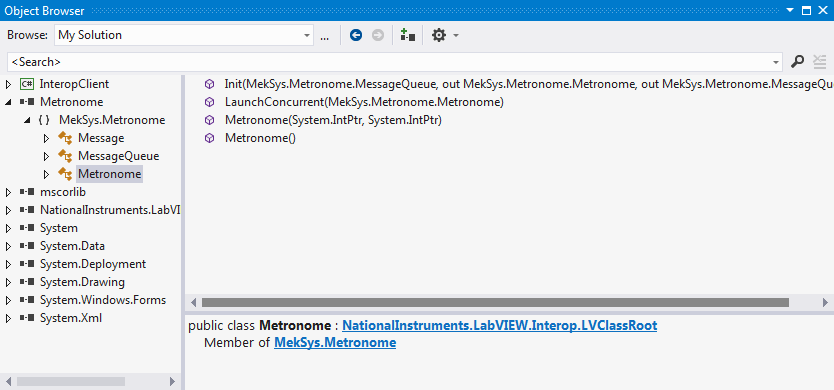

I created a simple Metronome actor, built a .Net Interop Assembly, and used dotPeek to poke around in it. I'm a little unclear on exactly how one would go about using these objects in .Net code. For example, Labview doesn't have constructors, so we often manually create our own creators and call them directly. (i.e. Metronome.CreateMetronome) However, the .Net Metronome object exposed by the assembly *does* have a constructor... three of them in fact. So, is the C# code... Metronome m = new Metronome(); the .Net equivalent of dropping a class cube on a block diagram? And I assume I'd have to follow it up with... m = CreateMetronome(OutputQ, InputQ); If so and there's no way to automatically bind the .Net constructor to the CreateMetronome method, then my naming convention sucks and I'll have to redo it a bit.

-

(Hmm... wrong forum. Would someone be so kind as to move this to the Calling External Code forum?)

-

Actor-Queue Relationship in the Actor Framework

Daklu replied to mje's topic in Object-Oriented Programming

I don't understand what you're saying. Can you explain it a little more? Do you mean the dichotomy between a command and a request? I also don't get what you mean by Actor-Message and Actor-Queue relationships. Being able to swap out actors can be a good thing. It allows you to use actors to implement a state diagram, where each actor represents a different state. I've slightly modified the project and added a UI element to change between one of four different actors. (See Test2.vi) Personally I don't consider swapping out Actor classes to be equivalent to killing off an actor. The AF strongly ties Actor classes to the abstract concept of actors, but in my mind the essence of all actors is the message handling loop--Actor Core in the AF. Actor Core isn't ever stopped, so you're not really chopping off the actor's head. If malicious code is a concern you might be able to override Actor Core and test the messages when they arrive, discarding any messages that you did not put on your white list. Or just don't ever expose the Enqueuer and wrap all message sends in actor methods. (i.e. OriginalActor.SendGetPathMsg) I've pretty much decided that if someone has enough access to the source code or runtime environment where they're able to inject malicious code, I've got far bigger problems that hardening the actor framework of choice won't solve. Actor Switch.zip -

I know how to call and use .Net from within Labview, and I have on a few occasions compiled LV code into dlls and called them from other languages. What I'd like to do is compile Labview actor-oriented code (not necessarily based on the Actor Framework) into an interop assembly and be able to exchange messages with it from .Net languages. The idea is to have the Labview code and .Net code running concurrently. The problem is I don't have much experience building actor-oriented apps in .Net, or trying to use a message-based interface between LV and .Net. The simplest way to do it would be to expose the actor, queue, and messages as objects through the assembly. I'd let the .Net code interact with the actor in much the same way parallel loops interact with each other in Labview. This puts some burden on the .Net developer to write their message handler so it runs concurrently with the rest of their code. A more complex alternative is to write the Labview code so the .Net code registers callbacks with it. It would be similar to how Labview developers can register for events in .Net components, except the other way around. To be honest I have no idea how this would be implemented, or if it is even possible. Would I have to create a .Net class named "Labview Event Handler" and call that from my LV code, while having the .Net developer subclass it for each callback they want to register? Have any of you tried these kinds of things? Can you share what worked well and what kind of technical obstacles you ran into? Any input or suggestions are appreciated.