-

Posts

5,001 -

Joined

-

Days Won

311

Everything posted by ShaunR

-

Error 4 (EOF) still really annoys me. I still maintain it should be a warning and not an error. Every time I have to use my filter error to prevent passing it through, I curse and wish a plague on NI. lol

-

There is an opk package in one of the feeds if it's not there already. Chances are, if it has SSH, it'll have compatible binaries. NIlibeay is just NI's compilation of OpenSSL.

-

Nice work. Glad you got there.

-

The destroy should be an Unsigned Pointer Sized integer (Passed by value) rather than adapt to type. You can also set the nodes to "Run in any thread". Rolf got there 10 secs before me. lol Untitled 1.vi

-

I don't think you can see the wood for the trees. I am saying I can't supply the actual code I used because it's commercial but I can help you "discover" how to do it which isn't bound by commercial restraints.

-

That is the old way. The EVP_Digest interface abstracts away the different hashes into a unified set of functions. All that is needed is a while loop where they have two update functions in the example, about 2/3rds of the way down in that link.

-

As I explained, the code I used is part of ECL, so commercial IP prevents me from sharing it. You should know or at least appreciate this! Correct. New, Init, Update, Final and Free (the EVP interface). You will also need digestbyname. Once you have this you will also be able to do *all* the hashes, not just MD5.
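For anyone following along, the call sequence can be sketched in Python. This is not the ECL code and it does not call NIlibeay32.dll; it is a minimal sketch that assumes Python's standard `hashlib` is an acceptable stand-in to show the shape of the loop. The function name `digest_stream` and the chunk size are made up for illustration.

```python
import hashlib
import io


def digest_stream(stream, algorithm="md5", chunk_size=64 * 1024):
    """Hash a binary stream in chunks and return the hex digest.

    Hypothetical helper for illustration only.
    """
    # Select the hash by name and create a context
    # (roughly digestbyname + New + Init in the EVP interface).
    ctx = hashlib.new(algorithm)

    # The while loop around the update call.
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        ctx.update(chunk)

    # Roughly Final; Python releases the context itself, so there is
    # no explicit Free step here.
    return ctx.hexdigest()


if __name__ == "__main__":
    data = b"hello world" * 1000
    # Same function, different hash: only the name changes.
    print(digest_stream(io.BytesIO(data), "md5"))
    print(digest_stream(io.BytesIO(data), "sha256"))
```

Because the hash is selected by name, switching from MD5 to anything else the library supports is a one-string change, which is the point of the unified interface.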

-

I have a different solution... which is demonstrably an improvement. You can lead a horse to water but, this time, I guess I underestimated its thirstiness.

-

Sorry. No can do. It's part of ECL but I've given you all the info to replicate it and proved it might be worth your while.

-

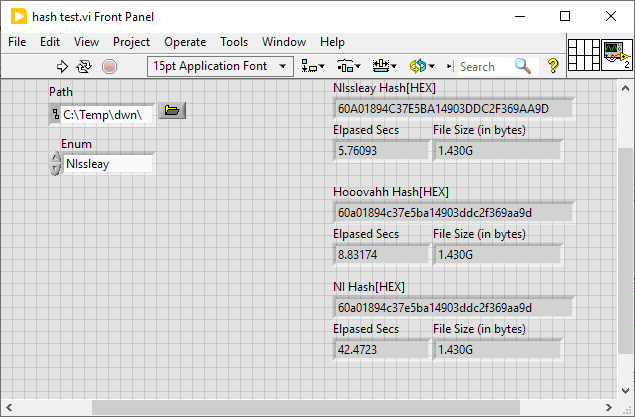

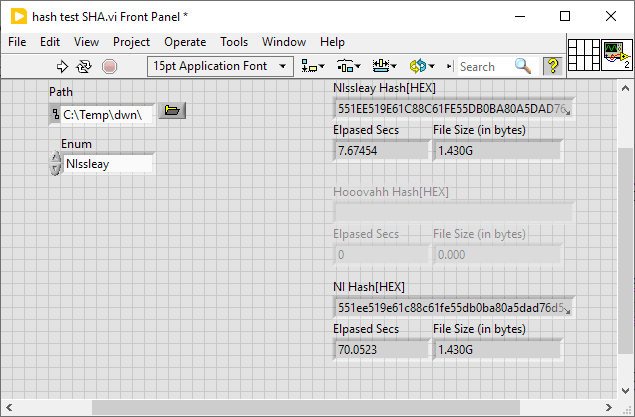

Yuck. Cmd line. Try using the EVP_Digest interface of the NIlibeay32.dll. You can even have progress events if you want to be fancy. I don't know why NI didn't use it.

-

That's BS. MD5 is still the 2nd fastest checksum (SHA-1 being the fastest) and a checksum has little to do with security. They are obviously confused between security and integrity.

-

Hmmmm. They have removed MD5Checksum from the palette in recent LabVIEW versions?

-

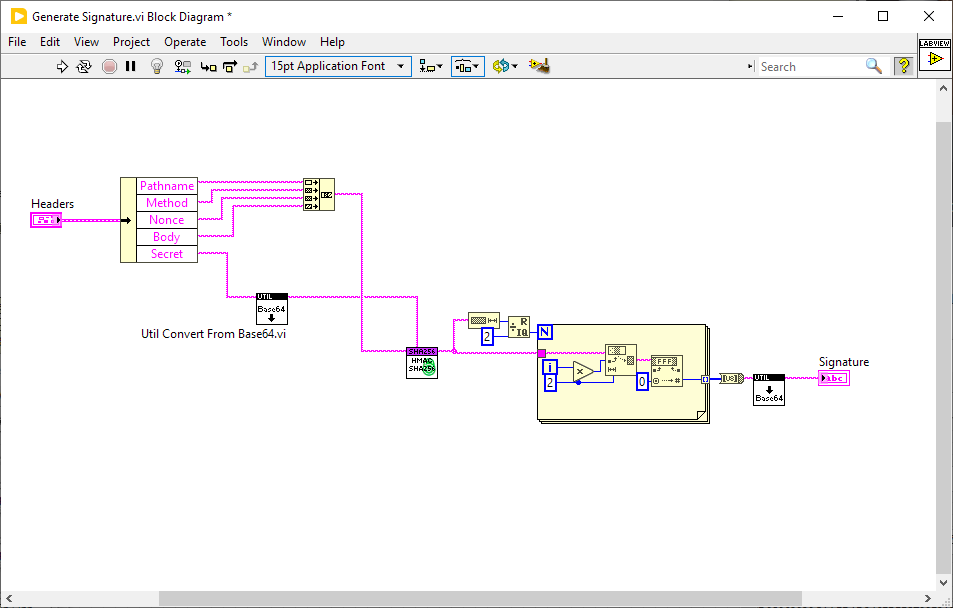

Well. The output of the python hmac is a byte array and the output of the LV hmac is a hex string, so I expect you have to convert the LV hmac output into a byte array before passing it to the encode, like this: Generate Signature.vi. By the way, none of your sources (message or key) are base64 encoded, so you may run into errors when trying to b64decode normal text.
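The difference is easy to see on the Python side. The snippet below is only a sketch: the key, the message and the choice of SHA-256 are assumptions, since the actual values are not given here, and `signature_from_hex` is a made-up helper name.

```python
import base64
import hashlib
import hmac

# Hypothetical inputs; the real key and message are not shown in the thread.
KEY = b"example-key"
MESSAGE = b"example-message"


def signature_from_hex(hex_text):
    """Turn a hex-string HMAC (as a hex-output library returns it) into base64.

    The hex text is converted back to raw bytes first, then encoded.
    """
    return base64.b64encode(bytes.fromhex(hex_text))


mac = hmac.new(KEY, MESSAGE, hashlib.sha256)
raw_bytes = mac.digest()      # what Python's hmac hands to b64encode
hex_text = mac.hexdigest()    # what a hex-string HMAC output looks like

# Encoding the hex *text* gives a different (and longer) result...
wrong = base64.b64encode(hex_text.encode("ascii"))
# ...while converting hex -> bytes first matches the Python signature.
right = signature_from_hex(hex_text)

if __name__ == "__main__":
    print(right == base64.b64encode(raw_bytes))
    print(wrong == right)
```

The first comparison holds and the second does not, which is the mismatch you see when the hex string is encoded directly.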

-

Well. If your key is greater than the block size (64 bytes) then the library you are using will hash it first to bring it down to within the block size. If you are passing the hex-text representation of the key, it will probably be longer than that, so maybe it's being hashed first.
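A quick way to convince yourself of the key handling is to do the hashing by hand and compare. This is a sketch using Python's standard `hmac` with SHA-256 (an assumption; the thread does not say which hash is in use), and the keys are made up.

```python
import hashlib
import hmac

MESSAGE = b"example-message"  # hypothetical message


def hmac_hex(key, message=MESSAGE):
    """HMAC-SHA256 of message under key, as a hex string."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


# SHA-256 works on 64-byte blocks.
block_size = hashlib.sha256().block_size

# A key longer than the block size gives the same result as using its
# hash as the key, because HMAC hashes over-long keys first.
long_key = b"k" * 100
same_as_prehashed = hmac_hex(long_key) == hmac_hex(hashlib.sha256(long_key).digest())

# A 40-byte key fits in one block, but its hex-text form is 80 characters,
# which does not. Passing the hex text is therefore a different key and
# one that gets hashed before use.
raw_key = bytes(range(40))
hex_key = raw_key.hex().encode("ascii")

if __name__ == "__main__":
    print(block_size, len(raw_key), len(hex_key))
    print(same_as_prehashed)
    print(hmac_hex(raw_key) == hmac_hex(hex_key))
```

So if one side is given the raw key bytes and the other the hex text of the same key, the two HMACs will not agree.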

-

There are some primitives but they are not easy to get to, so here they are. UTF8 LV80.vi

-

At a guess I expect it's because you are decoding with UTF-8 in your python. I checked the HMAC library you are using and it produces the correct hashes for test vectors.

-

Only the Widechar functions support it. The functions are listed at the end of the link I posted.

-

To use long pathnames you need three things: the registry setting that enables long paths; the filename functions ending in W (not A), e.g. CreateFileW instead of CreateFileA; and LongPath in the executable manifest. The native LabVIEW functions use the functions ending in A under the hood, which is why I wrote drop-in replacements for the native file functions that use the functions ending in W. When you create an executable, you have the option to define a manifest file so the replacements work in the built executable. So as long as the registry or policy enables long paths, the built executables work fine for paths >260 characters.

-

Of course there is something they could do. I expect the use of the ANSI versions of the file operations is making them resistant. I've my own set of equivalents of the native file operations that do it just fine - it's the Path Control that is the problem.

-

I'd say it's a bug. If you uncheck the "Lock FP" for the "Do" case it works as expected. Clearly the FP locking is being triggered when it shouldn't. Changing the compiler optimisation has no effect so maybe it's something else. Rather nasty IMO. Congrats for isolating it. Also does it in 2009 so I suspect it's been around for a while.

-

I can't account for your experience but for this library it is fine.

-

Windows handles are always 32 bit. It should be fine in 64 bit LabVIEW unless there are pointers in there - but didn't see any.

-

A.K.A. Not gonna fix so will memory-hole them. Was just looking at the SFTP from the beta link you provided. VIs invoking the command line? Really? I've had SFTP for ECL for a while now but never put it in because no-one asked for it. I've been procrastinating about putting in SFTP, SCP, Websockets and MQTT for some time but decided I wanted a better HTTP client first (more configurable than the NI one). I've so many new features I could implement and so little feedback about what people would like to see that I've only been updating with things I would find useful which, at this point, is very little beyond what it already supplies.

-

They must be the newbies. Here are the apprentices.