-

Posts

4,997 -

Joined

-

Days Won

311

Everything posted by ShaunR

-

Looking for option to speed up my usage of SQLite

ShaunR replied to Thang Nguyen's topic in Database and File IO

Save the images as files and index them in the database, together with any metadata you need. As a rule of thumb, you only save data that is searchable in databases. Images are not searchable data; only their names are. Doing it this way also lets you process multiple images in parallel and just update the metadata in the DB as you process them. -
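A minimal sketch of that layout, assuming Python's built-in sqlite3 module rather than the LabVIEW toolkit from the thread. The table name, column names and the `process_image` placeholder are hypothetical; only the idea (files on disk, paths and metadata in the database, parallel processing with the DB updated afterwards) comes from the post.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def create_index(conn):
    # Only searchable data lives in the database: the path and its metadata.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS images ("
        "id INTEGER PRIMARY KEY, path TEXT UNIQUE NOT NULL, "
        "size_bytes INTEGER, processed INTEGER NOT NULL DEFAULT 0)"
    )


def add_image(conn, path):
    # Register an image file that already exists on disk.
    conn.execute(
        "INSERT OR IGNORE INTO images (path, size_bytes) VALUES (?, ?)",
        (str(path), Path(path).stat().st_size),
    )


def process_image(path):
    # Placeholder for real image processing: read the file, return its size.
    return path, len(Path(path).read_bytes())


def process_all(conn, workers=4):
    # Workers only touch the files; the database is updated from one thread.
    rows = conn.execute("SELECT path FROM images WHERE processed = 0")
    paths = [row[0] for row in rows]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(process_image, paths))
    conn.executemany(
        "UPDATE images SET processed = 1, size_bytes = ? WHERE path = ?",
        [(size, path) for path, size in results],
    )
    conn.commit()
    return len(results)
```

Keeping the workers away from the connection avoids contention on the database file; the only writes are one short batch of metadata updates at the end.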

I think you've either wandered into the wrong forum or are advertising motherboards. Which is it?

-

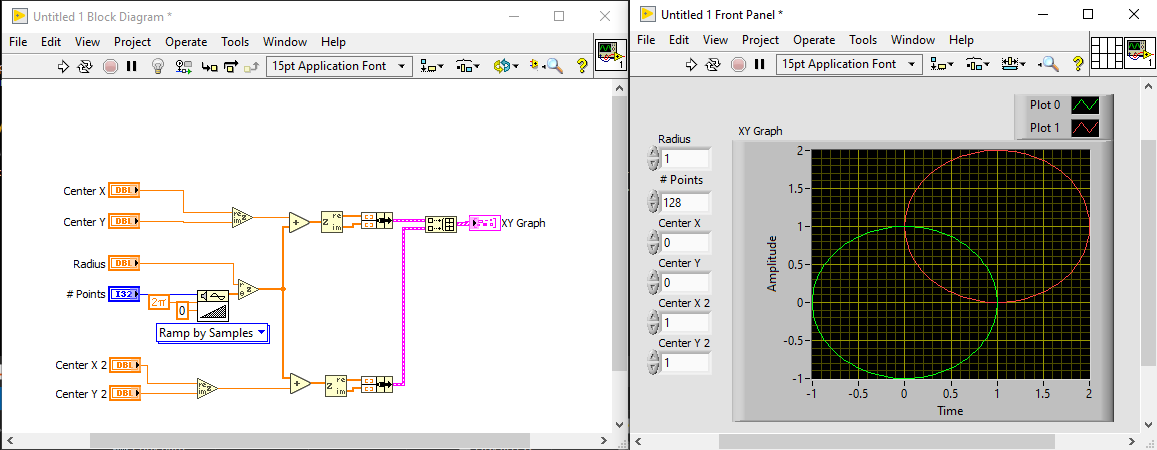

Nice. IMO demodulation and using the sound VI's was harder than writing the interface VI's

-

Conditionnal Disable structure dont display same choice

ShaunR replied to Bobillier's topic in LabVIEW General

OS and CPU are defined within a project. You've created a new VI outside of a project. -

Do something you are actually interested in and will be able to use beyond the mere task of learning to program. If you have a hobby, then program something to solve an annoying aspect of the hobby. Perhaps it's that you have to keep printing out user manuals. Perhaps you have to keep calculating something over and over. Identify an issue and solve it programmatically in a way that will actually be useful to you.

If you are a gamer, then maybe something to do with how the game operates. For example, maybe damage output is dependent on a number of aspects such as weapons or various statistics. Write something that enables you to ascertain what combinations are best for your character or simulates gear changes for different scenarios. Maybe you are into Astrology or Numerology, so you can write something that calculates the positions of constellations or the number significance of names.

The point of programming is to solve problems. Find a problem that is close to what you know and love and the programming will come along with a solution you can use to make your life a little bit easier. Your current issue is that your problem scope is infinite. Narrow it down to something close to you. Maybe write a program to help ;)

-

I have a whole directory in my toolkit called "Windows Specific" which is for VI's that call the Win32API. But unarguably THE most useful for me is the Win Utils I originally wrote in LabVIEW 5 and still use today. Windows API Utils 8.6.zip

-

¯\_(ツ)_/¯

-

I don't have access to LV2020 right now for sets, but I may have a solution for the arrays which should be much faster and accommodate any number of dimensions.

-

Maybe make the DLL import wizard actually useful. Not sure why they are doing this though. .NET was supposed to be the next generation of Win32 API's. I guess they got fed up of migrating the functionality. I used to have a program for Windows WMI queries that did something similar. It wasn't one that I wrote but was a tool intended for Borland languages that meant you could query the WMI database live and create the queries as C++ and Delphi prototype snippets. This looks similar for Win32 API's.

-

NI abandons future LabVIEW NXG development

ShaunR replied to Michael Aivaliotis's topic in Announcements

Are you suggesting we should grab a sword for the next visit to the sales and marketing dept.? I can see some merit in the idea -

NI abandons future LabVIEW NXG development

ShaunR replied to Michael Aivaliotis's topic in Announcements

Nah. That's just one of those vague marketing place-holders like "synergy" or "convergent" that is deliberately obtuse so that the customer interprets it in their own context. -

It depends how it is compiled. There seems to be a function to determine whether the binary is thread safe, yielding 1 if it is and zero if it isn't. int PQisthreadsafe(); Source
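The check mentioned above can be tried from a scripting language before committing to multi-threaded use. This is a rough sketch, assuming libpq is installed as a shared library that `ctypes.util.find_library("pq")` can locate (that lookup is an assumption and commonly fails on Windows); the helper name `libpq_is_threadsafe` is made up for illustration.

```python
import ctypes
import ctypes.util


def libpq_is_threadsafe():
    """Return True/False from PQisthreadsafe(), or None if libpq is unavailable."""
    name = ctypes.util.find_library("pq")
    if name is None:
        return None  # libpq not found on this machine
    try:
        lib = ctypes.CDLL(name)
        func = lib.PQisthreadsafe
    except (OSError, AttributeError):
        return None  # library failed to load or lacks the symbol
    func.restype = ctypes.c_int
    func.argtypes = []
    # 1 means the binary was built thread safe, 0 means it was not.
    return bool(func())
```

A `None` result only says the library could not be queried from this process; it says nothing about the binary that LabVIEW itself loads.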

-

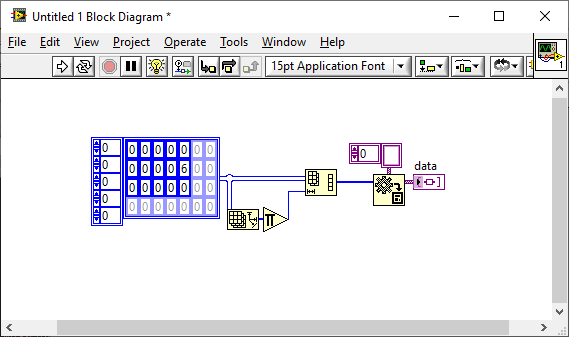

How does VIPM set the VI Server >> TCP/IP checkbox?

ShaunR replied to prettypwnie's topic in LabVIEW General

I don't know how VIPM does it but here are the things I do know.

A fresh install has no TCP settings in the LabVIEW.ini. If you change the VI Server settings then the following settings are added to the LabVIEW.ini depending on what you change from the defaults. The prominent ones are:

server.tcp.enabled=
server.tcp.port=
server.tcp.access=

There are also property nodes that relate to those settings. Access via these nodes (externally) requires that the VI server is available (i.e. enabled). You can turn it off, but not on.

In 2020? a new variable was added to the ini, which is:

server.tcp.acl=

I don't know much about that one but it will probably bite me in the backside at some point since I know what an ACL is -
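To see which of those keys a given LabVIEW.ini actually contains, a short script can read them out. This is only a sketch: it assumes the keys sit in a `[LabVIEW]` section, and the function name is hypothetical. It reads the file contents and never writes to them.

```python
import configparser

# The key names are the ones listed in the post above.
SERVER_KEYS = (
    "server.tcp.enabled",
    "server.tcp.port",
    "server.tcp.access",
    "server.tcp.acl",
)


def read_vi_server_settings(ini_text, section="LabVIEW"):
    """Return the VI Server TCP keys present in the given ini text."""
    # interpolation=None keeps '%' characters literal; strict=False
    # tolerates duplicate keys instead of raising.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)
    if not parser.has_section(section):
        return {}
    return {
        key: parser.get(section, key)
        for key in SERVER_KEYS
        if parser.has_option(section, key)
    }


# Illustrative values only, not taken from a real installation.
sample = "[LabVIEW]\nserver.tcp.enabled=True\nserver.tcp.port=3363\n"
settings = read_vi_server_settings(sample)
```

An empty dictionary corresponds to the fresh-install case described above, where none of the keys have been written yet.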

I colour my VI's occasionally for a few reasons:

To tell the difference between 32 and 64 bit (both for LabVIEW version and when using conditional disables for 32/64 and OS's, especially with CLFN's).
To mark sections of VI's that have problems or need revisiting.
To mark VI's that are incomplete that I must come back to.

-

Updating to new version of LabVIEW

ShaunR replied to Bjarne Joergensen's topic in Development Environment (IDE)

Bearing in mind Antoine's comment, with which I wholeheartedly agree, I would suggest upgrading to 2020 now and planning for deliverables with SP1 or later as and when they arrive. You can have multiple versions installed side-by-side on a machine and you want time to find any upgrade issues with your current codebase and gradually migrate, with fallback to your current version if things go wrong. Reasoning for 2020 is that it supports HTTPS - which none of the previous versions do out-of-the-box and is essential nowadays. 2020 is, by now, a known entity in terms of issues and work-arounds whereas waiting for 2021 you will be at the cutting edge - where production systems never want to be unless it facilitates a show-stopping feature requirement. -

/usr/bin/ldd or /usr/sbin/ldd depending on the distro. But the fact that it isn't in your environment path may be a clue to the problem.
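A small sketch of that check, assuming a Linux machine: look for ldd on the PATH first, fall back to the two locations named above, then pick out dependencies that ldd reports as "not found". The function names are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path

# Fallback locations taken from the post above.
FALLBACK_LDD_PATHS = ("/usr/bin/ldd", "/usr/sbin/ldd")


def find_ldd():
    """Return a path to ldd, or None if it cannot be located."""
    found = shutil.which("ldd")
    if found:
        return found
    for candidate in FALLBACK_LDD_PATHS:
        if Path(candidate).is_file():
            return candidate
    return None


def missing_dependencies(ldd_output):
    """Extract library names that ldd marked as '=> not found'."""
    missing = []
    for line in ldd_output.splitlines():
        if "=> not found" in line:
            missing.append(line.split("=>")[0].strip())
    return missing


def check_binary(path):
    """Run ldd on a shared library or executable and list unresolved names."""
    ldd = find_ldd()
    if ldd is None:
        raise FileNotFoundError("ldd not found on PATH or in fallback paths")
    result = subprocess.run(
        [ldd, str(path)], capture_output=True, text=True, check=False
    )
    return missing_dependencies(result.stdout)
```

If `shutil.which` fails but a fallback path exists, that matches the situation in the post: the tool is installed but the environment path is not what you expect.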

-

If you think it's a linking problem then ldd will give you the dependencies.

-

That's not very American. Where's the guns?

-

The DLL I used didn't have this feature exposed and I only got as far as identifying the board info before I lost access to the HackRF that I was using. However, from the hackrf library, it seems you call hackrf_init_sweep then hackrf_start_rx_sweep. I guess the problem you are having is that the results are returned in a callback, which we cannot do in LabVIEW. So there are two options: implement your own sweep function in LabVIEW, or write a DLL wrapper in <insert favourite language here> that can create a callback and return the data in a form that LabVIEW can deal with. I have a project in another language that unifies HackRF and the dongle SDR's into a standard interface, so it creates the callback for the HackRF, but I haven't revisited it for a few years and its status at the time was that it covered similar functionality between the devices, so no sweep function.
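The wrapper idea boils down to turning "the driver calls you" into "you poll for data". The sketch below shows only that pattern in Python; it does not call libhackrf, the class and method names are hypothetical, and a real wrapper would be a DLL exporting a plain polling function that a Call Library Function Node can call.

```python
import queue


class CallbackToQueue:
    """Buffers blocks delivered by a callback so a caller can poll for them."""

    def __init__(self, maxsize=64):
        self._blocks = queue.Queue(maxsize=maxsize)
        self.dropped = 0  # blocks discarded because the reader fell behind

    def on_data(self, block):
        # Called from the driver's thread: copy the data and return quickly.
        try:
            self._blocks.put_nowait(bytes(block))
        except queue.Full:
            self.dropped += 1

    def read(self, timeout=0.0):
        # Called by the polling side; returns None when nothing is waiting.
        try:
            if timeout > 0:
                return self._blocks.get(timeout=timeout)
            return self._blocks.get_nowait()
        except queue.Empty:
            return None
```

The bounded queue is a deliberate choice: if the polling side stalls, blocks are counted as dropped rather than letting memory grow without limit.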

-

This is the problem that WinSxS solves, as you probably know. The various Linux packaging systems aren't much help here either. There is, of course, a binary version control under Linux that utilises symlinks, although usually the latest version is pointed to. That is where we end up trying to find if the version is on the machine at all and then creating special links to force an application to use a particular binary version, with various symlink switches to define the depth of linking. It's hit-and-miss at best whether it works and you can end up with it seeming to work on the dev machine but not on the customer's as the symlink tree of dependencies fails. That resolution needs to be handled much better before I re-instate support for the ECL under Linux again. And like Rolf says, that has to be done again in 6 (I argue weeks, not months) for the release of a new version in order for the application to continue working when there have been zero changes to the application code.

-

It never will be until they resolve their distribution issues, which they simply do not even acknowledge. Even Linus Torvalds refuses to use other distros because of that. What Windows did was to move common user space features into the kernel. The Linux community refuses to do that for ideological reasons. The net result is that application developers can't rely on many standard features out-of-the-box, from distro-to-distro, therefore fragmenting application developers across multiple distros and effectively tying them to specific distros with certain addons. Those addons also have to be installed by the end-user, whose level of expertise is assumed to be very high.

-

NI abandons future LabVIEW NXG development

ShaunR replied to Michael Aivaliotis's topic in Announcements

There's your problem, right there. AF. If you are going to use this type of architecture, I suggest using DrJPowels framework instead. The key to multiple simultaneous actor debugging is hooking the messages between them; not necessarily the actors themselves. -

Which is the best platform to teach kids programming?

ShaunR replied to annetrose's topic in LabVIEW Community Edition

An often overlooked platform for kids is Squeak. (Adults could learn a thing or two as well.)