
Everything posted by ShaunR

-

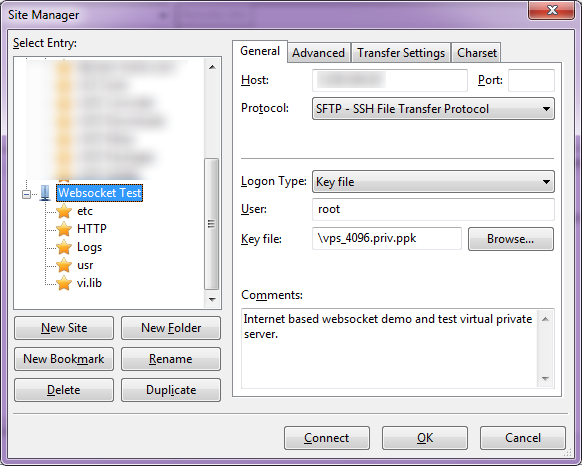

Indeed. However, I would suggest using a key file. If you already have SSH access (which I presume you do), then you just point FileZilla at the private key file. You can then disable username/password authentication on the server completely. This is far more secure, although it gets difficult to manage with many (hundreds of) users. However, having many users requiring SSH access is not a usual use case in LabVIEW.

-

I haven't played with a myRIO or any other Linux RT target (yet!), so I might be off track. But if it has SSH, then it probably has SFTP.

Edit: Some time later, after searching to see if there was a VMware image of NI Linux Real-Time somewhere (silly fool for even thinking this might be a thing), I came across the Linux RT white paper. So there ya go.

-------------------

Incidentally, the document also seems to state that FTP was removed (not included?) in Linux RT in LabVIEW 2013 (or maybe that was just when they added WebDAV). [Except the SFTP, of course!]

-

I think that has been superseded by sc.exe (command line) and Srvinstw.exe (GUI version), which means you don't have to hack the registry any more.

-

I can find no statement to that effect (I don't have a 2014 RIO, only 2013). This was published on Oct 16, 2015, so hopefully it is just an oversight, as it references a 2011 document.

-

There is an unsecured FTP server on RT devices that is enabled by default. Do people turn this off when deploying as a habit or procedure?

-

I was just checking to see if it was still the main repository. Can we put a link on the CR page?

-

Is this no longer on Bitbucket? Where is the repository now?

-

Point and click FTW. Typing is so 1980s. Seeing as you went for a Rube Goldberg footswitch solution, maybe it would be fun to try a head-tracking one? There are some home-made ones around, as well as off-the-shelf ones that are used for gaming. You only need three LEDs and a webcam. There is something very appealing about the thought of using a nod as "Return" and a head shake for "Escape". You could just look up slightly to turn caps lock on, maybe?

-

Am I right in thinking you are a full-fingered typist, i.e. you type with all fingers like a secretary, with your hand position as static as possible? To activate, say, CTRL+R you would then twist your hand and separate the pinky? I was looking at how I type. I'm kind of a three-fingered (Neanderthal) typist (index, middle, and thumb for the space bar). This means that when I operate the Control key I move my whole hand, and my pinky is bent downwards/inwards but parallel to the others. So my pivot point is the elbow, controlled by the biceps and shoulders, rather than the wrist. I wonder if it would benefit you to just swap the ALT and CTRL keys to bring them closer to your hand position?

-

Go for it. Hmm. The version in the CR is later than the one in the repository. Maybe drjdpowell is building off his own branch?

-

Not at all. Just saying it's been discussed before and there was no consensus. People were more worried about NULL.

-

It was discussed in the past. The problem is that the JSON spec forbids it specifically.

-

Outside of UX, are there any known issues with things like URL mapping to the LabVIEW code (sanitisation and URL length) or the API keys (poor keyspace)? Are LabVIEW developers even aware that they may need to sanitise and sanity-check URL parameters that map to front panel controls (especially strings)?
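To make the sanitisation question concrete, here is a minimal sketch in Python (an illustration only, not anything from the NI web server): the query string is parsed and every parameter is checked against a whitelist of expected names, a maximum length, and a pattern before anything would be handed on to a front panel control. The parameter names `channel` and `setpoint` and all the limits are hypothetical.

```python
import re
from urllib.parse import parse_qs, urlsplit

# Hypothetical limits; tune them for the actual service.
MAX_URL_LENGTH = 2048
MAX_VALUE_LENGTH = 64
# Whitelist: the only parameters we expect, each with a pattern the value must fully match.
ALLOWED_PARAMS = {
    "channel": re.compile(r"[A-Za-z0-9_]{1,32}"),
    "setpoint": re.compile(r"-?\d{1,6}(\.\d{1,3})?"),
}


def validate_query(url: str) -> dict:
    """Return validated parameters, or raise ValueError before anything reaches the VI."""
    if len(url) > MAX_URL_LENGTH:
        raise ValueError("URL too long")
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    result = {}
    for name, values in params.items():
        pattern = ALLOWED_PARAMS.get(name)
        if pattern is None:
            raise ValueError(f"unexpected parameter: {name!r}")
        if len(values) != 1:
            raise ValueError(f"parameter {name!r} repeated")
        value = values[0]  # already percent-decoded by parse_qs
        if len(value) > MAX_VALUE_LENGTH or not pattern.fullmatch(value):
            raise ValueError(f"bad value for {name!r}")
        result[name] = value
    return result
```

Rejecting unknown or repeated names, rather than ignoring them, keeps the mapping to controls explicit.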

-

As an addendum: do you have specific issues with using the NI web server on public networks? Can you detail specifically why it is "woefully incapable" for us?

-

Setting the receive buffer doesn't help in the case of rate limiting. There is a limit to how many packets can be buffered, and they stack up. If the sender sends faster than you can consume (by that I mean read them out through LabVIEW), you hit that limit and the TCPIP stack stops accepting data; in many scenarios it never recovers, or your application locks up for a considerable time after the sender stops. For SSL, the packets (or records) are limited to 16 KB, so the buffer size makes no difference, and therefore it is not a solution at all in that case. That is probably outside the scope of this conversation, but it does demonstrate that it is not a panacea.

I'm not saying that setting the low-level TCPIP buffer is not useful. On the contrary, it is required for performance. However, allowing the user to choose a different strategy, rather than "just go deaf when it's too much", is a more amenable approach. For example, it gives the user an opportunity to inspect the incoming data and filter out real commands, so that although your application is working really hard just to service the packets, it is still responding to your commands.

As for the rest, Rolf says it better than I. I don't think the scope is bigger. It is just a move up from the simplified TCPIP reads that we have relied on for so long. Time to catch up with what other languages' comms APIs did 15 years ago. I had to implement it for SSL (which doesn't use the LabVIEW primitives) and have implemented it in the Websocket API for LabVIEW. I am now considering also putting it in transport.lvlib. However, no one cares about security, it seems, and probably even fewer people use transport.lvlib, so it's very much a "no action required" in that case. Funnily enough, it is your influence that prompted my preference to solve as much as possible in LabVIEW, so in a way I learnt from the master, but probably not the lesson that was taught.

As to performance, I'm ambivalent, bordering on "meh". Processor, memory and threading all affect TCPIP performance, which is why, if you are truly performance oriented, you may go to an FPGA. You won't get the same performance from a cRIO's TCPIP stack as from even an old laptop, and that assumes it comes with more than a 100 Mb port. Then you have Nagle, keep-alive, etc., which all affect what your definition of performance actually is. Obviously it is something I've looked at, and the overhead is a few microseconds for binary and a few tens of microseconds for CRLF on my test machines. It's not as if I'm using IMMEDIATE mode and processing a byte at a time.
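One generic way to offer "a different strategy than going deaf" is a token bucket in front of the application's read loop. The Python sketch below is an illustration of that common technique, not the SSL or Websocket API implementation: frames beyond the configured rate are dropped and counted, while frames that a hypothetical `is_command` filter recognises are always passed through, so the application keeps responding under load. The rate, burst size and `CMD:` prefix are assumptions.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: `rate` tokens per second, at most `burst` stored.

    Each incoming frame costs one token; when the bucket is empty the frame is
    dropped instead of being left to back up in the OS receive buffer.
    """

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = burst
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def service(frames, limiter, is_command):
    """Drain frames: commands always pass, everything else is rate limited.

    Returns (accepted_frames, dropped_count).
    """
    accepted, dropped = [], 0
    for frame in frames:
        if is_command(frame) or limiter.allow():
            accepted.append(frame)
        else:
            dropped += 1  # drop (and count for a warning) rather than stall
    return accepted, dropped
```

Letting commands bypass the limiter is a design choice; if an attacker could forge commands, they should consume tokens too.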

-

Wall street maybe?

-

I want to secure myself against you, your dog, your friends, your company, your government and your negligence. (Obviously not you personally, just to clarify.) How much effort I put into that depends on how much value I put on a system, the data it produces/consumes, the consequences of compromising its integrity and the expected expertise of the adversary. If I (or my company/client) don't value a system or the information it contains, then there is no need to do anything. If my company/client says it's valuable, then I can change some parameters and protect it more without having to make architectural concessions. The initial effort is high; the continued effort is low. I have the tools; don't you want them too?

When your machine can take arms or legs off, or can destroy thousands of pounds' worth of equipment when it malfunctions, people very quickly make it your problem. "Not my problem" is the sort of attitude I take IT departments to task for, and I don't really want to group you with those reprobates.

Your last sentence is a fair one, though. However, web services are slow and come with their own issues. By simply deploying a web server you are greatly increasing the attack surface, as web servers have to be all things to all people and are only really designed for serving web pages. If you are resigned to never writing software that uses Websockets, RTSP or other high-performance streaming protocols, then you can probably make do. I prefer not to make do but to make it do, because I can. Some are users of technology and some are creators. Sometimes the former have difficulty understanding the motives of the latter, but everyone benefits.

-

The issue is the LabVIEW implementation (in particular, the STANDARD or BUFFERED modes). The actual socket implementations are not the issue; it is the transition from the underlying OS to LabVIEW where these issues arise. STANDARD mode waits until the underlying layer has received all 2 GB, then dies when returning from the LabVIEW primitive. The solution is to use IMMEDIATE mode to consume data in whatever size chunks the OS passes up to LabVIEW. At this point you have to implement your own concatenation to keep the behaviour and convenience that STANDARD gives you, which requires a buffer (either in your TCPIP API or in your application). Once you have accepted the extra complexity of buffering within LabVIEW, mitigation becomes possible, as you can now drop chunks, either because data is coming in too fast for you to consume or because the concatenated size would consume all your memory.

I'd be interested in other solutions, but yes, it is useful (and effective). The contrived example and more sophisticated attempts are ineffective against (1). If you then put the read VI in a loop with a string concatenate in your application, there is not much we can do about that, as the string concatenate will fail at 2 GB on a 32-bit system. So it is better to trust the VI if you are only expecting command/response messages, and to set the LabVIEW read buffer limit appropriately (who sends 100 MB command/response messages?). In a way, you are allowing the user to handle it easily at the application layer with a drop-in replacement for the read primitive; it just also has a safer default setting.

If a client maliciously throws thousands of such packets, then (2) comes into play. Then it is an arms race between how fast you can drop packets and how fast they can throw them at you. If you are on a 1 Gb connection, my money is on the application. You may be DoS'd, but when they stop, your application is still standing.

As said previously, it is a LabVIEW limitation. So effectively this proposal does give you the option to increase the buffer after connection (from the application's viewpoint). However, there is no option/setting in any OS that I know of to drop packets or buffer contents. You must do that by consuming them. There is no point in setting your buffer to 2 GB if you only have 500 MB of memory.
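A minimal Python sketch of that application-side buffering, assuming chunks arrive in whatever size the OS hands up (as with IMMEDIATE mode or a plain `socket.recv()`). The buffer rebuilds a STANDARD-style "exactly n bytes" read but refuses to grow past a configurable `max_buffer`, dropping chunks rather than exhausting memory. The class and method names are hypothetical.

```python
class BoundedReadBuffer:
    """Accumulates arbitrary-sized chunks and serves exact-length reads.

    max_buffer is an assumed, platform-dependent limit (an RT target would use
    a much smaller value than a desktop).
    """

    def __init__(self, max_buffer: int):
        self.max_buffer = max_buffer
        self.buf = bytearray()
        self.dropped_bytes = 0

    def feed(self, chunk: bytes) -> bool:
        """Append a chunk as received; drop it (and count it) if it would overflow."""
        if len(self.buf) + len(chunk) > self.max_buffer:
            self.dropped_bytes += len(chunk)
            return False
        self.buf += chunk
        return True

    def read_exact(self, n: int):
        """Return exactly n bytes if buffered, else None so the caller feeds more and retries."""
        if n > self.max_buffer:
            raise ValueError(f"requested {n} bytes exceeds limit {self.max_buffer}")
        if len(self.buf) < n:
            return None
        data = bytes(self.buf[:n])
        del self.buf[:n]
        return data
```

In a real read loop, `feed()` would be called with each result of something like `sock.recv(4096)` and `read_exact()` polled after every chunk.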

-

Indeed. Transport.lvlib has a more complex header, but it is still a naive implementation, which the example demonstrates. It doesn't check the length and passes it straight to the read, which is the real point of the example. Only on 32-bit LabVIEW; 64-bit LabVIEW will quite happily send it. However, that is a moot point, because I could send the same length header and then send 20 x 107374182-byte packets for the same effect.

I agree. But for a generic, reusable TCPIP protocol, message length by an ID isn't a good solution, as you make the protocol application specific. Where all this will probably be encountered first in the wild is with Websockets, so the protocol is out there. Whatever strategy is decided on, it should also be useful for that, because you have a fixed RFC protocol in that case.

Interestingly, all this was prompted by me implementing SSL and emulating the LabVIEW STANDARD, IMMEDIATE, CRLF etc. modes. Because I had to implement a much more complex (buffered) read function in LabVIEW, it became much more obvious where things could (and did) go wrong. I decided to implement two defensive features:

1. A default maximum frame/message/chunk/lemon size that is dependent on the platform. cRIO and other RT platforms are vulnerable to memory exhaustion well below 2 GB (different mechanism, same result).
2. A default rate limit on incoming frames/messages/chunks/lemons, to try to prevent the platform TCPIP stack being saturated if the stack cannot be serviced quickly enough.

Both of these are configurable, of course, and you can set them to either drop packets with a warning (default) or raise an error.

Yes. This is actually a much more recent brain fart that I've had, and I have plans to add this as a default feature in all my APIs. It's easy to implement and raises an abuser's required competence level considerably.
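Feature (1) largely comes down to checking the length header before trusting it. A Python sketch, assuming a plain 4-byte big-endian unsigned length prefix (the usual "read 4 bytes, then read that many" pattern; Transport.lvlib's header is more complex): the header is validated against `max_frame` before any payload is read, so a forged 2 GB header costs the reader nothing. `FrameTooLarge`, `read_frame` and `send_frame` are illustrative names.

```python
import socket
import struct

HEADER = struct.Struct(">I")  # assumed: 4-byte big-endian unsigned length prefix


class FrameTooLarge(Exception):
    pass


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, looping because recv() may return fewer."""
    parts, remaining = [], n
    while remaining:
        chunk = sock.recv(min(remaining, 65536))
        if not chunk:
            raise ConnectionError("peer closed mid-frame")
        parts.append(chunk)
        remaining -= len(chunk)
    return b"".join(parts)


def read_frame(sock: socket.socket, max_frame: int) -> bytes:
    """Length-then-data read that checks the header before acting on it."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    if length > max_frame:
        # Nothing is allocated or waited for. The stream is now out of sync,
        # so the caller will usually close the connection.
        raise FrameTooLarge(f"header claims {length} bytes, limit is {max_frame}")
    return recv_exact(sock, length)


def send_frame(sock: socket.socket, payload: bytes) -> None:
    sock.sendall(HEADER.pack(len(payload)) + payload)
```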

-

Whose TCPIP read functions use the tried and tested read-length-then-data pattern like the following? (ShaunR puts his hand up.) What happens if I connect to your LabVIEW program like this? Does anyone use whitelists on the remote address terminal of Listener.vi?
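For the whitelist question, a minimal Python sketch of checking the peer address (the equivalent of what Listener.vi reports on its remote address terminal) against a list of allowed networks. The subnets are placeholders. A whitelist only raises the bar; it is not a substitute for authentication.

```python
import ipaddress

# Hypothetical whitelist: only these hosts/subnets may connect.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("192.168.1.0/24"),
    ipaddress.ip_network("10.0.0.5/32"),
]


def is_allowed(remote_address: str) -> bool:
    """Return True only if the peer address falls inside a whitelisted network."""
    try:
        addr = ipaddress.ip_address(remote_address)
    except ValueError:
        return False  # malformed address: reject
    # Membership across IP versions (v6 address, v4 network) is simply False.
    return any(addr in net for net in ALLOWED_NETWORKS)
```

With an IPv4 listening socket this would be used as `conn, (host, port) = server.accept()`, closing `conn` immediately when `is_allowed(host)` is false, before reading a single byte.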

-

Yes. Orange = "Run in UI thread"; yellow = "Run in any thread".

Orange: Requires the LabVIEW root loop. All kinds of heartache here, but you are guaranteed that all nodes will be called from a single LabVIEW thread context. This is used for library calls that are not thread-safe, i.e. when you use a third-party library that isn't (thread-safe) or you don't know whether it is. If you are writing a library for LabVIEW, you shouldn't be using this, as there are obnoxious and unintuitive side effects and it is orders of magnitude slower. This is the choice of last resort, but the safest for most non-C programmers who have been dragged kicking and screaming into doing it.

Yellow: Runs in any thread that LabVIEW decides to use. LabVIEW uses a pre-emptively scheduled thread pool (see the execution subsystems), therefore libraries must be thread-safe, as each node can execute in an arbitrary thread context. Some light reading that you may like - section 9. If you are writing your own DLL, then you should be here, writing thread-safe ones. Most people used to LabVIEW don't know how to. Hell, most C programmers don't know how to. Most of the time, I don't know how to and have to relearn it. If you have a pet C programmer, keep yelling "thread-safe" until his ears bleed. If he says "what's that?", trade him in for a newer model. It has nothing to do with your application architecture, but it will bring your application crashing down for seemingly random reasons.

I think I see a JackDunaway NI Days presentation coming along in the not too distant future.
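What "thread-safe" means in practice, sketched in Python rather than C (in C the same idea uses a mutex such as `pthread_mutex_t` or a Windows `CRITICAL_SECTION`): all shared state lives in one object and every access goes through the same lock, so calls arriving on arbitrary threads, as they do with yellow nodes, cannot interleave. `MeasurementLog` is a hypothetical example, not anything LabVIEW ships.

```python
import threading


class MeasurementLog:
    """A hypothetical library object that is safe to call from any thread.

    Every method touching shared state takes the same lock, so concurrent
    callers cannot interleave a read-modify-write. Without the lock,
    `_count += 1` could lose updates and `_count` and `_samples` could
    drift out of step.
    """

    def __init__(self):
        self._lock = threading.Lock()
        self._count = 0
        self._samples = []

    def add(self, value: float) -> None:
        with self._lock:
            self._count += 1
            self._samples.append(value)

    def snapshot(self):
        # Return a copy so callers never hold the live list while another thread mutates it.
        with self._lock:
            return self._count, list(self._samples)
```

The same rule applies to a DLL: no unguarded globals or statics, and any shared state behind one lock.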

-

There is a whole thread on LabVIEW and SSH, and one of the posts has your solution. Cat is the expert on SSH now. I will, however, reiterate that using a username and password, although exchanged securely, is a lot less desirable than private/public keys. The latter makes brute forcing practically impossible. There is only one real weakness with SSH: verification. When you first exchange verification hashes, you have to trust that the hash you receive from the server is actually from the server you think it is. You will probably have noticed that plink asked you about that when you first connected. You probably said "yeah, I trust it", but it is important to check the host key fingerprint to make sure someone didn't intercept the exchange and send you theirs instead. Once you hit OK, you won't be asked again until you clear the trusted servers cache, so that first time is hugely important for your secrecy.
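Checking the fingerprint doesn't need special tools. The Python sketch below (function names are illustrative) computes a SHA256 fingerprint in the format OpenSSH prints (`SHA256:` followed by the unpadded base64 of the digest of the raw key blob), so what the client shows can be compared against a value read on the server itself, for example from `ssh-keygen -lf /etc/ssh/ssh_host_ed25519_key.pub` at the server's console. Depending on the client version, the first-connection prompt may show an MD5 or a SHA256 fingerprint, so compare like with like.

```python
import base64
import hashlib


def blob_from_pub_line(line: str) -> bytes:
    """Extract the raw key blob from a .pub-style line: '<type> <base64 blob> [comment]'."""
    return base64.b64decode(line.split()[1])


def openssh_sha256_fingerprint(key_blob: bytes) -> str:
    """'SHA256:' + base64 of the SHA-256 digest with the '=' padding removed."""
    digest = hashlib.sha256(key_blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def verify_host_key(key_blob: bytes, expected_fingerprint: str) -> bool:
    """Compare against a fingerprint obtained out of band, never from the connection itself."""
    return openssh_sha256_fingerprint(key_blob) == expected_fingerprint.strip()
```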

-

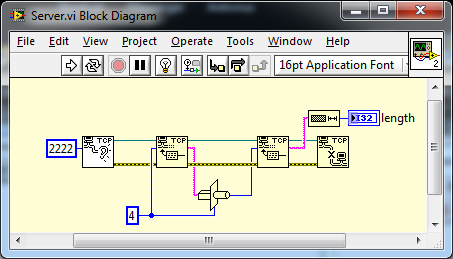

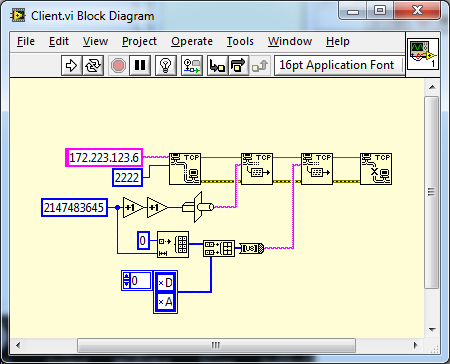

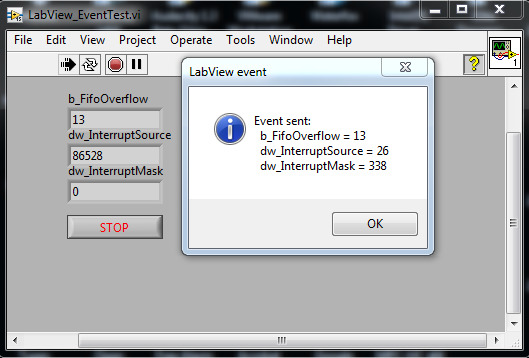

Indeed they are. In fact, it is this that means the function calls must be run in the root loop (orange node). That is really, really bad for performance. If you passed the ref into the callback function as a parameter, then you could turn those orange nodes into yellow ones. This means you can just call the EventThread directly, without all the C++ threading (which is problematic anyway), and integrate into the LabVIEW threading and scheduling. The problem then becomes how you stop it, since you can't use the global flag, b_ThreadState, for the same reasons. I'll leave you to figure that one out, since you will have to solve it for your use case if you want "Any Thread" operation.

When you get into this stuff, you realise just how protected from all this crap you are by LabVIEW, and why engineers don't need to be speccy nerds in basements to program in it (present company excepted, of course). Unfortunately, the speccy nerds are trying their damnedest to get their favourite programming languages' crappy features and caveats into LabVIEW. Luckily for us non-speccy nerds, NI stopped progressing the language around version 8.x.
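One possible shape for "pass the ref in instead of using globals", sketched in Python (in C this is the usual `void *user_data` argument to a callback). Each event loop gets its own context object carrying both its data and its own stop flag, so stopping is handled per instance rather than through a shared global like b_ThreadState. `EventContext`, `on_event` and the negative-value "shutdown" event are hypothetical, and whether this suits your use case is for you to judge.

```python
import threading
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EventContext:
    """Per-instance state handed to the callback instead of living in globals."""
    stop: threading.Event = field(default_factory=threading.Event)
    received: List[int] = field(default_factory=list)


def on_event(ctx: EventContext, value: int) -> None:
    # The callback only touches the context it was given.
    ctx.received.append(value)
    if value < 0:  # hypothetical "shutdown" event
        ctx.stop.set()


def event_loop(ctx: EventContext, source, callback: Callable[[EventContext, int], None]) -> None:
    """Pump events until the source is exhausted or this context is asked to stop."""
    for value in source:
        if ctx.stop.is_set():
            break
        callback(ctx, value)
```

Another thread can stop one loop by calling `ctx.stop.set()` on that loop's context without affecting any other instance.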

-


Answered in a PM.