Everything posted by ShaunR

-

Well, it depends. If you are a LabVIEW island in a C [sic] of programmers, then you only have to learn that you *must* specify the DLL function prototypes, and keep shouting "Threadsafe, Threadsafe, Threadsafe" until their ears bleed. No need to learn C/C++ then. I think every LabVIEW programmer should have a pet C programmer.
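The "specify the prototype" rule is not unique to LabVIEW's Call Library Function Node. As a rough illustration, with Python's `ctypes` standing in for the Call Library Function Node and the C maths library's `sqrt` chosen purely as a convenient example, a DLL call only returns sensible data once the argument and return types are declared:

```python
import ctypes
import ctypes.util
import sys

# Load a C library that exports sqrt. On Windows it lives in the C runtime
# (msvcrt); elsewhere look up libm, falling back to libc.
if sys.platform == "win32":
    libm = ctypes.CDLL("msvcrt")
else:
    libm = ctypes.CDLL(ctypes.util.find_library("m") or ctypes.util.find_library("c"))

# Declare the prototype: double sqrt(double). Without these two lines ctypes
# assumes int arguments and an int return value, so passing 2.0 is rejected
# and the returned double would be misread as an int.
libm.sqrt.argtypes = [ctypes.c_double]
libm.sqrt.restype = ctypes.c_double

root_two = libm.sqrt(2.0)
```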

-

My usual retort to this sort of jibe is: "Real programmers use a number of languages, preferably ones that aren't over 40 years old. Do you also know Algol?" Learning another language won't make you a better LabVIEW programmer; they are generally different paradigms, so they require a different thought process. What it will enable you to do is fill in the gaps in LabVIEW's capabilities and/or leverage other code. My preferred "alt" is actually Free Pascal, for which I use CodeTyphon, but Code::Blocks is my mainstay for C/C++, since there are a lot of projects written in C/C++ which are must-haves for me. C/C++ programmers are ten-a-penny, though (it's generational, so the numbers are dwindling), so I personally wouldn't jump straight to C as next in line after LabVIEW. I would probably opt for Python, which has a much more vibrant and growing community, even though it is (ahem) interpreted. It is also a much better option for web services (where all software is heading), so it will have much more future relevance. Some others might argue for JavaScript, but as far as I'm concerned that's just scripted and interpreted C with obfuscation features (AKA client-side PHP). Python seems more thoughtfully designed and yields elegant code.

TL;DR: If you want to compile DLLs (there be dragons), learn a bit of C. If you want to learn another language, learn Python.

-

My tuppence: none work great. This comes up at least once a year and always ends the same way. SVN externals work OK (my preference). Git submodules work, sort of (Git is too complicated for me). Check out into a single directory tree so LV uses relative paths to find subVIs. The enemy is linking and re-linking, which has never been resolved but is better than it used to be. Resign yourself to using text-based SCC as a binary backup system from which you can quickly restore or branch, and life is good. LabVIEW has always needed its own SCC system, but NI have little interest in providing one. We cope at best but usually suffer.

-

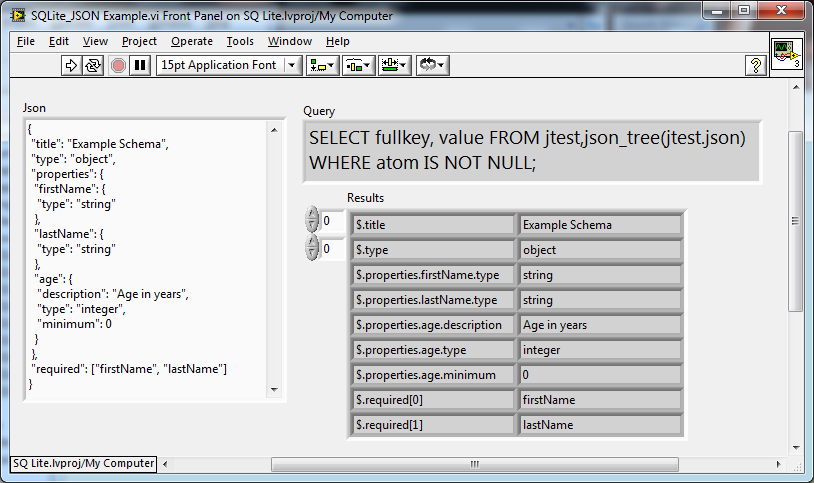

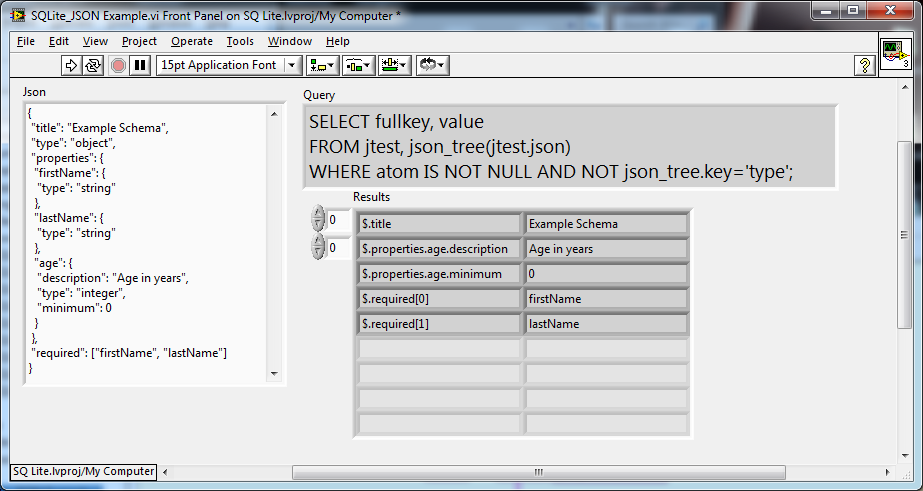

This is awesome. How much of a pain in the backside WAS flattening JSON objects so we could deal with them as self-indexing arrays or name/value tables? And we can filter them on the fly too. How easy is that?
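A minimal sketch of that flattening, using Python's built-in `sqlite3` module and assuming the linked SQLite library has the JSON functions available (they are built in by default from SQLite 3.38.0). The document and its keys are made up for illustration; `json_each` gives name/value rows for one level, and `json_tree` walks the whole document so a `WHERE` clause can filter on the fly:

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical JSON document describing a rig and its channels.
doc = json.dumps({"name": "rig1",
                  "channels": [{"id": 0, "volts": 1.5}, {"id": 1, "volts": 3.3}]})

# json_each: the top level of the object as key/value rows.
rows = conn.execute("SELECT key, value FROM json_each(?)", (doc,)).fetchall()

# json_tree: every node in the document, filtered to the "volts" members.
volts = conn.execute(
    "SELECT fullkey, value FROM json_tree(?) WHERE key = 'volts'", (doc,)
).fetchall()
```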

-

SQLite has added the json1 extension module to the source tree. It implements eleven SQL functions and two table-valued functions that are useful for managing JSON content stored in an SQLite database. This is a huge feature improvement that effectively allows transparent queries of JSON strings embedded in the DB, and it will be of special interest to web-enabled LabVIEW applications with SQLite back-ends, for direct-to-DB insertions and remote queries. It achieves an impressive parse speed of over 300 MB/s (allegedly).
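To show what transparent queries of embedded JSON strings look like in practice, here is a small sketch using Python's `sqlite3` module. The `readings` table and its payloads are hypothetical, and it assumes your SQLite build includes the JSON functions; `json_extract` reaches into the stored text directly from SQL:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table: each row holds a raw JSON string, e.g. posted by a web client.
conn.execute("CREATE TABLE readings (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany(
    "INSERT INTO readings (payload) VALUES (?)",
    [('{"sensor": "T1", "value": 21.5}',),
     ('{"sensor": "T2", "value": 19.0}',),
     ('{"sensor": "T1", "value": 22.1}',)],
)

# Filter and project on fields inside the stored JSON, no parsing in app code.
t1 = conn.execute(
    "SELECT json_extract(payload, '$.value') FROM readings "
    "WHERE json_extract(payload, '$.sensor') = 'T1' ORDER BY id"
).fetchall()
```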

-

Full DataGridView for LabVIEW - OPEN SOURCE project underway

ShaunR replied to Mike King's topic in User Interface

Have you considered making it an XControl so it is a drop-in replacement?

-

"Upsert" is a database function. I don't know how the salesforce thing is set up but I don't think this has anything to do with HTTP but is probably describing a query executed by the HTTP request. PATCH is detailed here. It won't help you with using the NI HTTP VIs though because I believe they only implement GET and POST. You will have to fall back to the TCPIP primitives and hand craft the HTTP headers and body. Maybe Rolfs HTTP VIs would be useful here.

-

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

You seem to be all over the place with this. I'm not even sure you know what you want. You started off with a complaint about toolkit developers failing to deliver multi-client websocket servers. You then moved on to complaining that the websocket toolkits only enable LabVIEW UIs to be exported (all of which is untrue), and ended up complaining about not being able to write LabVIEW code in a browser (which only NI would be able to do). When I ask you for specific examples of failures or requirements, you seem to just ride off on another unicorn hunt. Maybe you should post your requirement on the Ideas Exchange, because I don't think it is anything I can help with.

-

Your impression of Brits is rather coloured when you can't even spell 50.

-

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

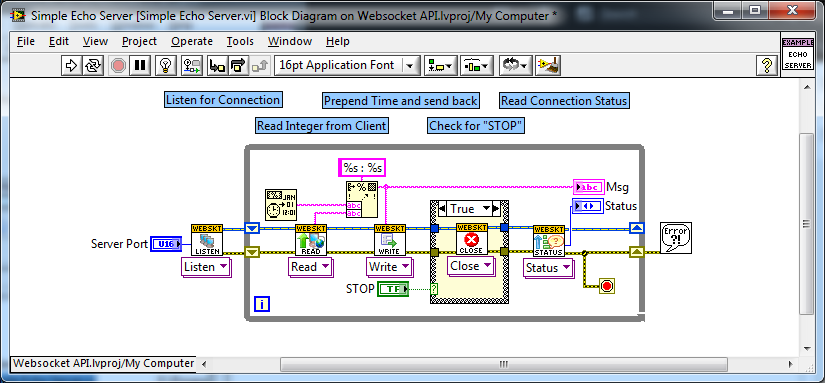

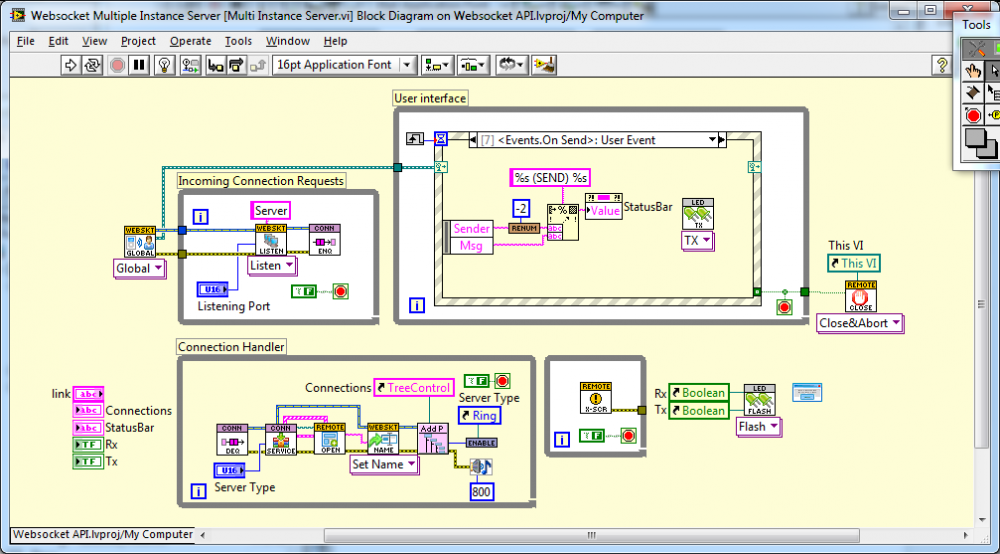

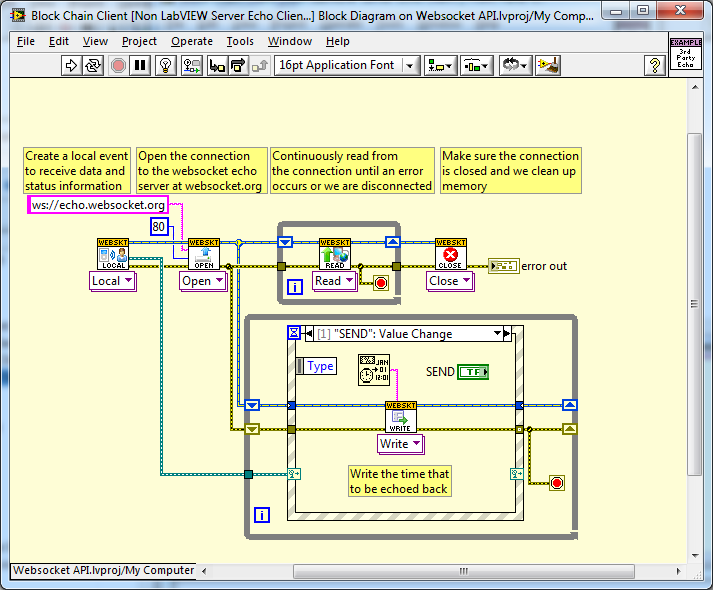

1. You don't need a client. That's what the browser is. If you want a LabVIEW client, then you need to write one in LabVIEW. If you want a C# one, then you need to write one in C#, etc. Websockets is a protocol! If you do want a LabVIEW client, then here is one that sends the time to the www.websocket.org echo test server. The Open, Read, Write and Close should be familiar concepts.

2. You don't need a web server. With websockets, a web server is sometimes used so that the browser can find and download the HTML and JavaScript. It isn't a requirement, though, if the client already has the HTML and JavaScript. The web server is a distribution tool for the HTML and JavaScript.

Here is a LabVIEW websocket server. You can see it is just a direct replacement for the TCP/IP primitives and you use it in exactly the same way. It supports one connection, just as it would if you used the TCP/IP primitives. Here is another websocket server that supports multiple clients. You can download and play with this one here. You can see it uses the primitives to create the server in a producer/consumer fashion, just as you would with the TCP/IP primitives. There's nothing tremendously complicated here, and since the VI services are launched dynamically, you just need to add them to the "Always Include" list of your build spec.

If you use a web server to let the end user download the HTML and JavaScript, rather than supplying it as, say, a zip file, the web server still has nothing to do with the websocket communication. Where the toolkits vary is in how they get the HTML and JavaScript to the client's browser (which is required only for browsers). Some rely on a web server, and if they rely on the NI web server then you need all the deployment malarkey. Some pretend they are a web server and spurt out the webpage on port 80. None of that really has anything to do with websockets; they are just ways of deploying the HTML and JavaScript to web browsers.

You still have to create/write the HTML and JavaScript, and some devs are attempting to do that dynamically (like Thomas). So I will try one more time: what are your concerns with the available toolkits around creating multi-client, browser-enabled applications? As far as I am aware, they all can, but you insist they "fail".
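To underline that websockets is a protocol running over TCP rather than a web server feature, here is a sketch in Python of the two pieces a server has to implement according to RFC 6455: the handshake response key and a basic text frame. It covers only unfragmented, unmasked server-to-client text frames; frames coming from a client are always masked, and this sketch does not decode them:

```python
import base64
import hashlib
import struct

# Fixed GUID defined by RFC 6455 for computing the handshake response.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

def accept_key(client_key: str) -> str:
    """Value the server returns in the Sec-WebSocket-Accept header."""
    digest = hashlib.sha1((client_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")

def text_frame(message: str) -> bytes:
    """Build an unmasked, unfragmented server-to-client text frame."""
    payload = message.encode("utf-8")
    header = bytes([0x81])  # FIN bit set + opcode 0x1 (text)
    n = len(payload)
    if n < 126:
        header += bytes([n])                          # 7-bit length
    elif n < 65536:
        header += bytes([126]) + struct.pack("!H", n)  # 16-bit extended length
    else:
        header += bytes([127]) + struct.pack("!Q", n)  # 64-bit extended length
    return header + payload
```

The `Sec-WebSocket-Accept` value goes back in the HTTP 101 response to the client's upgrade request; after that, everything on the socket is frames like the one built here, written and read with the same TCP primitives.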

-

It doesn't stop me, so I expect people in here are acclimatised. Guess how many toolkits Mac users buy? Guess how many queries I have had about installing any of my toolkits on a Mac?

-

dynamic content in other web servers

ShaunR replied to smithd's topic in Remote Control, Monitoring and the Internet

Nothing really to say except nice work. I have come to the conclusion that too little rediscovery is sought, and many would learn a great deal by doing exercises like this one, rebuilding from first principles the technology we all take for granted.

-

Easy, tiger. He was just being jocular with an expression.

-

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

Hmmm. Extreme A isn't an extreme, IMO. It is what all languages provide when it comes to servers: diddly squat. Servers are applications, not language constructs, so you have to write them. Extreme B has been sculpted by your LabVIEW experience and, again, I don't see it as an extreme.

I don't know of many corporate environments that use the NI webserver. They all want interfaces to their Apache, Nginx, Lighttpd etc. I don't know of a single IT department that I could convince to install the NI webserver, let alone support it, so I'm not quite sure where to start with this. That aside, probably the only place the NI webserver is used exclusively is in embedded NI products, so that may be why this particular method is appealing to you.

The deploy/undeploy capability from the project manager is an abomination, in my opinion. I don't want to need a full version of LabVIEW just to install servers. And that is before we get to how undocumented it is, and how no NI support is available, when interfacing to the Project Explorer. It's a lot of effort for very little gain; the increase in sales due to this feature would probably be just you, with what? One licence? There is nothing stopping you using one of the toolkits and creating such a deployment method, but this is a very subjective requirement based on a particular workflow in one use case. So I am not surprised you don't see it, and you won't unless you specifically commission someone to do it.

But in relation to the available toolkits, I'm not seeing any arguments around issues with creating multi-client, browser-enabled applications. In particular, can you expand on:

Your terminology concerns me here. "Work on the system" implies that you think these toolkits are an IDE extension for multi-developer programming environments. This is clearly nowhere near their remit.

Over my head. Need help on designing a framework.

ShaunR replied to Mojito's topic in LabVIEW General

Many of us aren't fans of QMH. I'm somewhat ambivalent about them, but there are a few points I will make from your description.

1. Future proofing is marketing speak. Adding complexity for something that is not required, and is just a guess at future requirements, is unproductive, will bite you, and adds nothing except complexity. Design your system for the requirements now and iteratively innovate when necessary to encompass new requirements. Experienced engineers will recognise patterns in solutions and code in a way that they know will be easy to modify in the future, but the key point there is *easy to modify*, not *seamlessly integrate*.
2. If you are going to have multiple consumers of your messages/data, then queue-based message handling is probably the wrong solution. You will get tied in knots re-transmitting messages/data, since queues are a many-to-one topology and you are trying to create a one-to-many or many-to-many architecture. An event-based architecture is much better suited to this, but you will find very little in the way of formal examples or templates outside of user interfaces.
3. You can have more than one architecture within your program! I see this all the time, even with knowledgeable engineers. They choose a magic-bullet framework, shoehorn all their code into it, and it becomes a mess that only the developer can ever understand and that is riddled with bugs. Modular code can have frameworks or architectures at the module or abstraction level, and you can mix and match depending on module requirements (QMH, State Machines, Producer Consumer etc.). You can then choose one or more architectures to sew all the modules together into a cohesive program with defined areas of responsibility that can be debugged in isolation.

When you are new to designing software systems, I would suggest the following axiom when using LabVIEW: design the system top down (usually from the UI) but create the modules/code bottom up. Working bottom up, a natural partitioning for code reuse arises that is different from the partitioning of responsibilities that top-down design produces. It is the reconciliation of these two partitioning views that yields robust and modular code.

So, enough sermonising. Here are some insightful write-ups you may find useful:

- NI QMH Template
- QMH's Hidden Secret
- Some Context
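A minimal Python sketch of point 2, assuming nothing about any particular LabVIEW framework: giving each subscriber its own queue turns many-to-one queues into one-to-many delivery (roughly how a LabVIEW user event delivers to every event registration), whereas consumers sharing a single queue compete for each message. The `Broadcaster` class is hypothetical:

```python
import queue

class Broadcaster:
    """One-to-many delivery: each subscriber owns a private queue, so every
    published message reaches every subscriber."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self):
        q = queue.Queue()
        self._subscribers.append(q)
        return q

    def publish(self, message):
        for q in self._subscribers:
            q.put(message)

bus = Broadcaster()
logger_q = bus.subscribe()
display_q = bus.subscribe()
bus.publish("temperature=21.5")
# Both consumers receive the same message.
both = (logger_q.get_nowait(), display_q.get_nowait())

# Contrast: with one shared queue, consumers compete and only one of them
# receives any given message.
shared = queue.Queue()
shared.put("temperature=21.5")
first = shared.get_nowait()
second_sees_nothing = shared.empty()
```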

inspect queued events in a compiled application

ShaunR replied to ensegre's topic in Development Environment (IDE)

Correct.

-

inspect queued events in a compiled application

ShaunR replied to ensegre's topic in Development Environment (IDE)

If you want a queue, use a queue primitive. The queue that is used for events is an opaque implementation detail of how events are realised internally within LabVIEW. If you want a more accurate timestamp, pass it as a parameter. In some of my event systems, and even some TCP/IP implementations, I include a "send" time. You can hook the event with a viewer and logger if you want to analyse your messaging and compare sent and received times. Forget that events have a queue, and even forget that they happen to have an order on any particular event stack. Certainly don't rely on it; you'll live longer and with more hair.
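The "pass it as a parameter" idea, sketched in Python with hypothetical names: the message carries its own send time, and a receiver/logger compares it with the receive time instead of relying on any internal event queue. `time.monotonic()` is only comparable within one process; across machines you would need a synchronised wall clock instead:

```python
import time
from dataclasses import dataclass, field

@dataclass
class Message:
    """Hypothetical event payload that carries its own send time."""
    name: str
    data: object
    # Stamped when the message is created, i.e. at the moment of sending.
    sent: float = field(default_factory=time.monotonic)

log = []

def on_message(msg: Message) -> None:
    """Receiver/logger: records sent and received times for later analysis."""
    received = time.monotonic()
    log.append((msg.name, msg.sent, received, received - msg.sent))

on_message(Message("setpoint", 42.0))
```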

-

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

There are two modes of operation that users typically want.

1. A single application which deals the same data to multiple clients. This is fine for read-only interfaces, but for bidirectional ones you have to decide who has control for sending data to it: one client to rule them all, otherwise you get config whack-a-mole. It is the preferred option for resource reasons.
2. An instance for each client, which allows each client to control their own instance. Each client sees something different, and if making them all see the same thing is required, you have to handle it in the software itself (a centralised data store). This is extremely resource intensive and can bring your server to its knees with enough clients.

There are lots of similar projects around the web. With this one, you create plug-in data sources that interface to your back-end. I prefer that model; otherwise they would have to define a proprietary protocol and dictate comms methods. This way they leave it up to you. There are examples, and it is fairly easy to implement.
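A rough sketch of the first mode in Python, with a hypothetical first-come-first-served control policy: every client gets the same data, but only the controlling client's change requests are applied, which is what avoids the config whack-a-mole. The class and client names are illustrative only:

```python
class SharedSession:
    """Sketch of mode 1: one application serves the same data to every
    client, but only one client (the controller) may change it."""

    def __init__(self):
        self.clients = set()
        self.controller = None  # client id currently holding control
        self.settings = {}

    def connect(self, client_id):
        self.clients.add(client_id)
        if self.controller is None:
            self.controller = client_id  # hypothetical policy: first come, first served

    def disconnect(self, client_id):
        self.clients.discard(client_id)
        if self.controller == client_id:
            # Hand control to any remaining client, or to nobody.
            self.controller = next(iter(self.clients), None)

    def request_change(self, client_id, key, value):
        """Apply a change only if it comes from the controlling client."""
        if client_id != self.controller:
            return False
        self.settings[key] = value
        return True

    def snapshot_for_all(self):
        """Every connected client receives an identical copy of the data."""
        return {c: dict(self.settings) for c in self.clients}


session = SharedSession()
session.connect("browser-A")
session.connect("browser-B")
session.request_change("browser-B", "rate", 10)  # refused: B is read-only
session.request_change("browser-A", "rate", 10)  # accepted
```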

Write to Binary File Cluster Size [x-post to ni forums]

ShaunR replied to GregFreeman's topic in Database and File IO

Yes. If you use the Variant To Flattened String instead, you will see the type info on a separate terminal. That won't get rid of the length that is prepended to strings, though. You will have to process the flattened string and remove that manually.
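Stripping the length manually is straightforward once you know the layout. A sketch in Python, assuming LabVIEW's default big-endian byte order and a four-byte (I32) length ahead of each string; the (I32 id, string name) cluster is a made-up example:

```python
import struct

def read_lv_string(buf: bytes, offset: int = 0):
    """Read one flattened string starting at `offset`.

    Assumes a big-endian I32 length followed by that many bytes.
    Returns (string_bytes, next_offset).
    """
    (length,) = struct.unpack_from(">i", buf, offset)
    start = offset + 4
    data = buf[start:start + length]
    if len(data) != length:
        raise ValueError("buffer ends before the declared string length")
    return data, start + length

# Hypothetical flattened cluster of (I32 id, string name), big-endian.
flat = struct.pack(">i", 7) + struct.pack(">i", 5) + b"probe"
(cluster_id,) = struct.unpack_from(">i", flat, 0)
name, end = read_lv_string(flat, 4)
```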

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

Sort of the wrong question. It's like asking "can my TV pick up satellite?" Well, yes, if you have the right receiver. Can you build browser interfaces to LabVIEW with menus, cursors and subpanel mimic screens with these toolkits? With many of them, yes. Getting UI information out of LabVIEW into a browser is trivial. Getting the user control requests back in to affect LabVIEW is far more complicated and requires a protocol your specific LabVIEW implementation understands. Is there a wrapper solution where you flip a switch and it is in your web browser? Yes. It's called Remote Panels.

I think people see these technologies fundamentally differently to me. I see them as a way to "fix" what NI refuse to do. Maybe I have to address the general need for this technology rather than just my own and my customers'. I see developers being able to view/gather information and configure disparate systems in beautifully designed browser interfaces, with streaming real-time data from multiple sensors (the Internet of Things). Interfaces like this, but with LabVIEW driving them. Customisable dashboard-style interfaces to LabVIEW and interactive templated reporting, all with LabVIEW back ends. You can do this with these toolkits. Most LabVIEW developers, however, just seem to want their crappy LabVIEW panels in a browser frame. That is like being given a house and using it as a shed.

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

I think it is a good idea to do so. This is detracting from ThomasGutzler's software solution, which is an ongoing development and is getting lost in the general debate.

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

That just boils down to "I don't know how to do it and I won't pay someone to do it". When you realise that it is just messaging for control and UI updates, you end up at a point where you can use any user interface in any language, and two main possibilities come out where web browsers are concerned: REST and Websocket. Then you start pressurising the IT department's web developers to stop playing Doom and do something useful. Hooovahh's list presents all the products as equivalent. They are not. They just have the common denominator of being able to display a LabVIEW UI in a web browser, and they demo as such for LabVIEW developers. If your current client/server relies on NI Server remote functions, you are locked in, I'm afraid. If you use messaging, you can create headless back-end services with rich browser interfaces to serve multiple users.

Fastest way to read-calc-write data with cDAQ

ShaunR replied to volatile's topic in Application Design & Architecture

I presume you are using custom scales so you don't have to calculate inside your code? A quick win over the classic read-then-write for DAQ is to pipeline. If that isn't good enough, you may need hardware-timed, clocked and routed signals, and that will depend on the hardware you are using.
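A sketch of the pipelining idea in Python, with `read_samples` and `calculate` as hypothetical stand-ins for the DAQ read and your calculation: while one block is being calculated, the next is already being read and the previous one written. In LabVIEW this is usually done with shift registers or feedback nodes in a single loop; here threads and bounded queues play that role, and they only overlap in practice when the stages release Python's GIL (as blocking driver I/O typically does):

```python
import queue
import threading

def read_samples(i):
    """Hypothetical stand-in for a DAQ read; returns one block of samples."""
    return [i, i + 1, i + 2]

def calculate(block):
    """Hypothetical stand-in for the per-block calculation."""
    return [2 * x for x in block]

def pipeline(n_blocks):
    # Bounded queues between stages, so a fast stage cannot run far ahead.
    to_calc, to_write = queue.Queue(maxsize=2), queue.Queue(maxsize=2)
    written = []

    def reader():
        for i in range(n_blocks):
            to_calc.put(read_samples(i))
        to_calc.put(None)  # sentinel: no more data

    def calculator():
        while (block := to_calc.get()) is not None:
            to_write.put(calculate(block))
        to_write.put(None)

    def writer():
        while (block := to_write.get()) is not None:
            written.append(block)  # stand-in for the DAQ write

    threads = [threading.Thread(target=f) for f in (reader, calculator, writer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return written
```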

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

If you're giving away free advertising, I expect at least a mention.

Interactive Webpage VI Options

ShaunR replied to hooovahh's topic in Remote Control, Monitoring and the Internet

I'm feeling a bit left out