Posts: 1,986

Days Won: 183
Everything posted by drjdpowell

-

GOOP Development Suite v4.5 is released

drjdpowell replied to spdavids's topic in Object-Oriented Programming

An ‘empty’ object in LabVIEW is just the default constant of that wire type. So you can just ask if your ‘Dough’ is not equal to the default ‘Dough’ constant. -

PRAGMA synchronous = OFF

-

Unloading VI From Subpanel

drjdpowell replied to GregFreeman's topic in Application Design & Architecture

Also, isn’t this unnecessarily complicated? You are already keeping track of the communication references to these subcomponents; now you’re going to have to keep track of the VI refs of their VIs (plus whatever mechanism you use to get the VI refs to the top-level subpanel owner). An “Insert into the attached subpanel” message is trivially simple. -

Unloading VI From Subpanel

drjdpowell replied to GregFreeman's topic in Application Design & Architecture

Why does each VI have a copy of the subpanel reference? I would have the top-level owner of the subpanel simply send the subpanel ref to the chosen subView, after calling “Remove VIEW”. The receiving subView would call “Insert VI” as part of handling the message, but wouldn’t bother saving the reference. -

LV Dialog box holds Open VI Reference

drjdpowell replied to eberaud's topic in Application Design & Architecture

Getting the ref with “Open VI Ref” is blocking, but increasing the size of the pool when needed does not, so there isn’t a need to prepopulate the clone pool. On my benchmarks adding an extra clone is about 1000us, while reuse of a clone in the pool is about 100us (LabVIEW 2011). -

LV Dialog box holds Open VI Reference

drjdpowell replied to eberaud's topic in Application Design & Architecture

As a word of warning to anyone reading this, be aware of the issue of Root Loop blocking by things such as the User opening a menu. Safety-critical code must never be dependent on Root-Loop blocking functions, such as “Open VI ref” or “Run VI”; the User can open a menu then walk away. If in doubt, test it by opening a menu and leaving it open just before your critical code executes. If it doesn’t execute until you dismiss the menu then you have the problem. The simplest fix is to open necessary references once at the start of the program, and use the ACBR node instead of the “Run VI” method. -

LV Dialog box holds Open VI Reference

drjdpowell replied to eberaud's topic in Application Design & Architecture

I would suspect it is a “Root Loop” issue. I know that Open VI Ref can require LabVIEW’s Root Loop, and this loop is blocked by the User opening a menu. Perhaps a LV dialog also blocks root loop? BTW, if it is Root Loop, then a method used to get around this problem is to use a single reference to a pool of clones to do all asynchronous running of VIs. Then the ref is only acquired once, before any action that blocks root loop can happen. The latest Actor Framework’s “Actor.vi” is an example of this. -

"Rebundling" into class private data.

drjdpowell replied to GregFreeman's topic in Object-Oriented Programming

I don’t have a consistent plan, but I usually tend to assume it modifies the object (or could at some future point be changed such that it modifies the object). If I’m sure it doesn’t (and never will), then I consider removing the object output, which is the clearest indication you can have that you aren’t changing the object. One can also use the IPE structure to unbundle, instead of a private accessor method as you are using. Then one is required to connect up the output. Connecting the output never hurts. -

That might be best, and easy to do. Also easy to do (just one abstract class with two empty methods). I would have the “to” and “from” methods deal in JSON Value objects rather than a cluster in variant; the User can always call the Variant to JSON methods if they want, and they have the option of using the direct JSON methods as well. — James

-

Feedback Requested: The LabVIEW Container Idea

drjdpowell replied to Chris Cilino's topic in LabVIEW General

Have a look at JSON LabVIEW in the CR, as it uses another container-like structure so might be similar (or not, as I haven’t looked at your code). Is your Container by-ref or by-value? Looks like by-ref as you have “Create”, “Destroy” and “Copy” methods. -

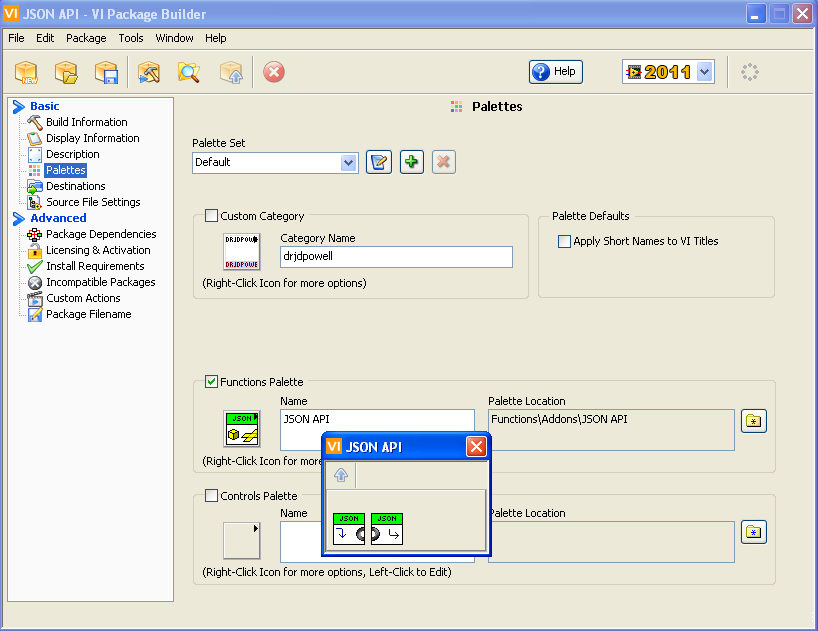

The repo is now under “LAVAG”. I don’t know why your palettes are different. I’ve just noticed that I have TWO “JSON API” palettes under “Addons”: the one with two items, and the full set that you see. I’ll try and investigate.

-

The VIPM package includes a palette with only the two polymorphic Get/Set VIs. Are you creating a different VIPM package?

-

What palettes are you looking at? My JSON API palette only has two items (the Get and Set polymorphic VIs).

-

Should an application have File >> Exit?

drjdpowell replied to Aristos Queue's topic in LabVIEW General

I’ve obviously not properly thought about it, as I had to check my latest app and found I’ve left File>>Exit in but not trapped it to shut down properly. Oops! I always trap “panel close” as a shutdown command. -

Interested in your ideas for improving the JKI State Machine

drjdpowell replied to Jim Kring's topic in LabVIEW General

Below the top-level cluster I tend to have objects (or arrays of objects) whose methods do the “heavy lifting”. The typical “state” will unbundle only a couple of things and call a couple of methods. So I am doing something similar to what you do. But the problem with the top-level cluster is that it is a grab-bag of everything; UI details mixed with INI file details mixed with analysis details. It’s not cohesive. Because the objects/typedefs that make up the top-level cluster ARE chosen to be cohesive, it is rare to need more than a couple of them. If you find you’re unbundling six things, that’s a good reason to stop and consider if some of them really should be a class/typedef, but only if they really naturally go together. -

Interested in your ideas for improving the JKI State Machine

drjdpowell replied to Jim Kring's topic in LabVIEW General

I actually never do that. The main cluster is a set of data related by being “stuff this QSM needs”, rather than being a cohesive single thing. Even when 90% of it is a cohesive thing (and then that part can be an object), there will be extra bits of data (update flags, say) that don’t belong in it. So I never make the full cluster a typedef or object, even though it is mostly composed of typedef/objects. -

Show another picture of what you are doing. Shaun’s idea should work.

-

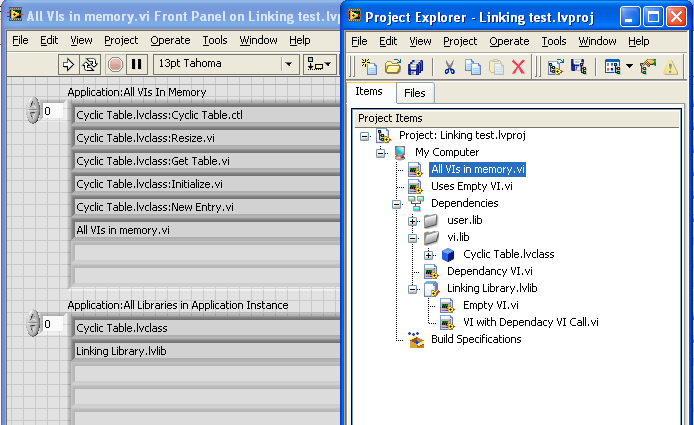

Uh oh. You’re right. I hadn’t appreciated this before. I built a test project and library myself (LabVIEW 2011). I used the Application properties to find what VIs are actually in memory as opposed to just listed in dependencies. The unused VIs in a library are NOT actually loaded, but any libraries in dependencies ARE, and if that library is a class, then the contained VIs (and all their dependencies) are loaded. Here “Uses Empty VI” is in the project but not loaded. It uses “Empty VI”, which is in the same library as a VI that calls a method of the “Cyclic Table” class. None of the three VIs in the chain are loaded, but the Library and Class are. NI really needs to fix the library system; it’s badly broken. Added later: a useful link.

-

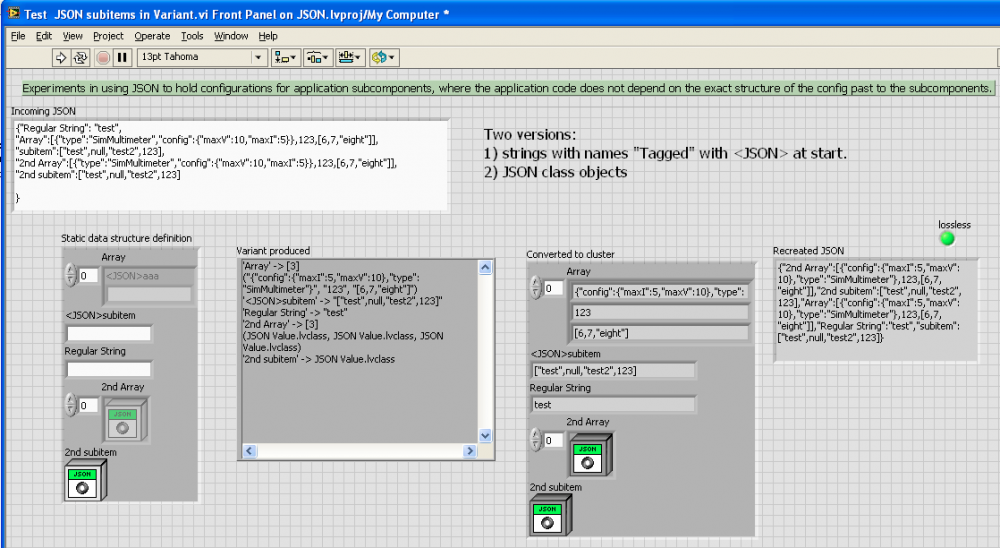

Also see “Test JSON subitems in Variant.vi”. I’ve added the ability to use “JSON Value” class objects in the Variant JSON tools. I should have thought of this first, before using “tagged” string names, so perhaps the tagged string stuff should be dropped.

-

I’ve made an addition that I could use comments on. See “Mixed type array experiment JDP.vi” in the Bitbucket repo. Basically it is about JSON like this:

{"instruments":[{"type":"SimMultimeter","config":{"maxV":10,"maxI":5}}, {"type":"SimVoltmeter","config":{"Voltage":100}}, {"type":"SimDAQ","config":12.3}]}

Here “instruments” is an array of objects corresponding to modules. Each has an identifier, and also a configuration object that varies from module to module. We can’t make a LabVIEW cluster that matches this structure, so at the moment we have to use the lower-level functions to get at it.

However, if we have a means of defining a string in a cluster as a JSON string, then we can hold arbitrary JSON subitems in this string. To do this I have added support for a “tag”, <JSON>, at the beginning of the name of any string in a cluster. With this, we can convert the above into a cluster containing an “instruments” array of clusters of two strings, named “type” and “<JSON>config”. Thus we can still work with static clusters, while retaining dynamic behavior at lower levels.

Have a look at the example and see what you think.

-- James
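For readers who don't have the VI to hand, here is a rough text-language analogue of the tagged-string idea. It is only a sketch in Python, not the LabVIEW implementation: the `Instrument` class, the `config_json` field (standing in for the “<JSON>config” string) and `parse_instruments` are names invented for illustration.

```python
import json
from dataclasses import dataclass
from typing import List

# The example document from the post, with plain ASCII quotes.
RAW = (
    '{"instruments":['
    '{"type":"SimMultimeter","config":{"maxV":10,"maxI":5}},'
    '{"type":"SimVoltmeter","config":{"Voltage":100}},'
    '{"type":"SimDAQ","config":12.3}]}'
)


@dataclass
class Instrument:
    """Static record, analogous to the cluster of two strings."""
    type: str
    config_json: str  # hypothetical stand-in for the "<JSON>config" string


def parse_instruments(text: str) -> List[Instrument]:
    """Decode the fixed outer structure; keep each config as raw JSON text."""
    doc = json.loads(text)
    return [
        Instrument(type=item["type"], config_json=json.dumps(item["config"]))
        for item in doc["instruments"]
    ]


instruments = parse_instruments(RAW)

# Each module can decode its own config later, whatever shape it has.
multimeter_config = json.loads(instruments[0].config_json)
daq_config = json.loads(instruments[2].config_json)
```

The outer record stays fixed while the per-module configuration remains free-form, which is the trade-off the tag is meant to give.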

-

Seems a bit strange to be considering which number is most reasonably used as “not a number”, but I note that “-1” is often used in LabVIEW as “not a number” in things like Find in Array. “-1” in U32 is 4294967295.
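The U32 figure is just two's-complement wraparound. A minimal Python check, where `as_u32` is a helper name made up for this sketch:

```python
U32_MASK = 0xFFFFFFFF  # 2**32 - 1


def as_u32(value: int) -> int:
    """Reinterpret an integer as an unsigned 32-bit value (wraps modulo 2**32)."""
    return value & U32_MASK


minus_one_as_u32 = as_u32(-1)
```

Here `minus_one_as_u32` comes out as 4294967295, the largest value a U32 can hold.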

-

Code-Development collaboration through Bitbucket (GIT and Mercurial)

drjdpowell replied to Ton Plomp's topic in LAVA Lounge

Yes. Source code is on Bitbucket; it is the VIPM installers that are in the LAVA CR. One should also upload the installer to the files section of Bitbucket (though I often forget that). Source code should also be in as early a LabVIEW version as reasonable (I use 2011), to maximize the number of people who can use it. -

You can also, BTW, take the property-node-in-array sections and put them in a subVI set to run in the UI thread. Running your VI in the UI thread reduced the “Grad” update time from 450 to 250ms (this is because property nodes run in the UI thread, and thread switching is part of the performance problem with property nodes).

-

All the cell color-formatting property node calls in the “Grad” event seem to be your main problem (they take 450ms on my computer). If you added a comparison and only updated changed cells you’d get much better performance.
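The compare-then-update idea, sketched in Python since a block diagram can't be pasted as text. Everything here is hypothetical: `set_cell_color` stands in for the expensive property node call, and the grids are plain lists of colour values assumed to have the same shape.

```python
from typing import Callable, List

Grid = List[List[int]]  # one colour value per table cell


def update_changed_cells(
    shown: Grid,
    wanted: Grid,
    set_cell_color: Callable[[int, int, int], None],
) -> int:
    """Call set_cell_color only for cells whose colour differs.

    `shown` caches what is currently displayed and is updated in place.
    Returns the number of expensive calls actually made.
    """
    writes = 0
    for row, (shown_row, wanted_row) in enumerate(zip(shown, wanted)):
        for col, (old, new) in enumerate(zip(shown_row, wanted_row)):
            if old != new:
                set_cell_color(row, col, new)  # the slow UI call
                shown_row[col] = new
                writes += 1
    return writes


# Usage: only one cell differs, so only one UI call is made.
calls = []
shown = [[0, 0], [0, 0]]
wanted = [[0, 1], [0, 0]]
first_pass = update_changed_cells(shown, wanted, lambda r, c, v: calls.append((r, c, v)))
second_pass = update_changed_cells(shown, wanted, lambda r, c, v: calls.append((r, c, v)))
```

The cost then scales with the number of cells that changed rather than with the size of the table.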