-

Posts

763 -

Joined

-

Last visited

-

Days Won

42


Everything posted by smithd

-

Looks like you might have an unsupported Excel version? Although this says 2016 should be compatible... http://www.ni.com/product-documentation/54029/en/

The fast answer is that you should try to click on each of the broken nodes and see if you can re-select whatever is shown. So for example, click on the "chart" node and see if "application" is an option, and so on. I've done this before with "incompatible" versions (e.g. Excel 2016 with Report Gen 2014, or the Database Toolkit in 64-bit LabVIEW) and it's worked, but obviously you get no guarantees.

There are also other Excel toolkits out there, although I know paying more is not ideal. For example: http://sine.ni.com/nips/cds/view/p/lang/en/nid/212056

see next post

-

Did you try just emailing the company?

-

Yeah, I suppose splitting it up there are three sorts:

- Obvious issues: things you can get down to a simple test case, or easily describe (e.g. when I use quickdrop to insert delete from array onto a wire, it isn't correctly wired)
- Crashes: They already have NIER. It would be interesting to see how common a crash you just got is, but at least they know (for the x% of LabVIEW instances that are on a network and not firewall blocked).
- Wtfs: This is what you described, generally one-off issues

And of course I have no clue what proportion of issues fall into which bucket. Take crashes, for example -- I wouldn't guess the total quantity of distinct crash types is all that high. So if that's true, are there more clear-cut issues or wtfs out there?

-

One thing I absolutely wish is that NI would have a publicly facing issue tracker. For example, the jira for jira: https://jira.atlassian.com/projects/JRASERVER/issues/JRASERVER-67872?filter=allopenissues or the bugzilla for mozilla: https://bugzilla.mozilla.org/buglist.cgi?query_format=specific&order=relevance+desc&bug_status=__open__&product=Firefox&content=crash&comments=0

I've heard the NI argument against opening up older issues -- there may be internal or customer-specific information -- but I don't see that as an excuse moving forward. Or, another way, make an idea exchange for bugs. If something hasn't been fixed in 8 years, it means that people thought it was too hard to fix, or that not enough people are affected, or some other combination of factors. Being able to locate bugs and see the workaround, or press a button to say "this affects me too!", would make their decisions significantly less noisy, it seems to me.

Much of marketing's purpose in life is to take feedback from customers & sales, market research, business needs, etc. and make strong recommendations on how to capture more and more desirable customers. I think a more accurate statement would be that you think marketing is wrong...and as an engineer, the belief that "marketing is wrong" is kind of a given

-

You could try going back to the older .NET version: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z0000019LDcSAM

The GZipStream MSDN doc says:

"Starting with the .NET Framework 4.5, the DeflateStream [which ~= GZipStream] class uses the zlib library for compression. As a result, it provides a better compression algorithm and, in most cases, a smaller compressed file than it provides in earlier versions of the .NET Framework."

I'm assuming that a zip is a zip and the algorithm doesn't matter (any compliant reader should decompress either output), but maybe something else changed then as well.
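The "a zip is a zip" assumption can be illustrated with a minimal sketch. This uses Python's `gzip` module rather than .NET's GZipStream, so it is only an analogy: two different compressor settings may produce different bytes, yet both decompress to the same payload. The `payload` contents are made up for illustration.

```python
import gzip

# Hypothetical test payload; any bytes would do.
payload = b"LabVIEW " * 1000

# Same format, two different compressor settings.
fast = gzip.compress(payload, compresslevel=1)
small = gzip.compress(payload, compresslevel=9)

# The compressed sizes (and bytes) can differ between settings...
print(len(fast), len(small))

# ...but a compliant reader gets the same data back from either one.
assert gzip.decompress(fast) == payload
assert gzip.decompress(small) == payload
```

If a reader chokes on output from the newer framework, that would point at something other than the compression algorithm itself, such as header or stream-framing differences.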

-

There's also the argument that you should just get rid of any error wire that has no immediate purpose. So...open file? Wire it up. Write to file? Wire it up. Close file? Why do I care if close file has an error? Nothing hurts my soul more than someone making a math function that has an error in, a pass-through to error out, and a case structure around the math. Whyyyyyyy?

-

I sometimes get something like B when I start with A and then I ctrl+drag up in the wrong place :/ Personally I only ever get A, because I use block diagram cleanup, because life is too short. People have a semi-constant level of irritation with me and my code as a result, but

-

NI Modbus API on GitHub

smithd replied to Porter's topic in Remote Control, Monitoring and the Internet

Well to be fair, there are a lot of variants: https://en.wikipedia.org/wiki/Cyclic_redundancy_check#Polynomial_representations_of_cyclic_redundancy_checks

The comment was intended more in the sense of "I can't be assed to figure out which variant modbus uses when I have a functional implementation right over here"
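For reference, the variant that Modbus RTU uses is the one usually catalogued as CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF, no final XOR, sent low byte first. Below is a minimal bitwise sketch in Python; it is not the code from either library discussed in this thread, and the function names are made up for illustration.

```python
def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def append_crc(frame: bytes) -> bytes:
    """Modbus RTU transmits the CRC low byte first."""
    return frame + crc16_modbus(frame).to_bytes(2, "little")


# Read one holding register from slave 1 (illustrative request).
request = bytes([0x01, 0x03, 0x00, 0x00, 0x00, 0x01])
framed = append_crc(request)

# Running the CRC over a frame that carries a valid CRC leaves a zero remainder.
assert crc16_modbus(framed) == 0
```

A table-driven version is faster, but the bitwise loop makes the parameters that distinguish one CRC-16 variant from another easy to see.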

NI Modbus API on GitHub

smithd replied to Porter's topic in Remote Control, Monitoring and the Internet

yay! please don't look inside, some of the code is terrible

In all seriousness, I think a lot of the class stuff was overkill for what the code needed to do, but most of it doesn't cause a problem. The biggest issue is the (positive) feedback I posted on yours -- I did the serial stuff wrong, in my opinion, by trying to follow the spec rather than doing it the more pragmatic way (looking at the function code and parsing out the length manually). A series of leaky abstractions all the way from linux-rt up through visa and into the serial layer in that library led to a very hacky workaround on the serial side, and the workaround could have been avoided if I had been more pragmatic at the time. It does work, but it's definitely less than ideal.

The significant area where your version and this version differ is your attempt to make the object thread safe which...doesn't hurt, but I'm still not sold on. Maybe it's just how I write applications, but I can't think of any situation where I'd want to share the port between loops. I thought I had a use not too long ago, but since the port is multidrop anyway I just serialized everything.
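The "pragmatic way" mentioned above (look at the function code and work out the length yourself, instead of relying on inter-character timeouts) might be sketched like this. It is an illustrative Python sketch, not code from either library; `expected_response_length` is a hypothetical helper, and it only covers the common read/write function codes.

```python
from typing import Optional

HEADER_LEN = 2  # slave address + function code
CRC_LEN = 2


def expected_response_length(partial: bytes) -> Optional[int]:
    """Total RTU response length in bytes, or None if more bytes are needed first."""
    if len(partial) < HEADER_LEN:
        return None
    function = partial[1]
    if function & 0x80:
        # Exception response: address, function, exception code, CRC.
        return HEADER_LEN + 1 + CRC_LEN
    if function in (1, 2, 3, 4):
        # Read responses carry a byte count right after the function code.
        if len(partial) < 3:
            return None
        return HEADER_LEN + 1 + partial[2] + CRC_LEN
    if function in (5, 6, 15, 16):
        # Write responses echo a 2-byte address plus a 2-byte value or quantity.
        return HEADER_LEN + 4 + CRC_LEN
    raise ValueError(f"function code {function} not handled in this sketch")


# A read-holding-registers response announcing 4 data bytes is 9 bytes long.
assert expected_response_length(bytes([0x01, 0x03, 0x04])) == 9
```

A serial reader can then request exactly the remaining bytes, which sidesteps timing behaviour in the layers underneath.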

If I understand you correctly, you want to name the field in your cluster <JSON>field2. That is, you start with a cluster on your block diagram:

{field1:dbl=1.0,<JSON>field2:string="{"item1":"ss", "item2":"dd"}"}

When you call flatten to json you get:

" {"field1": 1,"field2": { "item1":"ss", "item2":"dd"}} "

because the library automatically pulls off the <JSON> prefix and interprets that whole string as JSON. When you unflatten the reverse happens,
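A rough Python analogy of the prefix behaviour described above, as a sketch only: the real library is a LabVIEW one, and `flatten`, `unflatten`, and the `template` argument here are hypothetical stand-ins. A field whose name starts with `<JSON>` holds a string that is spliced in as raw JSON, and unflattening turns that sub-tree back into a string.

```python
import json

RAW_PREFIX = "<JSON>"


def flatten(cluster: dict) -> str:
    """Serialize a dict, embedding '<JSON>'-prefixed string fields as raw JSON."""
    out = {}
    for name, value in cluster.items():
        if name.startswith(RAW_PREFIX):
            out[name[len(RAW_PREFIX):]] = json.loads(value)
        else:
            out[name] = value
    return json.dumps(out)


def unflatten(text: str, template: dict) -> dict:
    """Reverse of flatten; the template supplies the field names (prefix included)."""
    parsed = json.loads(text)
    out = {}
    for name in template:
        if name.startswith(RAW_PREFIX):
            out[name] = json.dumps(parsed[name[len(RAW_PREFIX):]])
        else:
            out[name] = parsed[name]
    return out


cluster = {"field1": 1.0, "<JSON>field2": '{"item1":"ss", "item2":"dd"}'}
text = flatten(cluster)
restored = unflatten(text, cluster)
```

The template plays the role of the cluster type wired into the unflatten call: it tells the parser which fields should come back as JSON strings rather than as parsed values.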

-

I'd suggest:

- Trying it with a VI that just runs a loop with the i terminal going to an indicator
- OR: Set the subpanel instance to allow you to open the block diagram (right click option) and then open the VI at runtime, verifying that it's actually executing correctly -- maybe it got stuck and that's why everything is unresponsive
- Checking to see if the variant to data function is producing an error or warning. I seem to recall LabVIEW not liking to convert strict VIs into generic VIs
- It looks like you are closing the VI front panel? Sometimes that can cause a VI to stop as well

-

I don't understand why these need a tree structure? Just for groupings and the like? In any case, sounds like TDMS could do the job, and it even has an in-memory version: http://zone.ni.com/reference/en-XX/help/371361M-01/glang/tdms_inmem_open/

You can also use sqlite in-memory, although sadly it has no array support.

For the configuration use case, the LAVA JSON library, as I recall, unflattens JSON into an object tree.
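As a sketch of the in-memory sqlite option, here is what a grouped (tree-like) layout could look like using Python's built-in `sqlite3` module. The table layout, column names, and values are all hypothetical; the array is stored as JSON text precisely because sqlite has no array type.

```python
import json
import sqlite3

# ":memory:" keeps the whole database in RAM; nothing touches disk.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE items ("
    " id INTEGER PRIMARY KEY,"
    " parent_id INTEGER REFERENCES items(id),"
    " name TEXT NOT NULL,"
    " value TEXT)"
)

rows = [
    (1, None, "config", None),        # root group
    (2, 1, "daq", None),              # sub-group
    (3, 2, "rate_hz", "1000"),
    (4, 2, "channels", "[0, 1, 2]"),  # no array type, so stored as JSON text
]
conn.executemany("INSERT INTO items VALUES (?, ?, ?, ?)", rows)


def children(parent_id: int) -> list:
    """Return (name, value) pairs directly under one group."""
    cur = conn.execute(
        "SELECT name, value FROM items WHERE parent_id = ? ORDER BY id",
        (parent_id,),
    )
    return cur.fetchall()


daq_settings = dict(children(2))
channels = json.loads(daq_settings["channels"])
```

A parent-id column is one simple way to get grouping; whether it beats TDMS groups/channels depends on how deep the tree really needs to be.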

-

Moxa's units look quite nice. I usually go with EtherCAT because it's so dead simple, but their Ethernet modules (ioLogik) come close to swaying me.

To add to the list, Beckhoff is best known for EtherCAT but has bus couplers for Profibus, serial, CANopen, DeviceNet, EtherNet/IP, and Modbus TCP. I also quite like their I/O physical design.

Along the lines of hooovahh's cheaper unit, you might look at these guys (Raspberry Pi): https://www.unipi.technology


-

Do you have performance requirements (acquisition rate, throughput, latency, buffer size, etc.)? For example you say "distributed" "automation" tasks, which to me says 10-100 Hz, single point measurements, continuously, forever. So for this I would probably look at an Ethernet RIO 9147 or EtherCAT (what you linked)... But you are using cDAQ, which is not really an industrial automation device, so I'm confused about what you want.

You may also want to add a budget, since for example a cDAQ unit is like $1200, which in the US is like a day of an experienced engineer's time...in a lot of cases just evaluating other options would be more expensive. You also have to consider the code quality reduction associated with now using the NI DAQ driver and some other driver in your application, and whether that is worth some few hundred dollars.


-

FPGA compilation is basically a simulation, so you can get the clock rate from that and use cycle-accurate simulation of the FFT core to determine throughput performance.

So if the calculation can be buffered, I think we all collectively want to know what your control requirement is. From detecting a 'bad' value on your camera, how long do you have to respond? If you have a 100 usec latency budget, it doesn't matter that the GPU can do the processing in 4 usec -- the data might not even get there in time. However, an FPGA card with the camera acquisition and processing happening on the same device makes 100 usec feasible, using multiple FFT cores to increase throughput. You even get to cheat, since you read each pixel as it's acquired from the sensor rather than waiting for the entire acquisition to be complete and buffered as you would with a normal CPU driver.

On the other hand, if you have a 10 ms latency budget then the CPU+GPU implementation will probably be much much much simpler to implement for the same throughput.

-

Re GPU: 2048 16-bit ints is 4096 bytes, which per 4 usec is 1,024,000,000 bytes/sec or about 976 MB/s. Except it's both directions, so actually ~2 GB/s. If you're using a Haswell for example (PCIe v3), that's 3 lanes already...without giving your GPU any processing time. An x16 card would leave you about 3.5 usec for processing, assuming the CUDA interface itself has no overhead.

As mentioned above it also depends on the rest of your budget -- what's the cycle time, how much time are you allocating for image capture itself, and what do you need to do with that FFT (if greater than x write boolean out? send a message? etc.)?
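The arithmetic above can be reproduced in a few lines of Python. The per-lane figure is an assumption not stated in the post: roughly 985 MB/s of usable bandwidth per PCIe 3.0 lane (8 GT/s with 128b/130b encoding), with no protocol or driver overhead counted.

```python
import math

SAMPLES = 2048
BYTES_PER_SAMPLE = 2
BUDGET_S = 4e-6
# Assumption: ~985 MB/s usable per PCIe 3.0 lane, ignoring protocol overhead.
PCIE3_LANE_BYTES_PER_S = 984.6e6

line_bytes = SAMPLES * BYTES_PER_SAMPLE      # bytes in one line of data
one_way_rate = line_bytes / BUDGET_S         # bytes/s to move one line in 4 usec
round_trip_rate = 2 * one_way_rate           # data goes to the GPU and comes back

# Lanes needed if the whole budget were spent on transfers alone.
lanes_needed = math.ceil(round_trip_rate / PCIE3_LANE_BYTES_PER_S)

# With 16 lanes, time spent on the two transfers and what is left for processing.
x16_transfer_s = 2 * line_bytes / (16 * PCIE3_LANE_BYTES_PER_S)
time_left_us = (BUDGET_S - x16_transfer_s) * 1e6

print(f"{one_way_rate / 2**20:.1f} MiB/s one way, {lanes_needed} lanes, "
      f"{time_left_us:.2f} usec left on x16")
```

Real transfers also pay per-transaction latency, so this is a best-case bound rather than a prediction.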

-

Yeah, that pretty wildly depends on where that 4 usec requirement comes from and what you want to do with the result. It seems like an oddly specific number.

In any case, with sufficient memory on the FPGA I believe you can do a line FFT in a single pass (although I can't remember for sure), although it's several operations so you'd have to do a lot of parallelization, clock rate fiddling, etc. -- at the default 40 MHz clock, 4 usec is just 160 cycles. Your best bet would probably be to look at the Xilinx core (http://zone.ni.com/reference/en-XX/help/371599N-01/lvfpgahelp/fpga_xilinxip_descriptions/) and see how much data you can feed it.
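To make the cycle budget concrete, here is a small Python sketch. It assumes the 40 MHz default LabVIEW FPGA clock and, as a further assumption carried over from elsewhere in the thread, a 2048-point line; the faster clock rates are arbitrary examples of "clock rate fiddling".

```python
BUDGET_S = 4e-6
LINE_POINTS = 2048  # assumption: one 2048-sample line per FFT


def cycles_in_budget(clock_hz: float, budget_s: float = BUDGET_S) -> int:
    """Whole clock cycles available within the time budget."""
    return round(clock_hz * budget_s)


for clock_mhz in (40, 80, 200):
    cycles = cycles_in_budget(clock_mhz * 1e6)
    # Samples that must be consumed per cycle just to stream the line in.
    samples_per_cycle = LINE_POINTS / cycles
    print(f"{clock_mhz} MHz: {cycles} cycles, "
          f"{samples_per_cycle:.1f} samples/cycle")
```

At 40 MHz that is 12.8 samples per cycle just to get the data in, which is why parallel cores or a faster clock domain would be needed.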

-

A very possible answer is: ghosting

However, there are a lot of unanswered questions in your post. For example:

- Do you have Mod2 physically wired to Mod3, or do you have a signal generator hooked up to mod3?
- What's passed on the "Input_FPGA_Cluster"...which is actually the output data? Is it constant? Changing?
- When you scale it by 15, what is the value? Can it be represented by that fixed point type?
- How is the input oscillating? By how much? How completely incorrect is it?
- What are your AI voltage ranges set to? Are they differential or single ended?

My suggestion?

- Wire AO0 to AI0 if you haven't, and be sure to connect Mod2/com to mod3/com. Com should just be system ground, but it can't hurt to be sure.
- Set AI0 to +/-10V range and referenced single ended
- Wire aisense to com (shouldn't be important in RSE mode but let's be sure)
- Drop down mod2/ao0 and wire up a fixed point control and put a constant of 5.0V in the control
- Drop down mod3/ai1 and wire that to an indicator
- Press run

If that works (you read 5V +/- a few mV out), that proves the module works right and you can expand.

Suggestion 2 would be to set AI0 to +5 and AI1 to -5 and see what kind of ghosting appears between channels once you start using the multiplexer.

-

I feel like you are conflating OOP with "correct". OOP is a way to accomplish tasks, but it is not the only good way. More to the point, if you don't know much about how to implement it, it seems to be a better plan to code the way you know as a starting point, while simultaneously trying to learn from other example applications what problems OOP solves. Then if you reach one of those problems in your code, you can just refactor the code enough to solve the problem. The alternative, deciding "I want to use OOP" and then building your code, can be successful, but it can just as easily end up in a big mess.

You also appear to consider a queued message handler and OOP as mutually exclusive, which they are not. Actor Framework is a queued message handler solution with the message handling components implemented by OOP, but there is nothing stopping you from using a more traditional string-based approach with messages handled by an object, or somewhere in between a la http://sine.ni.com/nips/cds/view/p/lang/en/nid/213091 . In fact, outside of a library like Actor Framework where the object includes not only your code but also the control flow, you will still always need some sort of control flow structure to give activity to the object. I believe this is what Delacor's QMH does, although it's been a while and I may be mis-remembering, and if you watch Mikael's videos you'll see the same.

If you want to learn Actor Framework, a good go-to is this: https://forums.ni.com/t5/LabVIEW-Development-Best/Measurement-Abstraction-Plugin-Framework-with-Optional-TestStand/ta-p/3531389 which also has various blog posts from Eli about how he built it: https://labviewguru.com/2013/03/28/designing-a-labview-measurement-system-with-multiple-abstraction-layers/

-

Maybe by 2020 they'll add some nxg-style slide-out palette animations

-

They're trying to ease you into nxg

To give a real answer: I didn't notice anything different in 2018, but my main version is 2017.1

-

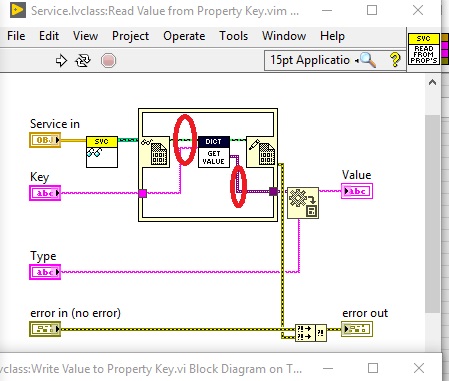

As a just for fun test, I'd suggest maybe adding an always copy dot to the class and variant wires here: This will probably do nothing, but the always copy dot is a magical dot with magical bug-fixing powers, so who knows. You could also pull the variant-to-data function inside of the IPE structure. Fiddling around with that stuff may trick labview into compiling it differently and help narrow down whats going on...and it takes 5 minutes to test. As to your question about it being RT specific...I've never heard of such a thing, but have you tried your simple counter module in a windows exe? My only other suggestion is to instrument the crap out of your code with this fine VI which should output to the web console. Basically just use flatten to XML (it handles classes) or flatten to string (variant attributes) on everything you can find in that set of functions -- the server class, the dvr, the dictionary class, the variant you pull out, etc. This will give you a debug mechanism without connecting the debugger, and I'd bet you find at least one of the things going wrong pretty quickly.

-

I see that they made the same choice NI did on that as well...limited to "+Infinity" and "-Infinity" -- it would be nice if it were more accepting (e.g. "Inf", "-Inf", "+infinity")...same thing with booleans. If I type something manually I always forget if it's "true" or "True", and I often forget that it matters. Probably silly to do so, but I eventually just edited jsontext in a branch to support the different cases.

Of course I admit you do eventually reach YAML-level parsing difficulties:

y|Y|yes|Yes|YES|n|N|no|No|NO
|true|True|TRUE|false|False|FALSE
|on|On|ON|off|Off|OFF
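As an illustration of what "more accepting" could mean, here is a small Python sketch. It is not the jsontext change mentioned above; `parse_bool` and `parse_number` are hypothetical helpers, and matching case-insensitively accepts slightly more than the YAML list (for example "tRuE").

```python
import math

_TRUE = {"true", "yes", "y", "on"}
_FALSE = {"false", "no", "n", "off"}


def parse_bool(text: str) -> bool:
    """Accept true/false style words regardless of letter case."""
    key = text.strip().lower()
    if key in _TRUE:
        return True
    if key in _FALSE:
        return False
    raise ValueError(f"not a recognizable boolean: {text!r}")


def parse_number(text: str) -> float:
    """Python's float() already accepts 'Inf', '-inf', '+Infinity', 'nan', etc."""
    return float(text.strip())


assert parse_bool("True") is True
assert parse_bool("OFF") is False
assert parse_number("-Inf") == -math.inf
assert parse_number("+infinity") == math.inf
```

Being liberal only on the parsing side keeps the written output strict, so files produced by the library stay readable by stricter parsers.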

-

Dunno if this came up elsewhere, but I just stumbled across this: https://json5.org

It's a "standard" only so far as "some person put something on github", but it might be nice to adjust the parser half* to accept it, if that fits your use cases -- the changes seem pretty simple and logical. I do hand-write some of my config files, but most of the time I just write a "make me some configs.vi" function and leave it at that. Just thought I'd share.

*i.e. "be conservative in what you do, be liberal in what you accept from others"

-

DVR Read and Write Malleable VIs - Simplify Your Block Diagram

smithd replied to the_mitten's topic in Code In-Development

DVRs are dynamically created