

Everything posted by Neil Pate


1. What type of source control software are you using?
   I currently use Git for my LabVIEW work, with my repos hosted on github.com.
2. Do you love it, or hate it?
   Love it. Sure, there is a learning curve, but anything worth learning takes a bit of time.
3. Are you forced to use this source control because it's the method used in your company, when you would rather use something else?
   My choice. I have gone down the path of Nothing --> Zips --> SVN --> Mercurial --> Git and am happy with Git. I still have heaps to learn (even coming from a pretty high level of competency with Mercurial), but I am treating this as an opportunity to get better.
4. Pros and cons of the source control you are using?
   Pro: Lots of resources out there to help me when I screw up. Git is ubiquitous now. I love the GitKraken client!
   Con: Some things still confuse me a bit, especially how local and remote can have different branches.
5. Just how often does your source control software screw up and cause you major pain?
   I don't think I have ever actually lost work or been caused major pain through my misuse of source code control. Certainly I have made mistakes, but I have always managed to recover things. By contrast, I still sometimes lose stuff when I am careless with documents that are not yet under any kind of control.

In a nutshell, I could not live without source code control as a regular part of my daily workflow.


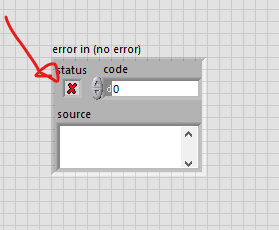

Having the error Status displayed as a tick or a cross, while still being represented by a boolean underneath. After 17 years of LabVIEW development I still make mistakes when using the error Status boolean and forget that True means "Error Present".
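To make the suggestion concrete, here is a minimal Python sketch of the idea: the value stays a plain boolean (True = "Error Present", as in LabVIEW), and only the presentation maps it to a tick or a cross. The `ErrorCluster` class and its `indicator` method are hypothetical stand-ins for illustration, not any NI API.

```python
from dataclasses import dataclass


@dataclass
class ErrorCluster:
    """Hypothetical Python stand-in for LabVIEW's error cluster (status, code, source)."""

    status: bool = False  # LabVIEW convention: True means "Error Present"
    code: int = 0
    source: str = ""

    def indicator(self) -> str:
        # Presentation only: a cross (U+2717) for an error, a tick (U+2713) for no error.
        # The underlying value is still the plain boolean above.
        return "\u2717" if self.status else "\u2713"
```

For example, `ErrorCluster().indicator()` gives a tick, while `ErrorCluster(status=True, code=1, source="demo").indicator()` gives a cross, so nobody has to remember which way round the boolean goes.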


So again, I am definitely not an expert, but my understanding is that a virtual environment is like a small sandboxed instance where you can install packages and generally work without affecting any other Python code on the system. I guess it's a bit like NuGet. If you don't use a virtual environment, installing packages affects the global Python installation on your machine. Seems like pretty sensible stuff (and hopefully what Project Dragon will do for LabVIEW). However, I have not been able to get the native Python node to work with a virtual environment.
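The sandboxing described above can be seen with Python's standard-library `venv` module. This is a minimal sketch; the helper names `create_env` and `running_in_venv` are made up for illustration. The key point is that each environment gets its own interpreter, and inside it `sys.prefix` differs from `sys.base_prefix` (the global installation). Nothing here is claimed about how the LabVIEW Python node selects an interpreter.

```python
import subprocess
import sys
import tempfile
import venv
from pathlib import Path


def create_env(env_dir: Path) -> Path:
    """Create an isolated environment and return the path to its own interpreter."""
    # with_pip=False keeps the sketch fast; with_pip=True would also bootstrap pip,
    # so packages could then be installed into this environment only.
    venv.create(env_dir, symlinks=(sys.platform != "win32"), with_pip=False)
    if sys.platform == "win32":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def running_in_venv() -> bool:
    # Inside a virtual environment sys.prefix points at the environment,
    # while sys.base_prefix still points at the global installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        python = create_env(Path(tmp) / "demo-env")
        check = "import sys; print(sys.prefix != sys.base_prefix)"
        result = subprocess.run([str(python), "-c", check],
                                capture_output=True, text=True, check=True)
        print("this process in a venv:", running_in_venv())
        print("child interpreter in a venv:", result.stdout.strip())  # True
```

Any tool that needs to run code "in" the environment simply has to launch that environment's interpreter rather than the global one.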


Nice question, James! I have tried to go down this route myself and had a very similar journey. In the end I decided to stick with VS Code for Python dev work, as it seems to be gaining so much traction and is improving at a great rate. None of the Python connectors I tried worked very well. I would prefer to use the native one, but it does not seem to support virtual environments, which seem to be the preferred way of doing things in Python. In the end I rolled a simple TCP/IP client/server solution and was planning on using that to get data to/from Python. But I have no real experience with this, so I am going to shut up now for the rest of this thread and read everyone else's answers with interest.
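A simple TCP/IP approach like the one mentioned above could look roughly like this on the Python side. This is a sketch under stated assumptions: the framing (a 4-byte big-endian length header followed by a UTF-8 JSON payload) and the `MeanHandler` service are hypothetical choices for illustration, not a protocol from the post. A fixed-size header suits a LabVIEW client because it can read 4 bytes first and then read exactly the number of bytes announced.

```python
import json
import socket
import socketserver
import struct
import threading

# Assumed framing: 4-byte big-endian payload length, then UTF-8 JSON.
HEADER = struct.Struct(">I")


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; TCP is a stream, so a single recv() may return less."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return bytes(buf)


def send_msg(sock: socket.socket, obj) -> None:
    payload = json.dumps(obj).encode("utf-8")
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_msg(sock: socket.socket):
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return json.loads(recv_exact(sock, length).decode("utf-8"))


class MeanHandler(socketserver.BaseRequestHandler):
    """Hypothetical Python-side service: replies with the mean of the numbers sent."""

    def handle(self) -> None:
        while True:
            try:
                request = recv_msg(self.request)
            except ConnectionError:
                return  # client disconnected
            data = request.get("data", [])
            send_msg(self.request, {"mean": sum(data) / len(data) if data else None})


def start_server(host: str = "127.0.0.1", port: int = 0) -> socketserver.ThreadingTCPServer:
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = socketserver.ThreadingTCPServer((host, port), MeanHandler)
    server.daemon_threads = True
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_server()
    with socket.create_connection(server.server_address) as client:
        send_msg(client, {"data": [1, 2, 3, 4]})
        print(recv_msg(client))  # {'mean': 2.5}
    server.shutdown()
    server.server_close()
```

The Python client in the `__main__` block stands in for the LabVIEW side, which would do the same thing with TCP Open Connection, TCP Write and two TCP Reads (header, then payload).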


LabVIEW programmers competition 2021 + points for CLD-R/CLA-R

Neil Pate replied to Artem.Sh's topic in LAVA Lounge

This sounds nice, thank you for organising it! I think the points reward for participating needs to be higher, though, as currently you can get 5 points for attending a user group. I would expect getting a working solution to take quite a bit of time.

I have never really trusted breakpoints on wires like this, especially going to indicators. I prefer structures/primitives/VIs. I suspect if the breakpoint was on the outside of the top while loop it would break correctly. Still seems like a bug though.


I had no idea there were so many out there! http://blog.interfacevision.com/design/design-visual-progarmming-languages-snapshots/


Check this out: https://fabiensanglard.net/gebb/index.html. I just wish I had the time (and the brainpower!) to do a deep dive.


I too have a sprinkling of Win32 calls I have wrapped up over the space of many years. Most are pretty simple helper stuff like bringing a window to the front or printing or getting/setting current directory etc. No rocket science here.


Obviously I don't know what I am talking about, but could this in the future be used to simplify configuring Win32 API DLL calls in LabVIEW? https://blogs.windows.com/windowsdeveloper/2021/01/21/making-win32-apis-more-accessible-to-more-languages/


Log File Structure

Neil Pate replied to rharmon@sandia.gov's topic in Application Design & Architecture

I totally get you wanting to display newer entries first, but surely this is just a presentation issue and should not be solved at the file level? I really think you are going against the stream by wanting newer entries first in the actual file on disk. Appending a bit of text to the end of a file is almost free, but constantly rewriting it to prepend seems like a lot of trouble to go to. Rotating the log file is a good idea regardless, though. Notepad++ has a "watch" feature that auto-reloads the open file. It is not without its warts, though, as I think the Notepad++ window needs focus.
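The "append on disk, reverse for display" split argued for above can be sketched in Python with the standard `logging` module. This is an illustration only (the original thread is about LabVIEW, and `make_logger` / `newest_first` are made-up helper names): writes are cheap appends, `RotatingFileHandler` handles rotation, and the newest-first order exists only in the viewer.

```python
import logging
import tempfile
from collections import deque
from logging.handlers import RotatingFileHandler
from pathlib import Path


def make_logger(path: Path, max_bytes: int = 1_000_000, backups: int = 5) -> logging.Logger:
    """Append-only file logger that rolls over to path.1, path.2, ... past max_bytes."""
    logger = logging.getLogger(f"demo.{path}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep records out of the root logger
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backups,
                                  encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


def newest_first(path: Path, n: int = 50) -> list[str]:
    """Presentation only: keep the last n lines of the oldest-first file and
    reverse them for display. The file on disk is never rewritten."""
    with path.open(encoding="utf-8") as f:
        tail = deque(f, maxlen=n)
    return [line.rstrip("\n") for line in reversed(tail)]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        log_path = Path(tmp) / "app.log"
        log = make_logger(log_path)
        for i in range(3):
            log.info("entry %d", i)
        for line in newest_first(log_path):
            print(line)  # "entry 2" is printed first, "entry 0" last
        for h in list(log.handlers):
            h.close()
            log.removeHandler(h)
```

Because rotation caps the file size, reading the tail for display stays cheap no matter how long the application runs.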

Git delete branch after merging Pull Request

Neil Pate replied to Neil Pate's topic in Source Code Control

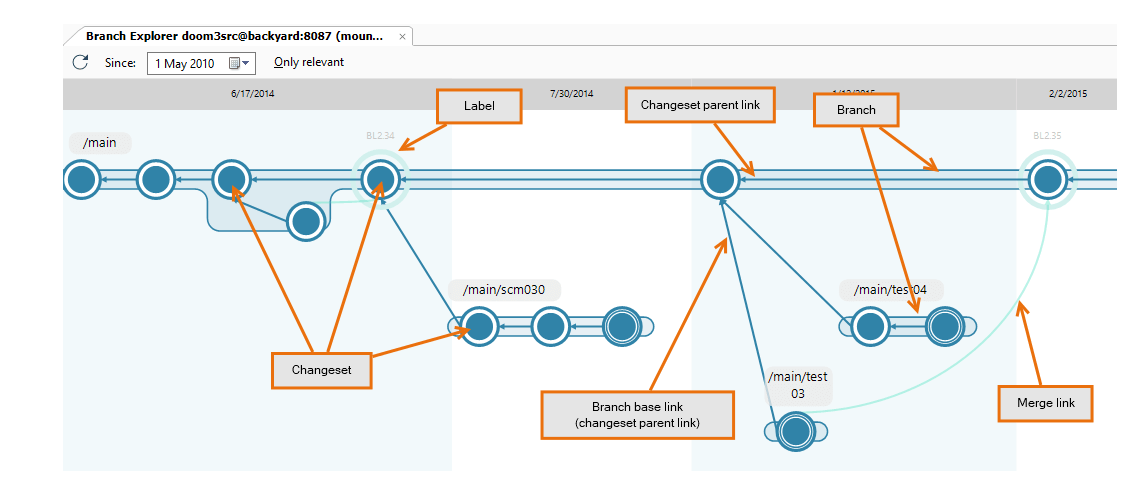

Tangentially, I use Plastic SCM at work (Unity/C# dev), and it really hits the sweet spot of an easy-to-use but powerful DVCS. I believe it is modelled on Git but designed to handle many branches trivially.

Git delete branch after merging Pull Request

Neil Pate replied to Neil Pate's topic in Source Code Control

OK, so deleting the branch on the remote only stops it being used in future; it still exists in the history and can be visualised? Sorry, I misunderstood and thought that Git did magic to actually remove the branch from the past (which would be a bad thing). I know about rebase but have never thought to use it.

Git delete branch after merging Pull Request

Neil Pate replied to Neil Pate's topic in Source Code Control

I still don't really get this. I want to see the branches when I look at the past. If the branch on the remote is deleted, then I lose a bit of the story of how the code got to that state, don't I?

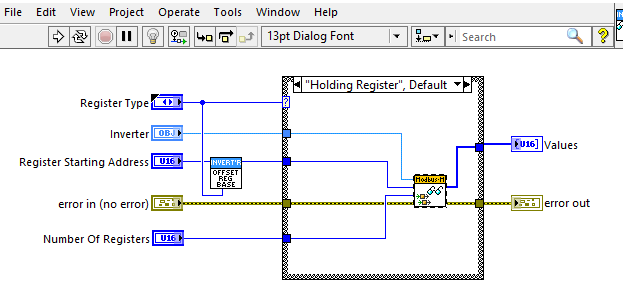

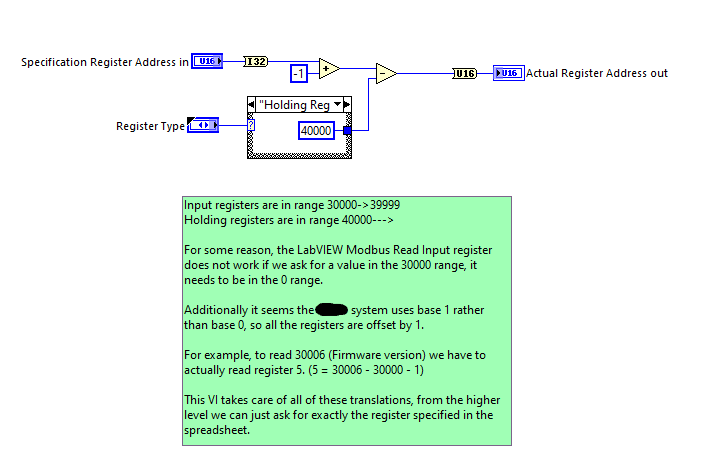

It's been a while, but when using the NI Modbus library I found there was some weirdness regarding the base of the addressing. This might just be my misunderstanding of Modbus, but, for example, to read a holding register at address 40001 I actually needed to use the Read Holding Register VI with an address of 1 (or 0). This snippet works fine for reading multiple registers, but see the VI on the left that I had to write to do the register address translation.
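The translation described above follows a common Modbus convention: "40001"-style numbers are documentation references, where the leading 4 selects the holding-register table and the remaining digits count from 1, while the request itself carries a zero-based address (0 to 65535). A minimal Python sketch of that mapping follows; `holding_register_address` is a hypothetical name, and whether a particular library or VI expects this zero-based value or the one-based offset (the "1 (or 0)" above) should be checked against its own documentation.

```python
def holding_register_address(reference: int) -> int:
    """Map a holding-register reference number to the zero-based address
    carried in the Modbus request.

    Reference numbers are a documentation convention: the leading 4 names the
    holding-register table and the remaining digits count from 1.
    """
    if 40001 <= reference <= 49999:      # 5-digit style, e.g. 40001
        return reference - 40001
    if 400001 <= reference <= 465536:    # 6-digit style, e.g. 400001
        return reference - 400001
    raise ValueError(f"{reference} is not a holding-register reference number")


if __name__ == "__main__":
    for ref in (40001, 40010, 400001):
        print(ref, "->", holding_register_address(ref))  # 0, 9, 0
```

If a library turns out to expect the one-based offset instead, the result only needs a `+ 1`; the important thing is to do the translation in exactly one place, as with the helper VI in the snippet.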


So I am pretty new to GitHub and pull requests (I am still not sure I 100% understand the concept of local and remote having totally different branches either!), but what is this all about? I have done a bit of digging, and it seems the current best practice is indeed to delete the branch when it is no longer needed. This is also a totally strange concept to me. I presume the branch they are talking about here is the remote branch? Confused...


NI: "Engineer Ambitiously" "Change some fonts and colours, that will shut up the filthy unwashed masses who are not Fortune 500 companies"